Nova

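- 1. Nova Compute Service

- 2. Nova System Architecture

- 3. Nova Component - API

- 4. Nova Component - Scheduler

- 4.1 Scheduler Types

- 4.2 Filter Scheduler

- 4.3 Weights

- 5. Nova Component - Compute

- 5.1 How Compute Works

- 6. Nova Component - Conductor

- 7. Nova Component - Placement API

- 8. VM Instantiation Flow

- 8.1 Console Interfaces

- 9. Cell Architecture

- 10. Deploying the Placement Component

- 11. Deploying Nova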

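1. Nova Compute Service

The Compute service is one of the core OpenStack services. It maintains and manages the compute resources of the cloud environment, and its project code name in OpenStack is Nova. Nova provides no virtualization capability of its own. It provides the compute service and talks to the supported underlying hypervisors (virtual machine managers) through different virtualization drivers. Nova schedules and manages the entire lifecycle of every compute instance. It relies on other services such as Keystone, Glance, Neutron, Cinder and Swift, and integrates with them to offer features such as encrypted disks and bare-metal compute instances.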

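2. Nova System Architecture

- DB: the SQL database used for data storage

- API: the Nova component that receives HTTP requests, converts them into commands, and communicates with other components through the message queue

- Scheduler: the Nova scheduler, which decides which compute node hosts an instance

- Network: the Nova network component, which manages IP forwarding, bridges and communication between virtual machines

- Compute: the Nova compute component, which manages communication between the hypervisor and the virtual machines

- Conductor: handles requests that need coordination (such as building or resizing a virtual machine) and performs object conversion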

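3. Nova Component - API

The API is the HTTP interface that clients use to access Nova. It is implemented by the nova-api service, which receives and responds to compute API calls from end users. nova-api is the main externally facing interface of OpenStack Compute and provides a central endpoint for all API queries. Every request to Nova is handled by nova-api first. The API follows REST conventions, which makes integration with third-party systems straightforward. End users usually do not send RESTful API requests by hand. They reach these APIs through the OpenStack command-line client, the Dashboard, and other components that need to interact with Nova. nova-api can respond to any operation related to the instance lifecycle.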

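nova-api processes each incoming HTTP API request as follows:

- Checks that the parameters passed by the client are valid

- Calls other Nova services to handle the client's HTTP request

- Formats the results returned by the other Nova sub-services and returns them to the client

nova-api is also the only way for external callers to access and use the services Nova provides. It is the intermediate layer between clients and Nova.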
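The three processing steps above can be sketched as a single request handler. This is a minimal, hypothetical Python sketch and not nova-api's real code. The function `handle_create_server`, the `backend` callable and the error payload shape are assumptions. Only the `server`/`name`/`imageRef`/`flavorRef` field names follow the Compute API's create-server request body.

```python
import json
from typing import Callable, Tuple


def handle_create_server(body: dict, backend: Callable[[str, str, str], str]) -> Tuple[int, str]:
    """Hypothetical handler mirroring nova-api's validate -> dispatch -> format steps."""
    server = body.get("server")
    # Step 1: check that the client's parameters are present and well formed.
    if not isinstance(server, dict):
        return 400, json.dumps({"error": "request body must contain a 'server' object"})
    for field in ("name", "imageRef", "flavorRef"):
        value = server.get(field)
        if not isinstance(value, str) or not value:
            return 400, json.dumps({"error": f"invalid or missing '{field}'"})
    # Step 2: hand the work to another service (a plain callable stands in for RPC).
    instance_id = backend(server["name"], server["imageRef"], server["flavorRef"])
    # Step 3: format the result for the client; 202 because creation is asynchronous.
    return 202, json.dumps({"server": {"id": instance_id}})


if __name__ == "__main__":
    status, payload = handle_create_server(
        {"server": {"name": "vm1", "imageRef": "cirros", "flavorRef": "1"}},
        backend=lambda name, image, flavor: "hypothetical-uuid",
    )
    print(status, payload)
```

The handler returns at the first invalid field, so the backend never sees a malformed request. That is the reason for doing validation in the API layer.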

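4. Nova Component - Scheduler

The scheduler is implemented by the nova-scheduler service. Its main job is to decide which compute node an instance is started on. It can apply many rules that weigh factors such as memory usage, CPU load and CPU architecture (Intel/AMD), and it uses an algorithm to determine which compute server the instance can run on. nova-scheduler receives an instance request from the message queue, reads the database, and picks the most suitable compute node from the available resource pool to create the instance on. When creating an instance, the user states the resources it needs, such as how much CPU, memory and disk. OpenStack defines these requirements in instance types (flavors), so the user only has to specify which instance type to use.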

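4.1 Scheduler Types

- Chance scheduler: picks a node at random from all nodes whose nova-compute service is running normally.

- Filter scheduler: selects the best compute node based on specified filter criteria and weights.

- Caching scheduler: a special kind of chance scheduler. On top of random scheduling, it caches host resource information in local memory, and a periodic background task refreshes that information from the database.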

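4.2 Filter Scheduler

- ComputeFilter: ensures that the scheduler only considers compute nodes whose nova-compute service is working normally. It is a required filter.

- ComputeCapabilitiesFilter: filters compute nodes by their capabilities.

- ImagePropertiesFilter: filters compute nodes by the properties of the selected image. The properties are specified through image metadata.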
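Chaining filters can be illustrated with a simplified Python sketch. The `HostState` fields, the request-spec dictionary keys and the image property name `hw_architecture` are assumptions made for the example. Nova's real filter classes operate on richer host-state and request-spec objects. Each function below only mimics what the corresponding filter is described as doing above.

```python
from dataclasses import dataclass, field


@dataclass
class HostState:
    """Simplified view of a compute node as the scheduler sees it."""
    name: str
    service_up: bool
    service_enabled: bool
    arch: str                                   # e.g. "x86_64"
    capabilities: dict = field(default_factory=dict)


def compute_filter(host: HostState, spec: dict) -> bool:
    # Only nodes whose nova-compute service is up and enabled pass.
    return host.service_up and host.service_enabled


def compute_capabilities_filter(host: HostState, spec: dict) -> bool:
    # Every capability the flavor asks for must match the host's value.
    wanted = spec.get("flavor_extra_specs", {})
    return all(host.capabilities.get(key) == value for key, value in wanted.items())


def image_properties_filter(host: HostState, spec: dict) -> bool:
    # If the image declares an architecture, the host must provide it.
    wanted_arch = spec.get("image_props", {}).get("hw_architecture")
    return wanted_arch is None or wanted_arch == host.arch


FILTERS = [compute_filter, compute_capabilities_filter, image_properties_filter]


def filter_hosts(hosts: list, spec: dict, filters: list = FILTERS) -> list:
    # Filters run in sequence; each one only sees the survivors of the previous one.
    for host_filter in filters:
        hosts = [h for h in hosts if host_filter(h, spec)]
    return hosts
```

Each filter works on a shrinking list. An expensive filter placed late in the chain therefore runs on fewer hosts.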

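4.3 Weights

nova-scheduler can pass hosts through several filters in sequence. The nodes that survive are then ranked by weight to choose where the instance is deployed. All weighers live in the nova/scheduler/weights directory.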
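After filtering, each weigher scores the surviving hosts. The scores are normalized, multiplied by a per-weigher multiplier and summed, and the sums decide the ranking. The Python sketch below assumes this scheme, with each weigher's raw values normalized to the 0..1 range. The host dictionaries and the use of free RAM and free vCPUs as raw values are illustrative assumptions.

```python
def normalize(values: list) -> list:
    """Scale raw values into [0, 1]; identical values all map to 0."""
    if not values:
        return []
    low, high = min(values), max(values)
    if high == low:
        return [0.0] * len(values)
    return [(v - low) / (high - low) for v in values]


def weigh_hosts(hosts: list, weighers: list) -> list:
    """hosts: list of dicts; weighers: list of (raw_value_function, multiplier) pairs."""
    totals = [0.0] * len(hosts)
    for raw_value, multiplier in weighers:
        for i, score in enumerate(normalize([raw_value(h) for h in hosts])):
            totals[i] += multiplier * score
    # Highest combined weight first; ties keep their original order.
    order = sorted(range(len(hosts)), key=lambda i: totals[i], reverse=True)
    return [hosts[i] for i in order]


if __name__ == "__main__":
    hosts = [{"name": "ct1", "free_ram_mb": 2048}, {"name": "ct2", "free_ram_mb": 8192}]
    print([h["name"] for h in weigh_hosts(hosts, [(lambda h: h["free_ram_mb"], 1.0)])])
```

A positive multiplier on free RAM puts the host with the most free memory first, which spreads instances across hosts. A negative multiplier reverses the order, which stacks them. Nova exposes this kind of knob, for example `ram_weight_multiplier` in the `[filter_scheduler]` section of nova.conf.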

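5. Nova Component - Compute

nova-compute runs on compute nodes and manages the instances on that node. Each host typically runs one nova-compute service, and the scheduling algorithm decides which available host an instance is deployed on. Every OpenStack operation on an instance is ultimately carried out by nova-compute. Its work falls into two categories: reporting the state of the compute node to OpenStack, and managing the instance lifecycle.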

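5.1 How Compute Works

- Periodically reporting the state of the compute node to OpenStack

At regular intervals, nova-compute reports the current resource usage of the compute node and the state of the nova-compute service. It obtains this information through the hypervisor driver.

- Managing the instance lifecycle

The main OpenStack operations on instances are all carried out by nova-compute:

create, shut down, reboot, suspend, resume, terminate, resize, migrate, snapshot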
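The periodic reports are what let Nova decide whether a compute service is "up". Below is a minimal Python sketch of that check. It assumes a 60-second threshold, matching the commonly documented default of the `service_down_time` option; how often nova-compute reports is controlled separately by `report_interval`. The function name is hypothetical.

```python
from datetime import datetime, timedelta, timezone

# Assumed threshold in seconds, mirroring the service_down_time option.
SERVICE_DOWN_TIME = 60


def service_state(last_heartbeat: datetime, now: datetime, down_time: int = SERVICE_DOWN_TIME) -> str:
    """Return "up" if the last report is recent enough, otherwise "down"."""
    elapsed = now - last_heartbeat
    return "up" if abs(elapsed) <= timedelta(seconds=down_time) else "down"


if __name__ == "__main__":
    # Example timestamp in the format of the "Updated At" column of `openstack compute service list`.
    last = datetime(2021, 8, 26, 8, 57, 41, tzinfo=timezone.utc)
    print(service_state(last, last + timedelta(seconds=10)))   # a recent report
    print(service_state(last, last + timedelta(seconds=90)))   # several reports missed
```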

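6. Nova Component - Conductor

The conductor is implemented by the nova-conductor module and adds a layer of protection in front of database access. nova-conductor acts as the intermediary between the nova-compute service and the database, so nova-compute never connects to the database directly. All of nova-compute's database operations are delegated to nova-conductor, which serves as their proxy. nova-conductor is deployed on the controller node. It can also improve database access performance, because nova-compute can use multiple threads to reach nova-conductor through remote procedure calls (RPC). In a large OpenStack deployment, administrators can add more nova-conductor instances to absorb the growing database traffic from an increasing number of compute nodes.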

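7. Nova Component - Placement API

The Placement API is implemented by the nova-placement-api service. It tracks the inventory of resource providers and their resource usage.

Consumed resources are tracked by class, such as compute-node resources, shared storage pools and IP addresses.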
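For each resource provider and resource class, Placement tracks an inventory (total, reserved, allocation ratio, unit constraints) and the amount already allocated. The sketch below models the capacity check as capacity = (total - reserved) x allocation_ratio, with min_unit, max_unit and step_size constraints on every request. The class and function names are hypothetical, and the numbers are example values.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Inventory:
    """One resource class (e.g. VCPU or MEMORY_MB) on one resource provider."""
    total: int
    reserved: int = 0
    allocation_ratio: float = 1.0
    min_unit: int = 1
    max_unit: Optional[int] = None   # None means "no tighter limit than total"
    step_size: int = 1

    def capacity(self) -> float:
        # Usable amount after the reservation, scaled by the overcommit ratio.
        return (self.total - self.reserved) * self.allocation_ratio


def can_allocate(inv: Inventory, used: int, amount: int) -> bool:
    """Check a single allocation request against the inventory and current usage."""
    max_unit = inv.total if inv.max_unit is None else inv.max_unit
    if amount < inv.min_unit or amount > max_unit or amount % inv.step_size != 0:
        return False
    return used + amount <= inv.capacity()


if __name__ == "__main__":
    vcpu = Inventory(total=8, allocation_ratio=16.0)   # 8 physical cores, 16x overcommit
    print(vcpu.capacity(), can_allocate(vcpu, used=120, amount=8))
```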

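8. VM Instantiation Flow

First, the user runs the Nova client command that creates an instance. The nova-api service listens for HTTP requests from the client, converts them into AMQP messages, and puts them on the message queue, through which it invokes the nova-conductor service. After nova-conductor receives the instantiation request from the queue, it does some preparatory work. It then asks nova-scheduler, again through the message queue, to choose a suitable compute node, and nova-scheduler reads the database to make that choice. Once nova-conductor has received the selected compute node from nova-scheduler, it notifies the nova-compute service through the message queue to create the instance.

8.1 Console Interfaces

Users can access an instance's console in several ways:

- nova-novncproxy daemon: provides a proxy for accessing running instances over VNC and supports browser-based noVNC clients.

- nova-spicehtml5proxy daemon: provides a proxy for accessing running instances over SPICE and supports HTML5 browser-based clients.

- nova-xvpvncproxy daemon: provides a proxy for accessing running instances over VNC and supports an OpenStack-specific Java client.

- nova-consoleauth daemon: provides token-based user authentication for console access. It must be used together with a console proxy.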
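The instance-creation flow described above can be traced with a toy, single-process Python simulation. A `deque` stands in for RabbitMQ, and topic strings such as "conductor" and "compute.ct2" are hypothetical. In place of real filters and weights, the scheduler takes the first host with enough free RAM. In real Nova, the conductor's request to the scheduler is a synchronous RPC call; here the reply is modeled as a message sent back to the conductor.

```python
from collections import deque


def run_boot_flow(request: dict, free_ram_by_host: dict) -> list:
    """Toy walk through api -> conductor -> scheduler -> conductor -> compute."""
    mq = deque()   # stands in for the message queue; items are (topic, message)
    log = []

    # nova-api: accept the HTTP request and cast it to the conductor.
    log.append("api: accepted request, cast to conductor")
    mq.append(("conductor", {"action": "build", "request": request}))

    while mq:
        topic, msg = mq.popleft()
        req = msg["request"]
        if topic == "conductor" and msg["action"] == "build":
            log.append("conductor: asking scheduler for a host")
            mq.append(("scheduler", {"action": "select", "request": req}))
        elif topic == "scheduler":
            # Stand-in for filtering/weighing: first host with enough free RAM.
            host = next((h for h, free in free_ram_by_host.items() if free >= req["ram_mb"]), None)
            if host is None:
                log.append("scheduler: NoValidHost")
                continue
            log.append(f"scheduler: selected {host}")
            mq.append(("conductor", {"action": "scheduled", "host": host, "request": req}))
        elif topic == "conductor" and msg["action"] == "scheduled":
            log.append(f"conductor: telling compute on {msg['host']} to build")
            mq.append((f"compute.{msg['host']}", {"action": "spawn", "request": req}))
        elif topic.startswith("compute."):
            log.append(f"{topic}: spawning {req['name']}")
    return log


if __name__ == "__main__":
    for line in run_boot_flow({"name": "vm1", "ram_mb": 2048}, {"ct1": 1024, "ct2": 4096}):
        print(line)
```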

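9. Cell Architecture

As an OpenStack Nova cluster grows, the database and the message queue become bottlenecks. Nova introduced the concept of cells to improve horizontal scalability and support distributed, large-scale deployments without making the database and messaging middleware more complex.

- A cell can be thought of as a unit. To support larger deployments, OpenStack divides a large Nova cluster into smaller units. Each unit has its own message queue and database, which removes the bottlenecks that appear as the deployment grows. Resources such as Keystone, Neutron, Cinder and Glance are shared across cells.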
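The routing idea behind cells can be sketched in Python. A shared, API-level mapping records which cell each host and instance belongs to, and each request then goes only to that cell's database and message queue. The dictionaries below are hypothetical stand-ins for Nova's mapping tables. The cell0/cell1 connection strings are copied from the `nova-manage cell_v2 list_cells` output later in this article. Keeping instances that failed scheduling in cell0 follows Nova's cells v2 design.

```python
from typing import Optional

# Hypothetical stand-ins for the API-level mapping tables (nova_api database).
CELLS = {
    "cell0": {"transport_url": "none:/", "database": "mysql+pymysql://nova:****@ct/nova_cell0"},
    "cell1": {"transport_url": "rabbit://openstack:****@ct", "database": "mysql+pymysql://nova:****@ct/nova"},
}
HOST_TO_CELL = {"ct1": "cell1", "ct2": "cell1"}
INSTANCE_TO_CELL: dict = {}


def record_instance(instance_uuid: str, host: Optional[str]) -> None:
    """Remember which cell an instance lives in; unscheduled instances go to cell0."""
    INSTANCE_TO_CELL[instance_uuid] = "cell0" if host is None else HOST_TO_CELL[host]


def cell_for(instance_uuid: str) -> dict:
    """Look up the mapping, then return only that cell's MQ and DB endpoints."""
    return CELLS[INSTANCE_TO_CELL[instance_uuid]]


if __name__ == "__main__":
    record_instance("uuid-a", "ct1")
    print(cell_for("uuid-a"))
```

Only the small mapping layer is global. Each cell's database and queue see traffic only for the hosts and instances mapped to that cell, which is how cells remove the single-database bottleneck.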

10. Deploying the Placement Component

- Create the database and the database user

[root@ct ~]# mysql -uroot -p

Enter password:

MariaDB [(none)]> create database placement;

Query OK, 1 row affected (0.000 sec)

MariaDB [(none)]> GRANT ALL PRIVILEGES ON placement.* TO 'placement'@'localhost' IDENTIFIED BY 'PLACEMENT_DBPASS';

Query OK, 0 rows affected (0.000 sec)

MariaDB [(none)]> GRANT ALL PRIVILEGES ON placement.* TO 'placement'@'%' IDENTIFIED BY 'PLACEMENT_DBPASS';

Query OK, 0 rows affected (0.000 sec)

MariaDB [(none)]> flush privileges;

Query OK, 0 rows affected (0.000 sec)

- Create the placement service user and the API endpoints

[root@ct ~]# openstack user create --domain default --password PLACEMENT_PASS placement

+---------------------+----------------------------------+

| Field | Value |

+---------------------+----------------------------------+

| domain_id | default |

| enabled | True |

| id | 23be0ae3195f445982bdf98fe66baae2 |

| name | placement |

| options | {} |

| password_expires_at | None |

+---------------------+----------------------------------+

[root@ct ~]# openstack role add --project service --user placement admin //grant the placement user the admin role on the service project

[root@ct ~]# openstack service create --name placement --description "Placement API" placement //create the placement service, of type placement

+-------------+----------------------------------+

| Field | Value |

+-------------+----------------------------------+

| description | Placement API |

| enabled | True |

| id | 568dd00cf79d45b2bc36226e971d8b3d |

| name | placement |

| type | placement |

+-------------+----------------------------------+

- Register the API endpoints for the placement service; the registration is written to MySQL

[root@ct ~]# openstack endpoint create --region RegionOne placement public http://ct:8778

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | 4cc15e92e9ee484791f52171e6181439 |

| interface | public |

| region | RegionOne |

| region_id | RegionOne |

| service_id | 568dd00cf79d45b2bc36226e971d8b3d |

| service_name | placement |

| service_type | placement |

| url | http://ct:8778 |

+--------------+----------------------------------+

[root@ct ~]# openstack endpoint create --region RegionOne placement internal http://ct:8778

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | a82b40d3e2a04b42946570d8379bca46 |

| interface | internal |

| region | RegionOne |

| region_id | RegionOne |

| service_id | 568dd00cf79d45b2bc36226e971d8b3d |

| service_name | placement |

| service_type | placement |

| url | http://ct:8778 |

+--------------+----------------------------------+

[root@ct ~]# openstack endpoint create --region RegionOne placement admin http://ct:8778

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | c9a8e1f3a8b142ba891f592c1e3a61ce |

| interface | admin |

| region | RegionOne |

| region_id | RegionOne |

| service_id | 568dd00cf79d45b2bc36226e971d8b3d |

| service_name | placement |

| service_type | placement |

| url | http://ct:8778 |

+--------------+----------------------------------+

[root@ct ~]# yum -y install openstack-placement-api //install the placement service

- Edit the configuration file

[root@ct ~]# cp /etc/placement/placement.conf{,.bak}

[root@ct ~]# grep -Ev '^$|#' /etc/placement/placement.conf.bak > /etc/placement/placement.conf

[root@ct ~]# openstack-config --set /etc/placement/placement.conf placement_database connection mysql+pymysql://placement:PLACEMENT_DBPASS@ct/placement

[root@ct ~]# openstack-config --set /etc/placement/placement.conf api auth_strategy keystone

[root@ct ~]# openstack-config --set /etc/placement/placement.conf keystone_authtoken auth_url http://ct:5000/v3

[root@ct ~]# openstack-config --set /etc/placement/placement.conf keystone_authtoken memcached_servers ct:11211

[root@ct ~]# openstack-config --set /etc/placement/placement.conf keystone_authtoken auth_type password

[root@ct ~]# openstack-config --set /etc/placement/placement.conf keystone_authtoken project_domain_name Default

[root@ct ~]# openstack-config --set /etc/placement/placement.conf keystone_authtoken user_domain_name Default

[root@ct ~]# openstack-config --set /etc/placement/placement.conf keystone_authtoken project_name service

[root@ct ~]# openstack-config --set /etc/placement/placement.conf keystone_authtoken username placement

[root@ct ~]# openstack-config --set /etc/placement/placement.conf keystone_authtoken password PLACEMENT_PASS

- View the placement configuration file

[root@ct ~]# cd /etc/placement/

[root@ct placement]# cat placement.conf

[DEFAULT]

[api]

auth_strategy = keystone

[cors]

[keystone_authtoken]

auth_url = http://ct:5000/v3 //Keystone address

memcached_servers = ct:11211 //session information is cached in memcached

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = placement

password = PLACEMENT_PASS

[oslo_policy]

[placement]

[placement_database]

connection = mysql+pymysql://placement:PLACEMENT_DBPASS@ct/placement

[profiler]

[root@ct placement]# su -s /bin/sh -c "placement-manage db sync" placement //populate the database

/usr/lib/python2.7/site-packages/pymysql/cursors.py:170: Warning: (1280, u"Name 'alembic_version_pkc' ignored for PRIMARY key.")

result = self._query(query)

[root@ct ~]# cd /etc/httpd/conf.d

[root@ct conf.d]# ls

**00-placement-api.conf** autoindex.conf README userdir.conf welcome.conf wsgi-keystone.conf

//this virtual-host configuration file is created automatically when the placement service is installed

[root@ct conf.d]# cat 00-placement-api.conf

Listen 8778

<VirtualHost *:8778>

  WSGIProcessGroup placement-api

  WSGIApplicationGroup %{GLOBAL}

  WSGIPassAuthorization On

  WSGIDaemonProcess placement-api processes=3 threads=1 user=placement group=placement

  WSGIScriptAlias / /usr/bin/placement-api

  <IfVersion >= 2.4>

    ErrorLogFormat "%M"

  </IfVersion>

  ErrorLog /var/log/placement/placement-api.log

  #SSLEngine On

  #SSLCertificateFile ...

  #SSLCertificateKeyFile ...

</VirtualHost>

Alias /placement-api /usr/bin/placement-api

<Location /placement-api>

  SetHandler wsgi-script

  Options +ExecCGI

  WSGIProcessGroup placement-api

  WSGIApplicationGroup %{GLOBAL}

  WSGIPassAuthorization On

</Location>

<Directory /usr/bin> //在此处下入下面内容,

<IfVersion >= 2.4> //apache版本;允许apache访问/usr/bin目录;否则在访问apache的api时会报403。 Require all granted

</IfVersion>

<IfVersion < 2.4> Order allow,deny Allow from all //允许apache访问

</IfVersion>

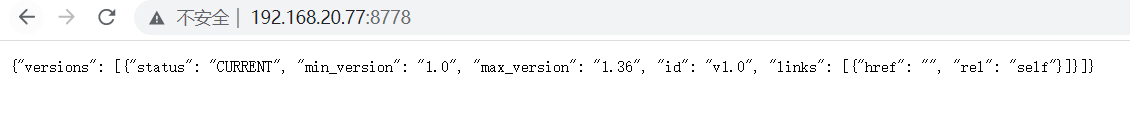

</Directory>[root@ct conf.d]# systemctl restart httpd //重启apache[root@ct conf.d]# curl ct:8778 //测试访问

{"versions": [{"status": "CURRENT", "min_version": "1.0", "max_version": "1.36", "id": "v1.0", "links": [{"href": "", "rel": "self"}]}]}

- Test from a web browser

[root@ct conf.d]# netstat -natp | grep 8778

tcp 0 0 192.168.30.10:40222 192.168.30.10:8778 TIME_WAIT -

tcp 0 0 192.168.30.10:40226 192.168.30.10:8778 TIME_WAIT -

tcp6 0 0 :::8778 :::* LISTEN 81365/httpd

[root@ct conf.d]# placement-status upgrade check //check the placement status

+----------------------------------+

| Upgrade Check Results |

+----------------------------------+

| Check: Missing Root Provider IDs |

| Result: Success |

| Details: None |

+----------------------------------+

| Check: Incomplete Consumers |

| Result: Success |

| Details: None |

+----------------------------------+
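Both placement.conf and nova.conf use SQLAlchemy-style database URLs of the form `dialect+driver://user:password@host/database`. Before running the db sync commands, such a string can be double-checked by splitting it with Python's standard library. The helper name `parse_db_url` is hypothetical.

```python
from urllib.parse import urlsplit


def parse_db_url(url: str) -> dict:
    """Split dialect+driver://user:password@host[:port]/database into its parts."""
    parts = urlsplit(url)
    dialect, _, driver = parts.scheme.partition("+")
    return {
        "dialect": dialect,
        "driver": driver or None,
        "user": parts.username,
        "password": parts.password,
        "host": parts.hostname,
        "port": parts.port,           # None when the default port is used
        "database": parts.path.lstrip("/"),
    }


if __name__ == "__main__":
    print(parse_db_url("mysql+pymysql://placement:PLACEMENT_DBPASS@ct/placement"))
```

If a password contains characters such as `@` or `/`, it must be percent-encoded in the URL. Otherwise the host and database parts are split incorrectly.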

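11. Deploying Nova

- Controller node

nova-api (main Nova service)

nova-scheduler (Nova scheduling service)

nova-conductor (Nova database service, provides database access)

nova-novncproxy (Nova VNC service, provides the instance console)

- Compute nodes ct1 and ct2

nova-compute (Nova compute service)

- Create the databases and the database user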

[root@ct ~]# mysql -uroot -p

Enter password:

MariaDB [(none)]> create database nova_api;

Query OK, 1 row affected (0.001 sec)

MariaDB [(none)]> create database nova;

Query OK, 1 row affected (0.000 sec)

MariaDB [(none)]> create database nova_cell0;

Query OK, 1 row affected (0.000 sec)

MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'localhost' IDENTIFIED BY 'NOVA_DBPASS';

Query OK, 0 rows affected (0.001 sec)

MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'%' IDENTIFIED BY 'NOVA_DBPASS';

Query OK, 0 rows affected (0.000 sec)

MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'localhost' IDENTIFIED BY 'NOVA_DBPASS';

Query OK, 0 rows affected (0.000 sec)

MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'%' IDENTIFIED BY 'NOVA_DBPASS';

Query OK, 0 rows affected (0.000 sec)

MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova_cell0.* TO 'nova'@'localhost' IDENTIFIED BY 'NOVA_DBPASS';

Query OK, 0 rows affected (0.000 sec)

MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova_cell0.* TO 'nova'@'%' IDENTIFIED BY 'NOVA_DBPASS';

Query OK, 0 rows affected (0.000 sec)

MariaDB [(none)]> flush privileges;

Query OK, 0 rows affected (0.000 sec)

[root@ct ~]# openstack user create --domain default --password NOVA_PASS nova //create the nova user

+---------------------+----------------------------------+

| Field | Value |

+---------------------+----------------------------------+

| domain_id | default |

| enabled | True |

| id | 9b8a20213d5340819a9bb375cfe57fca |

| name | nova |

| options | {} |

| password_expires_at | None |

+---------------------+----------------------------------+

[root@ct ~]# openstack role add --project service --user nova admin //add the nova user to the service project with the admin role

[root@ct ~]# openstack service create --name nova --description "OpenStack Compute" compute //create the nova service

+-------------+----------------------------------+

| Field | Value |

+-------------+----------------------------------+

| description | OpenStack Compute |

| enabled | True |

| id | 3ceed25fb2144e44bb4030be00abfd26 |

| name | nova |

| type | compute |

+-------------+----------------------------------+

- Associate endpoints with the nova service

[root@ct ~]# openstack endpoint create --region RegionOne compute public http://ct:8774/v2.1

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | 73c84082f2d64b87880b3c5aaa178a76 |

| interface | public |

| region | RegionOne |

| region_id | RegionOne |

| service_id | 3ceed25fb2144e44bb4030be00abfd26 |

| service_name | nova |

| service_type | compute |

| url | http://ct:8774/v2.1 |

+--------------+----------------------------------+

[root@ct ~]# openstack endpoint create --region RegionOne compute internal http://ct:8774/v2.1

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | 0335b286b9ac4627adc0d02a4411dc15 |

| interface | internal |

| region | RegionOne |

| region_id | RegionOne |

| service_id | 3ceed25fb2144e44bb4030be00abfd26 |

| service_name | nova |

| service_type | compute |

| url | http://ct:8774/v2.1 |

+--------------+----------------------------------+

[root@ct ~]# openstack endpoint create --region RegionOne compute admin http://ct:8774/v2.1

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | 5eabd4d0c703451e89fe4952f9a138d3 |

| interface | admin |

| region | RegionOne |

| region_id | RegionOne |

| service_id | 3ceed25fb2144e44bb4030be00abfd26 |

| service_name | nova |

| service_type | compute |

| url | http://ct:8774/v2.1 |

+--------------+----------------------------------+

[root@ct ~]# yum -y install openstack-nova-api openstack-nova-conductor openstack-nova-novncproxy openstack-nova-scheduler //install the Nova components

[root@ct ~]# cp -a /etc/nova/nova.conf{,.bak}

[root@ct ~]# grep -Ev '^$|#' /etc/nova/nova.conf.bak > /etc/nova/nova.conf

- In the commands below, my_ip must be set to the internal IP

[root@ct ~]# openstack-config --set /etc/nova/nova.conf DEFAULT enabled_apis osapi_compute,metadata

[root@ct ~]# openstack-config --set /etc/nova/nova.conf DEFAULT my_ip 192.168.30.10 //set this to ct's internal IP

[root@ct ~]# openstack-config --set /etc/nova/nova.conf DEFAULT use_neutron true

[root@ct ~]# openstack-config --set /etc/nova/nova.conf DEFAULT firewall_driver nova.virt.firewall.NoopFirewallDriver

[root@ct ~]# openstack-config --set /etc/nova/nova.conf DEFAULT transport_url rabbit://openstack:RABBIT_PASS@ct

[root@ct ~]# openstack-config --set /etc/nova/nova.conf api_database connection mysql+pymysql://nova:NOVA_DBPASS@ct/nova_api

[root@ct ~]# openstack-config --set /etc/nova/nova.conf database connection mysql+pymysql://nova:NOVA_DBPASS@ct/nova

[root@ct ~]# openstack-config --set /etc/nova/nova.conf placement_database connection mysql+pymysql://placement:PLACEMENT_DBPASS@ct/placement

[root@ct ~]# openstack-config --set /etc/nova/nova.conf api auth_strategy keystone

[root@ct ~]# openstack-config --set /etc/nova/nova.conf keystone_authtoken auth_url http://ct:5000/v3

[root@ct ~]# openstack-config --set /etc/nova/nova.conf keystone_authtoken memcached_servers ct:11211

[root@ct ~]# openstack-config --set /etc/nova/nova.conf keystone_authtoken auth_type password

[root@ct ~]# openstack-config --set /etc/nova/nova.conf keystone_authtoken project_domain_name Default

[root@ct ~]# openstack-config --set /etc/nova/nova.conf keystone_authtoken user_domain_name Default

[root@ct ~]# openstack-config --set /etc/nova/nova.conf keystone_authtoken project_name service

[root@ct ~]# openstack-config --set /etc/nova/nova.conf keystone_authtoken username nova

[root@ct ~]# openstack-config --set /etc/nova/nova.conf keystone_authtoken password NOVA_PASS

[root@ct ~]# openstack-config --set /etc/nova/nova.conf vnc enabled true

[root@ct ~]# openstack-config --set /etc/nova/nova.conf vnc server_listen '$my_ip'

[root@ct ~]# openstack-config --set /etc/nova/nova.conf vnc server_proxyclient_address '$my_ip'

[root@ct ~]# openstack-config --set /etc/nova/nova.conf glance api_servers http://ct:9292

[root@ct ~]# openstack-config --set /etc/nova/nova.conf oslo_concurrency lock_path /var/lib/nova/tmp

[root@ct ~]# openstack-config --set /etc/nova/nova.conf placement region_name RegionOne

[root@ct ~]# openstack-config --set /etc/nova/nova.conf placement project_domain_name Default

[root@ct ~]# openstack-config --set /etc/nova/nova.conf placement project_name service

[root@ct ~]# openstack-config --set /etc/nova/nova.conf placement auth_type password

[root@ct ~]# openstack-config --set /etc/nova/nova.conf placement user_domain_name Default

[root@ct ~]# openstack-config --set /etc/nova/nova.conf placement auth_url http://ct:5000/v3

[root@ct ~]# openstack-config --set /etc/nova/nova.conf placement username placement

[root@ct ~]# openstack-config --set /etc/nova/nova.conf placement password PLACEMENT_PASS

[root@ct ~]# cat /etc/nova/nova.conf //view the file

[DEFAULT]

enabled_apis = osapi_compute,metadata //API types to enable

my_ip = 192.168.30.10 //local IP address

use_neutron = true

firewall_driver = nova.virt.firewall.NoopFirewallDriver

transport_url = rabbit://openstack:RABBIT_PASS@ct //RabbitMQ connection

[api]

auth_strategy = keystone //use Keystone for authentication

[api_database]

connection = mysql+pymysql://nova:NOVA_DBPASS@ct/nova_api

[barbican]

[cache]

[cinder]

[compute]

[conductor]

[console]

[consoleauth]

[cors]

[database]

connection = mysql+pymysql://nova:NOVA_DBPASS@ct/nova

[devices]

[ephemeral_storage_encryption]

[filter_scheduler]

[glance]

api_servers = http://ct:9292

[guestfs]

[healthcheck]

[hyperv]

[ironic]

[key_manager]

[keystone]

[keystone_authtoken] //Keystone authentication settings

auth_url = http://ct:5000/v3 //URL to authenticate against

memcached_servers = ct:11211 //memcached address:port

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = nova

password = NOVA_PASS

[libvirt]

[metrics]

[mks]

[neutron]

[notifications]

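[osapi_v21]

[oslo_concurrency] //lock path

lock_path = /var/lib/nova/tmp //directory for file locks that serialize operations which must not run in parallel, for example certain steps of creating a VM: each step must finish before the next one starts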

[oslo_messaging_amqp]

[oslo_messaging_kafka]

[oslo_messaging_notifications]

[oslo_messaging_rabbit]

[oslo_middleware]

[oslo_policy]

[pci]

[placement]

region_name = RegionOne

project_domain_name = Default

project_name = service

auth_type = password

user_domain_name = Default

auth_url = http://ct:5000/v3

username = placement

password = PLACEMENT_PASS

[powervm]

[privsep]

[profiler]

[quota]

[rdp]

[remote_debug]

[scheduler]

[serial_console]

[service_user]

[spice]

[upgrade_levels]

[vault]

[vendordata_dynamic_auth]

[vmware]

[vnc]

enabled = true

server_listen = $my_ip //VNC listen address

server_proxyclient_address = $my_ip //address the VNC proxy uses to reach this host; here the management network address

[workarounds]

[wsgi]

[xenserver]

[xvp]

[zvm]

[placement_database]

connection = mysql+pymysql://placement:PLACEMENT_DBPASS@ct/placement

[root@ct ~]# su -s /bin/sh -c "nova-manage api_db sync" nova //initialize the nova_api database

[root@ct ~]# su -s /bin/sh -c "nova-manage cell_v2 map_cell0" nova //register the cell0 database

[root@ct ~]# su -s /bin/sh -c "nova-manage cell_v2 create_cell --name=cell1 --verbose" nova //create the cell1 cell

a6a23d0f-122c-4f3f-9a6a-725bdf5a634a

[root@ct ~]# su -s /bin/sh -c "nova-manage db sync" nova //populate the nova database

/usr/lib/python2.7/site-packages/pymysql/cursors.py:170: Warning: (1831, u'Duplicate index `block_device_mapping_instance_uuid_virtual_name_device_name_idx`. This is deprecated and will be disallowed in a future release')

result = self._query(query)

/usr/lib/python2.7/site-packages/pymysql/cursors.py:170: Warning: (1831, u'Duplicate index `uniq_instances0uuid`. This is deprecated and will be disallowed in a future release')

result = self._query(query)

[root@ct ~]# su -s /bin/sh -c "nova-manage cell_v2 list_cells" nova //verify that the cells are registered

+-------+--------------------------------------+----------------------------+-----------------------------------------+----------+

| Name | UUID | Transport URL | Database Connection | Disabled |

+-------+--------------------------------------+----------------------------+-----------------------------------------+----------+

| cell0 | 00000000-0000-0000-0000-000000000000 | none:/ | mysql+pymysql://nova:****@ct/nova_cell0 | False |

| cell1 | a6a23d0f-122c-4f3f-9a6a-725bdf5a634a | rabbit://openstack:****@ct | mysql+pymysql://nova:****@ct/nova | False |

+-------+--------------------------------------+----------------------------+-----------------------------------------+----------+

[root@ct ~]# systemctl enable openstack-nova-api.service openstack-nova-scheduler.service openstack-nova-conductor.service openstack-nova-novncproxy.service //enable at boot

Created symlink from /etc/systemd/system/multi-user.target.wants/openstack-nova-api.service to /usr/lib/systemd/system/openstack-nova-api.service.

Created symlink from /etc/systemd/system/multi-user.target.wants/openstack-nova-scheduler.service to /usr/lib/systemd/system/openstack-nova-scheduler.service.

Created symlink from /etc/systemd/system/multi-user.target.wants/openstack-nova-conductor.service to /usr/lib/systemd/system/openstack-nova-conductor.service.

Created symlink from /etc/systemd/system/multi-user.target.wants/openstack-nova-novncproxy.service to /usr/lib/systemd/system/openstack-nova-novncproxy.service.

[root@ct ~]# systemctl start openstack-nova-scheduler.service openstack-nova-conductor.service openstack-nova-novncproxy.service openstack-nova-api.service //start the services

[root@ct ~]# netstat -tnlup|egrep '8774|8775'

tcp 0 0 0.0.0.0:8775 0.0.0.0:* LISTEN 85764/python2

tcp 0 0 0.0.0.0:8774 0.0.0.0:* LISTEN 85764/python2

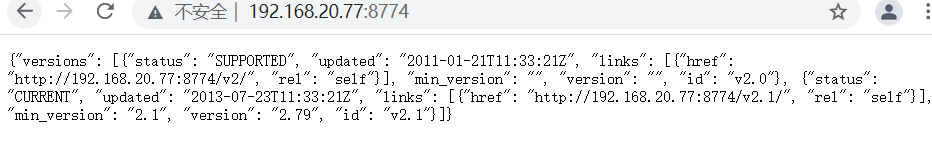

[root@ct ~]# curl http://ct:8774

{"versions": [{"status": "SUPPORTED", "updated": "2011-01-21T11:33:21Z", "links": [{"href": "http://ct:8774/v2/", "rel": "self"}], "min_version": "", "version": "", "id": "v2.0"}, {"status": "CURRENT", "updated": "2013-07-23T11:33:21Z", "links": [{"href": "http://ct:8774/v2.1/", "rel": "self"}], "min_version": "2.1", "version": "2.79", "id": "v2.1"}]}
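The JSON returned by `curl http://ct:8774` lists the available API versions. The entry with status CURRENT (v2.1) advertises its microversion range in `min_version` and `version`. Below is a small Python sketch, with hypothetical helper names, that extracts this range and checks whether a given microversion is supported.

```python
import json
from typing import Optional, Tuple


def current_version(doc: str) -> Optional[Tuple[str, str, str]]:
    """Return (id, min microversion, max microversion) of the CURRENT API version."""
    for version in json.loads(doc)["versions"]:
        if version["status"] == "CURRENT":
            return version["id"], version["min_version"], version["version"]
    return None


def parse_microversion(text: str) -> Tuple[int, int]:
    # "2.79" -> (2, 79): compare as integer pairs, never as floats or strings.
    major, minor = text.split(".")
    return int(major), int(minor)


def supports(doc: str, wanted: str) -> bool:
    found = current_version(doc)
    if found is None:
        return False
    _, low, high = found
    return parse_microversion(low) <= parse_microversion(wanted) <= parse_microversion(high)
```

Comparing microversions as (major, minor) integer pairs makes 2.10 newer than 2.9. Comparing them as floats or strings gets this wrong.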

- Or test from a web browser

- Compute node ct1 configuration

[root@ct1 ~]# yum -y install openstack-nova-compute //install the compute component

[root@ct1 ~]# cp -a /etc/nova/nova.conf{,.bak}

[root@ct1 ~]# grep -Ev '^$|#' /etc/nova/nova.conf.bak > /etc/nova/nova.conf

- In the commands below, my_ip must be set to the internal IP

[root@ct1 ~]# openstack-config --set /etc/nova/nova.conf DEFAULT enabled_apis osapi_compute,metadata

[root@ct1 ~]# openstack-config --set /etc/nova/nova.conf DEFAULT transport_url rabbit://openstack:RABBIT_PASS@ct

[root@ct1 ~]# openstack-config --set /etc/nova/nova.conf DEFAULT my_ip 192.168.30.20 //change to this node's internal IP

[root@ct1 ~]# openstack-config --set /etc/nova/nova.conf DEFAULT use_neutron true

[root@ct1 ~]# openstack-config --set /etc/nova/nova.conf DEFAULT firewall_driver nova.virt.firewall.NoopFirewallDriver

[root@ct1 ~]# openstack-config --set /etc/nova/nova.conf api auth_strategy keystone

[root@ct1 ~]# openstack-config --set /etc/nova/nova.conf keystone_authtoken auth_url http://ct:5000/v3

[root@ct1 ~]# openstack-config --set /etc/nova/nova.conf keystone_authtoken memcached_servers ct:11211

[root@ct1 ~]# openstack-config --set /etc/nova/nova.conf keystone_authtoken auth_type password

[root@ct1 ~]# openstack-config --set /etc/nova/nova.conf keystone_authtoken project_domain_name Default

[root@ct1 ~]# openstack-config --set /etc/nova/nova.conf keystone_authtoken user_domain_name Default

[root@ct1 ~]# openstack-config --set /etc/nova/nova.conf keystone_authtoken project_name service

[root@ct1 ~]# openstack-config --set /etc/nova/nova.conf keystone_authtoken username nova

[root@ct1 ~]# openstack-config --set /etc/nova/nova.conf keystone_authtoken password NOVA_PASS

[root@ct1 ~]# openstack-config --set /etc/nova/nova.conf vnc enabled true

[root@ct1 ~]# openstack-config --set /etc/nova/nova.conf vnc server_listen 0.0.0.0

[root@ct1 ~]# openstack-config --set /etc/nova/nova.conf vnc server_proxyclient_address '$my_ip'

[root@ct1 ~]# openstack-config --set /etc/nova/nova.conf vnc novncproxy_base_url http://192.168.30.10:6080/vnc_auto.html //address of the controller (ct) that the browser uses to reach the noVNC proxy

[root@ct1 ~]# openstack-config --set /etc/nova/nova.conf glance api_servers http://ct:9292

[root@ct1 ~]# openstack-config --set /etc/nova/nova.conf oslo_concurrency lock_path /var/lib/nova/tmp

[root@ct1 ~]# openstack-config --set /etc/nova/nova.conf placement region_name RegionOne

[root@ct1 ~]# openstack-config --set /etc/nova/nova.conf placement project_domain_name Default

[root@ct1 ~]# openstack-config --set /etc/nova/nova.conf placement project_name service

[root@ct1 ~]# openstack-config --set /etc/nova/nova.conf placement auth_type password

[root@ct1 ~]# openstack-config --set /etc/nova/nova.conf placement user_domain_name Default

[root@ct1 ~]# openstack-config --set /etc/nova/nova.conf placement auth_url http://ct:5000/v3

[root@ct1 ~]# openstack-config --set /etc/nova/nova.conf placement username placement

[root@ct1 ~]# openstack-config --set /etc/nova/nova.conf placement password PLACEMENT_PASS

[root@ct1 ~]# openstack-config --set /etc/nova/nova.conf libvirt virt_type qemu


[root@ct1 ~]# cd /etc/nova

[root@ct1 nova]# cat nova.conf

[DEFAULT]

enabled_apis = osapi_compute,metadata

transport_url = rabbit://openstack:RABBIT_PASS@ct

my_ip = 192.168.30.20

use_neutron = true

firewall_driver = nova.virt.firewall.NoopFirewallDriver

[api]

auth_strategy = keystone

[api_database]

[barbican]

[cache]

[cinder]

[compute]

[conductor]

[console]

[consoleauth]

[cors]

[database]

[devices]

[ephemeral_storage_encryption]

[filter_scheduler]

[glance]

api_servers = http://ct:9292

[guestfs]

[healthcheck]

[hyperv]

[ironic]

[key_manager]

[keystone]

[keystone_authtoken]

auth_url = http://ct:5000/v3

memcached_servers = ct:11211

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = nova

password = NOVA_PASS

[libvirt]

virt_type = qemu

[metrics]

[mks]

[neutron]

[notifications]

[osapi_v21]

[oslo_concurrency]

lock_path = /var/lib/nova/tmp

[oslo_messaging_amqp]

[oslo_messaging_kafka]

[oslo_messaging_notifications]

[oslo_messaging_rabbit]

[oslo_middleware]

[oslo_policy]

[pci]

[placement]

region_name = RegionOne

project_domain_name = Default

project_name = service

auth_type = password

user_domain_name = Default

auth_url = http://ct:5000/v3

username = placement

password = PLACEMENT_PASS

[powervm]

[privsep]

[profiler]

[quota]

[rdp]

[remote_debug]

[scheduler]

[serial_console]

[service_user]

[spice]

[upgrade_levels]

[vault]

[vendordata_dynamic_auth]

[vmware]

[vnc]

enabled = true

server_listen = 0.0.0.0

server_proxyclient_address = $my_ip

novncproxy_base_url = http://192.168.30.10:6080/vnc_auto.html //the IP address must be set explicitly; otherwise the instance console cannot be opened from the web UI once the deployment is complete

[workarounds]

[wsgi]

[xenserver]

[xvp]

[zvm]

[root@ct1 nova]# systemctl enable libvirtd.service openstack-nova-compute.service //enable at boot

Created symlink from /etc/systemd/system/multi-user.target.wants/openstack-nova-compute.service to /usr/lib/systemd/system/openstack-nova-compute.service.

[root@ct1 nova]# systemctl start libvirtd.service openstack-nova-compute.service //start the services
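nova.conf values such as `server_proxyclient_address = $my_ip` refer to another option. The Python sketch below roughly imitates that substitution with the standard library, so the effective [vnc] settings of ct1 can be checked. It only approximates oslo.config's behavior: it looks names up in [DEFAULT] only. The helper name `resolved_vnc` is hypothetical. The sketch expects a config without the `//` annotations used in this article, since configparser would keep them as part of the value.

```python
import configparser
from string import Template


def resolved_vnc(conf_text: str) -> dict:
    """Return the [vnc] options with $name references expanded from [DEFAULT]."""
    # default_section is renamed so [DEFAULT] does not leak into every section,
    # and interpolation=None disables configparser's own %(name)s syntax.
    cp = configparser.ConfigParser(interpolation=None, default_section="__unused__")
    cp.read_string(conf_text)
    defaults = dict(cp["DEFAULT"]) if cp.has_section("DEFAULT") else {}
    return {key: Template(value).safe_substitute(defaults) for key, value in cp["vnc"].items()}


SAMPLE = """
[DEFAULT]
my_ip = 192.168.30.20

[vnc]
enabled = true
server_listen = 0.0.0.0
server_proxyclient_address = $my_ip
novncproxy_base_url = http://192.168.30.10:6080/vnc_auto.html
"""

if __name__ == "__main__":
    for key, value in resolved_vnc(SAMPLE).items():
        print(f"{key} = {value}")
```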

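- Compute node ct2 configuration

[root@ct2 ~]# yum -y install openstack-nova-compute //install the compute component

[root@ct2 ~]# cp -a /etc/nova/nova.conf{,.bak}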

[root@ct2 ~]# grep -Ev '^$|#' /etc/nova/nova.conf.bak > /etc/nova/nova.conf

[root@ct2 ~]# openstack-config --set /etc/nova/nova.conf DEFAULT enabled_apis osapi_compute,metadata

[root@ct2 ~]# openstack-config --set /etc/nova/nova.conf DEFAULT transport_url rabbit://openstack:RABBIT_PASS@ct

[root@ct2 ~]# openstack-config --set /etc/nova/nova.conf DEFAULT my_ip 192.168.30.30

[root@ct2 ~]# openstack-config --set /etc/nova/nova.conf DEFAULT use_neutron true

[root@ct2 ~]# openstack-config --set /etc/nova/nova.conf DEFAULT firewall_driver nova.virt.firewall.NoopFirewallDriver

[root@ct2 ~]# openstack-config --set /etc/nova/nova.conf api auth_strategy keystone

[root@ct2 ~]# openstack-config --set /etc/nova/nova.conf keystone_authtoken auth_url http://ct:5000/v3

[root@ct2 ~]# openstack-config --set /etc/nova/nova.conf keystone_authtoken memcached_servers ct:11211

[root@ct2 ~]# openstack-config --set /etc/nova/nova.conf keystone_authtoken auth_type password

[root@ct2 ~]# openstack-config --set /etc/nova/nova.conf keystone_authtoken project_domain_name Default

[root@ct2 ~]# openstack-config --set /etc/nova/nova.conf keystone_authtoken user_domain_name Default

[root@ct2 ~]# openstack-config --set /etc/nova/nova.conf keystone_authtoken project_name service

[root@ct2 ~]# openstack-config --set /etc/nova/nova.conf keystone_authtoken username nova

[root@ct2 ~]# openstack-config --set /etc/nova/nova.conf keystone_authtoken password NOVA_PASS

[root@ct2 ~]# openstack-config --set /etc/nova/nova.conf vnc enabled true

[root@ct2 ~]# openstack-config --set /etc/nova/nova.conf vnc server_listen 0.0.0.0

[root@ct2 ~]# openstack-config --set /etc/nova/nova.conf vnc server_proxyclient_address '$my_ip'

[root@ct2 ~]# openstack-config --set /etc/nova/nova.conf vnc novncproxy_base_url http://192.168.30.10:6080/vnc_auto.html //address of the controller (ct) that the browser uses to reach the noVNC proxy

[root@ct2 ~]# openstack-config --set /etc/nova/nova.conf glance api_servers http://ct:9292

[root@ct2 ~]# openstack-config --set /etc/nova/nova.conf oslo_concurrency lock_path /var/lib/nova/tmp

[root@ct2 ~]# openstack-config --set /etc/nova/nova.conf placement region_name RegionOne

[root@ct2 ~]# openstack-config --set /etc/nova/nova.conf placement project_domain_name Default

[root@ct2 ~]# openstack-config --set /etc/nova/nova.conf placement project_name service

[root@ct2 ~]# openstack-config --set /etc/nova/nova.conf placement auth_type password

[root@ct2 ~]# openstack-config --set /etc/nova/nova.conf placement user_domain_name Default

[root@ct2 ~]# openstack-config --set /etc/nova/nova.conf placement auth_url http://ct:5000/v3

[root@ct2 ~]# openstack-config --set /etc/nova/nova.conf placement username placement

[root@ct2 ~]# openstack-config --set /etc/nova/nova.conf placement password PLACEMENT_PASS

[root@ct2 ~]# openstack-config --set /etc/nova/nova.conf libvirt virt_type qemu

[root@ct2 ~]# cd /etc/nova

[root@ct2 nova]# cat nova.conf

[DEFAULT]

enabled_apis = osapi_compute,metadata

transport_url = rabbit://openstack:RABBIT_PASS@ct

my_ip = 192.168.30.30

use_neutron = true

firewall_driver = nova.virt.firewall.NoopFirewallDriver

[api]

auth_strategy = keystone

[api_database]

[barbican]

[cache]

[cinder]

[compute]

[conductor]

[console]

[consoleauth]

[cors]

[database]

[devices]

[ephemeral_storage_encryption]

[filter_scheduler]

[glance]

api_servers = http://ct:9292

[guestfs]

[healthcheck]

[hyperv]

[ironic]

[key_manager]

[keystone]

[keystone_authtoken]

auth_url = http://ct:5000/v3

memcached_servers = ct:11211

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = nova

password = NOVA_PASS

[libvirt]

virt_type = qemu

[metrics]

[mks]

[neutron]

[notifications]

[osapi_v21]

[oslo_concurrency]

lock_path = /var/lib/nova/tmp

[oslo_messaging_amqp]

[oslo_messaging_kafka]

[oslo_messaging_notifications]

[oslo_messaging_rabbit]

[oslo_middleware]

[oslo_policy]

[pci]

[placement]

region_name = RegionOne

project_domain_name = Default

project_name = service

auth_type = password

user_domain_name = Default

auth_url = http://ct:5000/v3

username = placement

password = PLACEMENT_PASS

[powervm]

[privsep]

[profiler]

[quota]

[rdp]

[remote_debug]

[scheduler]

[serial_console]

[service_user]

[spice]

[upgrade_levels]

[vault]

[vendordata_dynamic_auth]

[vmware]

[vnc]

enabled = true

server_listen = 0.0.0.0

server_proxyclient_address = $my_ip

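novncproxy_base_url = http://192.168.30.10:6080/vnc_auto.html //The IP address must be filled in manually; otherwise, once the deployment is finished, the instances cannot be reached through the console in the web UI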

[workarounds]

[wsgi]

[xenserver]

[xvp]

[zvm]

[root@ct2 nova]# systemctl enable libvirtd.service openstack-nova-compute.service

Created symlink from /etc/systemd/system/multi-user.target.wants/openstack-nova-compute.service to /usr/lib/systemd/system/openstack-nova-compute.service.

[root@ct2 nova]# systemctl start libvirtd.service openstack-nova-compute.service

- ct controller node configuration

[root@ct ~]# openstack compute service list --service nova-compute //check whether the compute nodes have registered with the controller (they register through the message queue)

+----+--------------+------+------+---------+-------+----------------------------+

| ID | Binary | Host | Zone | Status | State | Updated At |

+----+--------------+------+------+---------+-------+----------------------------+

| 7 | nova-compute | ct1 | nova | enabled | up | 2021-08-26T08:57:41.000000 |

| 8 | nova-compute | ct2 | nova | enabled | up | 2021-08-26T08:57:42.000000 |

+----+--------------+------+------+---------+-------+----------------------------+

[root@ct ~]# su -s /bin/sh -c "nova-manage cell_v2 discover_hosts --verbose" nova //scan for the compute nodes available in this OpenStack deployment and map each newly discovered node into a cell

Found 2 cell mappings.

Skipping cell0 since it does not contain hosts.

Getting computes from cell 'cell1': a6a23d0f-122c-4f3f-9a6a-725bdf5a634a

Checking host mapping for compute host 'ct1': d2359c17-f4b7-4154-98dc-353a10331de8

Creating host mapping for compute host 'ct1': d2359c17-f4b7-4154-98dc-353a10331de8

Checking host mapping for compute host 'ct2': 51e68996-4221-47a5-abbe-7c028c55225e

Creating host mapping for compute host 'ct2': 51e68996-4221-47a5-abbe-7c028c55225e

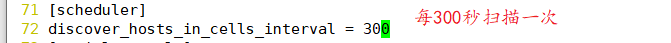

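Found 2 unmapped computes in cell: a6a23d0f-122c-4f3f-9a6a-725bdf5a634a

[root@ct ~]# vim /etc/nova/nova.conf //by default, the scan has to be run on the controller every time a compute node is added; to avoid this, edit the controller's main nova configuration file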
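The exact change is not shown here. Assuming the goal is automatic host discovery, the official Nova installation guide uses the `discover_hosts_in_cells_interval` option in the `[scheduler]` section. With it set, nova-scheduler runs the discovery itself at that interval, given in seconds (the guide uses 300), so new compute nodes no longer need a manual `discover_hosts`. The sketch below shows the equivalent edit made with `openstack-config`, as in the rest of this walkthrough. The periodic task runs inside nova-scheduler, so that service needs a restart before the option takes effect:

```shell
# Assumption: periodic host discovery is the intended change (option taken
# from the official Nova install guide). Run on the controller node.
openstack-config --set /etc/nova/nova.conf scheduler discover_hosts_in_cells_interval 300

# The discovery task runs inside nova-scheduler, so restart that service
systemctl restart openstack-nova-scheduler.service
```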

[root@ct ~]# systemctl restart openstack-nova-api.service

- Check that all services are running normally

[root@ct ~]# openstack compute service list //check that the compute services registered successfully

+----+----------------+------+----------+---------+-------+----------------------------+

| ID | Binary | Host | Zone | Status | State | Updated At |

+----+----------------+------+----------+---------+-------+----------------------------+

| 4 | nova-conductor | ct | internal | enabled | up | 2021-08-26T09:02:30.000000 |

| 5 | nova-scheduler | ct | internal | enabled | up | 2021-08-26T09:02:30.000000 |

| 7 | nova-compute | ct1 | nova | enabled | up | 2021-08-26T09:02:31.000000 |

| 8 | nova-compute | ct2 | nova | enabled | up | 2021-08-26T09:02:32.000000 |

+----+----------------+------+----------+---------+-------+----------------------------+
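Checking this table by eye becomes error-prone as more nodes are added. The sketch below automates the check. It assumes the OpenStack client's `-f value -c ...` output options, which print the selected columns space-separated with one service per line. The function name `all_services_up` is hypothetical:

```shell
# Hypothetical helper: reads "Binary Host State" lines on stdin and reports
# every service whose State is not "up".
# Exit status is 0 if all services are up, 1 otherwise.
all_services_up() {
    awk '
        NF >= 3 && $3 != "up" { print "DOWN: " $1 " on " $2; bad = 1 }
        END { exit (bad ? 1 : 0) }
    '
}

# Usage on the controller (admin credentials sourced):
# openstack compute service list -f value -c Binary -c Host -c State | all_services_up
```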

[root@ct ~]# openstack catalog list //check that every component's API endpoints are registered correctly

+-----------+-----------+---------------------------------+

| Name | Type | Endpoints |

+-----------+-----------+---------------------------------+

| nova | compute | RegionOne |

| | | internal: http://ct:8774/v2.1 |

| | | RegionOne |

| | | admin: http://ct:8774/v2.1 |

| | | RegionOne |

| | | public: http://ct:8774/v2.1 |

| | | |

| placement | placement | RegionOne |

| | | public: http://ct:8778 |

| | | RegionOne |

| | | internal: http://ct:8778 |

| | | RegionOne |

| | | admin: http://ct:8778 |

| | | |

| keystone | identity | RegionOne |

| | | public: http://ct:5000/v3/ |

| | | RegionOne |

| | | internal: http://ct:5000/v3/ |

| | | RegionOne |

| | | admin: http://ct:5000/v3/ |

| | | |

| placement | placement | |

| glance | image | RegionOne |

| | | admin: http://ct:9292 |

| | | RegionOne |

| | | public: http://ct:9292 |

| | | RegionOne |

| | | internal: http://ct:9292 |

| | | |

+-----------+-----------+---------------------------------+
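In the catalog above, `placement` appears a second time with no endpoints. This usually means the placement service entity was registered twice. The sketch below spots such duplicates. It assumes the output of `openstack service list -f value -c Name -c Type`, which gives one `name type` pair per line. `find_duplicate_services` is a hypothetical name. A redundant entry can then be removed with `openstack service delete <service-id>`:

```shell
# Hypothetical helper: reads "Name Type" lines on stdin and prints each
# pair that occurs more than once (a service registered twice).
find_duplicate_services() {
    sort | uniq -d
}

# Usage on the controller (admin credentials sourced):
# openstack service list -f value -c Name -c Type | find_duplicate_services
```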

[root@ct ~]# openstack image list //check that the images can be retrieved

+--------------------------------------+--------+--------+

| ID | Name | Status |

+--------------------------------------+--------+--------+

| 7f00c6b7-d760-40a8-8973-ada9bb90bcd7 | cirros | active |

+--------------------------------------+--------+--------+

[root@ct ~]# nova-status upgrade check //check that the Cells v2 and Placement APIs are healthy

+--------------------------------+

| Upgrade Check Results |

+--------------------------------+

| Check: Cells v2 |

| Result: Success |

| Details: None |

+--------------------------------+

| Check: Placement API |

| Result: Success |

| Details: None |

+--------------------------------+

| Check: Ironic Flavor Migration |

| Result: Success |

| Details: None |

+--------------------------------+

| Check: Cinder API |

| Result: Success |

| Details: None |

+--------------------------------+
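Besides printing the table, `nova-status upgrade check` reports its overall verdict through its exit status, which is convenient in scripts. According to the nova-status documentation, the codes mean the following:

- 0: every check passed.
- 1: at least one check needs investigation (a warning).
- 2: a check failed.
- 255: an unexpected error occurred.

The sketch below turns the code into a short message. `describe_upgrade_check_rc` is a hypothetical name:

```shell
# Hypothetical helper: translate a nova-status exit code into a short message.
describe_upgrade_check_rc() {
    case "$1" in
        0)   echo "all checks passed" ;;
        1)   echo "warning: at least one check needs investigation" ;;
        2)   echo "failure: at least one check failed" ;;
        255) echo "unexpected error while running the checks" ;;
        *)   echo "unknown exit code: $1" ;;
    esac
}

# Usage on the controller:
# nova-status upgrade check; describe_upgrade_check_rc $?
```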