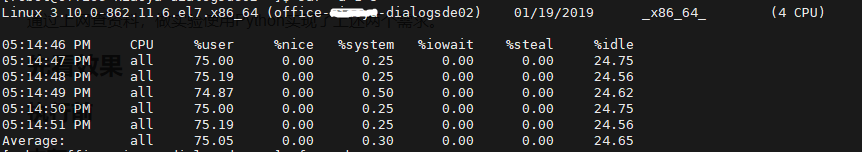

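Symptom: one node in the Cassandra cluster had its CPU pegged at 100%, and Cassandra connections were failing with timeout errors. Cassandra was consuming all of that server's CPU.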

Troubleshooting process:

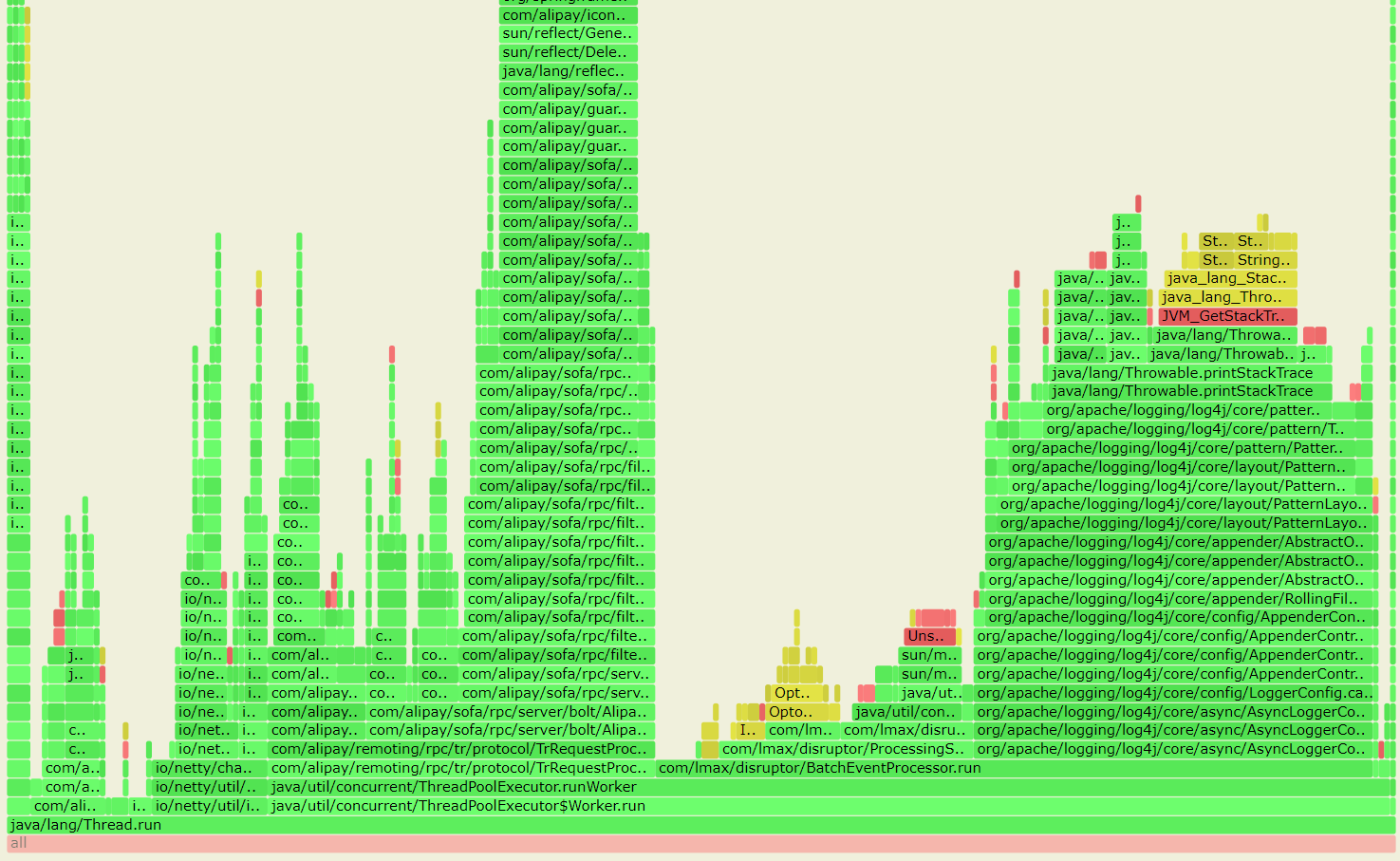

1. Use the top command to find the process with the highest CPU usage, and confirm that it is Cassandra.

[root@VM_centos ~]# top

20772 cassand+ 20 0 8299168 4.484g 126280 S 376.6 58.7 23:49.92 java
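To pick out the busiest process from a script rather than by eye, each top row can be parsed field by field. This is a minimal sketch that assumes the default procps column order of `top -b -n 1` (PID USER PR NI VIRT RES SHR S %CPU %MEM TIME+ COMMAND); the names `TopRow`, `parse_top_line` and `busiest_process` are hypothetical. Note that top's %CPU is measured per core by default, so the 376.6% above means Cassandra was keeping roughly 3.8 cores busy.

```python
from typing import List, NamedTuple

# Assumed default column order of a process row in `top -b -n 1`:
# PID USER PR NI VIRT RES SHR S %CPU %MEM TIME+ COMMAND


class TopRow(NamedTuple):
    pid: int
    user: str
    cpu: float      # %CPU, per core, so it can exceed 100
    mem: float      # %MEM of physical RAM
    command: str


def parse_top_line(line: str) -> TopRow:
    """Split one process row of top's output into named fields."""
    fields = line.split()
    return TopRow(
        pid=int(fields[0]),
        user=fields[1],              # top truncates long names, e.g. "cassand+"
        cpu=float(fields[8]),
        mem=float(fields[9]),
        command=" ".join(fields[11:]),
    )


def busiest_process(rows: List[str]) -> TopRow:
    """Return the parsed row with the highest %CPU."""
    return max((parse_top_line(row) for row in rows), key=lambda r: r.cpu)


if __name__ == "__main__":
    row = "20772 cassand+ 20 0 8299168 4.484g 126280 S 376.6 58.7 23:49.92 java"
    proc = busiest_process([row])
    print(proc.pid, proc.cpu, proc.command)  # 20772 376.6 java
```

The rows would normally come from the process section of `top -b -n 1` (batch mode, one iteration), with the summary header lines skipped first.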

2. Use jstack -l PID to inspect thread activity.

[cassandra@VM_129_3_centos tmp]$ jstack -l 20772

2019-04-12 20:29:23

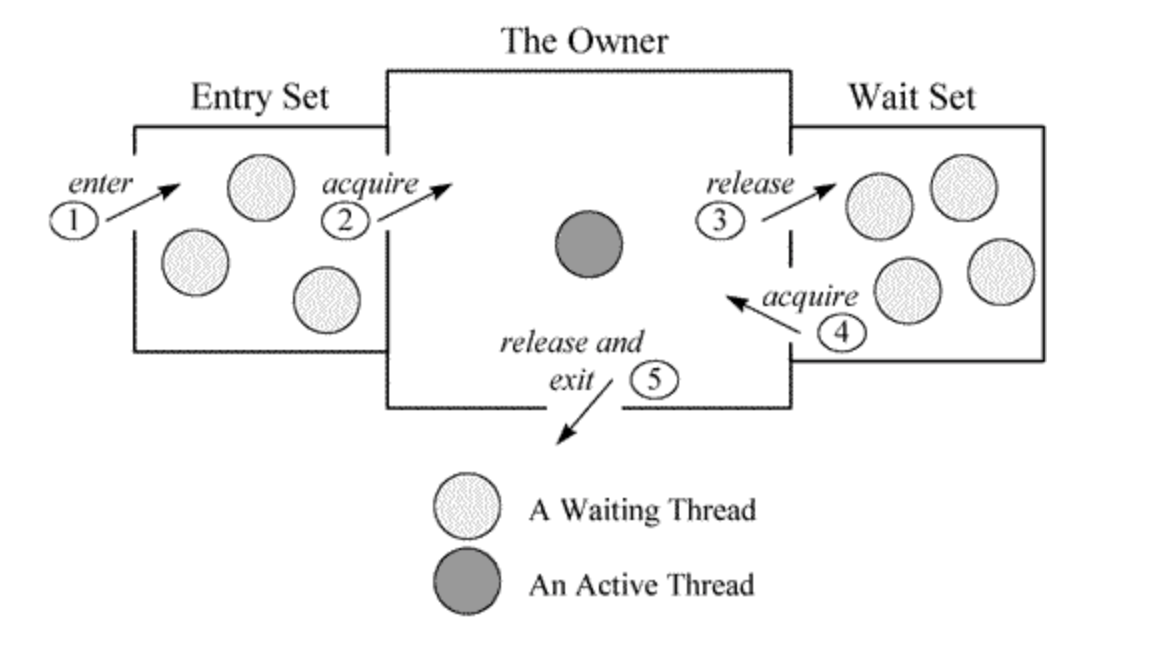

Full thread dump Java HotSpot(TM) 64-Bit Server VM (25.172-b11 mixed mode):

"SharedPool-Worker-134" #396 daemon prio=5 os_prio=0 tid=0x00007f907104c1d0 nid=0x58f3 waiting on condition [0x00007f8fbd6b3000]
java.lang.Thread.State: WAITING (parking)
at sun.misc.Unsafe.park(Native Method)
at java.util.concurrent.locks.LockSupport.park(LockSupport.java:304)
at org.apache.cassandra.concurrent.SEPWorker.run(SEPWorker.java:87)
at java.lang.Thread.run(Thread.java:748)
Locked ownable synchronizers:
- None

...

"Reference Handler" #2 daemon prio=10 os_prio=0 tid=0x00007f90701b2640 nid=0x5193 in Object.wait() [0x00007f9074065000]
java.lang.Thread.State: WAITING (on object monitor)
at java.lang.Object.wait(Native Method)
- waiting on <0x00000006d918e910> (a java.lang.ref.Reference$Lock)
at java.lang.Object.wait(Object.java:502)
at java.lang.ref.Reference.tryHandlePending(Reference.java:191)
- locked <0x00000006d918e910> (a java.lang.ref.Reference$Lock)
at java.lang.ref.Reference$ReferenceHandler.run(Reference.java:153)
Locked ownable synchronizers:
- None

"VM Thread" os_prio=0 tid=0x00007f90701a9200 nid=0x5191 runnable
"Gang worker#0 (Parallel GC Threads)" os_prio=0 tid=0x00007f907001c630 nid=0x5127 runnable
"Gang worker#1 (Parallel GC Threads)" os_prio=0 tid=0x00007f907001db40 nid=0x5128 runnable
"Gang worker#2 (Parallel GC Threads)" os_prio=0 tid=0x00007f907001f050 nid=0x5129 runnable
"Gang worker#3 (Parallel GC Threads)" os_prio=0 tid=0x00007f9070020560 nid=0x512a runnable
"Concurrent Mark-Sweep GC Thread" os_prio=0 tid=0x00007f9070062c20 nid=0x518f runnable
"VM Periodic Task Thread" os_prio=0 tid=0x00007f9070345590 nid=0x519d waiting on condition

JNI global references: 1050

[cassandra@VM_129_3_centos tmp]$ jstack -l 20772

Convert the decimal process ID 20772 to hexadecimal, which gives 0x5124, and look for it in the output above. The nid field in a jstack dump is the native thread ID printed in hexadecimal.
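top and ps print IDs in decimal, while jstack prints nid in hexadecimal, so matching the two takes a base conversion (in a shell, `printf '%x\n' 20772` prints `5124`). The sketch below, with hypothetical helper names `to_nid` and `find_thread`, converts an ID and scans a dump for the thread header carrying that nid. A common refinement, not part of the steps above, is to take the ID of the hottest individual thread from `top -H -p 20772` rather than the process ID and look it up the same way.

```python
import re
from typing import Optional

# A jstack thread header starts at column 0, e.g.
#   "SharedPool-Worker-134" #396 daemon prio=5 ... nid=0x58f3 waiting on condition [...]
# nid is the native (OS-level) thread id in lowercase hexadecimal.
HEADER_RE = re.compile(r'^"(?P<name>[^"]+)".*?\bnid=(?P<nid>0x[0-9a-f]+)', re.MULTILINE)


def to_nid(os_id: int) -> str:
    """Convert a decimal PID/TID, as printed by top, to jstack's nid format."""
    return hex(os_id)


def find_thread(dump: str, os_id: int) -> Optional[str]:
    """Return the name of the thread whose nid equals os_id, or None if absent."""
    target = to_nid(os_id)
    for match in HEADER_RE.finditer(dump):
        if match.group("nid") == target:
            return match.group("name")
    return None


if __name__ == "__main__":
    print(to_nid(20772))  # 0x5124
```

Usage: feed it the text saved from `jstack -l 20772 > dump.txt` together with a decimal ID; for example, nid=0x58f3 in the dump above corresponds to the decimal thread ID 22771.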

3. Check heap memory with jmap -heap PID.

[cassandra@VM_129_3_centos tmp]$ jmap -heap 20772

Attaching to process ID 20772, please wait...

Debugger attached successfully.

Server compiler detected.

JVM version is 25.172-b11

using parallel threads in the new generation.

using thread-local object allocation.

Concurrent Mark-Sweep GC

Heap Configuration:
MinHeapFreeRatio = 40
MaxHeapFreeRatio = 70
MaxHeapSize = 4294967296 (4096.0MB)
NewSize = 419430400 (400.0MB)
MaxNewSize = 419430400 (400.0MB)
OldSize = 3875536896 (3696.0MB)
NewRatio = 2
SurvivorRatio = 8
MetaspaceSize = 21807104 (20.796875MB)
CompressedClassSpaceSize = 1073741824 (1024.0MB)
MaxMetaspaceSize = 17592186044415 MB
G1HeapRegionSize = 0 (0.0MB)

Heap Usage:

New Generation (Eden + 1 Survivor Space):
capacity = 377487360 (360.0MB)
used = 348288016 (332.15333557128906MB)
free = 29199344 (27.846664428710938MB)
92.26481543646918% used

Eden Space:
capacity = 335544320 (320.0MB)
used = 318190024 (303.4496536254883MB)
free = 17354296 (16.55034637451172MB)
94.82801675796509% used

From Space:
capacity = 41943040 (40.0MB)
used = 30097992 (28.70368194580078MB)
free = 11845048 (11.296318054199219MB)
71.75920486450195% used

To Space:
capacity = 41943040 (40.0MB)
used = 0 (0.0MB)
free = 41943040 (40.0MB)
0.0% used

concurrent mark-sweep generation:
capacity = 3875536896 (3696.0MB)
used = 79467816 (75.78641510009766MB)
free = 3796069080 (3620.2135848999023MB)
2.050498244050261% used

28574 interned Strings occupying 5184216 bytes.

[cassandra@VM_129_3_centos tmp]$

To be continued...
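The jmap figures can be cross-checked with a little arithmetic: NewSize + OldSize should equal MaxHeapSize (400 MB + 3696 MB = 4096 MB), Eden plus one survivor space should equal the New Generation capacity (320 MB + 40 MB = 360 MB), and SurvivorRatio = 8 means Eden is eight times one survivor space (8 × 40 MB = 320 MB). The sketch below, with hypothetical helper names `to_mb` and `region_usage`, recomputes a region's usage from the raw byte counts jmap prints. For this snapshot it gives about 94.83% for Eden and about 2.05% for the CMS old generation, so the old generation was nearly empty at that moment.

```python
import re

MB = 1024 * 1024  # jmap's "MB" figures are bytes / (1024 * 1024)

# Matches the "capacity = N" and "used = N" byte counts in one region of `jmap -heap` output.
FIELD_RE = re.compile(r"\b(capacity|used)\s*=\s*(\d+)")


def to_mb(num_bytes: int) -> float:
    """Convert a byte count to MB the way jmap does."""
    return num_bytes / MB


def region_usage(block: str) -> float:
    """Percentage of one heap region in use, recomputed from its raw byte counts."""
    values = {key: int(num) for key, num in FIELD_RE.findall(block)}
    return 100.0 * values["used"] / values["capacity"]


if __name__ == "__main__":
    # Byte values copied from the jmap output above.
    max_heap, new_size, old_size = 4294967296, 419430400, 3875536896
    eden_cap, survivor_cap, new_gen_cap = 335544320, 41943040, 377487360

    assert new_size + old_size == max_heap         # 400 MB + 3696 MB = 4096 MB
    assert eden_cap + survivor_cap == new_gen_cap  # Eden + 1 survivor = 360 MB
    assert eden_cap == 8 * survivor_cap            # SurvivorRatio = 8

    eden = "capacity = 335544320 (320.0MB)\nused = 318190024 (303.4496536254883MB)"
    old = "capacity = 3875536896 (3696.0MB)\nused = 79467816 (75.78641510009766MB)"
    print(f"Eden: {region_usage(eden):.2f}% used")  # Eden: 94.83% used
    print(f"Old:  {region_usage(old):.2f}% used")   # Old:  2.05% used
```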

Notes:

If jstack -l PID returns "Unable to open socket file: ", refer to the official Cassandra documentation and set CASSANDRA_HEAPDUMP_DIR to resolve it.

Reference: https://alexzeng.wordpress.com/2013/05/25/debug-cassandrar-jvm-thread-100-cpu-usage-issue/