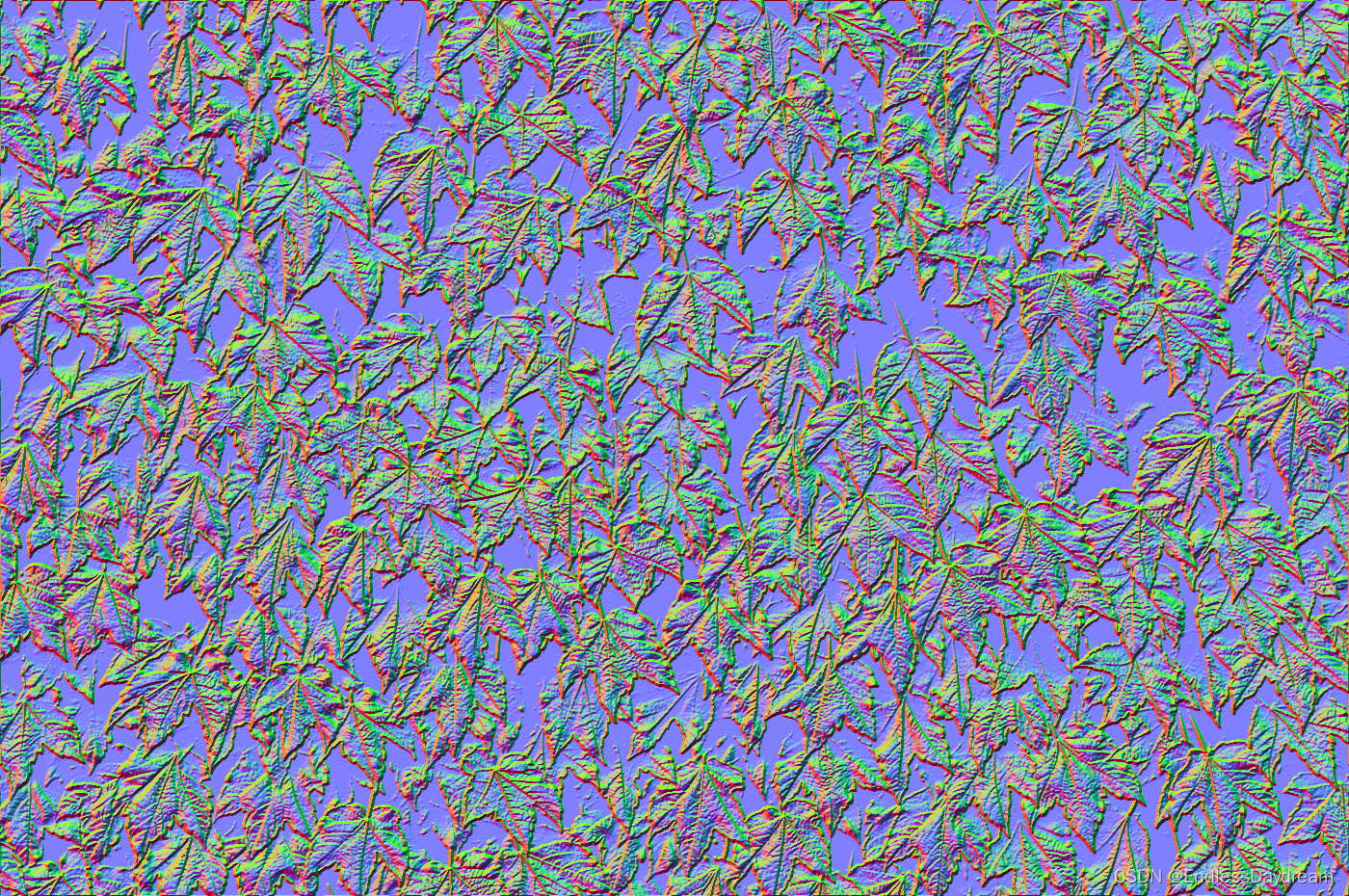

[NormalMap-Online (cpetry.github.io)](https://cpetry.github.io/NormalMap-Online/)

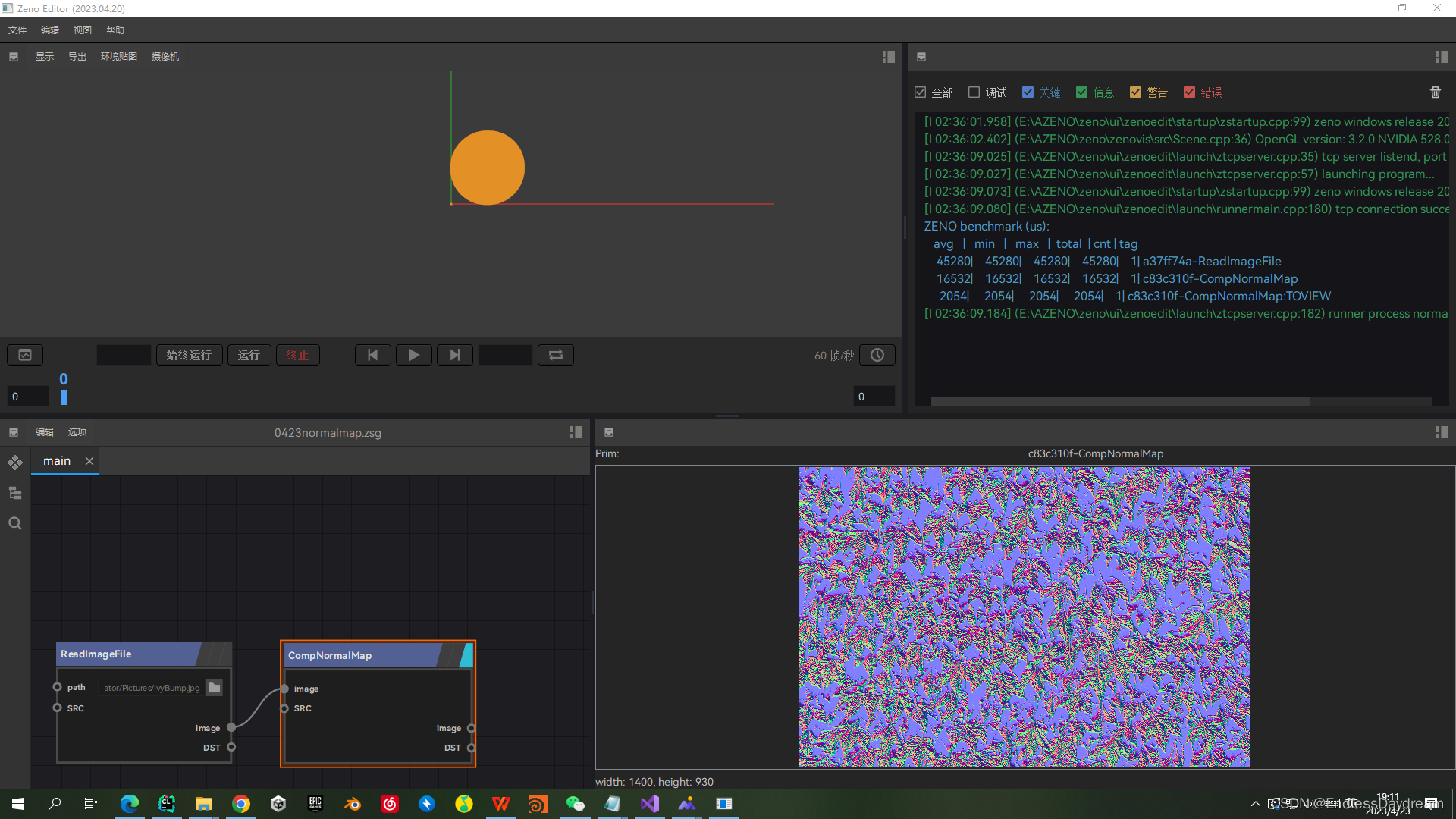

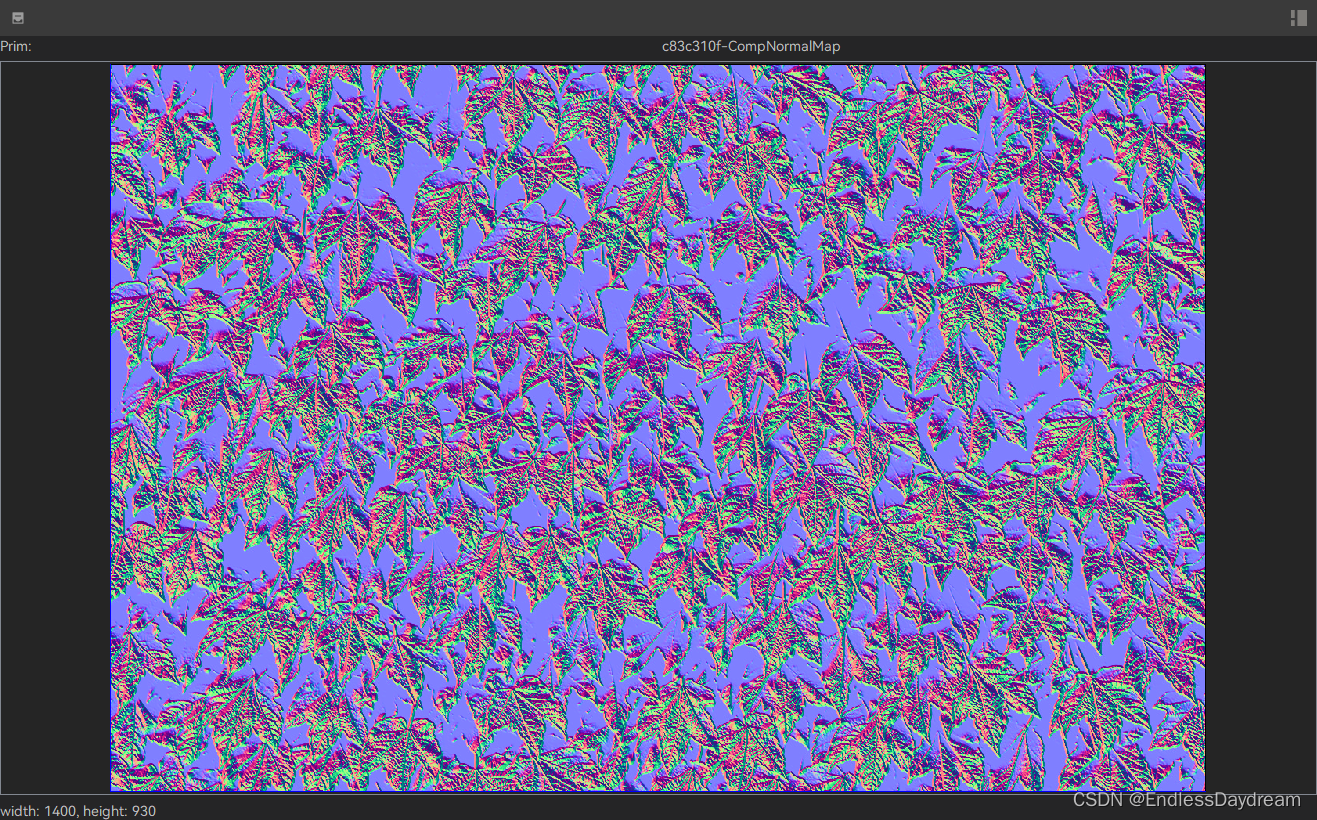

CompNormalMap

Converting a grayscale image to a normal map

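Converting a grayscale image to a normal map is a common technique for adding surface detail in real-time rendering. The gray values are interpreted as heights, and a simple conversion works as follows: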

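- Load the grayscale image and convert its values to floats in the range [0, 1]; each value is treated as a height $h$.

- For each pixel, compute the slope (height gradient) $(\partial h/\partial x,\ \partial h/\partial y)$ from the differences with its neighboring pixels. This can be done with central differences, the Sobel operator, or another edge-detection filter.

- Build the normal vector from the slopes, $\mathbf{n} = \operatorname{normalize}(-s\,\partial h/\partial x,\ -s\,\partial h/\partial y,\ 1)$, where $s$ is a strength factor. After normalization to unit length, each component lies in $[-1, 1]$.

- Convert each normal into color values: $R = (n_x + 1)/2$, $G = (n_y + 1)/2$, $B = (n_z + 1)/2$. The inverse mapping, which a shader applies when it samples the normal map, is $\mathbf{n} = (2R - 1,\ 2G - 1,\ 2B - 1)$. A flat region (zero slope) gives $\mathbf{n} = (0, 0, 1)$, a normal pointing along the positive Z axis, which encodes to $(R, G, B) = (0.5, 0.5, 1)$.

- Store the encoded normal of each pixel as the output normal map.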

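Note that this simple method only approximates surface detail and can produce inaccurate results at image borders and around fine detail. More elaborate techniques use Gaussian filtering and similar methods to smooth the result and produce cleaner normals.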

Input:

Expected:

Output:

```cpp
// Zeno framework headers
#include <zeno/zeno.h>
#include <zeno/types/PrimitiveObject.h>
#include <zeno/types/UserData.h>
#include <array>
#include <cmath>
#include <vector>

namespace zeno {

// Converts a grayscale image (height in verts[i][0]) into a normal map
// encoded as RGB in [0, 1].
struct CompNormalMap : INode {
    virtual void apply() override {
        auto image = get_input<PrimitiveObject>("image");
        auto &ud = image->userData();
        int w = ud.get2<int>("w");
        int h = ud.get2<int>("h");

        // Flat normal (0, 0, 1) encoded as RGB = (0.5, 0.5, 1.0); it stays on
        // the one-pixel border, where central differences are undefined.
        std::vector<std::array<float, 3>> normalmap(image->size(), {0.5f, 0.5f, 1.0f});

        // Slope scale; 255 treats [0, 1] heights as 8-bit values.
        const float strength = 255.0f;

        for (int i = 1; i < h - 1; i++) {
            for (int j = 1; j < w - 1; j++) {
                int idx = i * w + j;
                // Central differences of the height (first channel).
                float dhdx = (image->verts[idx + 1][0] - image->verts[idx - 1][0]) * 0.5f * strength;
                float dhdyDown = (image->verts[idx + w][0] - image->verts[idx - w][0]) * 0.5f * strength;
                // Surface normal of z = h(x, y) is (-dh/dx, -dh/dy, 1). Rows grow
                // downward, so -dh/dy for a Y-up map equals +dhdyDown.
                float nx = -dhdx;
                float ny = dhdyDown;
                float nz = 1.0f;  // reset for every pixel
                float len = std::sqrt(nx * nx + ny * ny + nz * nz);
                nx /= len;
                ny /= len;
                nz /= len;
                // Encode [-1, 1] -> [0, 1] for storage as a color.
                normalmap[idx] = {0.5f * (nx + 1.0f), 0.5f * (ny + 1.0f), 0.5f * (nz + 1.0f)};
            }
        }

        // Write the encoded normals back into the image's RGB channels.
        for (size_t idx = 0; idx < normalmap.size(); idx++) {
            image->verts[idx][0] = normalmap[idx][0];
            image->verts[idx][1] = normalmap[idx][1];
            image->verts[idx][2] = normalmap[idx][2];
        }
        set_output("image", image);
    }
};

ZENDEFNODE(CompNormalMap, {
    {{"image"}},
    {{"image"}},
    {},
    {"comp"},
});

} // namespace zeno
```

With OpenCV:

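```cpp
#include <opencv2/opencv.hpp>
#include <cmath>
#include <iostream>

using namespace cv;
using namespace std;

// Maps a normal component in [-1, 1] to an 8-bit channel value in [0, 255].
static uchar encode(double v)
{
    return saturate_cast<uchar>((v + 1.0) * 127.5);
}

int main()
{
    Mat grayImage = imread("gray_image.png", IMREAD_GRAYSCALE);
    if (grayImage.empty()) {
        cerr << "Could not read input image" << endl;
        return -1;
    }

    // Pre-fill with the flat normal (0, 0, 1). OpenCV stores pixels as BGR,
    // so this is B = 255, G = 128, R = 128; it covers the one-pixel border.
    Mat normalMap(grayImage.size(), CV_8UC3, Scalar(255, 128, 128));

    for (int i = 1; i < grayImage.rows - 1; i++) {
        for (int j = 1; j < grayImage.cols - 1; j++) {
            // Height differences in 8-bit units (signed, so use double).
            double dx = grayImage.at<uchar>(i, j + 1) - grayImage.at<uchar>(i, j - 1);
            double dy = grayImage.at<uchar>(i + 1, j) - grayImage.at<uchar>(i - 1, j);
            // Normal (-dh/dx, -dh/dy, 1) scaled by 255; rows grow downward,
            // so +dy gives a Y-up green channel.
            double nx = -dx, ny = dy, nz = 255.0;
            double len = std::sqrt(nx * nx + ny * ny + nz * nz);
            nx /= len;
            ny /= len;
            nz /= len;
            normalMap.at<Vec3b>(i, j) = Vec3b(encode(nz), encode(ny), encode(nx));  // B, G, R
        }
    }
    imwrite("normal_map.png", normalMap);
    return 0;
}
```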

Without libraries (raw 24-bit BMP I/O):

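```cpp
#include <cmath>
#include <cstdint>
#include <cstring>
#include <fstream>
#include <iostream>
#include <vector>

using namespace std;

// Maps a normal component in [-1, 1] to a byte in [0, 255].
static unsigned char encode(double v) {
    return static_cast<unsigned char>(lround((v + 1.0) * 127.5));
}

int main() {
    // Read the grayscale image. Assumes an uncompressed 24-bit BMP with a
    // 54-byte header, bottom-up rows (positive height) and R = G = B.
    ifstream input("input.bmp", ios::binary);
    if (!input) {
        cout << "Cannot open file" << endl;
        return 1;
    }
    char header[54];
    input.read(header, 54);
    int32_t width, height;
    uint16_t bpp;
    memcpy(&width, header + 18, 4);  // memcpy avoids unaligned/aliasing reads
    memcpy(&height, header + 22, 4);
    memcpy(&bpp, header + 28, 2);
    if (!input || bpp != 24 || width <= 0 || height <= 0) {
        cout << "Unsupported BMP format" << endl;
        return 1;
    }
    int row_size = (width * 24 + 31) / 32 * 4;  // rows are padded to 4 bytes
    vector<unsigned char> data(static_cast<size_t>(row_size) * height);
    input.read(reinterpret_cast<char*>(data.data()), static_cast<streamsize>(data.size()));
    input.close();

    // Height at (x, y) in [0, 1]; the blue byte suffices since R = G = B.
    auto H = [&](int x, int y) { return data[y * row_size + x * 3] / 255.0; };

    // Compute the normal map, pre-filled with the flat normal (0, 0, 1)
    // encoded as B = 255, G = 128, R = 128 (BMP stores BGR).
    vector<unsigned char> output(data.size(), 0);
    for (int y = 0; y < height; y++) {
        for (int x = 0; x < width; x++) {
            unsigned char* p = &output[y * row_size + x * 3];
            p[0] = 255; p[1] = 128; p[2] = 128;
        }
    }
    for (int y = 1; y < height - 1; y++) {
        for (int x = 1; x < width - 1; x++) {
            // Central differences; BMP rows run bottom-up, so +y points up.
            double dx = H(x + 1, y) - H(x - 1, y);
            double dy = H(x, y + 1) - H(x, y - 1);
            // Normal (-dh/dx, -dh/dy, 1), normalized to unit length.
            double nx = -dx, ny = -dy, nz = 1.0;
            double length = sqrt(nx * nx + ny * ny + nz * nz);
            nx /= length; ny /= length; nz /= length;
            unsigned char* p = &output[y * row_size + x * 3];
            p[0] = encode(nz);  // B
            p[1] = encode(ny);  // G
            p[2] = encode(nx);  // R
        }
    }

    // Write the normal map with the original header.
    ofstream of("output.bmp", ios::binary);
    of.write(header, 54);
    of.write(reinterpret_cast<const char*>(output.data()), static_cast<streamsize>(output.size()));
    return 0;
}
```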

```cpp
#include <cmath>
#include <iostream>
#include <vector>

// Compute the Sobel gradients. gx: right minus left; gy: top minus bottom
// (rows grow downward, so gy points "up"). Border pixels keep a zero gradient.
void sobel(const std::vector<float>& grayImage, int width, int height,
           std::vector<float>& dx, std::vector<float>& dy)
{
    dx.assign(width * height, 0.0f);
    dy.assign(width * height, 0.0f);
    auto at = [&](int x, int y) { return grayImage[y * width + x]; };
    for (int y = 1; y < height - 1; y++) {
        for (int x = 1; x < width - 1; x++) {
            float gx = -at(x - 1, y - 1) + at(x + 1, y - 1)
                       - 2.0f * at(x - 1, y) + 2.0f * at(x + 1, y)
                       - at(x - 1, y + 1) + at(x + 1, y + 1);
            float gy = at(x - 1, y - 1) + 2.0f * at(x, y - 1) + at(x + 1, y - 1)
                       - at(x - 1, y + 1) - 2.0f * at(x, y + 1) - at(x + 1, y + 1);
            dx[y * width + x] = gx;
            dy[y * width + x] = gy;
        }
    }
}

// Compute per-pixel unit normals (-gx, -gy, 1) / length, stored as
// interleaved xyz floats in [-1, 1].
void normalMap(const std::vector<float>& grayImage, int width, int height,
               std::vector<float>& normal)
{
    std::vector<float> dx, dy;
    sobel(grayImage, width, height, dx, dy);
    normal.resize(width * height * 3);
    for (int y = 0; y < height; y++) {
        for (int x = 0; x < width; x++) {
            int i = y * width + x;
            float normalX = -dx[i];
            float normalY = -dy[i];
            float normalZ = 1.0f;
            float length = std::sqrt(normalX * normalX + normalY * normalY + normalZ * normalZ);
            normal[i * 3 + 0] = normalX / length;
            normal[i * 3 + 1] = normalY / length;
            normal[i * 3 + 2] = normalZ / length;
        }
    }
}

int main()
{
    // Read the grayscale image
    std::vector<float> grayImage;
    int width = 0, height = 0;
    // TODO: read the grayscale image from a file into grayImage (values in [0, 1]),
    // storing its width and height in width and height
    if (grayImage.empty()) {
        std::cerr << "No input image loaded" << std::endl;
        return 1;
    }
    // Compute the normals (xyz per pixel, each component in [-1, 1])
    std::vector<float> normal;
    normalMap(grayImage, width, height, normal);
    return 0;
}
```
