Data science in Python

by Zhen Liu

刘震

Up first: data preprocessing

Do you feel frustrated when searching for syntax breaks your data analytics flow? Why do you still not remember it after looking it up for the third time? It's because you haven't practiced it enough to build muscle memory for it yet.

Now, imagine that when you are coding, the Python syntax and functions just fly out from your fingertips following your analytical thoughts. How great is that! This tutorial is to help you get there.

I recommend practicing this script every morning for 10 minutes, and repeating it for a week. It's like doing a few small crunches a day, not for your abs but for your data science muscles. Gradually, you'll notice your data analytics programming becoming more efficient after this repeated training.

To begin with my ‘data science workout’, in this tutorial we’ll practice the most common syntax for data preprocessing as a warm-up session ;)

Contents:

0. Read, View and Save Data
1. Table's Dimension and Data Types
2. Basic Column Manipulation
3. Null Values: View, Delete and Impute
4. Data Deduplication

0. Read, View and Save Data

First, load the libraries for our exercise:
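
A minimal sketch of this step, assuming the exercise only needs pandas and NumPy (the original import list is not shown here, so the exact set is an assumption):

```python
import numpy as np   # numerical helpers, e.g. np.nan for missing values
import pandas as pd  # DataFrame reading, viewing and cleaning

# Printing the version is a quick check that the library loaded.
print(pd.__version__)
```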

Now we’ll read data from my GitHub repository. I downloaded the data from Zillow.

And the results look like this:
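
A minimal sketch of the read-and-view step. The repository URL is not given here, so this example reads a small inline CSV instead; the column names and all values are hypothetical stand-ins shaped like the Zillow data.

```python
import io
import pandas as pd

# Hypothetical stand-in for the Zillow CSV. With a real file you would pass
# its path or URL to pd.read_csv instead of this in-memory buffer.
csv_text = """City,State,Metro,SizeRank,2018-06,2018-07
New York,NY,New York,1,500.0,505.0
Los Angeles,CA,,2,600.0,610.0
Chicago,IL,Chicago,3,,205.0
"""
df = pd.read_csv(io.StringIO(csv_text))

print(df.head(2))  # first two rows
print(df.tail(1))  # last row
```

Empty fields in the CSV are read as NaN, which is what the null-value sections later work with.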

To save a file, use dataframe.to_csv(). If you don't want the index to be saved, use dataframe.to_csv(index=False).
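
As a small illustration, the sketch below writes a toy table to a temporary file; the file name and the data are hypothetical.

```python
import os
import tempfile
import pandas as pd

# Toy data; values are made up for illustration.
df = pd.DataFrame({"City": ["Chicago", "Boston"], "SizeRank": [3, 10]})

# Hypothetical output location; any writable path works.
out_path = os.path.join(tempfile.mkdtemp(), "zillow_copy.csv")
df.to_csv(out_path, index=False)  # index=False leaves out the row index column

# Read the header line back to confirm no index column was written.
with open(out_path) as f:
    header = f.readline().strip()
print(header)
```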

1. Table's Dimension and Data Types

1.1 Dimension

How many rows and columns are in this data?
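
One way to answer this is the shape attribute, sketched here on a toy table with made-up values:

```python
import pandas as pd

# Toy data; values are made up for illustration.
df = pd.DataFrame({
    "City": ["New York", "Los Angeles", "Chicago"],
    "State": ["NY", "CA", "IL"],
    "SizeRank": [1, 2, 3],
    "2018-07": [505.0, 610.0, 205.0],
})

n_rows, n_cols = df.shape  # shape is a (rows, columns) tuple
print(df.shape)            # (3, 4)
```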

1.2 Data Types

What are the data types of your data, and how many columns are numeric?

Output of the first few columns’ data types:
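
A sketch of how the data types could be inspected, using a toy table with made-up values. Counting numeric columns with select_dtypes(include="number") is one possible approach, not necessarily the one used in the original script.

```python
import pandas as pd

# Toy data; values are made up for illustration.
df = pd.DataFrame({
    "City": ["New York", "Los Angeles", "Chicago"],
    "SizeRank": [1, 2, 3],
    "2018-06": [500.0, 600.0, 200.0],
    "2018-07": [505.0, 610.0, 205.0],
})

print(df.dtypes.head(3))         # data types of the first three columns
print(df.dtypes.value_counts())  # how many columns share each data type

# Number of numeric (integer or float) columns.
n_numeric = df.select_dtypes(include="number").shape[1]
print(n_numeric)  # 3
```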

If you want to be more specific about your data, use select_dtypes() to include or exclude a data type. Question: if I only want to look at 2018’s data, how do I get that?
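
One possible answer to the question, assuming the monthly columns are named like "2018-07" (an assumption based on the column names mentioned later in this tutorial): keep only the columns whose names start with "2018".

```python
import pandas as pd

# Toy data; values are made up for illustration.
df = pd.DataFrame({
    "City": ["New York", "Chicago"],
    "2017-12": [490.0, 195.0],
    "2018-06": [500.0, 200.0],
    "2018-07": [505.0, 205.0],
})

# Column names are strings, so a string test picks out the 2018 months.
cols_2018 = [c for c in df.columns if c.startswith("2018")]
df_2018 = df[["City"] + cols_2018]  # keep an identifier column alongside
print(df_2018)
```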

2. Basic Column Manipulation

2.1 Subset data by columns

Select columns by data types:

For example, if you only want float and integer columns:
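
A short sketch with select_dtypes() on a toy table (made-up values), assuming the numeric columns are stored as float64 and int64:

```python
import pandas as pd

# Toy data; values are made up for illustration.
df = pd.DataFrame({
    "City": ["New York", "Los Angeles"],
    "SizeRank": [1, 2],          # stored as int64
    "2018-07": [505.0, 610.0],   # stored as float64
})

# Keep only float and integer columns.
numeric_df = df.select_dtypes(include=["float64", "int64"])
# The exclude argument works the other way round.
non_numeric_df = df.select_dtypes(exclude=["float64", "int64"])

print(list(numeric_df.columns))      # ['SizeRank', '2018-07']
print(list(non_numeric_df.columns))  # ['City']
```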

Select and drop columns by names:
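
A minimal sketch on a toy table; the column names follow those mentioned in this tutorial and the values are made up.

```python
import pandas as pd

# Toy data; values are made up for illustration.
df = pd.DataFrame({
    "City": ["New York", "Chicago"],
    "State": ["NY", "IL"],
    "Metro": ["New York", "Chicago"],
    "SizeRank": [1, 3],
})

selected = df[["City", "State"]]      # select columns by name
dropped = df.drop(columns=["Metro"])  # drop columns by name (returns a copy)

print(list(selected.columns))  # ['City', 'State']
print(list(dropped.columns))   # ['City', 'State', 'SizeRank']
```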

2.2 Rename Columns

How do I rename the columns if I don’t like them? For example, change ‘State’ to ‘state_’; ‘City’ to ‘city_’:
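
A sketch of this rename using DataFrame.rename() with a mapping from old to new names, on a toy table with made-up values:

```python
import pandas as pd

# Toy data; values are made up for illustration.
df = pd.DataFrame({
    "City": ["New York", "Chicago"],
    "State": ["NY", "IL"],
    "SizeRank": [1, 3],
})

# Columns not listed in the mapping keep their names.
renamed = df.rename(columns={"State": "state_", "City": "city_"})
print(list(renamed.columns))  # ['city_', 'state_', 'SizeRank']
```

rename() returns a new DataFrame, so assign the result if you want to keep it.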

3. Null Values: View, Delete and Impute

3.1 How many rows and columns have null values?

The outputs of isnull().any() versus isnull().sum():
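
The difference between the two can be sketched on a toy table (made-up values): any() gives one True/False flag per column, while sum() gives the number of nulls per column.

```python
import numpy as np
import pandas as pd

# Toy data; values are made up for illustration.
df = pd.DataFrame({
    "City": ["New York", "Los Angeles", "Chicago"],
    "Metro": ["New York", None, "Chicago"],
    "2018-06": [500.0, 600.0, np.nan],
    "2018-07": [505.0, 610.0, 205.0],
})

null_flags = df.isnull().any()   # per column: True if at least one null
null_counts = df.isnull().sum()  # per column: number of nulls
print(null_flags)
print(null_counts)

n_cols_with_null = int(null_flags.sum())               # columns with any null
n_rows_with_null = int(df.isnull().any(axis=1).sum())  # rows with any null
print(n_cols_with_null, n_rows_with_null)  # 2 2
```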

Select rows that aren't null in one column, for example rows where 'Metro' isn't null.
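
A minimal sketch using a boolean mask from notnull(), on a toy table with made-up values:

```python
import pandas as pd

# Toy data; values are made up for illustration.
df = pd.DataFrame({
    "City": ["New York", "Los Angeles", "Chicago"],
    "Metro": ["New York", None, "Chicago"],
})

# notnull() returns a True/False mask; indexing with it keeps the True rows.
has_metro = df[df["Metro"].notnull()]
print(has_metro)
```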

3.2 Select rows that are not null for a fixed set of columns

Select a subset of data that doesn’t have null after 2000:
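
One way this could look, assuming the monthly columns are named "YYYY-MM" and that "after 2000" means years greater than 2000 (both are assumptions; the values are made up). dropna(subset=...) only checks the listed columns.

```python
import numpy as np
import pandas as pd

# Toy data; values are made up for illustration.
df = pd.DataFrame({
    "City": ["New York", "Los Angeles", "Chicago"],
    "1999-07": [np.nan, 150.0, 110.0],
    "2018-06": [500.0, 600.0, np.nan],
    "2018-07": [505.0, 610.0, 205.0],
})

# Columns whose name starts with a four-digit year greater than 2000.
cols_after_2000 = [
    c for c in df.columns if c[:4].isdigit() and int(c[:4]) > 2000
]

# Keep rows with no nulls in those columns; nulls elsewhere are ignored.
recent_complete = df.dropna(subset=cols_after_2000)
print(recent_complete)
```

New York is kept even though its 1999 value is missing, because that column is outside the subset.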

If you want to select the data in July, you need to find the columns containing '-07'. To see if a string contains a substring, you can use the expression substring in string, which evaluates to True or False.
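
A short sketch of the substring test applied to column names, on a toy table with made-up values:

```python
import pandas as pd

print("-07" in "2018-07")  # True
print("-07" in "2018-06")  # False

# Toy data; values are made up for illustration.
df = pd.DataFrame({
    "City": ["New York", "Chicago"],
    "1999-07": [120.0, 110.0],
    "2018-06": [500.0, 200.0],
    "2018-07": [505.0, 205.0],
})

# Keep the columns whose name contains '-07'.
july_cols = [c for c in df.columns if "-07" in c]
july_df = df[july_cols]
print(july_cols)  # ['1999-07', '2018-07']
```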

3.3 Subset Rows by Null Values

Select rows that have at least 50 non-NA values, without being specific about which columns they are in:
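
A sketch using the thresh argument of dropna(). The toy table below (made-up values) has only four columns, so the threshold is set to 3 here; on the full data it would be 50.

```python
import numpy as np
import pandas as pd

# Toy data; values are made up for illustration.
df = pd.DataFrame({
    "City": ["New York", "Los Angeles", "Chicago"],
    "Metro": ["New York", None, None],
    "2018-06": [500.0, 600.0, np.nan],
    "2018-07": [505.0, 610.0, np.nan],
})

min_non_na = 3  # stand-in for 50, because the toy table is small

# Keep rows that have at least min_non_na non-NA values in any columns.
enough_data = df.dropna(thresh=min_non_na)
print(enough_data)
```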

3.4 Drop and Impute Missing Values

Fill NA or impute NA:
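
Two common variants, sketched on a toy column with made-up values: filling with a constant, and imputing with the column mean. The choice of the mean is an assumption for illustration; the original script may use a different statistic.

```python
import numpy as np
import pandas as pd

# Toy data; values are made up for illustration.
df = pd.DataFrame({
    "City": ["New York", "Chicago", "Boston"],
    "2018-06": [500.0, np.nan, 300.0],
})

# Fill missing values with a constant.
zero_filled = df["2018-06"].fillna(0)

# Impute missing values with the mean of the non-missing values.
mean_imputed = df["2018-06"].fillna(df["2018-06"].mean())

print(zero_filled.tolist())   # [500.0, 0.0, 300.0]
print(mean_imputed.tolist())  # [500.0, 400.0, 300.0]
```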

Use your own condition to fill using the where function:
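
A sketch with Series.where(), which keeps a value where the condition is True and takes the replacement otherwise. Filling a missing 'Metro' with the 'City' name is a hypothetical rule chosen for illustration, and the values are made up.

```python
import pandas as pd

# Toy data; values are made up for illustration.
df = pd.DataFrame({
    "City": ["New York", "Los Angeles", "Chicago"],
    "Metro": ["New York", None, "Chicago"],
})

# Keep Metro where it is present; otherwise use the City value.
metro_filled = df["Metro"].where(df["Metro"].notnull(), df["City"])
print(metro_filled.tolist())  # ['New York', 'Los Angeles', 'Chicago']
```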

4. Data Deduplication

We need to make sure there are no duplicated rows before we aggregate the data or join it with other tables.

We want to see whether there are any duplicated cities/regions. We need to decide what unique ID (city, region) we want to use in the analysis.
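
A minimal sketch of such a check with duplicated(), on a toy table with made-up values. It compares 'City' alone against the ('City', 'State') pair as candidate IDs; these particular candidates are assumptions for illustration.

```python
import pandas as pd

# Toy data; values are made up for illustration.
df = pd.DataFrame({
    "City": ["Springfield", "Springfield", "Chicago"],
    "State": ["IL", "MA", "IL"],
})

# duplicated() flags each row that repeats an earlier row on the given columns.
city_has_dupes = bool(df.duplicated(subset=["City"]).any())
pair_has_dupes = bool(df.duplicated(subset=["City", "State"]).any())

print(city_has_dupes)  # True: 'City' alone is not a unique ID
print(pair_has_dupes)  # False: the pair identifies each row
```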

Drop duplicated values.

The ‘CountyName’ and ‘SizeRank’ combination is unique already, so we use these columns only to demonstrate the syntax of drop_duplicates().
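
A sketch of the syntax. Unlike the real data, the toy table below (made-up values) contains one repeated combination, so the effect is visible.

```python
import pandas as pd

# Toy data with one deliberately repeated (CountyName, SizeRank) pair.
df = pd.DataFrame({
    "CountyName": ["Cook", "Cook", "Queens"],
    "SizeRank": [3, 3, 1],
    "City": ["Chicago", "Chicago", "New York"],
})

# Keep the first row of each (CountyName, SizeRank) combination.
deduped = df.drop_duplicates(subset=["CountyName", "SizeRank"], keep="first")
print(deduped)
```

The original row labels are kept; add reset_index(drop=True) if you want them renumbered.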

That’s it for the first part of my series on building muscle memory for data science in Python. The full script can be found here.

Stay tuned! My next tutorial will show you how to ‘curl the data science muscles’ for slicing and dicing data.

Follow me and give me a few claps if you find this helpful :)

While you are working on Python, maybe you’ll be interested in my previous article:

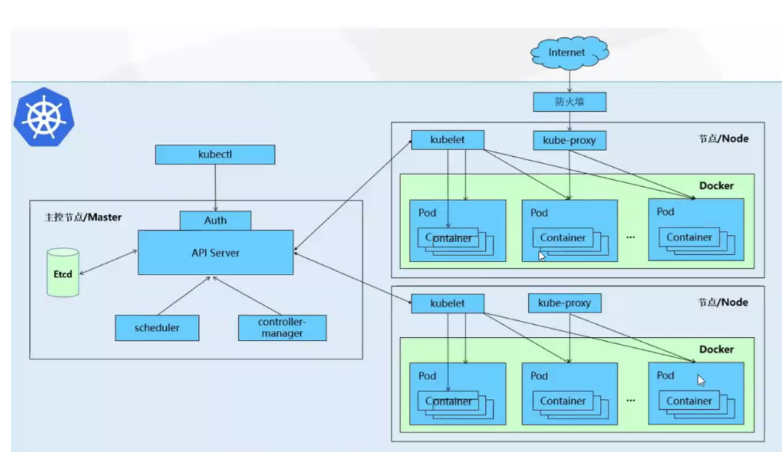

Learn Spark for Big Data Analytics in 15 mins! I guarantee you that this short tutorial will save you a TON of time from reading the long documentations. Ready to… (towardsdatascience.com)

Translated from: https://www.freecodecamp.org/news/how-to-build-up-your-muscle-memory-for-data-science-with-python-5960df1c930e/
