Inception v2 and Inception v3 were presented in the same paper.

**External blogs**

Notes on Inception v1 to v4:

https://towardsdatascience.com/a-simple-guide-to-the-versions-of-the-inception-network-7fc52b863202

https://hackmd.io/@bouteille/SkD5Xd4DL

Two key points:

- Batch Normalization was introduced;

- The 5×5 convolution layer in the original Inception module was replaced with two stacked 3×3 convolution layers.
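As a rough illustration of the first point, here is a minimal sketch of what batch normalization computes for one feature across a mini-batch, assuming the standard formulation (normalize to zero mean and unit variance, then scale and shift). The names `gamma`, `beta`, and `eps` are illustrative assumptions and do not come from these notes.

```python
import math

def batch_norm(values, gamma=1.0, beta=0.0, eps=1e-5):
    """Normalize one feature across a mini-batch, then scale and shift."""
    n = len(values)
    mean = sum(values) / n
    # Biased (population) variance over the mini-batch.
    var = sum((v - mean) ** 2 for v in values) / n
    return [gamma * (v - mean) / math.sqrt(var + eps) + beta for v in values]

batch = [1.0, 2.0, 3.0, 4.0]  # hypothetical activations of one feature
normalized = batch_norm(batch)
```

With the default `gamma` and `beta`, the output has mean close to zero and variance close to one.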

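On kernel design: stack small convolutions in place of one large kernel. Two stacked 3×3 convolutions cover the same receptive field as one 5×5, and three stacked 3×3 convolutions cover the same receptive field as one 7×7.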
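The receptive-field equivalence can be checked with a few lines of arithmetic. This is a small sketch assuming stride-1 convolutions with no dilation; the function name is made up for illustration.

```python
def stacked_receptive_field(num_layers, kernel_size=3):
    """Receptive field of `num_layers` stacked stride-1 convolutions."""
    field = 1
    for _ in range(num_layers):
        # Each stride-1 layer widens the field by (kernel_size - 1).
        field += kernel_size - 1
    return field

two_layers = stacked_receptive_field(2)    # compare with a single 5x5
three_layers = stacked_receptive_field(3)  # compare with a single 7x7
```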

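The authors improve computational efficiency through factorized convolutions and aggressive regularization. GoogLeNet's hand-designed architecture is far more parameter-efficient than AlexNet. Even so, GoogLeNet with its reduced parameter count is still computationally expensive in resource-limited settings such as mobile.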

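Conclusions:

1. The paper proposes several design principles for scaling up convolutional networks, guiding the design of high-quality architectures with modest computational cost.

2. In single-crop evaluation, the highest-quality Inception v3 variant achieved the best result.

3. The paper studies how factorizing convolutions and reducing dimensions inside the network keep training cost relatively low while maintaining high quality. It shows that a lower parameter count, combined with additional regularization from batch-normalized auxiliary classifiers and label smoothing, allows high-quality networks to be trained on relatively small training sets.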

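General design principles:

- Avoid representational bottlenecks. From input to output, the representation size should generally decrease gradually. Information content cannot be assessed by the dimensionality of the representation alone, because that discards important factors such as correlation structure; dimensionality only gives a rough estimate of information content.

- Higher-dimensional representations are easier to process locally within a network. Increasing the activations per tile in a convolutional network yields more disentangled features and speeds up training.

- Reduce the dimension before processing high-dimensional data. The conjectured reason is that strong correlation between adjacent units means much less information is lost during dimension reduction. This relates to the use of 1×1 convolutions.

- Balance the depth and width of the network; in general, increase both together.

These principles do not directly improve network performance; use them only in ambiguous situations.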
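To make the third principle concrete, the sketch below counts multiplications for a 3×3 convolution applied directly versus after a 1×1 reduction. All sizes (`H`, `W`, `C_IN`, `C_MID`, `C_OUT`) are hypothetical values picked for illustration, not figures from the paper.

```python
def conv_multiplies(height, width, c_in, c_out, k_h, k_w):
    """Multiply count for a stride-1, same-padded convolution (bias ignored)."""
    return height * width * c_in * c_out * k_h * k_w

# Hypothetical sizes chosen only for illustration.
H, W, C_IN, C_MID, C_OUT = 28, 28, 256, 64, 256

# 3x3 convolution applied directly to all 256 input channels.
direct = conv_multiplies(H, W, C_IN, C_OUT, 3, 3)

# 1x1 reduction to 64 channels, then the 3x3 convolution on the reduced tensor.
reduced = (conv_multiplies(H, W, C_IN, C_MID, 1, 1)
           + conv_multiplies(H, W, C_MID, C_OUT, 3, 3))

ratio = reduced / direct  # fraction of the direct cost that remains
```

Under these assumed sizes, the reduced path needs well under half the multiplications of the direct one.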

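Symmetric factorization

As shown in the figure, two 3×3 convolutions can replace one 5×5 convolution. This factorization greatly reduces the parameter count while adding depth and nonlinearity.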
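The saving can be worked out directly. This sketch assumes the same number of input and output channels in every layer and ignores biases; `CHANNELS` is a hypothetical value.

```python
def conv_weights(k_h, k_w, channels):
    """Weights in a k_h x k_w convolution with `channels` inputs and outputs."""
    return k_h * k_w * channels * channels

CHANNELS = 64  # hypothetical channel count

single_5x5 = conv_weights(5, 5, CHANNELS)
two_3x3 = 2 * conv_weights(3, 3, CHANNELS)
saving = 1 - two_3x3 / single_5x5  # fraction of weights removed
```

The ratio is 18/25 regardless of the channel count, so the two-layer version uses 28% fewer weights.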

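Asymmetric factorization

Use a 1×3 convolution followed by a 3×1 convolution in place of a 3×3 convolution, as marked in red in Figure 7 below. This setup also reduces the parameter count.

Going further, any n×n convolution can be factorized into a 1×n convolution followed by an n×1 convolution. However, the authors point out that this factorization is only worthwhile in certain settings: the savings grow with n, and in practice it works on medium-sized feature maps rather than in early layers. See Figure 6 below.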

In practice, we have found that employing this factorization does not work well on early layers, but it gives very good results on medium grid-sizes (on m×m feature maps, where m ranges between 12 and 20). On that level, very good results can be achieved by using 1×7 convolutions followed by 7×1 convolutions.
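A quick way to see why larger kernels benefit more is to compare weight counts per input/output channel pair. This is a minimal sketch that ignores biases; the function name is made up for illustration.

```python
def asymmetric_saving(n):
    """Fraction of weights saved by replacing n x n with 1 x n then n x 1."""
    full = n * n        # weights per input/output channel pair
    factorized = 2 * n  # 1 x n followed by n x 1
    return 1 - factorized / full

savings = {n: asymmetric_saving(n) for n in (3, 5, 7)}
```

For n = 3 the saving is one third, and it grows as n increases, which is consistent with the 1×7 and 7×1 pair being the attractive case.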

- Reduce the representational bottleneck. The intuition is that neural networks perform better when convolutions do not alter the dimensions of the input drastically. Reducing the dimensions too much may cause loss of information, known as a “representational bottleneck”.

- The filter banks in the module were expanded (made wider instead of deeper) to remove the representational bottleneck. If the module were made deeper instead, there would be an excessive reduction in dimensions, and hence loss of information. This is illustrated in the below image.

Auxiliary classifiers

Interestingly, we found that the auxiliary classifiers did not improve convergence early in training: before both models reach high accuracy, the training progress of the networks with and without the side head looks almost identical. Near the end of training, the network with the auxiliary branches starts to overtake the accuracy of the network without any auxiliary branch and reaches a slightly higher plateau.

Grid size reduction

- Traditionally, convolutional networks apply pooling before convolution operations to reduce the grid size of the feature maps. The problem is that this can introduce a representational bottleneck.

- The authors argue that increasing the number of filters (expanding the filter bank) removes the representational bottleneck. This is achieved by the Inception module.

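Comparing the two figures, the difference is whether pooling comes first or last. The left figure pools first and then expands the filter bank, which introduces a representational bottleneck.

An intuitive analogy: when studying, should one start with general education or with specialized education? Clearly general education comes first, followed by a specialty of one's choice. The left figure reduces first, so it may lose important information and limit the feature representation in later layers.

However, the right figure is computationally expensive, so the authors propose another approach that lowers the cost while still removing the representational bottleneck:

- Use two parallel stride-2 blocks, one pooling and one convolution, and concatenate their outputs.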
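The shape arithmetic of the parallel reduction can be sketched as follows. The sizes (a 35×35 grid, 320 input channels, 320 convolution filters) and both function names are illustrative assumptions, and padding is assumed to be zero.

```python
def conv_output_size(size, kernel, stride, padding=0):
    """Spatial output size of a convolution or pooling window."""
    return (size + 2 * padding - kernel) // stride + 1

def parallel_reduction_shape(size, c_in, c_conv, kernel=3, stride=2):
    """Output (size, channels) when a stride-2 convolution branch and a
    stride-2 pooling branch run in parallel and are concatenated."""
    out_size = conv_output_size(size, kernel, stride)
    # Pooling keeps the input channel count; the conv branch adds c_conv.
    return out_size, c_in + c_conv

# Hypothetical sizes: 35x35 grid, 320 input channels, 320 conv filters.
out_size, out_channels = parallel_reduction_shape(35, 320, 320)
```

The grid is halved while the channel count doubles, so the reduction and the filter-bank expansion happen in the same step instead of one after the other.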

Inception v2

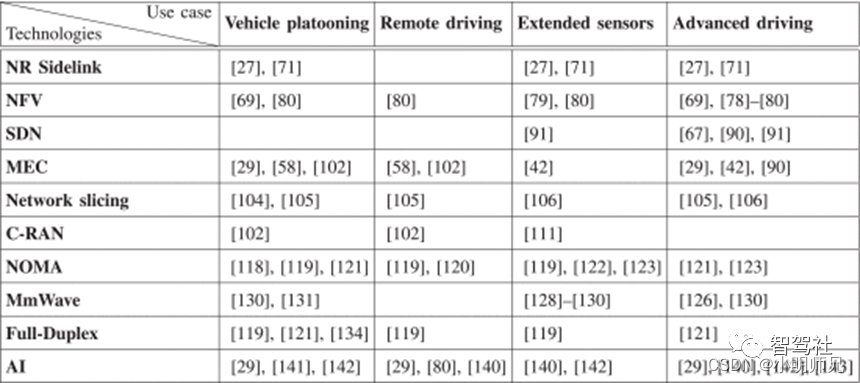

The above three principles were used to build three different types of Inception modules.

The whole network is 42 layers deep, and its computational cost is about 2.5 times higher than that of GoogLeNet.

![image 2](https://img-blog.csdnimg.cn/e1ddbfc8c3d04c558c930a625ff189e1.png?x-oss-process=image/watermark,type_d3F5LXplbmhlaQ,shadow_50,text_Q1NETiBAR1kt6LW1,size_20,color_FFFFFF,t_70,g_se,x_16#pic_center)

Model Regularization via Label Smoothing

Label smoothing

- In a CNN, the label is a vector. With 3 classes, the one-hot labels are [0, 0, 1], [0, 1, 0], or [1, 0, 0]; each vector stands for one class at the output layer. Label smoothing, in my understanding, uses a relatively smooth vector to represent a ground-truth label; for example, [0, 0, 1] can be represented as [0.1, 0.1, 0.8]. It is used when the loss function is cross entropy.

- According to the authors, training with unsmoothed labels causes two problems:

  - First, it may result in overfitting: if the model learns to assign full probability to the ground-truth label for each training example, it is not guaranteed to generalize.

  - Second, it encourages the differences between the largest logit and all others to become large, and this, combined with the bounded gradient, reduces the ability of the model to adapt. Intuitively, this happens because the model becomes too confident about its predictions.

- They claim that with label smoothing, the top-1 and top-5 error rates are each reduced by about 0.2% (absolute).
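The [0.1, 0.1, 0.8] example above can be reproduced by mixing the one-hot label with a uniform distribution over the classes. This is a minimal sketch: `epsilon = 0.3` is chosen only to match that example, and the predicted probabilities are made up.

```python
import math

def smooth_labels(one_hot, epsilon):
    """Mix a one-hot label with a uniform distribution over the classes."""
    num_classes = len(one_hot)
    return [(1 - epsilon) * y + epsilon / num_classes for y in one_hot]

def cross_entropy(target, probs):
    """Cross entropy between a target distribution and predicted probabilities."""
    return -sum(t * math.log(p) for t, p in zip(target, probs) if t > 0)

smoothed = smooth_labels([0, 0, 1], epsilon=0.3)  # approximately [0.1, 0.1, 0.8]

# Hypothetical model output; the loss now also penalizes overconfidence.
loss = cross_entropy(smoothed, [0.05, 0.05, 0.9])
```

Because the smoothed target gives every class a nonzero weight, the loss keeps growing if the model pushes the wrong-class probabilities toward zero, which is the overconfidence the authors want to discourage.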

# Inception v3

## The Premise

* The authors noted that the **auxiliary classifiers** didn’t contribute much until near the end of the training process, when accuracies were nearing saturation. They argued that they function as **regularizers**, especially if they have BatchNorm or Dropout operations.

* Possibilities to improve on Inception v2 without drastically changing the modules were to be investigated.

## The Solution

* **Inception Net v3** incorporated all of the above upgrades stated for Inception v2, and in addition used the following:

1. RMSProp optimizer.

2. Factorized 7x7 convolutions.

3. BatchNorm in the auxiliary classifiers.

4. Label smoothing (a regularizing component added to the loss formula that prevents the network from becoming too confident about a class, which helps prevent overfitting).
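RMSProp is only named in the list above. As a reminder of what it does, here is a minimal sketch of the generic update rule for a single scalar weight. The hyperparameters (`lr`, `decay`, `eps`) and the toy objective are illustrative assumptions, not the training setup used for Inception v3.

```python
import math

def rmsprop_step(weight, grad, avg_sq, lr=0.01, decay=0.9, eps=1e-8):
    """One RMSProp update for a single scalar weight."""
    # Exponential moving average of the squared gradient.
    avg_sq = decay * avg_sq + (1 - decay) * grad * grad
    # Scale the step by the root of that running average.
    weight = weight - lr * grad / (math.sqrt(avg_sq) + eps)
    return weight, avg_sq

# Toy problem: minimize f(w) = w**2, whose gradient is 2 * w.
w, state = 5.0, 0.0
for _ in range(1000):
    w, state = rmsprop_step(w, 2 * w, state)
```

Dividing by the running root-mean-square of the gradient keeps the step size roughly independent of the gradient's scale.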

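Inception v2 uses batch normalization.

Inception v3 modifies the Inception block:

1. Replace 5×5 with multiple 3×3 convolution layers

2. Replace 5×5 with 1×7 and 7×1 convolution layers

3. Replace 3×3 with 1×3 and 3×1

Inception v4 uses residual (ResNet) connections.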