References:

https://github.com/ultralytics/ultralytics

https://github.com/TommyZihao/Train_Custom_Dataset/tree/main/%E5%85%B3%E9%94%AE%E7%82%B9%E6%A3%80%E6%B5%8B

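## Installation

```shell
# -i points pip at a PyPI mirror; drop it to install from the default index
pip install ultralytics -i https://pypi.douban.com/simple
```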

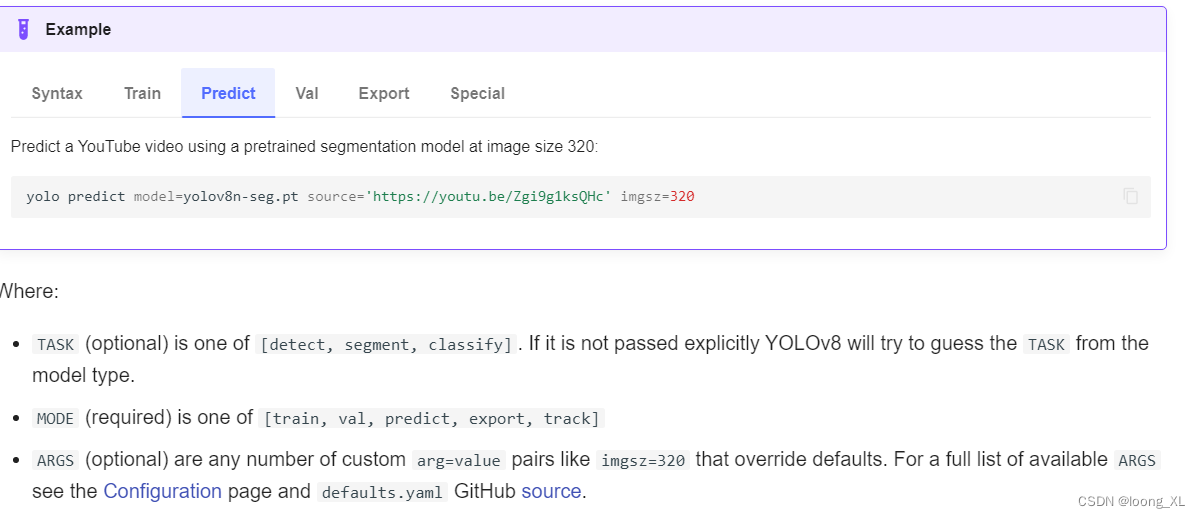

1. Running from the command line

After installing with pip, you can run inference directly with the `yolo` command:

```shell
yolo predict model=yolov8n.pt source='https://ultralytics.com/images/bus.jpg'
```

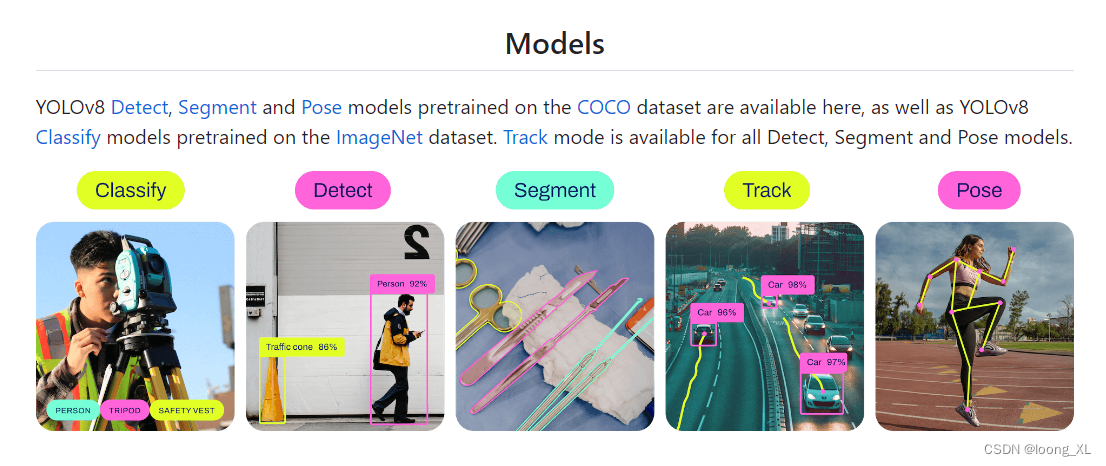

```shell
# Detection
yolo detect predict model=yolov8n.pt source='https://ultralytics.com/images/bus.jpg'

# Segmentation
yolo segment predict model=yolov8n-seg.pt source='https://ultralytics.com/images/bus.jpg'

# Pose estimation (device=0 runs on the first GPU)
yolo pose predict model=yolov8n-pose.pt source='https://ultralytics.com/images/bus.jpg'
yolo pose predict model=yolov8x-pose-p6.pt source=videos/cxk.mp4 device=0

# Explicit task=/mode= form, with input size, on-screen display and saving enabled
yolo task=detect mode=predict model=yolov8n.pt source=ultralytics/assets/bus.jpg imgsz=640 show=True save=True

# Tracking
yolo track model=yolov8n.pt source=test.avi show=True save=True
```
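All of these commands share the pattern `yolo TASK MODE key=value ...`. To launch them from a Python script, you can assemble the argument list yourself and pass it to `subprocess`. The helper `build_yolo_args` below is a hypothetical name and only a sketch. It formats the arguments but does not check them against the options Ultralytics actually accepts.

```python
import shlex


def build_yolo_args(task, mode, **overrides):
    """Build an argv list of the form: yolo TASK MODE key=value ...

    Hypothetical helper: it only formats arguments and does not validate
    them against the Ultralytics configuration.
    """
    args = ["yolo", task, mode]
    for key, value in overrides.items():
        args.append(f"{key}={value}")
    return args


pose_cmd = build_yolo_args("pose", "predict", model="yolov8n-pose.pt", source=0, show=True)
# shlex.join shows the equivalent shell command line
print(shlex.join(pose_cmd))
```

`subprocess.run(pose_cmd, check=True)` would then start the CLI. Passing a list rather than a single string avoids shell-quoting problems with paths that contain spaces.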

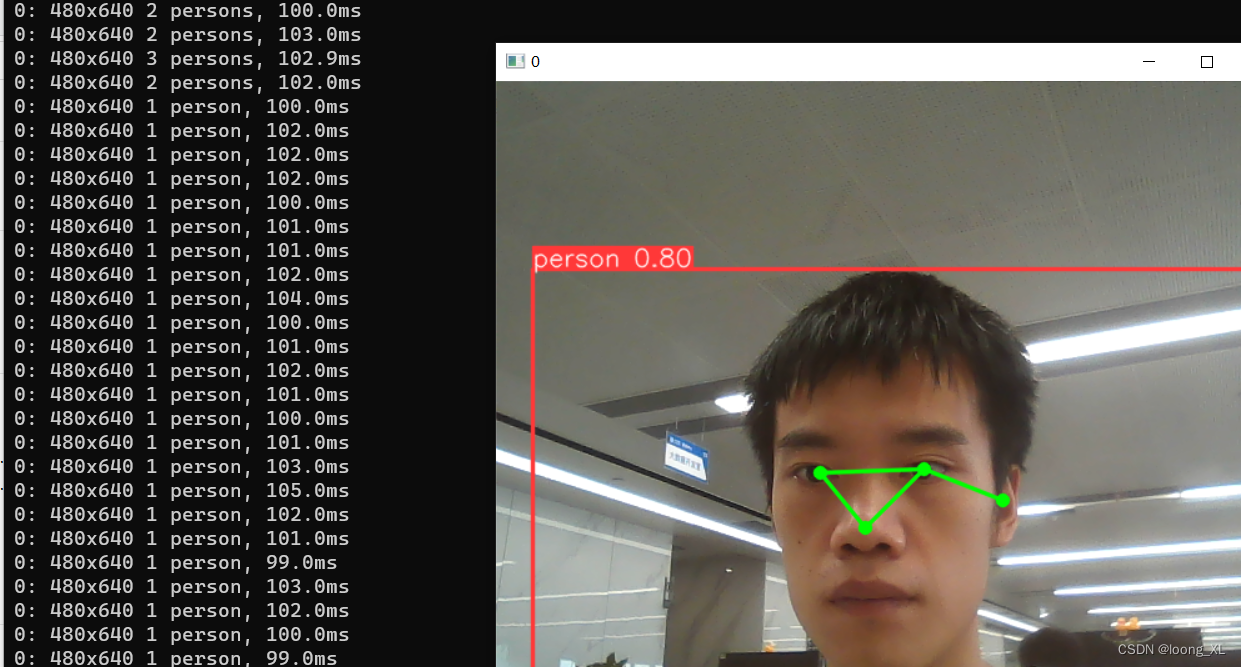

The image above shows the result. Setting `source=0` reads frames directly from the local webcam:

```shell
yolo pose predict model=yolov8l-pose.pt source=0 show=True
```

2. Exporting to ONNX

From the command line:

```shell
yolo export model=yolov8n.pt format=onnx opset=12
```

With the Python API:

```python
from ultralytics import YOLO

# Load the PyTorch model
model = YOLO('checkpoint/Triangle_215_yolov8l_pretrain.pt')

# Export it to ONNX
model.export(format='onnx')
```
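If you run the exported ONNX file outside Ultralytics (for example with onnxruntime), you have to decode the raw output tensor yourself. It helps to know its expected shape first.

With the default YOLOv8 strides of 8, 16 and 32, a 640×640 input gives 80² + 40² + 20² = 8400 candidate positions. Each position carries:

- 4 box values,
- one score per class,
- for pose models, 3 values (x, y, confidence) per keypoint.

The function below is a sketch under those assumptions: square input, default strides, batch size 1. Its name is hypothetical. For a custom model such as the triangle checkpoint above, plug in its own class and keypoint counts, and confirm the result against `session.get_outputs()[0].shape` in onnxruntime.

```python
def yolov8_onnx_output_shape(imgsz=640, num_classes=80, num_keypoints=0,
                             kpt_dims=3, strides=(8, 16, 32)):
    """Expected raw output shape (batch, channels, anchors) of a YOLOv8 ONNX export.

    Sketch: assumes a square input, the default strides and batch size 1.
    """
    # One candidate per grid cell on each feature map
    anchors = sum((imgsz // s) ** 2 for s in strides)
    # Box (4) + class scores + flattened keypoints
    channels = 4 + num_classes + num_keypoints * kpt_dims
    return (1, channels, anchors)


print(yolov8_onnx_output_shape())                                 # COCO detection model
print(yolov8_onnx_output_shape(num_classes=1, num_keypoints=17))  # COCO pose model
```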

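3. Using the Python API

Note: in recent versions, the raw box and keypoint values are read through the `.data` attribute, e.g. `results[0].boxes.data` and `results[0].keypoints.data`.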
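In practice, `.data` holds one row per detected object. For a COCO pose model without tracking, our understanding of the layout is as follows (worth confirming with `.shape` on your own results):

- `boxes.data` has shape (N, 6): `x1, y1, x2, y2, confidence, class_id`.
- `keypoints.data` has shape (N, 17, 3): `x, y, confidence` per keypoint.

The hypothetical helper `unpack_detections` pairs the two after they have been converted with `.tolist()`. The numbers in the example are invented placeholders, not model output.

```python
def unpack_detections(box_rows, kpt_rows):
    """Pair each box row with its keypoints.

    Inputs are nested lists, e.g. results[0].boxes.data.tolist() and
    results[0].keypoints.data.tolist() (layout assumed, see above).
    """
    people = []
    for box, kpts in zip(box_rows, kpt_rows):
        x1, y1, x2, y2, conf, cls = box
        people.append({
            "box": (x1, y1, x2, y2),
            "score": conf,
            "class_id": int(cls),
            "keypoints": [(x, y, c) for x, y, c in kpts],
        })
    return people


# Made-up values standing in for one detected person
fake_boxes = [[100.0, 50.0, 300.0, 600.0, 0.91, 0.0]]
fake_kpts = [[[200.0, 90.0, 0.98]] + [[0.0, 0.0, 0.02]] * 16]
people = unpack_detections(fake_boxes, fake_kpts)
print(people[0]["box"], people[0]["score"], len(people[0]["keypoints"]))
```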

```python
from ultralytics import YOLO
import cv2
import matplotlib.pyplot as plt
import torch

# Use the GPU if one is available, otherwise the CPU
device = torch.device('cuda:0' if torch.cuda.is_available() else 'cpu')

# Load a pretrained pose model (uncomment the size you want)
# model = YOLO('yolov8n-pose.pt')
# model = YOLO('yolov8s-pose.pt')
# model = YOLO('yolov8m-pose.pt')
# model = YOLO('yolov8l-pose.pt')
# model = YOLO('yolov8x-pose.pt')
model = YOLO(r'C:\Users\lonng\Downloads\yolov8l-pose.pt')

# Move the model to the chosen device
model.to(device)

# Predict: the input can be an image, a video or a camera ID (same as the CLI `source` argument)
img_path = '11.jpg'
results = model(img_path)

# Inspect the results
print(len(results), results[0])
```

Output:

```
640x512 1 person, 114.0ms
Speed: 8.0ms preprocess, 114.0ms inference, 7.0ms postprocess per image at shape (1, 3, 640, 640)
1 ultralytics.yolo.engine.results.Results object with attributes:

boxes: ultralytics.yolo.engine.results.Boxes object
keypoints: ultralytics.yolo.engine.results.Keypoints object
keys: ['boxes', 'keypoints']
masks: None
names: {0: 'person'}
orig_img: array([[[ 25, 27, 36], [ 25, 27, 36], [ 25, 27, 36], ..., [ 35, 55, 90], [ 35, 55, 90], [ 35, 55, 90]],
                 [[ 25, 27, 36], [ 25, 27, 36], [ 25, 27, 36], ..., [ 35, 55, 90], [ 35, 55, 90], [ 35, 55, 90]],
                 [[ 25, 27, 36], [ 25, 27, 36], [ 25, 27, 36], ..., [ 36, 56, 91], [ 36, 56, 91], [ 36, 56, 91]],
                 ...,
                 [[ 24, 21, 8], [ 24, 21, 8], [ 24, 21, 8], ..., [102, 120, 162], [102, 120, 162], [102, 120, 162]],
                 [[ 22, 22, 8], [ 22, 22, 8], [ 22, 22, 8], ..., [102, 120, 162], [102, 120, 162], [102, 120, 162]],
                 [[ 22, 22, 8], [ 22, 22, 8], [ 22, 22, 8], ..., [102, 120, 162], [102, 120, 162], [102, 120, 162]]], dtype=uint8)
orig_shape: (2092, 1600)
path: 'C:\Users\lonng\Desktop\rdkit_learn\opencv2\11.jpg'
probs: None
speed: {'preprocess': 8.000612258911133, 'inference': 113.99602890014648, 'postprocess': 6.998538970947266}
```

`names` maps class IDs to labels; for the pose model, class 0 is `person`.
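The `640x512` in the first log line is the height × width the image was resized to before inference. `orig_shape` is the original (height, width) = (2092, 1600).

The sketch below reproduces that number under one assumption: a LetterBox-style resize that scales the long side to 640, then pads each side only up to the next multiple of the stride 32. This is our reading of the default rectangular inference, not a copy of the Ultralytics code, and `letterbox_shape` is a hypothetical name.

```python
def letterbox_shape(orig_hw, imgsz=640, stride=32):
    """Approximate (h, w) of the network input for rectangular inference.

    Sketch: scale so the longer side equals imgsz, then pad each side only
    up to the next multiple of stride ("auto" padding).
    """
    h, w = orig_hw
    r = min(imgsz / h, imgsz / w)            # keep the aspect ratio
    new_h, new_w = round(h * r), round(w * r)
    pad_h = (imgsz - new_h) % stride         # minimal padding to a stride multiple
    pad_w = (imgsz - new_w) % stride
    return new_h + pad_h, new_w + pad_w


print(letterbox_shape((2092, 1600)))  # (640, 512), matching "640x512" in the log
```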

Full code

```python
from ultralytics import YOLO
import cv2
import matplotlib.pyplot as plt
import torch

# Use the GPU if one is available, otherwise the CPU
device = torch.device('cuda:0' if torch.cuda.is_available() else 'cpu')

# Load a pretrained pose model (uncomment the size you want)
# model = YOLO('yolov8n-pose.pt')
# model = YOLO('yolov8s-pose.pt')
# model = YOLO('yolov8m-pose.pt')
# model = YOLO('yolov8l-pose.pt')
# model = YOLO('yolov8x-pose.pt')
model = YOLO(r'C:\Users\lonng\Downloads\yolov8l-pose.pt')

# Move the model to the chosen device
model.to(device)

# Predict: the input can be an image, a video or a camera ID (same as the CLI `source` argument)
img_path = '11.jpg'
results = model(img_path)

# Inspect the results
print(len(results), results[0])

# Bounding-box (rectangle) drawing settings
bbox_color = (150, 0, 0)  # box color (BGR)
bbox_thickness = 6        # box line width

# Box class-label text
bbox_labelstr = {
    'font_size': 6,        # font scale
    'font_thickness': 14,  # stroke thickness
    'offset_x': 0,         # horizontal text offset, positive = right
    'offset_y': -80,       # vertical text offset, positive = down
}

# Keypoint colors (BGR). Indices follow the COCO keypoint order;
# "left"/"right" refer to the person's own left and right.
kpt_color_map = {
    0: {'name': 'Nose', 'color': [0, 0, 255], 'radius': 10},
    1: {'name': 'Left Eye', 'color': [255, 0, 0], 'radius': 10},
    2: {'name': 'Right Eye', 'color': [255, 0, 0], 'radius': 10},
    3: {'name': 'Left Ear', 'color': [0, 255, 0], 'radius': 10},
    4: {'name': 'Right Ear', 'color': [0, 255, 0], 'radius': 10},
    5: {'name': 'Left Shoulder', 'color': [193, 182, 255], 'radius': 10},
    6: {'name': 'Right Shoulder', 'color': [193, 182, 255], 'radius': 10},
    7: {'name': 'Left Elbow', 'color': [16, 144, 247], 'radius': 10},
    8: {'name': 'Right Elbow', 'color': [16, 144, 247], 'radius': 10},
    9: {'name': 'Left Wrist', 'color': [1, 240, 255], 'radius': 10},
    10: {'name': 'Right Wrist', 'color': [1, 240, 255], 'radius': 10},
    11: {'name': 'Left Hip', 'color': [140, 47, 240], 'radius': 10},
    12: {'name': 'Right Hip', 'color': [140, 47, 240], 'radius': 10},
    13: {'name': 'Left Knee', 'color': [223, 155, 60], 'radius': 10},
    14: {'name': 'Right Knee', 'color': [223, 155, 60], 'radius': 10},
    15: {'name': 'Left Ankle', 'color': [139, 0, 0], 'radius': 10},
    16: {'name': 'Right Ankle', 'color': [139, 0, 0], 'radius': 10},
}

# Keypoint label text
kpt_labelstr = {
    'font_size': 4,        # font scale
    'font_thickness': 2,   # stroke thickness
    'offset_x': 0,         # horizontal text offset, positive = right
    'offset_y': 150,       # vertical text offset, positive = down
}

# Skeleton links and their colors (BGR)
skeleton_map = [
    {'srt_kpt_id': 15, 'dst_kpt_id': 13, 'color': [0, 100, 255], 'thickness': 5},    # left ankle - left knee
    {'srt_kpt_id': 13, 'dst_kpt_id': 11, 'color': [0, 255, 0], 'thickness': 5},      # left knee - left hip
    {'srt_kpt_id': 16, 'dst_kpt_id': 14, 'color': [255, 0, 0], 'thickness': 5},      # right ankle - right knee
    {'srt_kpt_id': 14, 'dst_kpt_id': 12, 'color': [0, 0, 255], 'thickness': 5},      # right knee - right hip
    {'srt_kpt_id': 11, 'dst_kpt_id': 12, 'color': [122, 160, 255], 'thickness': 5},  # left hip - right hip
    {'srt_kpt_id': 5, 'dst_kpt_id': 11, 'color': [139, 0, 139], 'thickness': 5},     # left shoulder - left hip
    {'srt_kpt_id': 6, 'dst_kpt_id': 12, 'color': [237, 149, 100], 'thickness': 5},   # right shoulder - right hip
    {'srt_kpt_id': 5, 'dst_kpt_id': 6, 'color': [152, 251, 152], 'thickness': 5},    # left shoulder - right shoulder
    {'srt_kpt_id': 5, 'dst_kpt_id': 7, 'color': [148, 0, 69], 'thickness': 5},       # left shoulder - left elbow
    {'srt_kpt_id': 6, 'dst_kpt_id': 8, 'color': [0, 75, 255], 'thickness': 5},       # right shoulder - right elbow
    {'srt_kpt_id': 7, 'dst_kpt_id': 9, 'color': [56, 230, 25], 'thickness': 5},      # left elbow - left wrist
    {'srt_kpt_id': 8, 'dst_kpt_id': 10, 'color': [0, 240, 240], 'thickness': 5},     # right elbow - right wrist
    {'srt_kpt_id': 1, 'dst_kpt_id': 2, 'color': [224, 255, 255], 'thickness': 5},    # left eye - right eye
    {'srt_kpt_id': 0, 'dst_kpt_id': 1, 'color': [47, 255, 173], 'thickness': 5},     # nose - left eye
    {'srt_kpt_id': 0, 'dst_kpt_id': 2, 'color': [203, 192, 255], 'thickness': 5},    # nose - right eye
    {'srt_kpt_id': 1, 'dst_kpt_id': 3, 'color': [196, 75, 255], 'thickness': 5},     # left eye - left ear
    {'srt_kpt_id': 2, 'dst_kpt_id': 4, 'color': [86, 0, 25], 'thickness': 5},        # right eye - right ear
    {'srt_kpt_id': 3, 'dst_kpt_id': 5, 'color': [255, 255, 0], 'thickness': 5},      # left ear - left shoulder
    {'srt_kpt_id': 4, 'dst_kpt_id': 6, 'color': [255, 18, 200], 'thickness': 5},     # right ear - right shoulder
]

# Parse the detection results
num_bbox = len(results[0].boxes.cls)
print('Detected {} boxes'.format(num_bbox))

# Box corners as plain Python ints. Unlike uint32, these can take the
# negative text offsets below without overflowing.
bboxes_xyxy = results[0].boxes.xyxy.cpu().numpy().astype(int).tolist()
print('bboxes_xyxy:', bboxes_xyxy)

# Parse the keypoint results: per box, 17 rows of [x, y, confidence].
# The int cast truncates the confidence, which is not used below.
bboxes_keypoints = results[0].keypoints.data.cpu().numpy().astype(int).tolist()

# Read the image once, outside the loop, so every box is drawn onto the same image
img_bgr = cv2.imread(img_path)

for idx in range(num_bbox):  # loop over every detected box
    bbox_xyxy = bboxes_xyxy[idx]
    # Pose models predict a single class, so the label is always names[0]
    bbox_label = results[0].names[0]

    # Draw the box
    img_bgr = cv2.rectangle(img_bgr, (bbox_xyxy[0], bbox_xyxy[1]), (bbox_xyxy[2], bbox_xyxy[3]),
                            bbox_color, bbox_thickness)
    # Class label: image, text, bottom-left corner of the text, font, scale, color, thickness
    img_bgr = cv2.putText(img_bgr, bbox_label,
                          (bbox_xyxy[0] + bbox_labelstr['offset_x'], bbox_xyxy[1] + bbox_labelstr['offset_y']),
                          cv2.FONT_HERSHEY_SIMPLEX, bbox_labelstr['font_size'], bbox_color,
                          bbox_labelstr['font_thickness'])

    bbox_keypoints = bboxes_keypoints[idx]  # all keypoints of this box: [x, y, conf]

    # Draw the skeleton links
    for skeleton in skeleton_map:
        srt_kpt_id = skeleton['srt_kpt_id']  # start point
        srt_kpt_x, srt_kpt_y = bbox_keypoints[srt_kpt_id][0], bbox_keypoints[srt_kpt_id][1]
        dst_kpt_id = skeleton['dst_kpt_id']  # end point
        dst_kpt_x, dst_kpt_y = bbox_keypoints[dst_kpt_id][0], bbox_keypoints[dst_kpt_id][1]
        img_bgr = cv2.line(img_bgr, (srt_kpt_x, srt_kpt_y), (dst_kpt_x, dst_kpt_y),
                           color=skeleton['color'], thickness=skeleton['thickness'])

    # Draw the keypoints
    for kpt_id in kpt_color_map:
        kpt_color = kpt_color_map[kpt_id]['color']
        kpt_radius = kpt_color_map[kpt_id]['radius']
        kpt_x, kpt_y = bbox_keypoints[kpt_id][0], bbox_keypoints[kpt_id][1]
        # Circle: image, center, radius, color, thickness (-1 = filled)
        img_bgr = cv2.circle(img_bgr, (kpt_x, kpt_y), kpt_radius, kpt_color, -1)
        # Optional keypoint label (pick one of the two kpt_label lines)
        # kpt_label = str(kpt_id)                         # keypoint ID
        # kpt_label = str(kpt_color_map[kpt_id]['name'])  # keypoint name
        # img_bgr = cv2.putText(img_bgr, kpt_label,
        #                       (kpt_x + kpt_labelstr['offset_x'], kpt_y + kpt_labelstr['offset_y']),
        #                       cv2.FONT_HERSHEY_SIMPLEX, kpt_labelstr['font_size'], kpt_color,
        #                       kpt_labelstr['font_thickness'])

# Matplotlib expects RGB, so reverse the BGR channel order for display
plt.imshow(img_bgr[:, :, ::-1])
plt.show()

cv2.imwrite('11_pose.jpg', img_bgr)
```
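The drawing loop above draws every skeleton link, including links to keypoints the model could not see. Such keypoints typically have a low confidence and may be reported at (0, 0), which shows up as lines running to the image's top-left corner.

One way around this:

1. Keep the float keypoints, without the `astype(int)` cast, so the confidence survives.
2. Skip any link whose two endpoints do not both pass a confidence threshold.

`visible_segments` is a hypothetical helper sketching that idea. The 0.5 threshold is an arbitrary choice, not an Ultralytics default.

```python
def visible_segments(keypoints, skeleton_map, conf_thr=0.5):
    """Return ((x1, y1), (x2, y2), color, thickness) for each skeleton link
    whose two endpoints both have confidence >= conf_thr.

    keypoints: list of [x, y, conf] for one person (floats).
    skeleton_map: entries with 'srt_kpt_id', 'dst_kpt_id', 'color', 'thickness'.
    """
    segments = []
    for link in skeleton_map:
        x1, y1, c1 = keypoints[link['srt_kpt_id']]
        x2, y2, c2 = keypoints[link['dst_kpt_id']]
        if c1 >= conf_thr and c2 >= conf_thr:
            # OpenCV drawing functions need integer pixel coordinates
            segments.append(((int(x1), int(y1)), (int(x2), int(y2)),
                             link['color'], link['thickness']))
    return segments


# Made-up example: only the nose and the left eye are confidently detected
demo_kpts = [[0.0, 0.0, 0.0] for _ in range(17)]
demo_kpts[0] = [200.4, 90.0, 0.98]  # nose
demo_kpts[1] = [210.0, 80.0, 0.95]  # left eye
demo_kpts[2] = [190.0, 80.0, 0.40]  # right eye, below the threshold
demo_links = [
    {'srt_kpt_id': 0, 'dst_kpt_id': 1, 'color': [47, 255, 173], 'thickness': 5},
    {'srt_kpt_id': 0, 'dst_kpt_id': 2, 'color': [203, 192, 255], 'thickness': 5},
    {'srt_kpt_id': 15, 'dst_kpt_id': 13, 'color': [0, 100, 255], 'thickness': 5},
]
print(visible_segments(demo_kpts, demo_links))
```

To use it in the script, take one person's keypoints as `kpts = results[0].keypoints.data[idx].cpu().tolist()`. Then replace the skeleton loop with `for p1, p2, color, t in visible_segments(kpts, skeleton_map): img_bgr = cv2.line(img_bgr, p1, p2, color, t)`.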

Real-time keypoint detection from a webcam

```python
from ultralytics import YOLO
import cv2
import time
import torch

# Use the GPU if one is available, otherwise the CPU
device = torch.device('cuda:0' if torch.cuda.is_available() else 'cpu')

# Load a pretrained pose model (uncomment the size you want)
# model = YOLO('yolov8n-pose.pt')
# model = YOLO('yolov8s-pose.pt')
# model = YOLO('yolov8m-pose.pt')
# model = YOLO('yolov8l-pose.pt')
# model = YOLO('yolov8x-pose.pt')
model = YOLO(r'C:\Users\lonng\Downloads\yolov8l-pose.pt')

# Move the model to the chosen device
model.to(device)

# Bounding-box (rectangle) drawing settings
bbox_color = (150, 0, 0)  # box color (BGR)
bbox_thickness = 2        # box line width

# Box class-label text
bbox_labelstr = {
    'font_size': 2,        # font scale
    'font_thickness': 4,   # stroke thickness
    'offset_x': 0,         # horizontal text offset, positive = right
    'offset_y': -20,       # vertical text offset, positive = down
}

# Keypoint colors (BGR). Indices follow the COCO keypoint order;
# "left"/"right" refer to the person's own left and right.
kpt_color_map = {
    0: {'name': 'Nose', 'color': [0, 0, 255], 'radius': 6},
    1: {'name': 'Left Eye', 'color': [255, 0, 0], 'radius': 6},
    2: {'name': 'Right Eye', 'color': [255, 0, 0], 'radius': 6},
    3: {'name': 'Left Ear', 'color': [0, 255, 0], 'radius': 6},
    4: {'name': 'Right Ear', 'color': [0, 255, 0], 'radius': 6},
    5: {'name': 'Left Shoulder', 'color': [193, 182, 255], 'radius': 6},
    6: {'name': 'Right Shoulder', 'color': [193, 182, 255], 'radius': 6},
    7: {'name': 'Left Elbow', 'color': [16, 144, 247], 'radius': 6},
    8: {'name': 'Right Elbow', 'color': [16, 144, 247], 'radius': 6},
    9: {'name': 'Left Wrist', 'color': [1, 240, 255], 'radius': 6},
    10: {'name': 'Right Wrist', 'color': [1, 240, 255], 'radius': 6},
    11: {'name': 'Left Hip', 'color': [140, 47, 240], 'radius': 6},
    12: {'name': 'Right Hip', 'color': [140, 47, 240], 'radius': 6},
    13: {'name': 'Left Knee', 'color': [223, 155, 60], 'radius': 6},
    14: {'name': 'Right Knee', 'color': [223, 155, 60], 'radius': 6},
    15: {'name': 'Left Ankle', 'color': [139, 0, 0], 'radius': 6},
    16: {'name': 'Right Ankle', 'color': [139, 0, 0], 'radius': 6},
}

# Keypoint label text
kpt_labelstr = {
    'font_size': 1.5,      # font scale
    'font_thickness': 3,   # stroke thickness
    'offset_x': 10,        # horizontal text offset, positive = right
    'offset_y': 0,         # vertical text offset, positive = down
}

# Skeleton links and their colors (BGR)
skeleton_map = [
    {'srt_kpt_id': 15, 'dst_kpt_id': 13, 'color': [0, 100, 255], 'thickness': 2},    # left ankle - left knee
    {'srt_kpt_id': 13, 'dst_kpt_id': 11, 'color': [0, 255, 0], 'thickness': 2},      # left knee - left hip
    {'srt_kpt_id': 16, 'dst_kpt_id': 14, 'color': [255, 0, 0], 'thickness': 2},      # right ankle - right knee
    {'srt_kpt_id': 14, 'dst_kpt_id': 12, 'color': [0, 0, 255], 'thickness': 2},      # right knee - right hip
    {'srt_kpt_id': 11, 'dst_kpt_id': 12, 'color': [122, 160, 255], 'thickness': 2},  # left hip - right hip
    {'srt_kpt_id': 5, 'dst_kpt_id': 11, 'color': [139, 0, 139], 'thickness': 2},     # left shoulder - left hip
    {'srt_kpt_id': 6, 'dst_kpt_id': 12, 'color': [237, 149, 100], 'thickness': 2},   # right shoulder - right hip
    {'srt_kpt_id': 5, 'dst_kpt_id': 6, 'color': [152, 251, 152], 'thickness': 2},    # left shoulder - right shoulder
    {'srt_kpt_id': 5, 'dst_kpt_id': 7, 'color': [148, 0, 69], 'thickness': 2},       # left shoulder - left elbow
    {'srt_kpt_id': 6, 'dst_kpt_id': 8, 'color': [0, 75, 255], 'thickness': 2},       # right shoulder - right elbow
    {'srt_kpt_id': 7, 'dst_kpt_id': 9, 'color': [56, 230, 25], 'thickness': 2},      # left elbow - left wrist
    {'srt_kpt_id': 8, 'dst_kpt_id': 10, 'color': [0, 240, 240], 'thickness': 2},     # right elbow - right wrist
    {'srt_kpt_id': 1, 'dst_kpt_id': 2, 'color': [224, 255, 255], 'thickness': 2},    # left eye - right eye
    {'srt_kpt_id': 0, 'dst_kpt_id': 1, 'color': [47, 255, 173], 'thickness': 2},     # nose - left eye
    {'srt_kpt_id': 0, 'dst_kpt_id': 2, 'color': [203, 192, 255], 'thickness': 2},    # nose - right eye
    {'srt_kpt_id': 1, 'dst_kpt_id': 3, 'color': [196, 75, 255], 'thickness': 2},     # left eye - left ear
    {'srt_kpt_id': 2, 'dst_kpt_id': 4, 'color': [86, 0, 25], 'thickness': 2},        # right eye - right ear
    {'srt_kpt_id': 3, 'dst_kpt_id': 5, 'color': [255, 255, 0], 'thickness': 2},      # left ear - left shoulder
    {'srt_kpt_id': 4, 'dst_kpt_id': 6, 'color': [255, 18, 200], 'thickness': 2},     # right ear - right shoulder
]


def process_frame(img_bgr):
    '''Take a camera frame (BGR array) and return the annotated frame (BGR array).'''
    start_time = time.time()  # time at which processing of this frame starts

    results = model(img_bgr, verbose=False)  # verbose=False: don't log every frame

    num_bbox = len(results[0].boxes.cls)  # number of boxes
    # Box corners and keypoints as plain Python ints (safe with negative offsets)
    bboxes_xyxy = results[0].boxes.xyxy.cpu().numpy().astype(int).tolist()
    bboxes_keypoints = results[0].keypoints.data.cpu().numpy().astype(int).tolist()

    for idx in range(num_bbox):  # loop over every detected box
        bbox_xyxy = bboxes_xyxy[idx]
        # Pose models predict a single class, so the label is always names[0]
        bbox_label = results[0].names[0]

        # Draw the box
        img_bgr = cv2.rectangle(img_bgr, (bbox_xyxy[0], bbox_xyxy[1]), (bbox_xyxy[2], bbox_xyxy[3]),
                                bbox_color, bbox_thickness)
        # Class label: image, text, bottom-left corner of the text, font, scale, color, thickness
        img_bgr = cv2.putText(img_bgr, bbox_label,
                              (bbox_xyxy[0] + bbox_labelstr['offset_x'], bbox_xyxy[1] + bbox_labelstr['offset_y']),
                              cv2.FONT_HERSHEY_SIMPLEX, bbox_labelstr['font_size'], bbox_color,
                              bbox_labelstr['font_thickness'])

        bbox_keypoints = bboxes_keypoints[idx]  # all keypoints of this box: [x, y, conf]

        # Draw the skeleton links
        for skeleton in skeleton_map:
            srt_kpt_id = skeleton['srt_kpt_id']  # start point
            srt_kpt_x, srt_kpt_y = bbox_keypoints[srt_kpt_id][0], bbox_keypoints[srt_kpt_id][1]
            dst_kpt_id = skeleton['dst_kpt_id']  # end point
            dst_kpt_x, dst_kpt_y = bbox_keypoints[dst_kpt_id][0], bbox_keypoints[dst_kpt_id][1]
            img_bgr = cv2.line(img_bgr, (srt_kpt_x, srt_kpt_y), (dst_kpt_x, dst_kpt_y),
                               color=skeleton['color'], thickness=skeleton['thickness'])

        # Draw the keypoints
        for kpt_id in kpt_color_map:
            kpt_color = kpt_color_map[kpt_id]['color']
            kpt_radius = kpt_color_map[kpt_id]['radius']
            kpt_x, kpt_y = bbox_keypoints[kpt_id][0], bbox_keypoints[kpt_id][1]
            # Circle: image, center, radius, color, thickness (-1 = filled)
            img_bgr = cv2.circle(img_bgr, (kpt_x, kpt_y), kpt_radius, kpt_color, -1)
            # Optional keypoint label (pick one of the two kpt_label lines)
            # kpt_label = str(kpt_id)                         # keypoint ID
            # kpt_label = str(kpt_color_map[kpt_id]['name'])  # keypoint name
            # img_bgr = cv2.putText(img_bgr, kpt_label,
            #                       (kpt_x + kpt_labelstr['offset_x'], kpt_y + kpt_labelstr['offset_y']),
            #                       cv2.FONT_HERSHEY_SIMPLEX, kpt_labelstr['font_size'], kpt_color,
            #                       kpt_labelstr['font_thickness'])

    end_time = time.time()  # time at which processing of this frame ends
    # Frames per second, based on this frame's processing time
    FPS = 1 / (end_time - start_time)
    # Write on the frame: image, text, bottom-left corner, font, scale, color, thickness
    FPS_string = 'FPS ' + str(int(FPS))
    img_bgr = cv2.putText(img_bgr, FPS_string, (25, 60), cv2.FONT_HERSHEY_SIMPLEX, 1.25, (255, 0, 255), 2)
    return img_bgr


# Generic template for real-time, frame-by-frame camera processing:
# nothing below needs to change, only process_frame.
# TommyZihao (同济子豪兄), 2021-7-8

# 0 selects the system's default camera (use 1, 2, ... for other cameras)
cap = cv2.VideoCapture(0)

# Loop until break is triggered
while cap.isOpened():
    success, frame = cap.read()  # grab a frame
    if not success:  # stop if no frame could be read
        print('Failed to read a frame, exiting')
        break

    frame = process_frame(frame)  # process the frame
    cv2.imshow('my_window', frame)  # show the processed 3-channel image

    key_pressed = cv2.waitKey(60)  # wait up to 60 ms for a key press
    # print('Key pressed:', key_pressed)
    if key_pressed in [ord('q'), 27]:  # quit with q or Esc (with an English input method)
        break

cap.release()            # release the camera
cv2.destroyAllWindows()  # close the image windows
```
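The FPS value in `process_frame` comes from a single frame's processing time. It therefore jumps around from frame to frame, and it leaves out the time spent in `cap.read()`, `imshow` and `waitKey`.

A common alternative is to measure the time between consecutive frames and smooth it with an exponential moving average. `FpsMeter` below is a hypothetical helper sketching that idea. The default `alpha` (weight of the newest frame) is an arbitrary choice.

```python
import time


class FpsMeter:
    """Exponential moving average of frames per second.

    Hypothetical helper: call tick() once per frame. The optional `now`
    argument lets you inject timestamps, e.g. for testing.
    """

    def __init__(self, alpha=0.1):
        self.alpha = alpha  # weight of the newest frame in the average
        self.fps = None     # None until two frames have been seen
        self._last = None

    def tick(self, now=None):
        now = time.perf_counter() if now is None else now
        if self._last is not None and now > self._last:
            inst = 1.0 / (now - self._last)  # instantaneous FPS
            if self.fps is None:
                self.fps = inst
            else:
                self.fps = (1 - self.alpha) * self.fps + self.alpha * inst
        self._last = now
        return self.fps


meter = FpsMeter(alpha=0.5)
# Simulated timestamps: one frame every 0.5 s
readings = [meter.tick(t) for t in (0.0, 0.5, 1.0, 1.5)]
print(readings)  # [None, 2.0, 2.0, 2.0]
```

To use it:

1. Create `meter = FpsMeter()` before the `while` loop.
2. Call `fps = meter.tick()` once per iteration.
3. Whenever `fps` is not `None`, draw `f'FPS {fps:.0f}'` onto the frame.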