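- Request logic

- How to use the decorator

- Defining a coroutine with a fixed concurrency limit

- Collecting the coroutines' results

- Adding a callback to each task

- task

- Results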

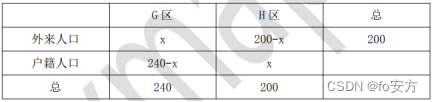

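Here is a crawler I'd like to share. You only need to change its request logic to reuse it for other sites. Python itself may be slow, but asynchronous coroutines are not: at work, they make calling HTTP endpoints blazingly fast.

Request logic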

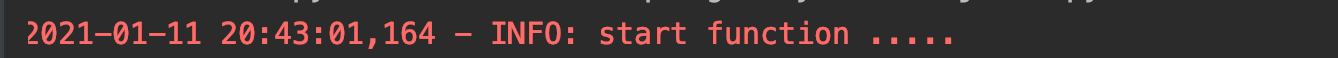

This is a Python decorator. It simply logs when the function starts and how long it took to run, which is quite handy.

    import datetime
    import logging
    from functools import wraps

    def logged(func):
        @wraps(func)  # keep the wrapped function's name and docstring
        def wrapper(*args, **kwargs):
            logger = logging.getLogger(func.__name__)
            start = datetime.datetime.now()
            logger.info("start function .....")
            f = func(*args, **kwargs)
            logger.info("cost time %s" % (datetime.datetime.now() - start))
            return f
        return wrapper

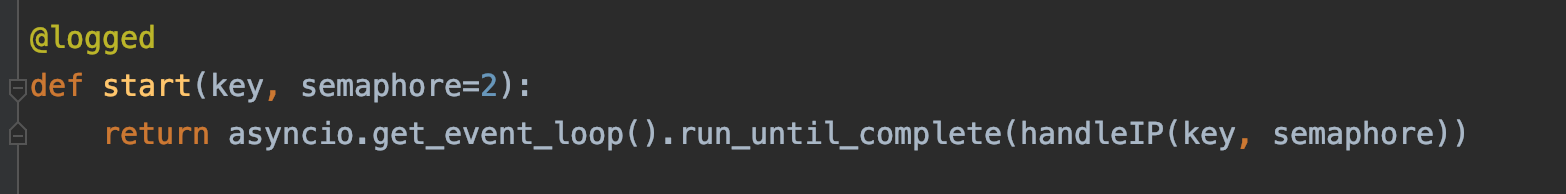

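How to use the decorator

Just put `@` followed by the decorator's name on the line directly above the function definition.

Output when run: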
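As a minimal sketch of that usage, the snippet below applies `logged` to a small function. `slow_add` is a hypothetical example that is not part of the crawler; the decorator body is repeated only so the snippet runs on its own.

```python
import datetime
import logging
from functools import wraps

logging.basicConfig(level=logging.INFO,
                    format="%(asctime)s - %(levelname)s: %(message)s")


def logged(func):
    """Log when func starts and how long it took."""
    @wraps(func)
    def wrapper(*args, **kwargs):
        logger = logging.getLogger(func.__name__)
        start = datetime.datetime.now()
        logger.info("start function .....")
        result = func(*args, **kwargs)
        logger.info("cost time %s", datetime.datetime.now() - start)
        return result
    return wrapper


@logged  # equivalent to: slow_add = logged(slow_add)
def slow_add(a, b):
    """Hypothetical example function, not part of the crawler."""
    return a + b


total = slow_add(1, 2)
```

Each call writes two INFO records, one when the function starts and one with the elapsed time, and the return value passes through unchanged.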

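Defining a coroutine with a fixed concurrency limit

The function below is the entry point, with the semaphore value defaulting to 2. It first obtains an event loop with `asyncio.get_event_loop()`, then calls the loop's `run_until_complete()` method, which runs a future until it finishes and returns its result. What is a future? Think of it as a placeholder for a result that is not available yet; put simply, it holds whatever the coroutine returns once it has run, which here is the fetched page content.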

    def start(key, semaphore=2):
        return asyncio.get_event_loop().run_until_complete(handleIP(key, semaphore))
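On Python 3.7 and later, `asyncio.run()` covers the same steps in one call. The sketch below shows that variant; `fake_handle` is a hypothetical stand-in for `handleIP` so the snippet can run without network access.

```python
import asyncio


async def fake_handle(keys, semaphore):
    """Hypothetical stand-in for handleIP: pretends to fetch each key."""
    await asyncio.sleep(0)  # yield to the event loop once
    return [f"page for {k}" for k in keys]


def start(keys, semaphore=2):
    # asyncio.run creates an event loop, runs the coroutine to completion,
    # closes the loop, and returns the coroutine's result.
    return asyncio.run(fake_handle(keys, semaphore))


pages = start(["a", "b"])
```

This is an alternative to the `get_event_loop().run_until_complete(...)` form used in the article, not a change to it.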

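Collecting the coroutines' results

We first create a global session. The benefit is that connections are pooled and reused: a single open session can keep sending requests to many pages, and `close` releases its resources at the end. Note that the connector caps the pool at 64 simultaneous connections (`limit=64`) so that the I/O load stays manageable. This does not hurt coroutine performance; it only limits how much concurrent I/O the machine takes on.

Next we wrap each coroutine in a task with `asyncio.ensure_future()`; each task is one URL request. We keep the tasks in a list so that we can attach a callback to every one of them and collect its return value. Being fast is pointless if you can't get the results back.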

    async def handleIP(key, SEMAPHORE):
        global session
        session = aiohttp.ClientSession(connector=aiohttp.TCPConnector(limit=64, ssl=False))
        temp = []
        tasks = [asyncio.ensure_future(get(i, j, SEMAPHORE)) for i in range(5) for j in key]
        for task in tasks:
            task.add_done_callback(partial(callback, data=temp))
        await asyncio.gather(*tasks)
        await session.close()
        return temp
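For comparison, `asyncio.gather` also returns the tasks' results itself, in the order the tasks were passed in, so the results can be collected without callbacks. The sketch below illustrates this; `fetch` is a hypothetical coroutine that only sleeps, not the crawler's `get`.

```python
import asyncio


async def fetch(page):
    """Hypothetical stand-in for a request; later pages finish sooner."""
    await asyncio.sleep(0.01 * (3 - page))
    return f"page {page}"


async def main():
    tasks = [asyncio.ensure_future(fetch(p)) for p in range(3)]
    # gather returns results in the order the tasks were passed in,
    # regardless of which one finished first.
    return await asyncio.gather(*tasks)


results = asyncio.run(main())
```

The callback approach used in the article appends results in completion order instead, which is fine when order does not matter.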

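Adding a callback to each task

asyncio runs every callback on the event loop's single thread, one at a time (and CPython has the global interpreter lock on top of that), so adding callbacks involves no locking.

The callback uses a small trick: Python's partial functions. Why? To fix where the results go. All I have to do is hand the callback the list that holds the overall results and let it `append` to it directly, so every result ends up in one list. Because the callbacks run one after another on the same thread, there is no contention on that list.

If you are curious about `partial`, look it up yourself.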
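As a minimal sketch of the idea: `add_done_callback` calls the callback with the finished task as its only argument, and `partial` pre-binds the extra `data` argument. The names `collect` and `square` are hypothetical and stand in for the crawler's callback and request coroutine.

```python
import asyncio
from functools import partial


def collect(future, data):
    """Done-callback: asyncio passes the finished task as the only argument."""
    data.append(future.result())


async def square(n):
    """Hypothetical coroutine standing in for a page request."""
    await asyncio.sleep(0)
    return n * n


async def main():
    results = []
    tasks = [asyncio.ensure_future(square(n)) for n in range(4)]
    for task in tasks:
        # partial(collect, data=results) is a one-argument callable:
        # calling it with a future runs collect(future, data=results).
        task.add_done_callback(partial(collect, data=results))
    await asyncio.gather(*tasks)
    return results


collected = asyncio.run(main())
```

Results arrive in the list in completion order, so sort them or carry an index along if the order matters.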

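    from functools import partial

    def callback(future, data):
        """
        Callback used to collect data
        :param future: the finished task
        :param data: shared list that accumulates the results
        :return: nothing; the shared list is modified in place
        """
        result = future.result()
        # get() returns None when the request failed, so check before encoding
        if result is not None:
            data.append(result.encode(encoding='gbk', errors='ignore'))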

task

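A task is just one URL request; the only thing that differs here is the semaphore. We use Python's built-in `async with` context manager, which acquires the semaphore on entry and releases it on exit, even when the request raises. The semaphore value is 2, which is meant to allow only 2 coroutine requests in flight at any moment. Note, however, that `get` creates a new `asyncio.Semaphore` on every call, so each task holds its own semaphore and the limit is not enforced across tasks; for the cap to take effect, all tasks must share a single semaphore.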

    async def get(i, key, SEMAPHORE):
        global session
        # NOTE: created on every call, so it is not shared between tasks
        semaphore = asyncio.Semaphore(SEMAPHORE)
        ua = UserAgent(verify_ssl=False)
        headers = {
            "Accept": "text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.9",
            "Accept-Encoding": "gzip, deflate",
            "Accept-Language": "zh-CN,zh;q=0.9",
            "Connection": "keep-alive",
            "Cookie": "ip_ck=1Iir1Yu6v8EuMDU3MDcwLjE2MDU2NjY1ODY%3D; z_pro_city=s_provice%3Dbeijing%26s_city%3Dbeijing; userProvinceId=1; userCityId=0; userCountyId=0; userLocationId=1; BAIDU_SSP_lcr=https://www.baidu.com/link?url=J8WdAONe5pXZPY4ESgardpbrejMsHoE_bY92xgWLUHG7mA42iEQkt8lRTrr-adVI&wd=&eqid=9601dc9c00034a24000000025ffc1298; lv=1610355358; vn=4; Hm_lvt_ae5edc2bc4fc71370807f6187f0a2dd0=1610355358; realLocationId=1; userFidLocationId=1; z_day=ixgo20=1&rdetail=9; visited_subcateId=57|0; visited_subcateProId=57-0|0-0; listSubcateId=57; Adshow=3; Hm_lpvt_ae5edc2bc4fc71370807f6187f0a2dd0=1610358703; visited_serachKw=huawei%7Ciphone%7C%u534E%u4E3Amate40pro%7C%u534E%u4E3AMate30; questionnaire_pv=1610323229",
            "Host": "detail.zol.com.cn",
            "Referer": f"https://detail.zol.com.cn/index.php?c=SearchList&kword={parse.quote(key)}",
            "Upgrade-Insecure-Requests": "1",
            "User-Agent": ua.random,
        }
        URL = 'http://detail.zol.com.cn/index.php?c=SearchList&keyword=' + parse.quote(key) + "&page=" + str(i)
        async with semaphore:
            try:
                logging.info('scraping %s', URL)
                async with session.get(URL, headers=headers) as response:
                    await asyncio.sleep(1)
                    return await response.text()
            except aiohttp.ClientError:
                logging.error('error occurred while scraping %s', URL, exc_info=True)
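A semaphore only caps concurrency across tasks when every task uses the same instance. The sketch below shows one way to arrange that, by creating the semaphore once and passing it in; `worker` and the `state` counter are hypothetical and replace the real HTTP request so the effect can be observed.

```python
import asyncio


async def worker(semaphore, state):
    """Hypothetical task body: tracks how many workers run at once."""
    async with semaphore:  # acquired here, released when the block exits
        state["running"] += 1
        state["peak"] = max(state["peak"], state["running"])
        await asyncio.sleep(0.01)  # stands in for the HTTP request
        state["running"] -= 1


async def main(limit, n_tasks):
    # One semaphore, created once and passed to every task.
    semaphore = asyncio.Semaphore(limit)
    state = {"running": 0, "peak": 0}
    await asyncio.gather(*(worker(semaphore, state) for _ in range(n_tasks)))
    return state["peak"]


peak = asyncio.run(main(limit=2, n_tasks=10))
```

With a shared semaphore of 2, at most two workers are ever inside the `async with` block at the same time, so total run time grows with the number of tasks.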

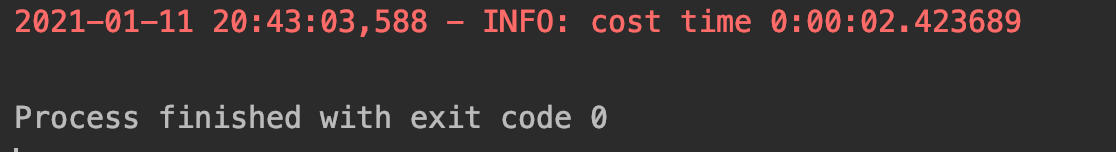

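Results

65 URLs, all requested in under 3 seconds. If you are not convinced, try the same thing yourself with `requests` and see how big the gap is. I can't speak for the Scrapy framework. Go might be even faster, but I'm a novice who only knows a little of it.

Here is the complete code, ready to run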
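To get a feel for where the gap comes from, the sketch below compares awaiting simulated requests one by one with running them concurrently. `fake_request` is a hypothetical coroutine that only sleeps, so the numbers illustrate the principle and are not measurements of the crawler or of `requests`.

```python
import asyncio
import time


async def fake_request(delay):
    """Hypothetical request that just waits for `delay` seconds."""
    await asyncio.sleep(delay)


async def sequential(n, delay):
    # Each request starts only after the previous one has finished.
    for _ in range(n):
        await fake_request(delay)


async def concurrent(n, delay):
    # All requests wait at the same time.
    await asyncio.gather(*(fake_request(delay) for _ in range(n)))


def timed(coro):
    start = time.perf_counter()
    asyncio.run(coro)
    return time.perf_counter() - start


seq_seconds = timed(sequential(20, 0.02))  # waits add up: about 20 * 0.02 s
con_seconds = timed(concurrent(20, 0.02))  # waits overlap: about 0.02 s
```

The sequential version pays for every wait in turn, while the concurrent version pays roughly for the longest single wait.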

    #!/usr/bin/python
    # coding=utf-8
    import re
    import sys
    import json
    import time
    import logging
    import asyncio
    import aiohttp
    import datetime
    from urllib import parse
    from functools import partial
    from functools import wraps
    from pyquery import PyQuery as pq
    from fake_useragent import UserAgent
    from concurrent.futures import ThreadPoolExecutor

    logging.basicConfig(level=logging.INFO,
                        format='%(asctime)s - %(levelname)s: %(message)s')


    def logged(func):
        @wraps(func)
        def wrapper(*args, **kwargs):
            logger = logging.getLogger(func.__name__)
            start = datetime.datetime.now()
            logger.info("start function .....")
            f = func(*args, **kwargs)
            logger.info("cost time %s" % (datetime.datetime.now() - start))
            return f
        return wrapper


    def callback(future, data):
        """
        Callback used to collect data
        :param future: the finished task
        :param data: shared list that accumulates the results
        :return: nothing; the shared list is modified in place
        """
        result = future.result()
        # get() returns None when the request failed, so check before encoding
        if result is not None:
            data.append(result.encode(encoding='gbk', errors='ignore'))


    async def get(i, key, SEMAPHORE):
        global session
        # NOTE: created on every call, so it is not shared between tasks
        semaphore = asyncio.Semaphore(SEMAPHORE)
        ua = UserAgent(verify_ssl=False)
        headers = {
            "Accept": "text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.9",
            "Accept-Encoding": "gzip, deflate",
            "Accept-Language": "zh-CN,zh;q=0.9",
            "Connection": "keep-alive",
            "Cookie": "ip_ck=1Iir1Yu6v8EuMDU3MDcwLjE2MDU2NjY1ODY%3D; z_pro_city=s_provice%3Dbeijing%26s_city%3Dbeijing; userProvinceId=1; userCityId=0; userCountyId=0; userLocationId=1; BAIDU_SSP_lcr=https://www.baidu.com/link?url=J8WdAONe5pXZPY4ESgardpbrejMsHoE_bY92xgWLUHG7mA42iEQkt8lRTrr-adVI&wd=&eqid=9601dc9c00034a24000000025ffc1298; lv=1610355358; vn=4; Hm_lvt_ae5edc2bc4fc71370807f6187f0a2dd0=1610355358; realLocationId=1; userFidLocationId=1; z_day=ixgo20=1&rdetail=9; visited_subcateId=57|0; visited_subcateProId=57-0|0-0; listSubcateId=57; Adshow=3; Hm_lpvt_ae5edc2bc4fc71370807f6187f0a2dd0=1610358703; visited_serachKw=huawei%7Ciphone%7C%u534E%u4E3Amate40pro%7C%u534E%u4E3AMate30; questionnaire_pv=1610323229",
            "Host": "detail.zol.com.cn",
            "Referer": f"https://detail.zol.com.cn/index.php?c=SearchList&kword={parse.quote(key)}",
            "Upgrade-Insecure-Requests": "1",
            "User-Agent": ua.random,
        }
        URL = 'http://detail.zol.com.cn/index.php?c=SearchList&keyword=' + parse.quote(key) + "&page=" + str(i)
        async with semaphore:
            try:
                logging.info('scraping %s', URL)
                async with session.get(URL, headers=headers) as response:
                    await asyncio.sleep(1)
                    return await response.text()
            except aiohttp.ClientError:
                logging.error('error occurred while scraping %s', URL, exc_info=True)


    async def handleIP(key, SEMAPHORE):
        global session
        session = aiohttp.ClientSession(connector=aiohttp.TCPConnector(limit=64, ssl=False))
        temp = []
        tasks = [asyncio.ensure_future(get(i, j, SEMAPHORE)) for i in range(5) for j in key]
        for task in tasks:
            task.add_done_callback(partial(callback, data=temp))
        await asyncio.gather(*tasks)
        await session.close()
        return temp


    @logged
    def start(key, semaphore=2):
        return asyncio.get_event_loop().run_until_complete(handleIP(key, semaphore))


    def parse_html(data):
        dicts = dict()
        for page in data:
            doc = pq(page)
            lic = doc(".list-box .list-item.clearfix").items()
            for li in lic:
                goods = li(".pro-intro .title").text()
                price = li(".price-box .price-type").text()
                pattern = re.compile("([^(]+)")
                phone = pattern.findall(goods)[0].strip()
                # keep the lowest price seen for each phone
                if dicts.get(phone):
                    if price < dicts[phone]:
                        dicts[phone] = price
                else:
                    dicts[phone] = price
        with open("./goods.txt", "w+") as f:
            for item in dicts.items():
                f.write(item[0] + "\t" + item[1] + "\n")


    def main():
        key = ["华为手机", "三星手机", "小米手机", "OPPO", "VIVO", "TCL手机", "酷派手机",
               "中兴手机", "索尼手机", "康佳手机", "联想手机", "360手机", "朵唯手机"]
        result = start(key)
        parse_html(result)


    if __name__ == '__main__':
        main()