pandas简介

pandas 是基于NumPy 的一种工具,该工具是为解决数据分析任务而创建的。Pandas 纳入了大量库和一些标准的数据模型,提供了高效地操作大型数据集所需的工具。pandas提供了大量能使我们快速便捷地处理数据的函数和方法。pandas 是 Python 的核心数据分析支持库,提供了快速、灵活、明确的数据结构,旨在简单、直观地处理关系型、标记型数据。

1.数据读取

首先,pip install pandas 安装Pandas库。

引用pandas库,通常简称为pd,如下:

import pandas as pd1.1获取样本数据-以波士顿房价数据为例

从sklearn.datasets数据集中下载波士顿房价数据:

from sklearn.datasets import load_boston

boston = load_boston()

# 输出对boston数据集的描述

print("波士顿房价的数据集描述是\n", boston.DESCR)运行结果:

波士顿房价的数据集描述是.. _boston_dataset:Boston house prices dataset

---------------------------

**Data Set Characteristics:** :Number of Instances: 506 :Number of Attributes: 13 numeric/categorical predictive. Median Value (attribute 14) is usually the target.:Attribute Information (in order):- CRIM per capita crime rate by town- ZN proportion of residential land zoned for lots over 25,000 sq.ft.- INDUS proportion of non-retail business acres per town- CHAS Charles River dummy variable (= 1 if tract bounds river; 0 otherwise)- NOX nitric oxides concentration (parts per 10 million)- RM average number of rooms per dwelling- AGE proportion of owner-occupied units built prior to 1940- DIS weighted distances to five Boston employment centres- RAD index of accessibility to radial highways- TAX full-value property-tax rate per $10,000- PTRATIO pupil-teacher ratio by town- B 1000(Bk - 0.63)^2 where Bk is the proportion of black people by town- LSTAT % lower status of the population- MEDV Median value of owner-occupied homes in $1000's:Missing Attribute Values: None:Creator: Harrison, D. and Rubinfeld, D.L.This is a copy of UCI ML housing dataset.

https://archive.ics.uci.edu/ml/machine-learning-databases/housing/This dataset was taken from the StatLib library which is maintained at Carnegie Mellon University.

The Boston house-price data of Harrison, D. and Rubinfeld, D.L. 'Hedonic

prices and the demand for clean air', J. Environ. Economics & Management,

vol.5, 81-102, 1978. Used in Belsley, Kuh & Welsch, 'Regression diagnostics

...', Wiley, 1980. N.B. Various transformations are used in the table on

pages 244-261 of the latter.The Boston house-price data has been used in many machine learning papers that address regression

problems. .. topic:: References- Belsley, Kuh & Welsch, 'Regression diagnostics: Identifying Influential Data and Sources of Collinearity', Wiley, 1980. 244-261.- Quinlan,R. (1993). Combining Instance-Based and Model-Based Learning. In Proceedings on the Tenth International Conference of Machine Learning, 236-243, University of Massachusetts, Amherst. Morgan Kaufmann.

波士顿房价数据集的特征共有14种,分别是CRIM(城镇人均犯罪率)、ZN(占地面积超过25000平方英尺的住宅用地比例)、INDUS(非零售商业用地占比)、CHAS(是否临河)、NOX(氮氧化物浓度)、RM(房屋房间数)、AGE(房屋年龄)、DIS(和就业中心的距离)、RAD(是否容易上高速路)、TAX(税率)、PTRATTO(学生人数比老师人数)、B(城镇黑人比例计算的统计值)、LSTAT(低收入人群比例)和MEDV(房价中位数)。原文链接:https://blog.csdn.net/f18896984569/article/details/127759937。

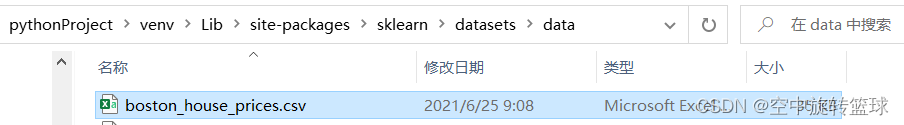

这个数据下载到哪里了呢?我们可以通过打印boston获取位置信息(print(boston)),这里列出部分信息:位置在:D:\\pythonProject\\venv\\lib\\site-packages\\sklearn\\datasets\\data\\boston_house_prices.csv

per $10,000\n - PTRATIO pupil-teacher ratio by town\n - B 1000(Bk - 0.63)^2 where Bk is the proportion of black people by town\n - LSTAT % lower status of the population\n - MEDV Median value of owner-occupied homes in $1000's\n\n :Missing Attribute Values: None\n\n :Creator: Harrison, D. and Rubinfeld, D.L.\n\nThis is a copy of UCI ML housing dataset.\nhttps://archive.ics.uci.edu/ml/machine-learning-databases/housing/\n\n\nThis dataset was taken from the StatLib library which is maintained at Carnegie Mellon University.\n\nThe Boston house-price data of Harrison, D. and Rubinfeld, D.L. 'Hedonic\nprices and the demand for clean air', J. Environ. Economics & Management,\nvol.5, 81-102, 1978. Used in Belsley, Kuh & Welsch, 'Regression diagnostics\n...', Wiley, 1980. N.B. Various transformations are used in the table on\npages 244-261 of the latter.\n\nThe Boston house-price data has been used in many machine learning papers that address regression\nproblems. \n \n.. topic:: References\n\n - Belsley, Kuh & Welsch, 'Regression diagnostics: Identifying Influential Data and Sources of Collinearity', Wiley, 1980. 244-261.\n - Quinlan,R. (1993). Combining Instance-Based and Model-Based Learning. In Proceedings on the Tenth International Conference of Machine Learning, 236-243, University of Massachusetts, Amherst. Morgan Kaufmann.\n", 'filename': 'D:\\pythonProject\\venv\\lib\\site-packages\\sklearn\\datasets\\data\\boston_house_prices.csv'}Process finished with exit code 0

我们打开路径可以看到:

显示时间不是当前时间,说明之前已经下载过。

打开数据如下,显示前面11行:

CRIM | ZN | INDUS | CHAS | NOX | RM | AGE | DIS | RAD | TAX | PTRATIO | B | LSTAT | MEDV |

0.00632 | 18 | 2.31 | 0 | 0.538 | 6.575 | 65.2 | 4.09 | 1 | 296 | 15.3 | 396.9 | 4.98 | 24 |

0.02731 | 0 | 7.07 | 0 | 0.469 | 6.421 | 78.9 | 4.9671 | 2 | 242 | 17.8 | 396.9 | 9.14 | 21.6 |

0.02729 | 0 | 7.07 | 0 | 0.469 | 7.185 | 61.1 | 4.9671 | 2 | 242 | 17.8 | 392.83 | 4.03 | 34.7 |

0.03237 | 0 | 2.18 | 0 | 0.458 | 6.998 | 45.8 | 6.0622 | 3 | 222 | 18.7 | 394.63 | 2.94 | 33.4 |

0.06905 | 0 | 2.18 | 0 | 0.458 | 7.147 | 54.2 | 6.0622 | 3 | 222 | 18.7 | 396.9 | 5.33 | 36.2 |

0.02985 | 0 | 2.18 | 0 | 0.458 | 6.43 | 58.7 | 6.0622 | 3 | 222 | 18.7 | 394.12 | 5.21 | 28.7 |

0.08829 | 12.5 | 7.87 | 0 | 0.524 | 6.012 | 66.6 | 5.5605 | 5 | 311 | 15.2 | 395.6 | 12.43 | 22.9 |

0.14455 | 12.5 | 7.87 | 0 | 0.524 | 6.172 | 96.1 | 5.9505 | 5 | 311 | 15.2 | 396.9 | 19.15 | 27.1 |

0.21124 | 12.5 | 7.87 | 0 | 0.524 | 5.631 | 100 | 6.0821 | 5 | 311 | 15.2 | 386.63 | 29.93 | 16.5 |

0.17004 | 12.5 | 7.87 | 0 | 0.524 | 6.004 | 85.9 | 6.5921 | 5 | 311 | 15.2 | 386.71 | 17.1 | 18.9 |

0.22489 | 12.5 | 7.87 | 0 | 0.524 | 6.377 | 94.3 | 6.3467 | 5 | 311 | 15.2 | 392.52 | 20.45 | 15 |

第一行显示数据有506行记录,13个变量,最后一列为房价中位数。我们将第一行删除掉便于数据操作。把文件复制到当前路径下与操作,另存为一份Excel格式。

excel文件读取

def read_excel(io: {engine, parse},sheet_name: int = 0,header: int = 0,names: Any = None,index_col: Any = None,usecols: Any = None,squeeze: bool = False,dtype: Any = None,engine: {__ne__} = None,converters: Any = None,true_values: Any = None,false_values: Any = None,skiprows: Any = None,nrows: Any = None,na_values: Any = None,keep_default_na: bool = True,na_filter: bool = True,verbose: bool = False,parse_dates: bool = False,date_parser: Any = None,thousands: Any = None,comment: Any = None,skipfooter: int = 0,convert_float: bool = True,mangle_dupe_cols: bool = True,storage_options: Optional[Dict[str, Any]] = None)示例:读取excel文件数据,默认读取所有数据:

df=pd.read_excel('boston_house_prices.xls')

print(df)csv文件读取

read_csv函数中参数更多:

def read_csv(filepath_or_buffer: PathLike[str],sep: Any = lib.no_default,delimiter: Any = None,header: str = "infer",names: Any = None,index_col: Any = None,usecols: Any = None,squeeze: bool = False,prefix: Any = None,mangle_dupe_cols: bool = True,dtype: Any = None,engine: Any = None,converters: Any = None,true_values: Any = None,false_values: Any = None,skipinitialspace: bool = False,skiprows: Any = None,skipfooter: int = 0,nrows: Any = None,na_values: Any = None,keep_default_na: bool = True,na_filter: bool = True,verbose: bool = False,skip_blank_lines: bool = True,parse_dates: bool = False,infer_datetime_format: bool = False,keep_date_col: bool = False,date_parser: Any = None,dayfirst: bool = False,cache_dates: bool = True,iterator: bool = False,chunksize: Any = None,compression: str = "infer",thousands: Any = None,decimal: str = ".",lineterminator: Any = None,quotechar: str = '\"',quoting: int = csv.QUOTE_MINIMAL,doublequote: bool = True,escapechar: Any = None,comment: Any = None,encoding: Any = None,dialect: Any = None,error_bad_lines: bool = True,warn_bad_lines: bool = True,delim_whitespace: bool = False,low_memory: Optional[bool] = _c_parser_defaults["low_memory"],memory_map: bool = False,float_precision: Any = None,storage_options: Optional[Dict[str, Any]] = None)示例:读取csv数据,默认读取前5行:

df = pd.read_csv(# 该参数为数据在电脑中的路径,可以不填写filepath_or_buffer='boston_house_prices.csv',# 该参数代表数据的分隔符,csv文件默认是逗号。其他常见的是'\t'sep=',',# 该参数代表跳过数据文件的的第1行不读入# skiprows=1,# nrows,只读取前n行数据,若不指定,读入全部的数据nrows=5,

)2.数据保存

excel文件保存,需要import xlwt

df.to_excel('boston_part.xls')csv文件保存

df.to_csv('boston_part.csv')3.数据指定位置读取与切片

可通过iloc方法来实现

newdf=df.iloc[:,:] ,索引从0开始

示例:读取指定位置数据,比如第5行第5列数据

df = pd.read_csv('boston_house_prices.csv')

df=df.iloc[4,4]读取5行5列数据:

df = pd.read_csv('boston_house_prices.csv')

df=df.iloc[:5,:5]

print(df)结果如下:

CRIM ZN INDUS CHAS NOX

0 0.00632 18.0 2.31 0 0.538

1 0.02731 0.0 7.07 0 0.469

2 0.02729 0.0 7.07 0 0.469

3 0.03237 0.0 2.18 0 0.458

4 0.06905 0.0 2.18 0 0.458读取指定位置5行数据所有列:

df = pd.read_csv('boston_house_prices.csv')

df=df.iloc[10:15,:]

print(df)运行结果:

CRIM ZN INDUS CHAS NOX ... TAX PTRATIO B LSTAT MEDV

10 0.22489 12.5 7.87 0 0.524 ... 311 15.2 392.52 20.45 15.0

11 0.11747 12.5 7.87 0 0.524 ... 311 15.2 396.90 13.27 18.9

12 0.09378 12.5 7.87 0 0.524 ... 311 15.2 390.50 15.71 21.7

13 0.62976 0.0 8.14 0 0.538 ... 307 21.0 396.90 8.26 20.4

14 0.63796 0.0 8.14 0 0.538 ... 307 21.0 380.02 10.26 18.2同样的,读取指定列所有行也是一样的。

4.数据合并连接

pd.concat([df1,df2],axis=1) 横向合并数据

df = pd.read_csv('boston_house_prices.csv')

df1=df.iloc[:,:13]

df2=df.iloc[:,13]

print(df1,df2)

df3=pd.concat([df1,df2],axis=1)

print(df3)纵向合并数据:

df = pd.read_csv('boston_house_prices.csv')

df1=df.iloc[:5,:]

df2=df.iloc[5:10,:]

print(df1,df2)

df3=pd.concat([df1,df2],axis=0)

print(df3)5.根据条件读取数据

只选择中位数房价大于30的数据。df['MEDV']>30

df = pd.read_csv('boston_house_prices.csv')

df=df[df['MEDV']>30]

print(df)6.根据条件删除数据

删除房价大于30的数据:

indexname=df[df['MEDV']>30].index

df.drop(index,Inplace=True)7.统计函数

df = pd.read_csv('boston_house_prices.csv')

print(df['MEDV'].mean()) # 求一整列的均值,返回一个数。会自动排除空值。

print(df[['MEDV', 'LSTAT']].mean()) # 求两列的均值,返回两个数,Series

print(df[['MEDV', 'LSTAT']])

print(df[['MEDV', 'LSTAT']].mean(axis=1)) # 求两列的均值,返回DataFrame。axis=0或者1要搞清楚。

#axis=1,代表对整几列进行操作。axis=0(默认)代表对几行进行操作。实际中弄混很正常,到时候试一下就知道了。

print(df['MEDV'].max()) # 最大值

print(df['MEDV'].min()) # 最小值

print(df['MEDV'].std()) # 标准差

print(df['MEDV'].count()) # 非空的数据的数量

print(df['MEDV'].median()) # 中位数

print(df['MEDV'].quantile(0.25)) # 25%分位数8.数据排序

8.1 按索引排序

函数:sort_index()是 pandas 中按索引排序的函数,默认情况下, sort_index 是按行索引升序排序。

df = pd.read_csv('boston_house_prices.csv',nrows=5,index_col=['CRIM'],#设置该属性为索引列usecols = ['CRIM', 'ZN', 'INDUS', 'CHAS', 'NOX', 'RM', 'AGE', 'DIS'])

print(df)

df1=df.sort_index()

print('sort_index:')

print(df1)运行结果:

ZN INDUS CHAS NOX RM AGE DIS

CRIM

0.00632 18 2.31 0 0.538 6.575 65.2 4.0900

0.02731 0 7.07 0 0.469 6.421 78.9 4.9671

0.02729 0 7.07 0 0.469 7.185 61.1 4.9671

0.03237 0 2.18 0 0.458 6.998 45.8 6.0622

0.06905 0 2.18 0 0.458 7.147 54.2 6.0622

sort_index:ZN INDUS CHAS NOX RM AGE DIS

CRIM

0.00632 18 2.31 0 0.538 6.575 65.2 4.0900

0.02729 0 7.07 0 0.469 7.185 61.1 4.9671

0.02731 0 7.07 0 0.469 6.421 78.9 4.9671

0.03237 0 2.18 0 0.458 6.998 45.8 6.0622

0.06905 0 2.18 0 0.458 7.147 54.2 6.0622默认索引就是从小到达排序的.我们反序排列:

df = pd.read_csv('boston_house_prices.csv',nrows=5,index_col=['CRIM'],usecols = ['CRIM', 'ZN', 'INDUS', 'CHAS', 'NOX', 'RM', 'AGE', 'DIS'])

print(df)

df1=df.sort_index(ascending=False)

print('sort_index:')

print(df1) ZN INDUS CHAS NOX RM AGE DIS

CRIM

0.00632 18 2.31 0 0.538 6.575 65.2 4.0900

0.02731 0 7.07 0 0.469 6.421 78.9 4.9671

0.02729 0 7.07 0 0.469 7.185 61.1 4.9671

0.03237 0 2.18 0 0.458 6.998 45.8 6.0622

0.06905 0 2.18 0 0.458 7.147 54.2 6.0622

sort_index:ZN INDUS CHAS NOX RM AGE DIS

CRIM

0.06905 0 2.18 0 0.458 7.147 54.2 6.0622

0.03237 0 2.18 0 0.458 6.998 45.8 6.0622

0.02731 0 7.07 0 0.469 6.421 78.9 4.9671

0.02729 0 7.07 0 0.469 7.185 61.1 4.9671

0.00632 18 2.31 0 0.538 6.575 65.2 4.09008.2按数值排序

sort_values() 中设置单个列的列名称,可以对单个列进行排序,通过设置参数 ascending 可以设置升序或降序排列,默认升序排序。

df = pd.read_csv('boston_house_prices.csv',nrows=5,index_col=['CRIM'],usecols = ['CRIM', 'ZN', 'INDUS', 'CHAS', 'NOX', 'RM', 'AGE', 'DIS'])

print(df)

df1=df.sort_values('NOX')

print('sort_values:')

print(df1) ZN INDUS CHAS NOX RM AGE DIS

CRIM

0.00632 18 2.31 0 0.538 6.575 65.2 4.0900

0.02731 0 7.07 0 0.469 6.421 78.9 4.9671

0.02729 0 7.07 0 0.469 7.185 61.1 4.9671

0.03237 0 2.18 0 0.458 6.998 45.8 6.0622

0.06905 0 2.18 0 0.458 7.147 54.2 6.0622

sort_values:ZN INDUS CHAS NOX RM AGE DIS

CRIM

0.03237 0 2.18 0 0.458 6.998 45.8 6.0622

0.06905 0 2.18 0 0.458 7.147 54.2 6.0622

0.02731 0 7.07 0 0.469 6.421 78.9 4.9671

0.02729 0 7.07 0 0.469 7.185 61.1 4.9671

0.00632 18 2.31 0 0.538 6.575 65.2 4.0900