这个系列写了好几篇文章,这是相关文章的索引,仅供参考:

接上文《深度学习主机攒机小记》,这台GTX1080主机准备好之后,就是配置深度学习环境了,这里选择了比较熟悉Ubuntu系统,不过是最新的16.04版本,另外在Nvidia GTX1080的基础上安装相关GPU驱动,外加CUDA8.0,因为都比较新,所以踩了很多坑。

1. 安装Ubuntu16.04

不考虑双系统,直接安装 Ubuntu16.04,从ubuntu官方下载64位版本: ubuntu-16.04-desktop-amd64.iso 。

在MAC下制作了 Ubuntu USB 安装盘,具体方法可参考: 在MAC下使用ISO制作Linux的安装USB盘,之后通过Bios引导U盘启动安装Ubuntu系统:

1)一开始安装就踩了一个坑,选择"Install Ubuntu"回车后过一会儿屏幕显示“输入不支持”,google了好多方案,最终和ubuntu对显卡的支持有关,需要手动添加显卡选项: nomodeset,使其支持Nvidia系列显卡,参考:安装ubuntu黑屏问题的解决 or How do I set 'nomodeset' after I've already installed Ubuntu?

2) 磁盘分区,全部干掉之前主机自带的Window 10系统,分区为 /boot, /, /home 等几个目录,同时把第二块4T硬盘也挂载了上去,作为数据盘。

3)安装完毕后Ubuntu 16.04的分辨率很低,在显卡驱动未安装之前,可以手动修改一下grub文件:

sudo vim /etc/default/grub

# The resolution used on graphical terminal

# note that you can use only modes which your graphic card supports via VBE

# you can see them in real GRUB with the command `vbeinfo'

#GRUB_GFXMODE=640x480

# 这里分辨率自行设置

GRUB_GFXMODE=1024x768

sudo update-grub

4)安装SSH Server,这样可以远程ssh访问这台GTX1080主机:

sudo apt-get install openssh-server

5)更新Ubuntu16.04源,用的是中科大的源:

cd /etc/apt/

sudo cp sources.list sources.list.bak

sudo vi sources.list

把下面的这些源添加到source.list文件头部:

deb http://mirrors.ustc.edu.cn/ubuntu/ xenial main restricted universe multiverse

deb http://mirrors.ustc.edu.cn/ubuntu/ xenial-security main restricted universe multiverse

deb http://mirrors.ustc.edu.cn/ubuntu/ xenial-updates main restricted universe multiverse

deb http://mirrors.ustc.edu.cn/ubuntu/ xenial-proposed main restricted universe multiverse

deb http://mirrors.ustc.edu.cn/ubuntu/ xenial-backports main restricted universe multiverse

deb-src http://mirrors.ustc.edu.cn/ubuntu/ xenial main restricted universe multiverse

deb-src http://mirrors.ustc.edu.cn/ubuntu/ xenial-security main restricted universe multiverse

deb-src http://mirrors.ustc.edu.cn/ubuntu/ xenial-updates main restricted universe multiverse

deb-src http://mirrors.ustc.edu.cn/ubuntu/ xenial-proposed main restricted universe multiverse

deb-src http://mirrors.ustc.edu.cn/ubuntu/ xenial-backports main restricted universe multiverse

最后更新源和更新已安装的包:

sudo apt-get update

sudo apt-get upgrade

2. 安装GTX1080驱动

安装 Nvidia 驱动 367.27

sudo add-apt-repository ppa:graphics-drivers/ppa

第一次运行出现如下的警告:

Fresh drivers from upstream, currently shipping Nvidia.

## Current Status

We currently recommend: `nvidia-361`, Nvidia's current long lived branch.

For GeForce 8 and 9 series GPUs use `nvidia-340`

For GeForce 6 and 7 series GPUs use `nvidia-304`

## What we're working on right now:

- Normal driver updates

- Investigating how to bring this goodness to distro on a cadence.

## WARNINGS:

This PPA is currently in testing, you should be experienced with packaging before you dive in here. Give us a few days to sort out the kinks.

Volunteers welcome! See also: https://github.com/mamarley/nvidia-graphics-drivers/

http://www.ubuntu.com/download/desktop/contribute

更多信息: https://launchpad.net/~graphics-drivers/+archive/ubuntu/ppa

按回车继续或者 Ctrl+c 取消添加

回车后继续:

sudo apt-get update

sudo apt-get install nvidia-367

sudo apt-get install mesa-common-dev

sudo apt-get install freeglut3-dev

之后重启系统让GTX1080显卡驱动生效。

3. 下载和安装CUDA

在安装CUDA之前,google了一下,发现在Ubuntu16.04下安装CUDA7.5问题多多,幸好CUDA8已出,支持GTX1080:

New in CUDA 8

Pascal Architecture Support

Out of box performance improvements on Tesla P100, supports GeForce GTX 1080

Simplify programming using Unified memory on Pascal including support for large datasets, concurrent data access and atomics*

Optimize Unified Memory performance using new data migration APIs*

Faster Deep Learning using optimized cuBLAS routines for native FP16 computation

Developer Tools

Quickly identify latent system-level bottlenecks using the new critical path analysis feature

Improve productivity with up to 2x faster NVCC compilation speed

Tune OpenACC applications and overall host code using new profiling extensions

Libraries

Accelerate graph analytics algorithms with nvGRAPH

New cuBLAS matrix multiply optimizations for matrices with sizes smaller than 512 and for batched operation

不过下载CUDA需要注册和登陆NVIDIA开发者账号,CUDA8下载页面提供了很详细的系统选择和安装说明,

这里选择了Ubuntu16.04系统runfile安装方案,千万不要选择deb方案,前方无数坑:

下载的“cuda_8.0.27_linux.run”有1.4G,按照Nivdia官方给出的方法安装CUDA8:

sudo sh cuda_8.0.27_linux.run --tmpdir=/opt/temp/

这里加了--tmpdir主要是直接运行“sudo sh cuda_8.0.27_linux.run”会提示空间不足的错误,其实是全新的电脑主机,硬盘足够大的,google了以下发现加个tmpdir就可以了:

Not enough space on parition mounted at /.

Need 5091561472 bytes.

Disk space check has failed. Installation cannot continue.

执行后会有一系列提示让你确认,非常非常非常非常关键的地方是是否安装361这个低版本的驱动:

Install NVIDIA Accelerated Graphics Driver for Linux-x86_64 361.62?

答案必须是n,否则之前安装的GTX1080驱动就白费了,而且问题多多。

Logging to /opt/temp//cuda_install_6583.log

Using more to view the EULA.

End User License Agreement

--------------------------

Preface

-------

The following contains specific license terms and conditions

for four separate NVIDIA products. By accepting this

agreement, you agree to comply with all the terms and

conditions applicable to the specific product(s) included

herein.

Do you accept the previously read EULA?

accept/decline/quit: accept

Install NVIDIA Accelerated Graphics Driver for Linux-x86_64 361.62?

(y)es/(n)o/(q)uit: n

Install the CUDA 8.0 Toolkit?

(y)es/(n)o/(q)uit: y

Enter Toolkit Location

[ default is /usr/local/cuda-8.0 ]:

Do you want to install a symbolic link at /usr/local/cuda?

(y)es/(n)o/(q)uit: y

Install the CUDA 8.0 Samples?

(y)es/(n)o/(q)uit: y

Enter CUDA Samples Location

[ default is /home/textminer ]:

Installing the CUDA Toolkit in /usr/local/cuda-8.0 ...

Installing the CUDA Samples in /home/textminer ...

Copying samples to /home/textminer/NVIDIA_CUDA-8.0_Samples now...

Finished copying samples.

===========

= Summary =

===========

Driver: Not Selected

Toolkit: Installed in /usr/local/cuda-8.0

Samples: Installed in /home/textminer

Please make sure that

- PATH includes /usr/local/cuda-8.0/bin

- LD_LIBRARY_PATH includes /usr/local/cuda-8.0/lib64, or, add /usr/local/cuda-8.0/lib64 to /etc/ld.so.conf and run ldconfig as root

To uninstall the CUDA Toolkit, run the uninstall script in /usr/local/cuda-8.0/bin

Please see CUDA_Installation_Guide_Linux.pdf in /usr/local/cuda-8.0/doc/pdf for detailed information on setting up CUDA.

***WARNING: Incomplete installation! This installation did not install the CUDA Driver. A driver of version at least 361.00 is required for CUDA 8.0 functionality to work.

To install the driver using this installer, run the following command, replacing with the name of this run file:

sudo .run -silent -driver

Logfile is /opt/temp//cuda_install_6583.log

安装完毕后,再声明一下环境变量,并将其写入到 ~/.bashrc 的尾部:

export PATH=/usr/local/cuda-8.0/bin\${PATH:+:\${PATH}}

export LD_LIBRARY_PATH=/usr/local/cuda-8.0/lib64\${LD_LIBRARY_PATH:+:\${LD_LIBRARY_PATH}}

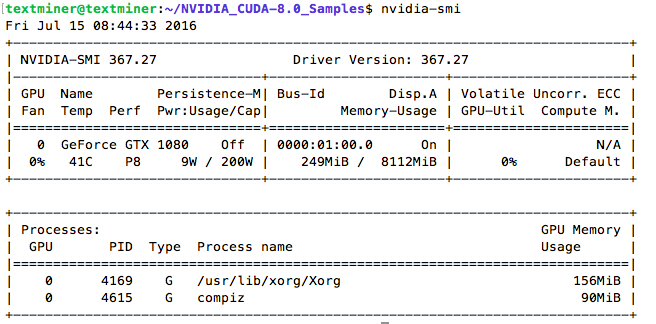

最后再来测试一下CUDA,运行:

nvidia-smi

结果如下所示:

再来试几个CUDA例子:

cd 1_Utilities/deviceQuery

make

这里如果提示gcc版本过高,可以安装低版本的gcc并做软连接替换,具体方法请自行google,我用低版本的gcc4.9替换了ubuntu16.04自带的gcc5.x版本。

"/usr/local/cuda-8.0"/bin/nvcc -ccbin g++ -I../../common/inc -m64 -gencode arch=compute_20,code=sm_20 -gencode arch=compute_30,code=sm_30 -gencode arch=compute_35,code=sm_35 -gencode arch=compute_37,code=sm_37 -gencode arch=compute_50,code=sm_50 -gencode arch=compute_52,code=sm_52 -gencode arch=compute_60,code=sm_60 -gencode arch=compute_60,code=compute_60 -o deviceQuery.o -c deviceQuery.cpp

"/usr/local/cuda-8.0"/bin/nvcc -ccbin g++ -m64 -gencode arch=compute_20,code=sm_20 -gencode arch=compute_30,code=sm_30 -gencode arch=compute_35,code=sm_35 -gencode arch=compute_37,code=sm_37 -gencode arch=compute_50,code=sm_50 -gencode arch=compute_52,code=sm_52 -gencode arch=compute_60,code=sm_60 -gencode arch=compute_60,code=compute_60 -o deviceQuery deviceQuery.o

mkdir -p ../../bin/x86_64/linux/release

cp deviceQuery ../../bin/x86_64/linux/release

执行 ./deviceQuery ,得到:

./deviceQuery Starting...

CUDA Device Query (Runtime API) version (CUDART static linking)

Detected 1 CUDA Capable device(s)

Device 0: "GeForce GTX 1080"

CUDA Driver Version / Runtime Version 8.0 / 8.0

CUDA Capability Major/Minor version number: 6.1

Total amount of global memory: 8112 MBytes (8506179584 bytes)

(20) Multiprocessors, (128) CUDA Cores/MP: 2560 CUDA Cores

GPU Max Clock rate: 1835 MHz (1.84 GHz)

Memory Clock rate: 5005 Mhz

Memory Bus Width: 256-bit

L2 Cache Size: 2097152 bytes

Maximum Texture Dimension Size (x,y,z) 1D=(131072), 2D=(131072, 65536), 3D=(16384, 16384, 16384)

Maximum Layered 1D Texture Size, (num) layers 1D=(32768), 2048 layers

Maximum Layered 2D Texture Size, (num) layers 2D=(32768, 32768), 2048 layers

Total amount of constant memory: 65536 bytes

Total amount of shared memory per block: 49152 bytes

Total number of registers available per block: 65536

Warp size: 32

Maximum number of threads per multiprocessor: 2048

Maximum number of threads per block: 1024

Max dimension size of a thread block (x,y,z): (1024, 1024, 64)

Max dimension size of a grid size (x,y,z): (2147483647, 65535, 65535)

Maximum memory pitch: 2147483647 bytes

Texture alignment: 512 bytes

Concurrent copy and kernel execution: Yes with 2 copy engine(s)

Run time limit on kernels: Yes

Integrated GPU sharing Host Memory: No

Support host page-locked memory mapping: Yes

Alignment requirement for Surfaces: Yes

Device has ECC support: Disabled

Device supports Unified Addressing (UVA): Yes

Device PCI Domain ID / Bus ID / location ID: 0 / 1 / 0

Compute Mode:

< Default (multiple host threads can use ::cudaSetDevice() with device simultaneously) >

deviceQuery, CUDA Driver = CUDART, CUDA Driver Version = 8.0, CUDA Runtime Version = 8.0, NumDevs = 1, Device0 = GeForce GTX 1080

Result = PASS

再测试试一下nobody:

cd ../../5_Simulations/nbody/

make

执行:

./nbody -benchmark -numbodies=256000 -device=0

得到:

> Windowed mode

> Simulation data stored in video memory

> Single precision floating point simulation

> 1 Devices used for simulation

gpuDeviceInit() CUDA Device [0]: "GeForce GTX 1080

> Compute 6.1 CUDA device: [GeForce GTX 1080]

number of bodies = 256000

256000 bodies, total time for 10 iterations: 2291.469 ms

= 286.000 billion interactions per second

= 5719.998 single-precision GFLOP/s at 20 flops per interaction