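ElasticSearch Introduction

Motivation

- Searching across massive amounts of data: MySQL is too slow when searching large data sets.

- Even when the keywords are not exact, the desired data can still be found.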

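About ES

ES is a search engine framework written in Java on top of Lucene. It provides distributed full-text search, stores and retrieves data in near real time, exposes a unified RESTful web API, and offers official client APIs for many languages.

Lucene: Lucene is the low-level search engine library underneath ES. It is essentially a jar containing ready-made code, including the various algorithms, for building inverted indexes and running searches.

Full-text search means that an indexing program scans every word in a text and builds an index entry for each one, recording how many times and where the word occurs. When a user searches, the keywords are looked up in the term dictionary to find the matching content.

Structured search, by contrast: "which products belong to the household-chemicals category?", i.e. `select * from products where category_id = 'household_chemicals'`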

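ES vs. Solr

- Solr is faster than ES when querying static data. When the data changes in real time, however, Solr's query speed drops considerably, while ES's query performance stays essentially the same.

- A Solr cluster depends on ZooKeeper for coordination. ES supports clustering natively, with no third-party component.

- Solr has relatively little Chinese-language documentation. Since ES appeared its popularity has risen sharply, and its documentation is very complete.

- ES has particularly good support for cloud computing and big data.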

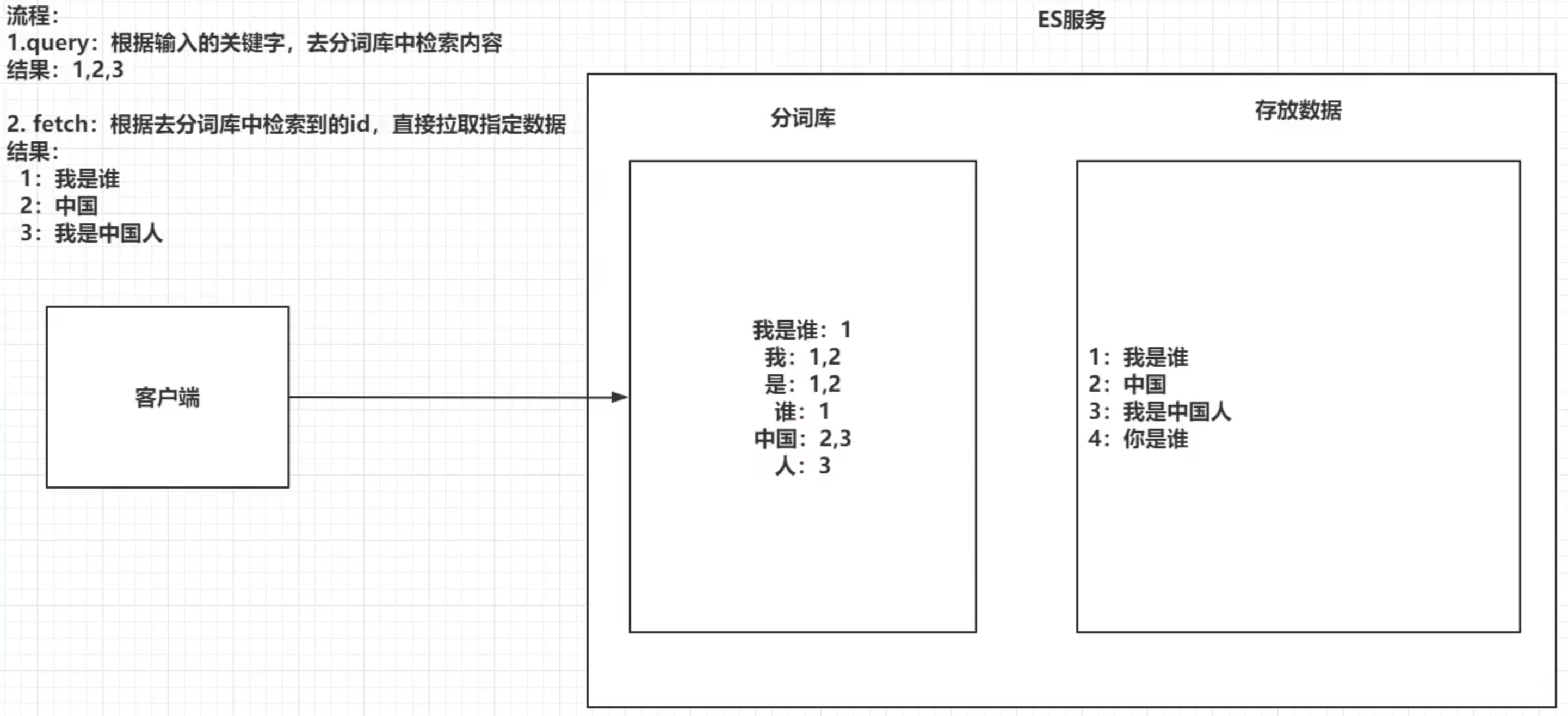

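Inverted Index

- The stored data is tokenized in a defined way, and the resulting terms are kept in a separate term dictionary.

- When a user searches, the query keywords are tokenized as well.

- The query terms are matched against the term dictionary, which yields the ids of the matching documents.

- Using those ids, the actual documents are fetched from where the data is stored.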
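The four steps above can be sketched as a toy in-memory inverted index. This is only an illustration, not how Lucene actually stores its index: tokenization is a plain lowercase whitespace split standing in for a real analyzer such as ik, there is no relevance scoring, and the class and method names are hypothetical.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.TreeSet;

// Toy in-memory inverted index (hypothetical class, not Lucene's data structure).
public class InvertedIndexDemo {
    // "Term dictionary": term -> ids of the documents containing it
    private final Map<String, Set<Integer>> termToIds = new HashMap<>();
    // Where the original documents live, looked up by id
    private final Map<Integer, String> store = new HashMap<>();

    // Stand-in for an analyzer: lowercase, then split on whitespace
    static List<String> tokenize(String text) {
        List<String> terms = new ArrayList<>();
        for (String t : text.toLowerCase().split("\\s+")) {
            if (!t.isEmpty()) {
                terms.add(t);
            }
        }
        return terms;
    }

    // Step 1: store the document and record each of its terms in the dictionary
    public void add(int id, String text) {
        store.put(id, text);
        for (String term : tokenize(text)) {
            termToIds.computeIfAbsent(term, k -> new TreeSet<>()).add(id);
        }
    }

    // Steps 2-4: tokenize the query, collect ids of any matching term, fetch documents by id
    public List<String> search(String query) {
        Set<Integer> ids = new TreeSet<>();
        for (String term : tokenize(query)) {
            ids.addAll(termToIds.getOrDefault(term, Collections.emptySet()));
        }
        List<String> docs = new ArrayList<>();
        for (int id : ids) {
            docs.add(store.get(id));
        }
        return docs;
    }

    public static void main(String[] args) {
        InvertedIndexDemo idx = new InvertedIndexDemo();
        idx.add(1, "elasticsearch is built on lucene");
        idx.add(2, "mysql is a relational database");
        idx.add(3, "lucene builds an inverted index");
        // prints [elasticsearch is built on lucene, lucene builds an inverted index]
        System.out.println(idx.search("Lucene index"));
    }
}
```

The query "Lucene index" is tokenized into `lucene` and `index`; the dictionary maps them to ids {1, 3} and {3}, so documents 1 and 3 are fetched without scanning document 2 at all.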

Installing ElasticSearch

Installing ES & Kibana

Installing ES (with docker-compose)

```yaml
version: "3.1"
services:
  elasticsearch:
    image: daocloud.io/library/elasticsearch:6.5.4
    restart: always
    container_name: elasticsearch
    environment:
      # Heap size must be set explicitly: ES defaults to a 2g heap, which runs out of memory on a small host
      - "ES_JAVA_OPTS=-Xms128m -Xmx256m"
      - "discovery.type=single-node"
      - "COMPOSE_PROJECT_NAME=elasticsearch-server"
    ports:
      - 9200:9200
  kibana:
    image: daocloud.io/library/kibana:6.5.4
    restart: always
    container_name: kibana
    ports:
      - 5601:5601
    environment:
      # Kibana 6.x reads the ES address from ELASTICSEARCH_URL
      - ELASTICSEARCH_URL=http://115.159.222.145:9200
    depends_on:
      - elasticsearch
```

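ES directory layout

- bin: startup scripts

- config: configuration files
  - log4j2.properties: logging configuration
  - jvm.options: JVM settings, including the heap size; lower it if the machine is short on memory
  - elasticsearch.yml: the Elasticsearch configuration file (HTTP port 9200 by default)

- lib: dependency jars

- logs: log files

- modules: built-in feature modules

- plugins: plugins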

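Troubleshooting: elasticsearch fails to start

elasticsearch exited with code 78

Fix:

Switch to the root user on the host machine.

Run:

sysctl -w vm.max_map_count=262144

Check the result:

sysctl -a|grep vm.max_map_count

Expected output:

vm.max_map_count = 262144

This change is lost when the machine reboots. To make it permanent, append the following line to the end of /etc/sysctl.conf:

vm.max_map_count=262144

If the problem persists, check the server's memory: it may be insufficient, and some memory needs to be freed.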

Testing after a successful start

Open ES in a browser:

http://host:9200

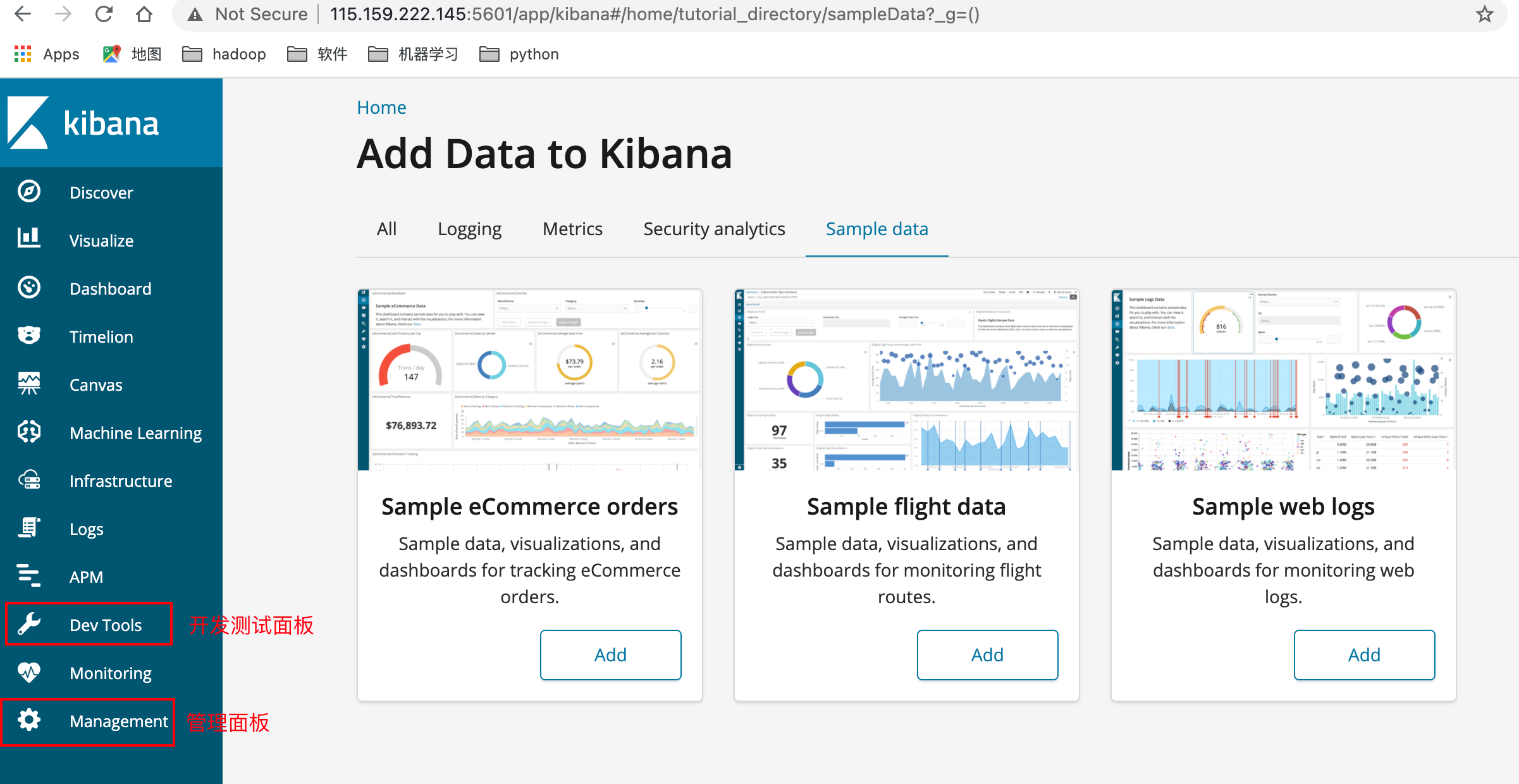

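Installing Kibana

Kibana is an open-source analytics and visualization platform for ElasticSearch, used to search, view, and interact with the data stored in ES indices. It supports advanced data analysis and presentation through a variety of charts.

It is simple to use and presents data intuitively.

Then open Kibana: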

http://host:5601

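Installing the ES visualization plugin head

- Download: http://mobz.github.io/elasticsearch-head

- Start it:

  ```
  npm install
  npm run start
  ```

- Fix the cross-origin (CORS) problem by adding the following to the ES configuration file elasticsearch.yml:

  ```yaml
  http.cors.enabled: true
  http.cors.allow-origin: "*"
  ```

- Restart the ES server and connect again.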

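Installing the ik analyzer

ik analyzer download URL:

https://github.com/medcl/elasticsearch-analysis-ik/releases/download/v6.5.4/elasticsearch-analysis-ik-6.5.4.zip

Find the ES container:

docker ps | grep elastic

Enter the ES container (for example with `docker exec -it <container> bash`) and install the ik analyzer with the elasticsearch-plugin script in the bin/ directory:

./elasticsearch-plugin install https://github.com/medcl/elasticsearch-analysis-ik/releases/download/v6.5.4/elasticsearch-analysis-ik-6.5.4.zip

If GitHub is slow or unreachable, download the plugin from a mirror hosted in China instead; the plugin version must match the ES version (6.5.4 here).

Testing the analyzer via the API

Note:

ES must be restarted to load the newly installed analyzer:

docker restart <ES container name or id>

After the restart, test the analyzer: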

```
POST _analyze
{
  "analyzer": "ik_max_word",
  "text": "尚硅谷教育"
}
```

The analyzer type must be specified with `analyzer`.

Response:

```json
{
  "tokens" : [
    {"token" : "尚", "start_offset" : 0, "end_offset" : 1, "type" : "CN_CHAR", "position" : 0},
    {"token" : "硅谷", "start_offset" : 1, "end_offset" : 3, "type" : "CN_WORD", "position" : 1},
    {"token" : "教育", "start_offset" : 3, "end_offset" : 5, "type" : "CN_WORD", "position" : 2}
  ]
}
```

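ElasticSearch Core Concepts

ES components

Near real time

This has two meanings:

- There is a short delay (about 1 second) between writing data and that data becoming searchable.

- Searches and analytics executed on ES return within seconds.

Cluster

A cluster contains multiple nodes. Which cluster a node belongs to is determined by a setting, the cluster name (default `elasticsearch`). For small and medium applications it is normal to start with a cluster of just one node.

Node

A node is one server in the cluster. Each node also has a name (assigned automatically by default); the node name matters when performing operations and maintenance. By default a node joins the cluster named "elasticsearch", so if you simply start several nodes they form one elasticsearch cluster automatically; a single node can also form a cluster on its own.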

ElasticSearch storage structure

Index (≈ database)

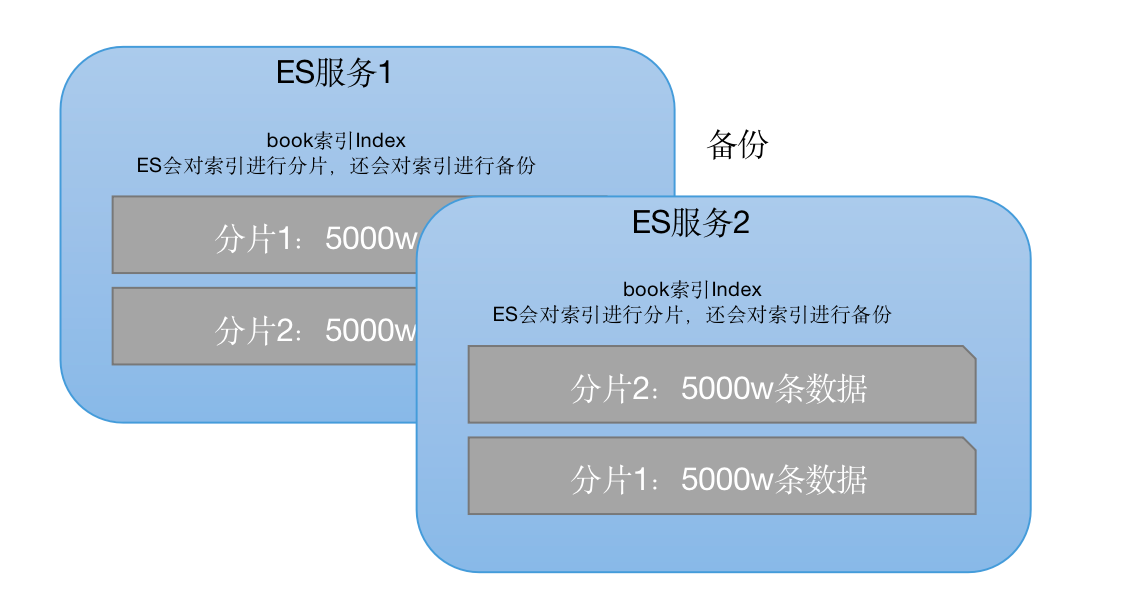

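An index holds a collection of documents with a similar structure, and one ES service can host many indexes, for example a customer index, a product-category index, and an order index. Each index has a name.

- In ES 6.x an index is split into 5 primary shards by default (ES 7.0 changed the default to 1).

- By default each primary shard has one replica (`number_of_replicas: 1`).

- Replica shards provide fault tolerance and also serve search requests, so adding replicas increases search throughput as well.

- A replica is never allocated on the same node as its primary, so replicas need additional servers; on a single-node cluster they stay unassigned.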

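Type (≈ table)

An index can contain one or more types (depending on the ES version, see the note below). A type is a logical category of data within an index, and all documents under a type have the same fields.

Note:

- ES 5.x: an index can contain multiple types

- ES 6.x: an index can contain only one type

- ES 7.x: indexes no longer have types (the typeless `_doc` endpoint is used instead)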

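Document (≈ row)

A document is the smallest unit of data that ES indexes and returns. A type can contain many documents; a document usually represents one record, such as one customer.

Field (≈ column)

A field is the smallest unit of data inside a document. A document contains multiple fields, each holding one piece of data.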

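RESTful syntax for operating ES

GET requests:

- http://ip:port/index: get information about an index

- http://ip:port/index/type/doc_id: get a specific document

POST requests:

- http://ip:port/index/type/_search: search documents; the query conditions go in the JSON request body

- http://ip:port/index/type/doc_id/_update: update a document; the changes go in the JSON request body

PUT requests:

- http://ip:port/index: create an index; the index settings go in the request body

- http://ip:port/index/type/_mapping: define the mapping, i.e. the field properties of the documents stored in the index

DELETE requests:

- http://ip:port/index: delete an index

- http://ip:port/index/type/doc_id: delete a specific document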

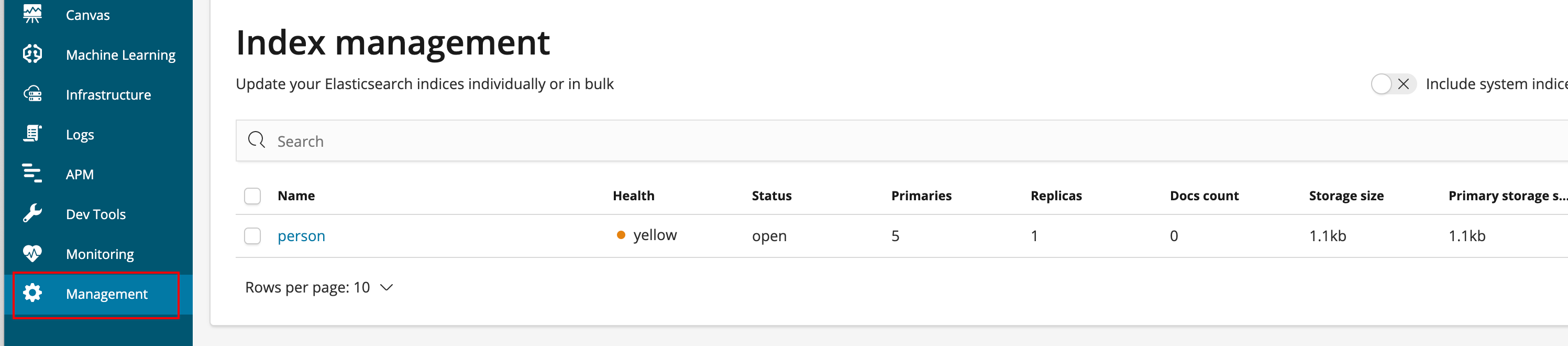

Index operations

Create an index

```
PUT /person
{
  "settings": {
    "number_of_shards": 5,
    "number_of_replicas": 1
  }
}
```

View index information

- Through the Kibana UI

- Through the API:

```
# View index information
GET /person
```

Response:

```json
{
  "person" : {
    "aliases" : { },
    "mappings" : { },
    "settings" : {
      "index" : {
        "creation_date" : "1614596113957",
        "number_of_shards" : "5",
        "number_of_replicas" : "1",
        "uuid" : "bC8PJsegQ16t5EAtNWh_vg",
        "version" : {"created" : "6050499"},
        "provided_name" : "person"
      }
    }
  }
}
```

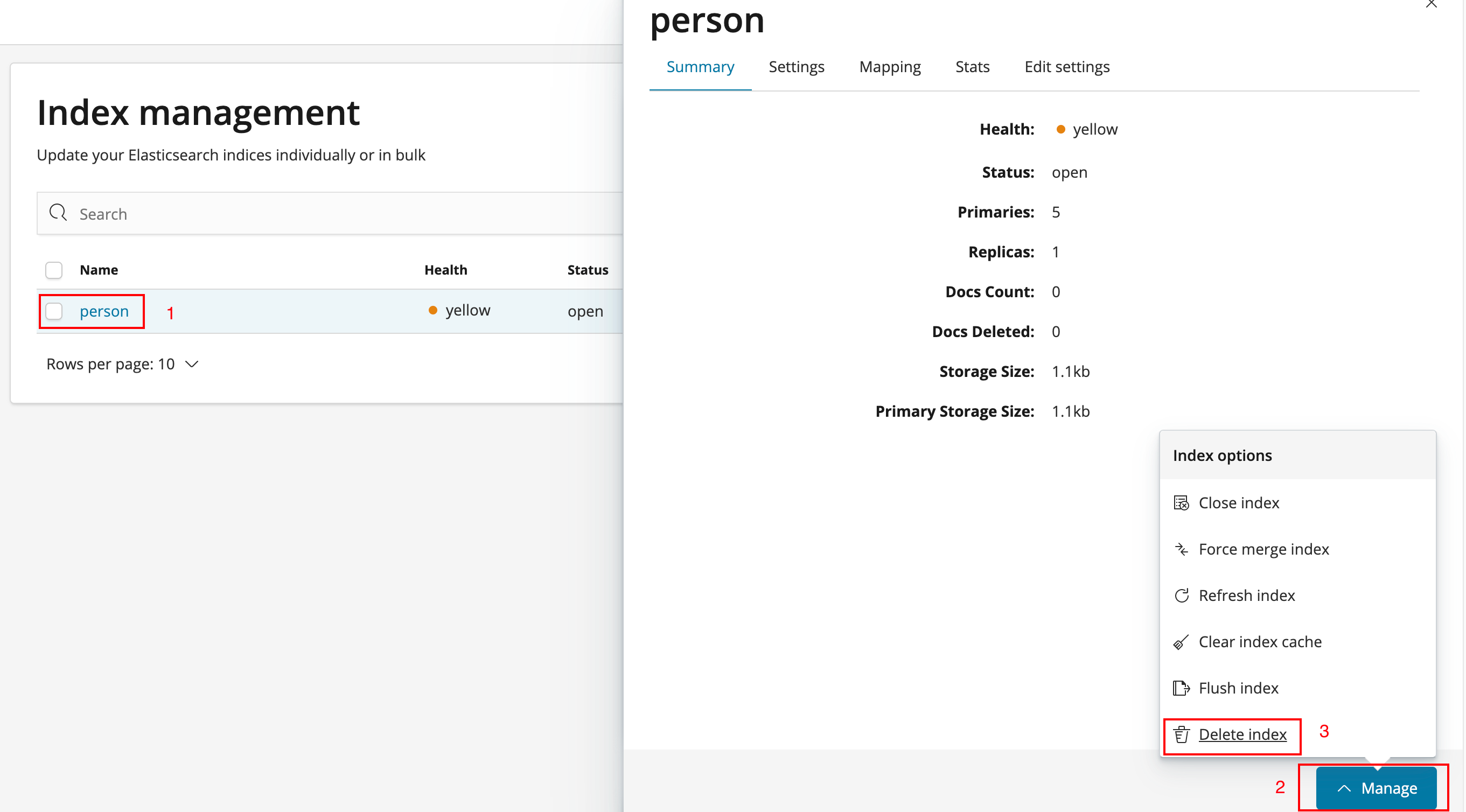

Delete an index

- Through the graphical management UI

- Through the API:

```
# Delete the index
DELETE /person
```

Response:

```json
{"acknowledged" : true }
```

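Field types in ES

String types:

- text: used for full-text search; the field value is tokenized

- keyword: the field value is not tokenized

Numeric types:

- long

- integer

- byte

- double

- float

Date type:

- date: the concrete date format can be specified

Boolean type:

- boolean: true or false

Binary type:

- binary: currently accepts a Base64-encoded string

Range types:

- long_range: the value is a range rather than a single number, given with gt, lt, gte, lte

- float_range

- integer_range

- date_range

- ip_range

Geo type:

- geo_point: stores a latitude/longitude pair

IP type:

- ip: stores an IPv4 or IPv6 address

Other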

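Create an index with a specified data structure (mapping)

```
# Create an index and specify the field types
PUT /book
{
  "settings": {
    "number_of_shards": 5,
    "number_of_replicas": 1
  },
  "mappings": {
    "novel": {
      "properties": {
        "name": {
          "type": "text",
          "analyzer": "ik_max_word",
          "index": true,
          "store": false
        },
        "author": {"type": "keyword"},
        "count": {"type": "long"},
        "onSale": {
          "type": "date",
          "format": "yyyy-MM-dd HH:mm:ss||yyyy-MM-dd||epoch_millis"
        },
        "desc": {
          "type": "text",
          "analyzer": "ik_max_word"
        }
      }
    }
  }
}
```

Explanation:

- number_of_shards: number of primary shards

- number_of_replicas: number of replicas per shard

- mappings: defines the data structure

- novel: the type name

- properties: definitions of the document's fields

- name: defines a field called name

- type: the field's data type

- analyzer: the analyzer used to tokenize the field

- index: true means the field is indexed and can be used as a query condition

- store: whether the field is stored separately in addition to `_source`

- format: the accepted date formats (alternatives separated by `||`)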

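Document operations

A document is uniquely identified in the ES server by the combination of `_index`, `_type`, and `_id`. Together they pin down one document and decide whether a write adds a new document or modifies an existing one.

Create a document

With an auto-generated id:

```
# Add a document (ES generates the id)
POST /book/novel
{
  "name": "斗罗",
  "author": "西红柿",
  "count": 10000,
  "onSale": "2000-01-01",
  "desc": "斗罗大陆修仙小说"
}
```

With a manually specified id:

```
# Specify the id manually
PUT /book/novel/1
{
  "name": "红楼梦",
  "author": "曹雪芹",
  "count": 10000,
  "onSale": "1758-01-01",
  "desc": "红楼梦小说"
}
```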

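Update a document

Overwrite (full replacement): PUT the whole document again under the same id; any field left out of the new body is removed.

```
# Full replacement of document 1
PUT /book/novel/1
{
  "name": "红楼梦",
  "author": "曹雪芹",
  "count": 20000,
  "onSale": "1758-01-01",
  "desc": "红楼梦小说"
}
```

Partial update with doc:

```
# Partial update based on "doc": only the listed fields are changed
POST /book/novel/1/_update
{
  "doc": {
    "count": 123455
  }
}
```

Delete a document

```
# Delete a document by id
DELETE /book/novel/Ile37XcBdlEqQ4RmWKpJ
```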

Operating ElasticSearch from Java

Connecting to ES from Java

- Create a Maven project

- Add the dependencies:

```xml
<dependencies>
    <!-- 1. elasticsearch -->
    <dependency>
        <groupId>org.elasticsearch</groupId>
        <artifactId>elasticsearch</artifactId>
        <version>6.5.4</version>
    </dependency>
    <!-- 2. elasticsearch high-level REST client API -->
    <dependency>
        <groupId>org.elasticsearch.client</groupId>
        <artifactId>elasticsearch-rest-high-level-client</artifactId>
        <version>6.5.4</version>
    </dependency>
    <!-- 3. junit -->
    <dependency>
        <groupId>junit</groupId>
        <artifactId>junit</artifactId>
        <version>4.12</version>
    </dependency>
    <!-- 4. lombok -->
    <dependency>
        <groupId>org.projectlombok</groupId>
        <artifactId>lombok</artifactId>
        <version>1.16.22</version>
    </dependency>
    <!-- 5. jackson -->
    <dependency>
        <groupId>com.fasterxml.jackson.core</groupId>
        <artifactId>jackson-databind</artifactId>
        <version>2.10.2</version>
    </dependency>
</dependencies>
```

Create the ES connection

```java
package com.example.utils;

import org.apache.http.HttpHost;
import org.elasticsearch.client.RestClient;
import org.elasticsearch.client.RestClientBuilder;
import org.elasticsearch.client.RestHighLevelClient;

/**
 * @author : ryxiong728
 * @email : ryxiong728@126.com
 * @date : 3/1/21
 * @Description:
 */
public class ESClient {

    public static RestHighLevelClient getClient() {
        // 1. Create the HttpHost object (ES host and HTTP port)
        HttpHost httpHost = new HttpHost("115.159.222.145", 9200);
        // 2. Create the RestClientBuilder
        RestClientBuilder clientBuilder = RestClient.builder(httpHost);
        // 3. Create the RestHighLevelClient
        RestHighLevelClient client = new RestHighLevelClient(clientBuilder);
        // Return the client (call client.close() when it is no longer needed)
        return client;
    }
}
```

Index operations in Java

Create an index

```java
package com.example.test;

import com.example.utils.ESClient;
import org.elasticsearch.action.admin.indices.create.CreateIndexRequest;
import org.elasticsearch.action.admin.indices.create.CreateIndexResponse;
import org.elasticsearch.client.RequestOptions;
import org.elasticsearch.client.RestHighLevelClient;
import org.elasticsearch.common.settings.Settings;
import org.elasticsearch.common.xcontent.XContentBuilder;
import org.elasticsearch.common.xcontent.json.JsonXContent;
import org.junit.Test;

import java.io.IOException;

public class Demo02 {

    RestHighLevelClient client = ESClient.getClient();
    String index = "person";
    String type = "info";

    /* Reference: the REST mapping of the book index
    "mappings": {
      "novel": {
        "properties": {
          "name": {"type": "text", "analyzer": "ik_max_word", "index": true, "store": false},
          "author": {"type": "keyword"},
          "count": {"type": "long"},
          "onSale": {"type": "date", "format": "yyyy-MM-dd HH:mm:ss||yyyy-MM-dd||epoch_millis"},
          "desc": {"type": "text", "analyzer": "ik_max_word"}
        }
      }
    }
    */
    @Test
    public void createIndex() throws IOException {
        // 1. Prepare the index settings
        Settings.Builder settings = Settings.builder();
        settings.put("number_of_shards", 3);
        settings.put("number_of_replicas", 1);

        // 2. Prepare the index mapping (field structure)
        XContentBuilder mappings = JsonXContent.contentBuilder()
                .startObject()
                    .startObject("properties")
                        .startObject("name").field("type", "text").endObject()
                        .startObject("age").field("type", "integer").endObject()
                        .startObject("birthday").field("type", "date").field("format", "yyyy-MM-dd").endObject()
                    .endObject()
                .endObject();

        // 3. Wrap settings and mappings in a request object
        CreateIndexRequest request = new CreateIndexRequest(index);
        request.settings(settings);
        request.mapping(type, mappings);

        // 4. Create the index through the client
        CreateIndexResponse response = client.indices().create(request, RequestOptions.DEFAULT);

        // 5. Print the result
        System.out.println(response.toString());
    }
}
```

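Check whether an index exists

```java
// Additional import (ES 6.5.x): org.elasticsearch.action.admin.indices.get.GetIndexRequest
@Test
public void isExists() throws IOException {
    // 1. Prepare the request object
    GetIndexRequest request = new GetIndexRequest();
    request.indices(index);
    // 2. Ask ES whether the index exists
    boolean exists = client.indices().exists(request, RequestOptions.DEFAULT);
    // 3. Print the result
    System.out.println(exists);
}
```

Delete an index

```java
// Additional imports (ES 6.5.x):
// org.elasticsearch.action.admin.indices.delete.DeleteIndexRequest
// org.elasticsearch.action.support.master.AcknowledgedResponse
@Test
public void deleteIndex() throws IOException {
    // 1. Prepare the request object
    DeleteIndexRequest delete = new DeleteIndexRequest();
    delete.indices(index);
    // 2. Delete through the client
    AcknowledgedResponse resp = client.indices().delete(delete, RequestOptions.DEFAULT);
    // 3. Print whether ES acknowledged the deletion
    System.out.println(resp.isAcknowledged());
}
```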

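Document operations in Java

Add a document

The Person entity

```java
package com.example.entity;

import com.fasterxml.jackson.annotation.JsonFormat;
import com.fasterxml.jackson.annotation.JsonIgnore;
import lombok.AllArgsConstructor;
import lombok.Data;
import lombok.NoArgsConstructor;

import java.util.Date;

@Data
@NoArgsConstructor
@AllArgsConstructor
public class Person {
    @JsonIgnore // exclude id from the serialized JSON; it is used as the document _id instead
    private Integer id;
    private String name;
    private Integer age;
    @JsonFormat(pattern = "yyyy-MM-dd") // serialize the date as yyyy-MM-dd
    private Date birthday;
}
```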

Creating a document

```java
package com.example.test;

import com.example.entity.Person;
import com.example.utils.ESClient;
import com.fasterxml.jackson.databind.ObjectMapper;
import org.elasticsearch.action.index.IndexRequest;
import org.elasticsearch.action.index.IndexResponse;
import org.elasticsearch.client.RequestOptions;
import org.elasticsearch.client.RestHighLevelClient;
import org.elasticsearch.common.xcontent.XContentType;
import org.junit.Test;

import java.io.IOException;
import java.util.Date;

public class Demo03 {

    ObjectMapper mapper = new ObjectMapper();
    RestHighLevelClient client = ESClient.getClient();
    String index = "person";
    String type = "info";

    @Test
    public void createDocument() throws IOException {
        // 1. Prepare the JSON data
        Person person = new Person(1, "张三", 23, new Date());
        String json = mapper.writeValueAsString(person);
        System.out.println(json);
        // 2. Prepare the request object: index, type, document id
        IndexRequest indexRequest = new IndexRequest(index, type, person.getId().toString());
        indexRequest.source(json, XContentType.JSON);
        // 3. Add the document through the client
        IndexResponse resp = client.index(indexRequest, RequestOptions.DEFAULT);
        // 4. Print the result (CREATED or UPDATED)
        System.out.println(resp.getResult().toString());
    }
}
```

Update a document

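```java
// Additional imports (ES 6.5.x):
// org.elasticsearch.action.update.UpdateRequest, org.elasticsearch.action.update.UpdateResponse,
// java.util.HashMap, java.util.Map
@Test
public void updateDocument() throws IOException {
    // 1. Put the fields to change into a map
    Map<String, Object> doc = new HashMap<String, Object>();
    doc.put("name", "李四");
    String docId = "1";
    // 2. Create the request object and attach the partial document
    UpdateRequest updateRequest = new UpdateRequest(index, type, docId);
    updateRequest.doc(doc);
    // 3. Execute through the client
    UpdateResponse response = client.update(updateRequest, RequestOptions.DEFAULT);
    // 4. Print the result
    System.out.println(response.getResult().toString());
}
```

Delete a document

```java
// Additional imports (ES 6.5.x):
// org.elasticsearch.action.delete.DeleteRequest, org.elasticsearch.action.delete.DeleteResponse
@Test
public void deleteDocument() throws IOException {
    // 1. Build the request object: index, type, document id
    DeleteRequest deleteRequest = new DeleteRequest(index, type, "1");
    // 2. Execute through the client
    DeleteResponse response = client.delete(deleteRequest, RequestOptions.DEFAULT);
    // 3. Print the result
    System.out.println(response.getResult().toString());
}
```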

Bulk document operations in Java

Bulk add documents

```java
// Additional imports (ES 6.5.x):
// org.elasticsearch.action.bulk.BulkRequest, org.elasticsearch.action.bulk.BulkResponse
@Test
public void bulkCreateDocument() throws IOException {
    // 1. Prepare several JSON documents
    Person p1 = new Person(1, "张三", 23, new Date());
    Person p2 = new Person(2, "李四", 24, new Date());
    Person p3 = new Person(3, "王五", 25, new Date());
    String json1 = mapper.writeValueAsString(p1);
    String json2 = mapper.writeValueAsString(p2);
    String json3 = mapper.writeValueAsString(p3);
    // 2. Create one bulk request and add an index operation per document
    BulkRequest bulkRequest = new BulkRequest();
    bulkRequest.add(new IndexRequest(index, type, p1.getId().toString()).source(json1, XContentType.JSON));
    bulkRequest.add(new IndexRequest(index, type, p2.getId().toString()).source(json2, XContentType.JSON));
    bulkRequest.add(new IndexRequest(index, type, p3.getId().toString()).source(json3, XContentType.JSON));
    // 3. Execute all operations in a single round trip
    BulkResponse resp = client.bulk(bulkRequest, RequestOptions.DEFAULT);
    // 4. Print the result
    System.out.println(resp.toString());
}
```

Bulk delete

```java
@Test
public void bulkDeleteDocument() throws IOException {
    // 1. Build the bulk request with one delete operation per id
    BulkRequest bulkRequest = new BulkRequest();
    bulkRequest.add(new DeleteRequest(index, type, "1"));
    bulkRequest.add(new DeleteRequest(index, type, "2"));
    bulkRequest.add(new DeleteRequest(index, type, "3"));
    // 2. Execute through the client
    BulkResponse response = client.bulk(bulkRequest, RequestOptions.DEFAULT);
    // 3. Print the result
    System.out.println(response.toString());
}
```
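To see what a `BulkRequest` amounts to on the wire: the client sends it to the `_bulk` REST endpoint, whose body is newline-delimited JSON, one action/metadata line per operation followed by the document source for `index` operations, with a trailing newline at the end. The sketch below builds such a body by hand. It is only an illustration: the class and method names are hypothetical, values are concatenated without JSON escaping (so it assumes simple ASCII ids), and each source must already be single-line JSON.

```java
// Hypothetical helper that assembles a _bulk request body (NDJSON) by hand.
public class BulkBodyDemo {
    // "index" operation: action/metadata line, then the document source on the next line
    public static String indexAction(String index, String type, String id, String sourceJson) {
        return "{\"index\":{\"_index\":\"" + index + "\",\"_type\":\"" + type
                + "\",\"_id\":\"" + id + "\"}}\n" + sourceJson + "\n";
    }

    // "delete" operation: only the action/metadata line, no source line
    public static String deleteAction(String index, String type, String id) {
        return "{\"delete\":{\"_index\":\"" + index + "\",\"_type\":\"" + type
                + "\",\"_id\":\"" + id + "\"}}\n";
    }

    public static void main(String[] args) {
        String body = indexAction("person", "info", "1", "{\"name\":\"zhangsan\",\"age\":23}")
                + indexAction("person", "info", "2", "{\"name\":\"lisi\",\"age\":24}")
                + deleteAction("person", "info", "3");
        // Every line, including the last one, ends with '\n'; this is what POST /_bulk expects
        System.out.print(body);
    }
}
```

Index and delete operations can be mixed freely in one body, which is why `BulkRequest` accepts both `IndexRequest` and `DeleteRequest`.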

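ElasticSearch practice example

Index: sms-logs-index

Type: sms-logs-type

| Field | Description |
|---|---|
| createDate | Creation time |
| sendDate | Send time |
| longCode | Long sender number, e.g. "10698886622" |
| mobile | Phone number, e.g. "13800000000" |
| corpName | Name of the sending company; needs tokenized search |
| smsContent | SMS content; needs tokenized search |
| state | Send status: 0 = success, 1 = failure |
| operatorId | Carrier id: 1 = China Mobile, 2 = China Unicom, 3 = China Telecom |
| province | Province |
| ipAddr | IP address of the sending server |
| replyTotal | Time until the delivery report returned (s) |
| fee | Charge (in fen, i.e. 0.01 CNY) |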

SmsLogs

```java
package com.example.entity;

import com.fasterxml.jackson.annotation.JsonFormat;
import com.fasterxml.jackson.annotation.JsonIgnore;
import lombok.AllArgsConstructor;
import lombok.Data;
import lombok.NoArgsConstructor;

import java.util.Date;

@Data
@NoArgsConstructor
@AllArgsConstructor
public class SmsLogs {
    @JsonIgnore
    private Integer id;
    @JsonFormat(pattern = "yyyy-MM-dd")
    private Date createDate;
    @JsonFormat(pattern = "yyyy-MM-dd")
    private Date sendDate;
    private String longCode;
    private String mobile;
    private String corpName;
    private String smsContent;
    private Integer state;
    private Integer operatorId;
    private String province;
    private String ipAddr;
    private Integer replyTotal;
    private String fee;

    // Sample text; InitDate slices it into 20-character pieces for smsContent
    @JsonIgnore
    public static String doc = "乌山镇,玉兰大陆第一山脉‘魔兽山脉’西方的芬莱王国中的一个普通小镇。朝阳初升,乌山镇这个小镇上依旧有着清晨的一丝清冷之气,只是小镇中的居民几乎都已经出来开始工作了,即使是六七岁的稚童,也差不多也都起床开始了传统性的晨练。乌山镇东边的空地上,早晨温热的阳光透过空地旁边的大树,在空地上留下了斑驳的光点。只见一大群孩子,目视过去估摸着差不多有一两百个。这群孩子分成了三个团队,每个团队都是排成几排,孩子们一个个都静静地站在空地上,面色严肃。纠结了好久买多大的屏,全凭感觉和运气,最后确定了65寸的,非常合适,大小刚刚好,我家客厅面积是34平方米,差不多的面积尽管拍就好了,其实在大一些可能更棒吧!双十一下手,电视越大越好,实惠好用,电视功能多了也用不着,之前的什么画中画,三D功能,有几个用的上的,电视这东西简单实用就行。我一共买了4台,一台75寸,一台70寸,两台65寸。松下冰箱一台,华帝油烟机燃气灶两套,马桶2个,西门子开关插座55个,丝涟床垫一个,七七八八加起来一共4万5左右,大家电我只信京东,服务杠杠滴";
}
```

Initializing the data

```java
package com.example.test;

import com.example.entity.SmsLogs;
import com.example.utils.ESClient;
import com.fasterxml.jackson.databind.ObjectMapper;
import org.elasticsearch.action.admin.indices.create.CreateIndexRequest;
import org.elasticsearch.action.admin.indices.create.CreateIndexResponse;
import org.elasticsearch.action.bulk.BulkRequest;
import org.elasticsearch.action.bulk.BulkResponse;
import org.elasticsearch.action.index.IndexRequest;
import org.elasticsearch.client.RequestOptions;
import org.elasticsearch.client.RestHighLevelClient;
import org.elasticsearch.common.settings.Settings;
import org.elasticsearch.common.xcontent.XContentBuilder;
import org.elasticsearch.common.xcontent.XContentType;
import org.elasticsearch.common.xcontent.json.JsonXContent;
import org.junit.Test;

import java.util.ArrayList;
import java.util.Date;
import java.util.List;
import java.util.Random;

public class InitDate {

    ObjectMapper mapper = new ObjectMapper();
    RestHighLevelClient client = ESClient.getClient();
    String index = "sms-logs-index";
    String type = "sms-logs-type";

    @Test
    public void createIndex() throws Exception {
        // 1. Index settings
        Settings.Builder settings = Settings.builder()
                .put("number_of_shards", 5)
                .put("number_of_replicas", 1);
        // 2. Index mapping
        XContentBuilder mappings = JsonXContent.contentBuilder()
                .startObject()
                    .startObject("properties")
                        .startObject("corpName").field("type", "keyword").endObject()
                        .startObject("createDate").field("type", "date").field("format", "yyyy-MM-dd").endObject()
                        .startObject("fee").field("type", "long").endObject()
                        .startObject("ipAddr").field("type", "ip").endObject()
                        .startObject("longCode").field("type", "keyword").endObject()
                        .startObject("mobile").field("type", "keyword").endObject()
                        .startObject("operatorId").field("type", "integer").endObject()
                        .startObject("province").field("type", "keyword").endObject()
                        .startObject("replyTotal").field("type", "integer").endObject()
                        .startObject("sendDate").field("type", "date").field("format", "yyyy-MM-dd").endObject()
                        .startObject("smsContent").field("type", "text").field("analyzer", "ik_max_word").endObject()
                        .startObject("state").field("type", "integer").endObject()
                    .endObject()
                .endObject();
        // 3. Wrap settings and mappings in one request
        CreateIndexRequest request = new CreateIndexRequest(index)
                .settings(settings)
                .mapping(type, mappings);
        // 4. Create the index through the client
        CreateIndexResponse response = client.indices().create(request, RequestOptions.DEFAULT);
        System.out.println("response:" + response.toString());
    }

    @Test
    public void bulkCreateDoc() throws Exception {
        // 1. Sample values used to build the documents
        String longCode = "1008687";
        String mobile = "138340658";
        List<String> companies = new ArrayList<String>();
        companies.add("腾讯课堂");
        companies.add("阿里旺旺");
        companies.add("海尔电器");
        companies.add("海尔智家公司");
        companies.add("格力汽车");
        companies.add("苏宁易购");
        companies.add("盒马鲜生");
        companies.add("途虎养车");
        List<String> provinces = new ArrayList<String>();
        provinces.add("北京");
        provinces.add("重庆");
        provinces.add("上海");
        provinces.add("晋城");
        provinces.add("深圳");
        provinces.add("武汉");
        Random random = new Random();
        // 2. One bulk request containing 19 index operations (ids 1..19)
        BulkRequest bulkRequest = new BulkRequest();
        for (int i = 1; i < 20; i++) {
            Thread.sleep(1000);
            SmsLogs s1 = new SmsLogs();
            s1.setId(i);
            // random timestamps between 852526980000 and 854526980000 epoch millis (January 1997)
            s1.setCreateDate(new Date((int) (Math.random() * (854526980000L + 1 - 852526980000L)) + 852526980000L));
            s1.setSendDate(new Date((int) (Math.random() * (854526980000L + 1 - 852526980000L)) + 852526980000L));
            s1.setLongCode(longCode + i);
            s1.setMobile(mobile + 2 * i);
            s1.setCorpName(companies.get(random.nextInt(companies.size())));
            s1.setSmsContent(SmsLogs.doc.substring((i - 1) * 20, i * 20));
            s1.setState(i % 2);
            s1.setOperatorId(i % 3);
            s1.setProvince(provinces.get(random.nextInt(provinces.size())));
            s1.setIpAddr("127.0.0." + i);
            s1.setReplyTotal(i * 3);
            s1.setFee(i * 6 + "");
            String json1 = mapper.writeValueAsString(s1);
            bulkRequest.add(new IndexRequest(index, type, s1.getId().toString()).source(json1, XContentType.JSON));
            System.out.println("数据" + i + s1.toString());
        }
        // 3. Execute the bulk request
        BulkResponse responses = client.bulk(bulkRequest, RequestOptions.DEFAULT);
        // 4. Print the outcome (getItems() is an array, so its toString() is not informative)
        System.out.println(responses.hasFailures() ? responses.buildFailureMessage() : "bulk ok");
    }
}
```

ElasticSearch Queries

term & terms queries

term query

A term query is an exact match: the search keyword is not tokenized before searching, but matched directly against the term dictionary of the documents.

```
# term query
POST /sms-logs-index/sms-logs-type/_search
{
  "from": 0,       # offset, like the start of LIMIT
  "size": 5,       # number of hits, like the count of LIMIT
  "query": {
    "term": {      # query type: term = exact match
      "province": {
        "value": "北京"
      }
    }
  }
}
```

Response:

```
{
  "took" : 31,
  "timed_out" : false,
  "_shards" : {"total" : 5, "successful" : 5, "skipped" : 0, "failed" : 0},
  "hits" : {
    "total" : 4,
    "max_score" : 1.3862944,
    "hits" : [
      {
        "_index" : "sms-logs-index",
        "_type" : "sms-logs-type",
        "_id" : "12",
        "_score" : 1.3862944,
        "_source" : {
          "createDate" : "1997-01-20",
          "sendDate" : "1997-01-19",
          "longCode" : "100868712",
          "mobile" : "13834065824",
          "corpName" : "海尔智家公司",
          "smsContent" : "了好久买多大的屏,全凭感觉和运气,最后确",
          "state" : 0,
          "operatorId" : 0,
          "province" : "北京",
          "ipAddr" : "127.0.0.12",
          "replyTotal" : 36,
          "fee" : "72"
        }
      },
      ...
    ]
  }
}
```

Java implementation

```java
package com.example.test;

import com.example.utils.ESClient;
import com.fasterxml.jackson.databind.ObjectMapper;
import org.elasticsearch.action.search.SearchRequest;
import org.elasticsearch.action.search.SearchResponse;
import org.elasticsearch.client.RequestOptions;
import org.elasticsearch.client.RestHighLevelClient;
import org.elasticsearch.index.query.QueryBuilders;
import org.elasticsearch.search.SearchHit;
import org.elasticsearch.search.builder.SearchSourceBuilder;
import org.junit.Test;

import java.io.IOException;
import java.util.Map;

public class Demo04Query {

    ObjectMapper mapper = new ObjectMapper();
    RestHighLevelClient client = ESClient.getClient();
    String index = "sms-logs-index";
    String type = "sms-logs-type";

    @Test
    public void termQuery() throws IOException {
        // 1. Create the request object
        SearchRequest request = new SearchRequest(index);
        request.types(type);
        // 2. Specify the query: paging plus an exact term match on province
        SearchSourceBuilder builder = new SearchSourceBuilder();
        builder.from(0);
        builder.size(5);
        builder.query(QueryBuilders.termQuery("province", "北京"));
        request.source(builder);
        // 3. Execute the search
        SearchResponse response = client.search(request, RequestOptions.DEFAULT);
        // 4. Print the _source of each hit
        for (SearchHit hit : response.getHits().getHits()) {
            Map<String, Object> result = hit.getSourceAsMap();
            System.out.println(result);
        }
    }
}
```

terms query

Works like term: the query keywords are not tokenized and are matched directly.

The difference: terms is used when one field should match any of several values, for example:

- term: where province = "北京"

- terms: where province = "北京" or province = "武汉"

```
# terms query
POST /sms-logs-index/sms-logs-type/_search
{
  "query": {
    "terms": {
      "province": ["北京", "武汉"]
    }
  }
}
```

Java implementation

```java
@Test
public void termsQuery() throws IOException {
    // 1. Create the request object
    SearchRequest request = new SearchRequest(index);
    request.types(type);
    // 2. Specify the query: province is any of the listed values
    SearchSourceBuilder builder = new SearchSourceBuilder();
    builder.query(QueryBuilders.termsQuery("province", "北京", "武汉"));
    request.source(builder);
    // 3. Execute the search
    SearchResponse response = client.search(request, RequestOptions.DEFAULT);
    // 4. Print the _source of each hit
    for (SearchHit hit : response.getHits().getHits()) {
        Map<String, Object> result = hit.getSourceAsMap();
        System.out.println(result);
    }
}
```

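match query

match is a high-level query: how it searches depends on the type of the field being queried.

- For a date or numeric field, the query string is converted to a date or number.

- For a field that is not analyzed (keyword), match does not tokenize the query keywords.

- For an analyzed field (text), match tokenizes the query text and matches the resulting terms against the term dictionary.

Under the hood, match runs several term queries and combines their results.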
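The "several term queries combined" idea can be sketched without ES. In this toy model (hypothetical class and data), the query text is split on whitespace as a stand-in for the field's analyzer, each token is looked up exactly like a term query, and the hit sets are combined by union for `operator: or` and by intersection for `operator: and`. Real ES also computes a relevance score per hit, which this sketch ignores.

```java
import java.util.Arrays;
import java.util.Collections;
import java.util.HashMap;
import java.util.Map;
import java.util.Set;
import java.util.TreeSet;

public class MatchDemo {
    // One "term query": exact lookup of an already-analyzed term in the term dictionary
    public static Set<Integer> termLookup(Map<String, Set<Integer>> index, String term) {
        return index.getOrDefault(term, Collections.emptySet());
    }

    // "match": analyze the query text (whitespace split here), run one term lookup per
    // token, then combine: union for operator OR, intersection for operator AND
    public static Set<Integer> match(Map<String, Set<Integer>> index, String query, boolean useAnd) {
        String[] tokens = query.trim().split("\\s+");
        Set<Integer> result = new TreeSet<>(termLookup(index, tokens[0]));
        for (int i = 1; i < tokens.length; i++) {
            Set<Integer> hits = termLookup(index, tokens[i]);
            if (useAnd) {
                result.retainAll(hits);
            } else {
                result.addAll(hits);
            }
        }
        return result;
    }

    public static void main(String[] args) {
        // Hypothetical term dictionary: term -> ids of documents containing it
        Map<String, Set<Integer>> index = new HashMap<>();
        index.put("kids", new TreeSet<>(Arrays.asList(1, 2, 3)));
        index.put("team", new TreeSet<>(Arrays.asList(2, 3, 4)));
        System.out.println(match(index, "kids team", false)); // [1, 2, 3, 4]
        System.out.println(match(index, "kids team", true));  // [2, 3]
    }
}
```

This mirrors the boolean match example further down, where `"operator": "or"` returns documents containing either word and `"operator": "and"` only those containing both.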

match_all query

Returns all documents.

```
# match_all query
POST /sms-logs-index/sms-logs-type/_search
{
  "query": {
    "match_all": {}
  }
}
```

Java implementation

```java
package com.example.test;

import com.example.utils.ESClient;
import com.fasterxml.jackson.databind.ObjectMapper;
import org.elasticsearch.action.search.SearchRequest;
import org.elasticsearch.action.search.SearchResponse;
import org.elasticsearch.client.RequestOptions;
import org.elasticsearch.client.RestHighLevelClient;
import org.elasticsearch.index.query.QueryBuilders;
import org.elasticsearch.search.SearchHit;
import org.elasticsearch.search.builder.SearchSourceBuilder;
import org.junit.Test;

import java.io.IOException;
import java.util.Map;

public class Demo05Match {

    ObjectMapper mapper = new ObjectMapper();
    RestHighLevelClient client = ESClient.getClient();
    String index = "sms-logs-index";
    String type = "sms-logs-type";

    @Test
    public void matchAllQuery() throws IOException {
        // 1. Create the request object
        SearchRequest request = new SearchRequest(index);
        request.types(type);
        // 2. Specify the query
        SearchSourceBuilder builder = new SearchSourceBuilder();
        builder.query(QueryBuilders.matchAllQuery());
        builder.size(20); // ES returns only 10 hits by default; set size to get more
        request.source(builder);
        // 3. Execute the search
        SearchResponse response = client.search(request, RequestOptions.DEFAULT);
        // 4. Print the _source of each hit
        for (SearchHit hit : response.getHits().getHits()) {
            Map<String, Object> result = hit.getSourceAsMap();
            System.out.println(result);
        }
        System.out.println(response.getHits().getHits().length);
    }
}
```

match query

Query with a condition on a specified field:

```
# match query
POST /sms-logs-index/sms-logs-type/_search
{
  "query": {
    "match": {
      "smsContent": "面积"
    }
  }
}
```

Java implementation

```java
@Test
public void matchQuery() throws IOException {
    // 1. Create the request object
    SearchRequest request = new SearchRequest(index);
    request.types(type);
    // 2. Specify the query: analyzed match on smsContent
    SearchSourceBuilder builder = new SearchSourceBuilder();
    builder.query(QueryBuilders.matchQuery("smsContent", "面积"));
    request.source(builder);
    // 3. Execute the search
    SearchResponse response = client.search(request, RequestOptions.DEFAULT);
    // 4. Print the _source of each hit
    for (SearchHit hit : response.getHits().getHits()) {
        Map<String, Object> result = hit.getSourceAsMap();
        System.out.println(result);
    }
    System.out.println(response.getHits().getHits().length);
}
```

Boolean match query

Combines the terms of a query on a single field with and or or:

```
# boolean match query
POST /sms-logs-index/sms-logs-type/_search
{
  "query": {
    "match": {
      "smsContent": {
        "query": "孩子 团队",
        "operator": "or"     # with "and", only documents containing both "孩子" and "团队" match
      }
    }
  }
}
```

Java implementation

```java
// Additional import: org.elasticsearch.index.query.Operator
@Test
public void booleanMatchQuery() throws IOException {
    // 1. Create the request object
    SearchRequest request = new SearchRequest(index);
    request.types(type);
    // 2. Specify the query: either term may match (Operator.AND would require both)
    SearchSourceBuilder builder = new SearchSourceBuilder();
    builder.query(QueryBuilders.matchQuery("smsContent", "团队 孩子").operator(Operator.OR));
    request.source(builder);
    // 3. Execute the search
    SearchResponse response = client.search(request, RequestOptions.DEFAULT);
    // 4. Print the _source of each hit
    for (SearchHit hit : response.getHits().getHits()) {
        Map<String, Object> result = hit.getSourceAsMap();
        System.out.println(result);
    }
    System.out.println(response.getHits().getHits().length);
}
```

multi_match query

match searches one field; multi_match searches several fields for the same query text.

```
# multi_match query
POST /sms-logs-index/sms-logs-type/_search
{
  "query": {
    "multi_match": {
      "query": "北京",
      "fields": ["province", "smsContent"]
    }
  }
}

# Documents whose province or smsContent contains "北京" match
```

Java implementation

```java
// Additional import: org.elasticsearch.index.query.Operator
@Test
public void multiMatchQuery() throws IOException {
    // 1. Create the request object
    SearchRequest request = new SearchRequest(index);
    request.types(type);
    // 2. Specify the query: the same text searched in two fields
    SearchSourceBuilder builder = new SearchSourceBuilder();
    builder.query(QueryBuilders.multiMatchQuery("北京", "province", "smsContent").operator(Operator.OR));
    request.source(builder);
    // 3. Execute the search
    SearchResponse response = client.search(request, RequestOptions.DEFAULT);
    // 4. Print the _source of each hit
    for (SearchHit hit : response.getHits().getHits()) {
        Map<String, Object> result = hit.getSourceAsMap();
        System.out.println(result);
    }
    System.out.println(response.getHits().getHits().length);
}
```

Other queries

id query

```
# id query
GET /sms-logs-index/sms-logs-type/1
```

Java implementation

```java
package com.example.test;

import com.example.utils.ESClient;
import com.fasterxml.jackson.databind.ObjectMapper;
import org.elasticsearch.action.get.GetRequest;
import org.elasticsearch.action.get.GetResponse;
import org.elasticsearch.client.RequestOptions;
import org.elasticsearch.client.RestHighLevelClient;
import org.junit.Test;

import java.io.IOException;

public class demo06Other {

    ObjectMapper mapper = new ObjectMapper();
    RestHighLevelClient client = ESClient.getClient();
    String index = "sms-logs-index";
    String type = "sms-logs-type";

    @Test
    public void findById() throws IOException {
        // 1. Create the GetRequest: index, type, document id
        GetRequest request = new GetRequest(index, type, "1");
        // 2. Execute the lookup
        GetResponse response = client.get(request, RequestOptions.DEFAULT);
        // 3. Print the document source
        System.out.println(response.getSourceAsMap());
    }
}
```

ids query

Queries by several ids, similar to MySQL's `where id in (id1, id2, id3, ...)`.

```
# ids query
POST /sms-logs-index/sms-logs-type/_search
{
  "query": {
    "ids": {
      "values": ["1", "2", "100"]
    }
  }
}
```

Java implementation

```java
// In demo06Other this also needs the search imports used in Demo04Query
// (SearchRequest, SearchResponse, SearchSourceBuilder, QueryBuilders, SearchHit, java.util.Map)
@Test
public void findByIds() throws IOException {
    // 1. Create the SearchRequest object
    SearchRequest request = new SearchRequest(index);
    request.types(type);
    // 2. Specify the query: any of the listed ids
    SearchSourceBuilder builder = new SearchSourceBuilder();
    builder.query(QueryBuilders.idsQuery().addIds("1", "2", "100"));
    request.source(builder);
    // 3. Execute the search
    SearchResponse response = client.search(request, RequestOptions.DEFAULT);
    // 4. Print the results
    for (SearchHit hit : response.getHits().getHits()) {
        Map<String, Object> result = hit.getSourceAsMap();
        System.out.println(result);
    }
}
```

prefix query

Prefix query: matches documents whose value in the given field starts with the keyword.

```
# prefix query
POST /sms-logs-index/sms-logs-type/_search
{
  "query": {
    "prefix": {
      "corpName": {
        "value": "海尔"
      }
    }
  }
}
```

Java implementation

```java
@Test
public void findByPrefix() throws IOException {
    // 1. Create the SearchRequest object
    SearchRequest request = new SearchRequest(index);
    request.types(type);
    // 2. Specify the query: corpName starts with "海尔"
    SearchSourceBuilder builder = new SearchSourceBuilder();
    builder.query(QueryBuilders.prefixQuery("corpName", "海尔"));
    request.source(builder);
    // 3. Execute the search
    SearchResponse response = client.search(request, RequestOptions.DEFAULT);
    // 4. Print the results
    for (SearchHit hit : response.getHits().getHits()) {
        Map<String, Object> result = hit.getSourceAsMap();
        System.out.println(result);
    }
}
```

fuzzy query

Fuzzy query: enter an approximation of the value and ES can still find matches even if it contains typos; the results are correspondingly less precise.

```
# fuzzy query
POST /sms-logs-index/sms-logs-type/_search
{
  "query": {
    "fuzzy": {
      "corpName": {
        "value": "苏拧易购",
        "prefix_length": 1     # number of leading characters that must match exactly
      }
    }
  }
}
```

Java implementation

```java
@Test
public void findByFuzzy() throws IOException {
    // 1. Create the SearchRequest object
    SearchRequest request = new SearchRequest(index);
    request.types(type);
    // 2. Specify the query: same misspelled value and prefix length as the REST example
    SearchSourceBuilder builder = new SearchSourceBuilder();
    builder.query(QueryBuilders.fuzzyQuery("corpName", "苏拧易购").prefixLength(1));
    request.source(builder);
    // 3. Execute the search
    SearchResponse response = client.search(request, RequestOptions.DEFAULT);
    // 4. Print the results
    for (SearchHit hit : response.getHits().getHits()) {
        Map<String, Object> result = hit.getSourceAsMap();
        System.out.println(result);
    }
}
```
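How "close enough" is decided can be sketched with an edit distance. The toy below uses plain Levenshtein distance (insert, delete, substitute each cost 1); ES's fuzzy query uses a Damerau-Levenshtein variant by default, which additionally counts swapping two adjacent characters as one edit. The edit budget follows the default `fuzziness: AUTO` rule (0 edits for terms of length 0-2, 1 for 3-5, 2 for longer terms), and `prefixLength` plays the role of `prefix_length`. Class and method names are hypothetical.

```java
public class FuzzyDemo {
    // Classic Levenshtein distance, computed with two rolling rows of the DP table
    public static int levenshtein(String a, String b) {
        int[] prev = new int[b.length() + 1];
        int[] cur = new int[b.length() + 1];
        for (int j = 0; j <= b.length(); j++) {
            prev[j] = j;
        }
        for (int i = 1; i <= a.length(); i++) {
            cur[0] = i;
            for (int j = 1; j <= b.length(); j++) {
                int cost = a.charAt(i - 1) == b.charAt(j - 1) ? 0 : 1;
                cur[j] = Math.min(Math.min(cur[j - 1] + 1, prev[j] + 1), prev[j - 1] + cost);
            }
            int[] tmp = prev;
            prev = cur;
            cur = tmp;
        }
        return prev[b.length()];
    }

    // Edit budget of fuzziness AUTO, based on the length of the query term
    public static int autoMaxEdits(int termLength) {
        if (termLength <= 2) {
            return 0;
        }
        return termLength <= 5 ? 1 : 2;
    }

    // A stored term matches if its first prefixLength chars equal the query's
    // and the remaining difference fits in the AUTO edit budget
    public static boolean fuzzyMatch(String storedTerm, String query, int prefixLength) {
        if (!storedTerm.regionMatches(0, query, 0, prefixLength)) {
            return false;
        }
        return levenshtein(storedTerm, query) <= autoMaxEdits(query.length());
    }

    public static void main(String[] args) {
        System.out.println(fuzzyMatch("haier", "haiar", 1)); // true: one substitution, prefix "h" intact
        System.out.println(fuzzyMatch("haier", "jaier", 1)); // false: the protected first character differs
    }
}
```

By the same rule, `fuzzyMatch("苏宁易购", "苏拧易购", 1)` is true: the first character is identical, one character is substituted, and a 4-character term is allowed one edit.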

wildcard query

Wildcard query, the same idea as MySQL's LIKE: use the wildcard `*` (any sequence of characters) and the placeholder `?` (exactly one character) in the query value.

```
# wildcard query: companies whose name starts with "海尔"
POST /sms-logs-index/sms-logs-type/_search
{
  "query": {
    "wildcard": {
      "corpName": {
        "value": "海尔*"     # * matches any sequence of characters, ? exactly one character
      }
    }
  }
}
```

Java implementation

```java
@Test
public void findByWillCard() throws IOException {
    // 1. Create the SearchRequest object
    SearchRequest request = new SearchRequest(index);
    request.types(type);
    // 2. Specify the query: corpName matches the pattern "海尔*"
    SearchSourceBuilder builder = new SearchSourceBuilder();
    builder.query(QueryBuilders.wildcardQuery("corpName", "海尔*"));
    request.source(builder);
    // 3. Execute the search
    SearchResponse response = client.search(request, RequestOptions.DEFAULT);
    // 4. Print the results
    for (SearchHit hit : response.getHits().getHits()) {
        Map<String, Object> result = hit.getSourceAsMap();
        System.out.println(result);
    }
}
```
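The wildcard semantics can be illustrated by translating a wildcard pattern into an equivalent Java regular expression: `*` becomes `.*`, `?` becomes `.`, and every other character is quoted literally. As with a wildcard query on a keyword field, the pattern must cover the whole term, so `matches()` is used rather than `find()`. This is a sketch with hypothetical names, not how Lucene evaluates wildcards internally (it compiles them into an automaton).

```java
import java.util.regex.Pattern;

public class WildcardDemo {
    // Translate a wildcard pattern into a regex: '*' -> ".*", '?' -> ".", other chars literal
    public static Pattern toRegex(String wildcard) {
        StringBuilder regex = new StringBuilder();
        StringBuilder literal = new StringBuilder();
        for (char c : wildcard.toCharArray()) {
            if (c == '*' || c == '?') {
                if (literal.length() > 0) {
                    regex.append(Pattern.quote(literal.toString()));
                    literal.setLength(0);
                }
                regex.append(c == '*' ? ".*" : ".");
            } else {
                literal.append(c);
            }
        }
        if (literal.length() > 0) {
            regex.append(Pattern.quote(literal.toString()));
        }
        return Pattern.compile(regex.toString());
    }

    // The whole term must match the pattern, not just a part of it
    public static boolean wildcardMatches(String wildcard, String term) {
        return toRegex(wildcard).matcher(term).matches();
    }

    public static void main(String[] args) {
        System.out.println(wildcardMatches("haier*", "haier smart home")); // true
        System.out.println(wildcardMatches("haier*", "gree haier"));       // false
        System.out.println(wildcardMatches("h?ier", "haier"));             // true
    }
}
```

A pattern that starts with `*` forces every term in the dictionary to be checked, which is one reason wildcard queries are among the slower query types.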

range query

Range query: specify a greater-than/less-than range on one field. It is typically used with numeric fields, but also works on dates and other ordered types.

```
# range query
POST /sms-logs-index/sms-logs-type/_search
{
  "query": {
    "range": {
      "fee": {
        "gte": 10,
        "lte": 50     # gt: >, lt: <, gte: >=, lte: <=
      }
    }
  }
}
```

Java implementation

```java
@Test
public void findByRange() throws IOException {
    // 1. Create the SearchRequest object
    SearchRequest request = new SearchRequest(index);
    request.types(type);
    // 2. Specify the query: 10 <= fee <= 50, same bounds as the REST example
    SearchSourceBuilder builder = new SearchSourceBuilder();
    builder.query(QueryBuilders.rangeQuery("fee").gte(10).lte(50));
    request.source(builder);
    // 3. Execute the search
    SearchResponse response = client.search(request, RequestOptions.DEFAULT);
    // 4. Print the results
    for (SearchHit hit : response.getHits().getHits()) {
        Map<String, Object> result = hit.getSourceAsMap();
        System.out.println(result);
    }
}
```

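regexp query

Regular expression query: matches content against a regular expression you write.

Note: prefix, wildcard, fuzzy, and regexp queries are relatively slow; avoid them when performance matters.

# regexp query: mobile numbers ending in 38

POST /sms-logs-index/sms-logs-type/_search

{
  "query": {
    "regexp": {
      "mobile": "[0-9]{9}38"
    }
  }
}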

Java implementation

@Test
public void findByRegexp() throws IOException {
    // 1. Create the SearchRequest
    SearchRequest request = new SearchRequest(index);
    request.types(type);
    // 2. Build the query: mobile is 9 digits followed by 38
    SearchSourceBuilder builder = new SearchSourceBuilder();
    builder.query(QueryBuilders.regexpQuery("mobile", "[0-9]{9}38"));
    request.source(builder);
    // 3. Execute
    SearchResponse response = client.search(request, RequestOptions.DEFAULT);
    // 4. Print the results
    for (SearchHit hit : response.getHits().getHits()) {
        Map<String, Object> result = hit.getSourceAsMap();
        System.out.println(result);
    }
}
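Lucene regular expressions are anchored: the pattern has to match the entire term, not just part of it, so [0-9]{9}38 only matches an 11-digit value ending in 38. Java's String.matches is anchored in the same way, so a sketch like the one below (hypothetical helper name) can be used to sanity-check simple patterns locally. Lucene's regex syntax is not identical to java.util.regex, so this only holds for basic constructs such as character classes and {n} repetition.

```java
import java.util.List;
import java.util.stream.Collectors;

// Hypothetical helper for checking a pattern locally; not ES code.
final class RegexpSketch {
    // Keeps only the terms the regex matches in full (String.matches is anchored at both ends).
    static List<String> filter(List<String> terms, String regex) {
        return terms.stream().filter(t -> t.matches(regex)).collect(Collectors.toList());
    }
}
```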

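Deep paging with Scroll

ES limits from + size: by default the sum of from and size may not exceed 10,000 (the index.max_result_window setting).

How it works

How ES executes a from + size query:

- Analyze (tokenize) the keywords the user supplied.

- Look the terms up in the term dictionary to get a set of document IDs.

- Fetch the matching data from each shard, which takes a long time.

- Sort the data by score, which also takes a long time.

- Discard the results that come before position from.

- Return the results.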
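The cost of deep from + size pages comes from the fact that every shard has to return its own top from + size hits, and the coordinating node then sorts all of them before discarding everything before from. The arithmetic is sketched below; the class is hypothetical, and 10,000 is the default value of the index.max_result_window setting.

```java
// Hypothetical arithmetic sketch of from + size paging cost; not ES code.
final class DeepPagingCost {
    static final int DEFAULT_MAX_RESULT_WINDOW = 10_000; // default index.max_result_window

    // Entries the coordinating node must sort for one request:
    // each shard sends its own top (from + size) hits.
    static long entriesToSort(int shards, int from, int size) {
        return (long) shards * (from + size);
    }

    // ES rejects the request when from + size exceeds the result window.
    static boolean allowed(int from, int size) {
        return from + size <= DEFAULT_MAX_RESULT_WINDOW;
    }
}
```

With 5 shards, the first page of 10 hits sorts 50 entries, but the page starting at 9990 sorts 50,000 entries to return the same 10 hits.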

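How ES executes a Scroll + size query:

- Analyze (tokenize) the keywords the user supplied.

- Look the terms up in the term dictionary to get a set of document IDs.

- Store the document IDs in a search context in ES.

- Fetch size documents from ES; the IDs of the documents already returned are removed from the context.

- For the next page, continue directly from the search context.

- Repeat steps 4 and 5 until all results have been retrieved.

A Scroll query works on the state of the index at the time of the first request, so it is not suitable for real-time queries.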
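The scroll steps above can be modelled with a small in-memory cursor: the matching IDs are resolved and sorted once, and each call hands out the next size IDs and removes them from the context. This is a hypothetical model for illustration only; a real scroll context lives on the shards and expires after its keep-alive time.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;

// Hypothetical in-memory model of a scroll context; not how ES stores it.
final class ScrollContextSketch {
    private final Deque<String> remainingIds;
    private final int size;

    // Steps 1-3: the matching IDs are resolved (and sorted) once and kept in the context.
    ScrollContextSketch(List<String> sortedMatchingIds, int size) {
        this.remainingIds = new ArrayDeque<>(sortedMatchingIds);
        this.size = size;
    }

    // Steps 4-5: hand out the next `size` IDs and drop them from the context.
    List<String> nextPage() {
        List<String> page = new ArrayList<>();
        while (page.size() < size && !remainingIds.isEmpty()) {
            page.add(remainingIds.pollFirst());
        }
        return page; // an empty page means the scroll is exhausted
    }
}
```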

# Scroll query: returns the first page and stores the document IDs in the ES context, with a keep-alive of 1m

POST /sms-logs-index/sms-logs-type/_search?scroll=1m

{
  "query": {
    "match_all": {}
  },
  "size": 2,
  "sort": [
    {"fee": {"order": "desc"}}
  ]
}

# Fetch the next page using the scroll_id

POST /_search/scroll

{
  "scroll_id": "DnF1ZXJ5VGhlbkZldGNoBQAAAAAAAWlZFnhjSU5MM0RBUk9hb2Eza1g5OWtzbncAAAAAAAFpWhZ4Y0lOTDNEQVJPYW9hM2tYOTlrc253AAAAAAABaVsWeGNJTkwzREFST2FvYTNrWDk5a3NudwAAAAAAAWlcFnhjSU5MM0RBUk9hb2Eza1g5OWtzbncAAAAAAAFpXRZ4Y0lOTDNEQVJPYW9hM2tYOTlrc253",   # the scroll_id returned by the previous request
  "scroll": "1m"   # keep-alive time of the scroll context
}

# Delete the scroll context from ES

DELETE /_search/scroll/DnF1ZXJ5VGhlbkZldGNoBQAAAAAAAWlZFnhjSU5MM0RBUk9hb2Eza1g5OWtzbncAAAAAAAFpWhZ4Y0lOTDNEQVJPYW9hM2tYOTlrc253AAAAAAABaVsWeGNJTkwzREFST2FvYTNrWDk5a3NudwAAAAAAAWlcFnhjSU5MM0RBUk9hb2Eza1g5OWtzbncAAAAAAAFpXRZ4Y0lOTDNEQVJPYW9hM2tYOTlrc253   # scroll_id

Java implementation

package com.example.test;

import com.example.utils.ESClient;
import com.fasterxml.jackson.databind.ObjectMapper;
import org.elasticsearch.action.search.*;
import org.elasticsearch.client.RequestOptions;
import org.elasticsearch.client.RestHighLevelClient;
import org.elasticsearch.common.unit.TimeValue;
import org.elasticsearch.index.query.QueryBuilders;
import org.elasticsearch.search.SearchHit;
import org.elasticsearch.search.builder.SearchSourceBuilder;
import org.elasticsearch.search.sort.SortOrder;
import org.junit.Test;

import java.io.IOException;

/**
 * @author : ryxiong728
 * @email : ryxiong728@126.com
 * @date : 3/2/21
 * @Description:
 */
public class demo07Scroll {
    ObjectMapper mapper = new ObjectMapper();
    RestHighLevelClient client = ESClient.getClient();
    String index = "sms-logs-index";
    String type = "sms-logs-type";

    @Test
    public void scrollQuery() throws IOException {
        // 1. Create the SearchRequest
        SearchRequest request = new SearchRequest(index);
        request.types(type);
        // 2. Set the scroll keep-alive
        request.scroll(TimeValue.timeValueMinutes(1L));
        // 3. Build the query
        SearchSourceBuilder builder = new SearchSourceBuilder();
        builder.size(4);
        builder.sort("fee", SortOrder.DESC);
        builder.query(QueryBuilders.matchAllQuery());
        request.source(builder);
        // 4. Execute and read the scrollId and the first page
        SearchResponse response = client.search(request, RequestOptions.DEFAULT);
        String scrollId = response.getScrollId();
        System.out.println("-------- first page --------");
        for (SearchHit hit : response.getHits().getHits()) {
            System.out.println(hit.getSourceAsMap());
        }
        while (true) {
            // 5. Create a SearchScrollRequest
            SearchScrollRequest scrollRequest = new SearchScrollRequest(scrollId);
            // 6. Renew the keep-alive of the scroll context
            scrollRequest.scroll(TimeValue.timeValueMinutes(1L));
            // 7. Execute and get the next page
            SearchResponse scrollResponse = client.scroll(scrollRequest, RequestOptions.DEFAULT);
            // Continue with the most recent scrollId; it may change between requests
            scrollId = scrollResponse.getScrollId();
            // 8. Print the hits if there are any
            SearchHit[] hits = scrollResponse.getHits().getHits();
            if (hits != null && hits.length > 0) {
                System.out.println("-------- next page --------");
                for (SearchHit hit : hits) {
                    System.out.println(hit.getSourceAsMap());
                }
            } else {
                // 9. No more hits: leave the loop
                System.out.println("-------- done --------");
                break;
            }
        }
        // 10. Create a ClearScrollRequest
        ClearScrollRequest clearScrollRequest = new ClearScrollRequest();
        // 11. Add the scrollId
        clearScrollRequest.addScrollId(scrollId);
        // 12. Delete the scroll context
        ClearScrollResponse clearScrollResponse = client.clearScroll(clearScrollRequest, RequestOptions.DEFAULT);
        // 13. Print the result
        System.out.println("scroll cleared: " + clearScrollResponse.isSucceeded());
    }
}

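delete-by-query

Deletes a large number of documents matched by a term, match, or other query.

Note: if the documents to delete make up most of the index, it is better to create a new index and add only the documents you want to keep to it.

# delete-by-query: delete the documents matched by a query

POST /sms-logs-index/sms-logs-type/_delete_by_query

{
  "query": {
    "range": {
      "fee": {
        "gte": 10,
        "lte": 15
      }
    }
  }
}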

Java implementation

package com.example.test;

import com.example.utils.ESClient;
import com.fasterxml.jackson.databind.ObjectMapper;
import org.elasticsearch.client.RequestOptions;
import org.elasticsearch.client.RestHighLevelClient;
import org.elasticsearch.index.query.QueryBuilders;
import org.elasticsearch.index.reindex.BulkByScrollResponse;
import org.elasticsearch.index.reindex.DeleteByQueryRequest;
import org.junit.Test;

import java.io.IOException;

/**
 * @author : ryxiong728
 * @email : ryxiong728@126.com
 * @date : 3/2/21
 * @Description:
 */
public class demo08Delete {
    ObjectMapper mapper = new ObjectMapper();
    RestHighLevelClient client = ESClient.getClient();
    String index = "sms-logs-index";
    String type = "sms-logs-type";

    @Test
    public void deleteByQuery() throws IOException {
        // 1. Create the DeleteByQueryRequest
        DeleteByQueryRequest request = new DeleteByQueryRequest(index);
        request.types(type);
        // 2. Set the query: 10 <= fee <= 15, the same range as the REST example
        request.setQuery(QueryBuilders.rangeQuery("fee").gte(10).lte(15));
        // 3. Execute the delete
        BulkByScrollResponse response = client.deleteByQuery(request, RequestOptions.DEFAULT);
        // 4. Print the response
        System.out.println(response.toString());
    }
}

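Compound queries

bool query

A compound query that combines several query clauses with boolean logic.

- must: every clause must match (AND)

- must_not: none of the clauses may match (NOT)

- should: at least one of the clauses should match (OR). When the bool query also has a must or filter clause, should clauses become optional and only raise the score, unless minimum_should_match is set.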
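The following sketch models how a bool query decides whether a document matches, with scoring ignored. It assumes the default of minimum_should_match described for ES: when there are should clauses but no must (or filter) clause, at least one should clause is required; otherwise should clauses are optional unless minimum_should_match is set. The class is hypothetical, has no filter clause, and treats documents as plain strings tested by predicates.

```java
import java.util.List;
import java.util.function.Predicate;

// Hypothetical model of bool-query matching; scoring is ignored.
final class BoolSketch {
    static <T> boolean matches(T doc,
                               List<Predicate<T>> must,
                               List<Predicate<T>> mustNot,
                               List<Predicate<T>> should,
                               Integer minimumShouldMatch) {
        for (Predicate<T> p : must) {
            if (!p.test(doc)) return false;   // must: every clause (AND)
        }
        for (Predicate<T> p : mustNot) {
            if (p.test(doc)) return false;    // must_not: no clause may match (NOT)
        }
        long shouldHits = should.stream().filter(p -> p.test(doc)).count();
        // Default: 1 when there are should clauses but no must clause, otherwise 0.
        int required = minimumShouldMatch != null
                ? minimumShouldMatch
                : (must.isEmpty() && !should.isEmpty() ? 1 : 0);
        return shouldHits >= required;
    }
}
```

This is why, without minimum_should_match, a document from a third province that satisfies the must clauses is still returned.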

# Compound query
# 1. province is Beijing or Wuhan
# 2. operator is not China Telecom (operatorId 3)
# 3. smsContent contains both 客厅 (living room) and 面积 (area)

POST /sms-logs-index/sms-logs-type/_search

{
  "query": {
    "bool": {
      "should": [
        {"term": {"province": {"value": "北京"}}},
        {"term": {"province": {"value": "武汉"}}}
      ],
      "minimum_should_match": 1,
      "must_not": [
        {"term": {"operatorId": {"value": "3"}}}
      ],
      "must": [
        {"match": {"smsContent": "客厅"}},
        {"match": {"smsContent": "面积"}}
      ]
    }
  }
}

Java implementation

package com.example.test;

import com.example.utils.ESClient;
import com.fasterxml.jackson.databind.ObjectMapper;
import org.elasticsearch.action.search.SearchRequest;
import org.elasticsearch.action.search.SearchResponse;
import org.elasticsearch.client.RequestOptions;
import org.elasticsearch.client.RestHighLevelClient;
import org.elasticsearch.index.query.BoolQueryBuilder;
import org.elasticsearch.index.query.QueryBuilders;
import org.elasticsearch.search.SearchHit;
import org.elasticsearch.search.builder.SearchSourceBuilder;
import org.junit.Test;

import java.io.IOException;

/**
 * @author : ryxiong728
 * @email : ryxiong728@126.com
 * @date : 3/2/21
 * @Description:
 */
public class demo08complex {
    ObjectMapper mapper = new ObjectMapper();
    RestHighLevelClient client = ESClient.getClient();
    String index = "sms-logs-index";
    String type = "sms-logs-type";

    @Test
    public void boolQuery() throws IOException {
        // 1. Create the SearchRequest
        SearchRequest request = new SearchRequest(index);
        request.types(type);
        // 2. Build the bool query
        SearchSourceBuilder builder = new SearchSourceBuilder();
        BoolQueryBuilder boolQueryBuilder = QueryBuilders.boolQuery();
        // province is Wuhan or Beijing; minimumShouldMatch(1) makes one should clause required
        boolQueryBuilder.should(QueryBuilders.termQuery("province", "武汉"));
        boolQueryBuilder.should(QueryBuilders.termQuery("province", "北京"));
        boolQueryBuilder.minimumShouldMatch(1);
        // operator is not China Telecom (operatorId 3)
        boolQueryBuilder.mustNot(QueryBuilders.termQuery("operatorId", 3));
        // smsContent contains both 面积 and 客厅
        boolQueryBuilder.must(QueryBuilders.matchQuery("smsContent", "面积"));
        boolQueryBuilder.must(QueryBuilders.matchQuery("smsContent", "客厅"));
        builder.query(boolQueryBuilder);
        request.source(builder);
        // 3. Execute
        SearchResponse response = client.search(request, RequestOptions.DEFAULT);
        // 4. Print the results
        for (SearchHit hit : response.getHits().getHits()) {
            System.out.println(hit.getSourceAsMap());
        }
    }
}

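boosting query

A boosting query lets us influence the score of the matched documents.

- positive: only documents that match the positive query are included in the results.

- negative: documents that match positive and also match negative get a lower score.

- negative_boost: the factor used to lower the score; it must be between 0 and 1.0.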
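The effect of negative_boost is a plain multiplication: a document that matches positive keeps its score, and if it also matches negative, that score is multiplied by negative_boost. A hypothetical sketch:

```java
// Hypothetical sketch of how a boosting query adjusts scores; not ES code.
final class BoostingSketch {
    // Returns null when the document does not match `positive` (it is not returned at all).
    static Double finalScore(boolean matchesPositive, double positiveScore,
                             boolean matchesNegative, double negativeBoost) {
        if (negativeBoost < 0 || negativeBoost > 1.0) {
            throw new IllegalArgumentException("negative_boost must be between 0 and 1.0");
        }
        if (!matchesPositive) {
            return null;
        }
        return matchesNegative ? positiveScore * negativeBoost : positiveScore;
    }
}
```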

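How the score is computed at query time:

- The more often the search keyword appears in a document, the higher the score.

- The shorter the field content, the higher the score.

- The query keywords are analyzed as well; the more of the resulting terms match, the higher the score.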
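These three rules follow from the BM25 formula that ES uses for scoring by default since 5.0. The sketch below implements the textbook per-term BM25 score with ES's default parameters k1 = 1.2 and b = 0.75; Lucene's implementation differs in small details, such as how field lengths are encoded, so treat it as an illustration. A multi-term query sums the per-term scores, which is why matching more of the analyzed terms gives a higher score. The class is hypothetical.

```java
// Hypothetical textbook BM25 sketch; Lucene's implementation differs in details.
final class Bm25Sketch {
    static final double K1 = 1.2;  // default k1 in Elasticsearch
    static final double B = 0.75;  // default b in Elasticsearch

    // Rarer terms (small docFreq) weigh more: ln(1 + (N - n + 0.5) / (n + 0.5)).
    static double idf(long docCount, long docFreq) {
        return Math.log(1 + (docCount - docFreq + 0.5) / (docFreq + 0.5));
    }

    // Score of one query term in one document.
    // tf: occurrences in the field; fieldLength / avgFieldLength drives length normalization.
    static double termScore(double tf, double fieldLength, double avgFieldLength,
                            long docCount, long docFreq) {
        double norm = K1 * (1 - B + B * fieldLength / avgFieldLength);
        return idf(docCount, docFreq) * tf * (K1 + 1) / (tf + norm);
    }
}
```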

# boosting query: 客厅面积 (living room area)

POST /sms-logs-index/sms-logs-type/_search

{
  "query": {
    "boosting": {
      "positive": {"match": {"smsContent": "客厅面积"}},
      "negative": {"match": {"smsContent": "差不多"}},
      "negative_boost": 0.5
    }
  }
}

Java implementation

@Test
public void boostingQuery() throws IOException {
    // 1. Create the SearchRequest
    SearchRequest request = new SearchRequest(index);
    request.types(type);
    // 2. Build the query: positive "客厅面积", negative "差不多", negative_boost 0.5
    SearchSourceBuilder builder = new SearchSourceBuilder();
    BoostingQueryBuilder boostingQueryBuilder = QueryBuilders.boostingQuery(
            QueryBuilders.matchQuery("smsContent", "客厅面积"),
            QueryBuilders.matchQuery("smsContent", "差不多")
    ).negativeBoost(0.5f);
    builder.query(boostingQueryBuilder);
    request.source(builder);
    // 3. Execute
    SearchResponse response = client.search(request, RequestOptions.DEFAULT);
    // 4. Print the results
    for (SearchHit hit : response.getHits().getHits()) {
        System.out.println(hit.getSourceAsMap());
    }
}

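filter query

Differences between query and filter:

- query: computes how well each document matches the conditions, produces a score, and sorts by it; results are not cached.

- filter: only checks whether documents match the conditions and computes no score; ES caches filters that are used frequently.

# filter query

POST /sms-logs-index/sms-logs-type/_search

{
  "query": {
    "bool": {
      "filter": [
        {"term": {"corpName": "苏宁易购"}},
        {"range": {"fee": {"gt": 4}}}
      ]
    }
  }
}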

Java implementation

package com.example.test;

import com.example.utils.ESClient;
import com.fasterxml.jackson.databind.ObjectMapper;
import org.elasticsearch.action.search.SearchRequest;
import org.elasticsearch.action.search.SearchResponse;
import org.elasticsearch.client.RequestOptions;
import org.elasticsearch.client.RestHighLevelClient;
import org.elasticsearch.index.query.BoolQueryBuilder;
import org.elasticsearch.index.query.QueryBuilders;
import org.elasticsearch.search.SearchHit;
import org.elasticsearch.search.builder.SearchSourceBuilder;
import org.junit.Test;

import java.io.IOException;

/**
 * @author : ryxiong728
 * @email : ryxiong728@126.com
 * @date : 3/2/21
 * @Description:
 */
public class demo10Filter {
    ObjectMapper mapper = new ObjectMapper();
    RestHighLevelClient client = ESClient.getClient();
    String index = "sms-logs-index";
    String type = "sms-logs-type";

    @Test
    public void filter() throws IOException {
        // 1. Create the SearchRequest
        SearchRequest request = new SearchRequest(index);
        request.types(type);
        // 2. Build the filter conditions (no scoring): corpName is 苏宁易购 and fee > 4
        SearchSourceBuilder builder = new SearchSourceBuilder();
        BoolQueryBuilder boolQueryBuilder = QueryBuilders.boolQuery();
        boolQueryBuilder.filter(QueryBuilders.termQuery("corpName", "苏宁易购"));
        boolQueryBuilder.filter(QueryBuilders.rangeQuery("fee").gt(4));
        builder.query(boolQueryBuilder);
        request.source(builder);
        // 3. Execute
        SearchResponse response = client.search(request, RequestOptions.DEFAULT);
        // 4. Print the results
        for (SearchHit hit : response.getHits().getHits()) {
            System.out.println(hit.getSourceAsMap());
        }
    }
}

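Highlight query

A highlight query shows the keywords the user typed in a special style, so the user can see why a result was returned.

The highlighted data comes from a field of the document; ES returns that field separately, in a highlight section.

ES provides a highlight property at the same level as query:

- fields: which fields to highlight

- fragment_size: how many characters each highlighted fragment contains

- pre_tags: the opening tag, e.g. <font color='red'>

- post_tags: the closing tag, e.g. </font>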
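Conceptually, highlighting wraps each matched keyword in pre_tags and post_tags. The sketch below does this with a plain substring search; it is a hypothetical simplification, since the real highlighters work on analyzed terms and also cut the text into fragments of about fragment_size characters.

```java
// Hypothetical simplified highlighter; not how ES highlighters work internally.
final class HighlightSketch {
    // Wraps every occurrence of `keyword` in `text` with preTag/postTag.
    static String highlight(String text, String keyword, String preTag, String postTag) {
        if (keyword.isEmpty()) return text;
        StringBuilder out = new StringBuilder();
        int from = 0;
        int idx;
        while ((idx = text.indexOf(keyword, from)) >= 0) {
            out.append(text, from, idx).append(preTag).append(keyword).append(postTag);
            from = idx + keyword.length();
        }
        return out.append(text.substring(from)).toString();
    }
}
```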

# highlight query

POST /sms-logs-index/sms-logs-type/_search

{
  "query": {
    "match": {"smsContent": "面积"}
  },
  "highlight": {
    "fields": {"smsContent": {}},
    "pre_tags": "<font color='red'>",
    "post_tags": "</font>",
    "fragment_size": 10
  }
}

Java implementation

package com.example.test;

import com.example.utils.ESClient;
import com.fasterxml.jackson.databind.ObjectMapper;
import org.elasticsearch.action.search.SearchRequest;
import org.elasticsearch.action.search.SearchResponse;
import org.elasticsearch.client.RequestOptions;
import org.elasticsearch.client.RestHighLevelClient;
import org.elasticsearch.index.query.QueryBuilders;
import org.elasticsearch.search.SearchHit;
import org.elasticsearch.search.builder.SearchSourceBuilder;
import org.elasticsearch.search.fetch.subphase.highlight.HighlightBuilder;
import org.junit.Test;

import java.io.IOException;

/**
 * @author : ryxiong728
 * @email : ryxiong728@126.com
 * @date : 3/2/21
 * @Description:
 */
public class demo11HighLight {
    ObjectMapper mapper = new ObjectMapper();
    RestHighLevelClient client = ESClient.getClient();
    String index = "sms-logs-index";
    String type = "sms-logs-type";

    @Test
    public void highlightQuery() throws IOException {
        // 1. Create the SearchRequest
        SearchRequest request = new SearchRequest(index);
        request.types(type);
        SearchSourceBuilder builder = new SearchSourceBuilder();
        // 2.1 Set the query
        builder.query(QueryBuilders.matchQuery("smsContent", "面积"));
        // 2.2 Configure highlighting: field smsContent, fragment size 10, custom tags
        HighlightBuilder highlightBuilder = new HighlightBuilder();
        highlightBuilder.field("smsContent", 10).preTags("<font color='red'>").postTags("</font>");
        builder.highlighter(highlightBuilder);
        request.source(builder);
        // 3. Execute
        SearchResponse response = client.search(request, RequestOptions.DEFAULT);
        // 4. Print the highlighted fragments
        for (SearchHit hit : response.getHits().getHits()) {
            System.out.println(hit.getHighlightFields().get("smsContent"));
        }
    }
}

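Aggregation queries

ES aggregations are similar to aggregate queries in MySQL but more powerful, offering many kinds of statistics.

# General RESTful syntax of an aggregation

POST /sms-logs-index/sms-logs-type/_search

{
  "aggs": {
    "name (e.g. agg)": {
      "agg_type": {
        "property": "value"
      }
    }
  }
}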

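Distinct count

Distinct count: cardinality

- Deduplicates the values of a given field across the matched documents and counts how many distinct values there are.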
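An exact distinct count is simply the size of a set of values, as in the hypothetical sketch below (documents are modelled as maps). ES does not compute cardinality this way: it uses the HyperLogLog++ algorithm, so the result is approximate, and counts below the precision_threshold setting (default 3000) are expected to be close to exact.

```java
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

// Hypothetical exact distinct count; ES's cardinality aggregation is approximate.
final class CardinalitySketch {
    // Documents without the field are skipped.
    static int distinctCount(List<Map<String, Object>> docs, String field) {
        Set<Object> seen = new HashSet<>();
        for (Map<String, Object> doc : docs) {
            Object value = doc.get(field);
            if (value != null) {
                seen.add(value);
            }
        }
        return seen.size();
    }
}
```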

# cardinality: distinct count

POST /sms-logs-index/sms-logs-type/_search

{
  "aggs": {
    "agg": {
      "cardinality": {"field": "province"}
    }
  }
}

Java implementation

package com.example.test;

import com.example.utils.ESClient;
import com.fasterxml.jackson.databind.ObjectMapper;
import org.elasticsearch.action.search.SearchRequest;
import org.elasticsearch.action.search.SearchResponse;
import org.elasticsearch.client.RequestOptions;
import org.elasticsearch.client.RestHighLevelClient;
import org.elasticsearch.search.aggregations.AggregationBuilders;
import org.elasticsearch.search.aggregations.metrics.cardinality.Cardinality;
import org.elasticsearch.search.builder.SearchSourceBuilder;
import org.junit.Test;

import java.io.IOException;

/**
 * @author : ryxiong728
 * @email : ryxiong728@126.com
 * @date : 3/2/21
 * @Description:
 */
public class Demo12Aggs {
    ObjectMapper mapper = new ObjectMapper();
    RestHighLevelClient client = ESClient.getClient();
    String index = "sms-logs-index";
    String type = "sms-logs-type";

    @Test
    public void cardinality() throws IOException {
        // 1. Create the SearchRequest
        SearchRequest request = new SearchRequest(index);
        request.types(type);
        // 2. Define the aggregation: distinct count of province
        SearchSourceBuilder builder = new SearchSourceBuilder();
        builder.aggregation(AggregationBuilders.cardinality("agg").field("province"));
        request.source(builder);
        // 3. Execute
        SearchResponse response = client.search(request, RequestOptions.DEFAULT);
        // 4. Read the result; get() returns the aggregation as the declared type (Cardinality)
        Cardinality agg = response.getAggregations().get("agg");
        long value = agg.getValue();
        System.out.println(value);
    }
}

Range aggregation

Counts the documents whose value falls into each range, e.g. how many documents have a field value between 0 and 100, between 100 and 200, and so on. Each range includes its from value and excludes its to value.

Range aggregations work on plain numbers, dates, and IP addresses:

- range: numeric ranges

# Numeric range aggregation

POST /sms-logs-index/sms-logs-type/_search

{
  "aggs": {
    "agg": {
      "range": {
        "field": "fee",
        "ranges": [
          {"from": 10, "to": 50},
          {"from": 50, "to": 100},
          {"from": 100}
        ]
      }
    }
  }
}

- date_range: date ranges

# Date range aggregation

POST /sms-logs-index/sms-logs-type/_search

{
  "aggs": {
    "agg": {
      "date_range": {
        "field": "createDate",
        "format": "yyyy",
        "ranges": [
          {"to": "1996"},
          {"from": "1996"}
        ]
      }
    }
  }
}

- ip_range: IP address ranges

# IP range aggregation

POST /sms-logs-index/sms-logs-type/_search

{
  "aggs": {
    "agg": {
      "ip_range": {
        "field": "ipAddr",
        "ranges": [
          {"to": "127.0.0.10"},
          {"from": "127.0.0.10"}
        ]
      }
    }
  }
}
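The bucket rule (from inclusive, to exclusive, a missing bound means open-ended) can be sketched as follows. The classes are hypothetical and count one value per document; a value is counted in every bucket it falls into, so overlapping ranges can count the same document more than once.

```java
import java.util.List;

// Hypothetical model of range-aggregation buckets; not ES code.
final class RangeAggSketch {
    static final class Bucket {
        final Double from; // inclusive; null = unbounded
        final Double to;   // exclusive; null = unbounded
        long docCount;

        Bucket(Double from, Double to) {
            this.from = from;
            this.to = to;
        }

        boolean contains(double v) {
            return (from == null || v >= from) && (to == null || v < to);
        }
    }

    // Adds each value to every bucket that contains it.
    static List<Bucket> aggregate(double[] values, List<Bucket> buckets) {
        for (double v : values) {
            for (Bucket b : buckets) {
                if (b.contains(v)) {
                    b.docCount++;
                }
            }
        }
        return buckets;
    }
}
```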

Java implementation

Numeric range aggregation

@Test
public void range() throws IOException {
    // 1. Create the SearchRequest
    SearchRequest request = new SearchRequest(index);
    request.types(type);
    // 2. Define the aggregation: fee < 10, 10 <= fee < 50, fee >= 50
    SearchSourceBuilder builder = new SearchSourceBuilder();
    builder.aggregation(AggregationBuilders.range("agg").field("fee")
            .addUnboundedTo(10)
            .addRange(10, 50)
            .addUnboundedFrom(50));
    request.source(builder);
    // 3. Execute
    SearchResponse response = client.search(request, RequestOptions.DEFAULT);
    // 4. Print each bucket
    Range agg = response.getAggregations().get("agg");
    for (Range.Bucket bucket : agg.getBuckets()) {
        String key = bucket.getKeyAsString();
        Object from = bucket.getFrom();
        Object to = bucket.getTo();
        long docCount = bucket.getDocCount();
        System.out.println(String.format("key: %s, from: %s, to: %s, doc: %s", key, from, to, docCount));
    }
}

The date_range and ip_range aggregations are implemented in a similar way.

Stats aggregation

Returns statistics for a given field: the maximum, minimum, average, sum of squares, and more.

Use extended_stats for this.
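The main extended_stats values can be computed in one pass over the data, as in this hypothetical sketch. variance here is the population variance, sum_of_squares / count - avg², which is what the variance field of extended_stats reports; the empty case is simplified (ES returns null values instead of infinities and NaN).

```java
// Hypothetical one-pass computation of extended_stats-like values; not ES code.
final class ExtendedStatsSketch {
    final long count;
    final double min, max, sum, sumOfSquares, avg, variance, stdDeviation;

    ExtendedStatsSketch(double[] values) {
        long n = 0;
        double mn = Double.POSITIVE_INFINITY;
        double mx = Double.NEGATIVE_INFINITY;
        double s = 0;
        double sq = 0;
        for (double v : values) {
            n++;
            mn = Math.min(mn, v);
            mx = Math.max(mx, v);
            s += v;
            sq += v * v;
        }
        count = n;
        min = mn;
        max = mx;
        sum = s;
        sumOfSquares = sq;
        avg = n == 0 ? Double.NaN : s / n;
        // Population variance: E[x^2] - (E[x])^2
        variance = n == 0 ? Double.NaN : sq / n - avg * avg;
        stdDeviation = Math.sqrt(variance);
    }
}
```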

# Stats aggregation

POST /sms-logs-index/sms-logs-type/_search

{
  "aggs": {
    "agg": {
      "extended_stats": {"field": "fee"}
    }
  }
}

Java implementation of the stats aggregation

@Test
public void extendedStats() throws IOException {
    // 1. Create the SearchRequest
    SearchRequest request = new SearchRequest(index);
    request.types(type);
    // 2. Define the aggregation: extended_stats on fee
    SearchSourceBuilder builder = new SearchSourceBuilder();
    builder.aggregation(AggregationBuilders.extendedStats("agg").field("fee"));
    request.source(builder);
    // 3. Execute
    SearchResponse response = client.search(request, RequestOptions.DEFAULT);
    // 4. Read the result
    ExtendedStats agg = response.getAggregations().get("agg");
    double max = agg.getMax();
    double min = agg.getMin();
    System.out.println("Max fee: " + max + ", min fee: " + min);
}

Geo search by longitude and latitude

ES provides a geo_point data type for storing longitude/latitude pairs.

# Create an index with two fields: name and location

PUT /map

{
  "settings": {
    "number_of_replicas": 1,
    "number_of_shards": 5
  },
  "mappings": {
    "map": {
      "properties": {
        "name": {"type": "text"},
        "location": {"type": "geo_point"}
      }
    }
  }
}

# Add test data

PUT /map/map/1

{
  "name": "天安门",
  "location": {"lon": 116.402981, "lat": 39.914492}
}

PUT /map/map/2

{
  "name": "海淀公园",
  "location": {"lon": 116.302509, "lat": 39.991152}
}

PUT /map/map/3

{
  "name": "北京动物园",
  "location": {"lon": 116.343184, "lat": 39.947468}
}

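ES geo query types

- geo_distance: matches documents within a given straight-line distance of a point

- geo_bounding_box: two points define a rectangle; returns all documents inside it

- geo_polygon: several points define a polygon; returns all documents inside it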

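Geo search via the RESTful API

geo_distance

# geo_distance

POST /map/map/_search

{
  "query": {
    "geo_distance": {
      "location": {              # the center point
        "lon": 116.433733,
        "lat": 39.908404
      },
      "distance": 3000,          # radius (meters when no unit is given)
      "distance_type": "arc"     # how distance is computed: arc (accurate, the default) or plane (faster)
    }
  }
}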
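With distance_type arc, ES measures the great-circle distance between the center and each point. The haversine sketch below computes that distance with a mean Earth radius of about 6,371 km; ES's own implementation may differ slightly. With the center used above, 天安门 (Tiananmen) is roughly 2.7 km away and falls inside the 3000 m radius, while 海淀公园 (Haidian Park) does not. The class name is hypothetical.

```java
// Hypothetical great-circle distance sketch; not ES code.
final class GeoDistanceSketch {
    static final double EARTH_RADIUS_M = 6_371_008.8; // mean Earth radius in meters

    // Haversine formula: great-circle distance between two (lat, lon) points in meters.
    static double haversineMeters(double lat1, double lon1, double lat2, double lon2) {
        double dLat = Math.toRadians(lat2 - lat1);
        double dLon = Math.toRadians(lon2 - lon1);
        double a = Math.sin(dLat / 2) * Math.sin(dLat / 2)
                + Math.cos(Math.toRadians(lat1)) * Math.cos(Math.toRadians(lat2))
                * Math.sin(dLon / 2) * Math.sin(dLon / 2);
        return 2 * EARTH_RADIUS_M * Math.asin(Math.sqrt(a));
    }

    // geo_distance-style check: is the point within distanceMeters of the center?
    static boolean withinDistance(double centerLat, double centerLon,
                                  double lat, double lon, double distanceMeters) {
        return haversineMeters(centerLat, centerLon, lat, lon) <= distanceMeters;
    }
}
```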

geo_bounding_box

# geo_bounding_box

POST /map/map/_search

{
  "query": {
    "geo_bounding_box": {
      "location": {
        "top_left": {"lon": 116.326943, "lat": 39.95499},
        "bottom_right": {"lon": 116.347783, "lat": 39.939281}
      }
    }
  }
}
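A bounding-box check comes down to two interval tests. This hypothetical sketch ignores boxes that cross the 180° meridian; with the box above, 北京动物园 (Beijing Zoo) lies inside and 天安门 does not.

```java
// Hypothetical bounding-box check; not ES code.
final class BoundingBoxSketch {
    // Inside when latitude is between bottom and top and longitude is between left and right.
    static boolean inBox(double topLeftLat, double topLeftLon,
                         double bottomRightLat, double bottomRightLon,
                         double lat, double lon) {
        return lat <= topLeftLat && lat >= bottomRightLat
                && lon >= topLeftLon && lon <= bottomRightLon;
    }
}
```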

geo_polygon

# geo_polygon

POST /map/map/_search

{
  "query": {
    "geo_polygon": {
      "location": {
        "points": [
          {"lon": 116.298916, "lat": 39.99878},
          {"lon": 116.29561, "lat": 39.972576},
          {"lon": 116.327661, "lat": 39.984736}
        ]
      }
    }
  }
}
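A point-in-polygon test can be sketched with the even-odd ray-casting rule. This hypothetical helper treats longitude and latitude as plane coordinates, which is a reasonable approximation for a small area like the triangle above; with those three points, 海淀公园 lies inside and 天安门 does not.

```java
// Hypothetical even-odd ray-casting test; not ES code.
final class PolygonSketch {
    // Casts a ray from the point towards increasing longitude and counts edge crossings.
    static boolean inPolygon(double[] lats, double[] lons, double lat, double lon) {
        boolean inside = false;
        for (int i = 0, j = lats.length - 1; i < lats.length; j = i++) {
            boolean crosses = (lats[i] > lat) != (lats[j] > lat);
            if (crosses) {
                // Longitude where edge j -> i reaches the point's latitude
                double lonAtLat = lons[j] + (lat - lats[j]) * (lons[i] - lons[j]) / (lats[i] - lats[j]);
                if (lon < lonAtLat) {
                    inside = !inside;
                }
            }
        }
        return inside;
    }
}
```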

Java implementation of geo_polygon

package com.example.test;

import com.example.utils.ESClient;
import org.elasticsearch.action.search.SearchRequest;
import org.elasticsearch.action.search.SearchResponse;
import org.elasticsearch.client.RequestOptions;
import org.elasticsearch.client.RestHighLevelClient;
import org.elasticsearch.common.geo.GeoPoint;
import org.elasticsearch.index.query.QueryBuilders;
import org.elasticsearch.search.SearchHit;
import org.elasticsearch.search.builder.SearchSourceBuilder;
import org.junit.Test;

import java.io.IOException;
import java.util.ArrayList;
import java.util.List;

/**
 * @author : ryxiong728
 * @email : ryxiong728@126.com
 * @date : 3/2/21
 * @Description:
 */
public class Demo13GeoSearch {
    RestHighLevelClient client = ESClient.getClient();
    String index = "map";
    String type = "map";

    @Test
    public void geoPolygon() throws IOException {
        // 1. Create the SearchRequest
        SearchRequest request = new SearchRequest(index);
        request.types(type);
        // 2. Define the polygon (same points as the REST example); GeoPoint takes (lat, lon)
        SearchSourceBuilder builder = new SearchSourceBuilder();
        List<GeoPoint> points = new ArrayList<>();
        points.add(new GeoPoint(39.99878, 116.298916));
        points.add(new GeoPoint(39.972576, 116.29561));
        points.add(new GeoPoint(39.984736, 116.327661));
        builder.query(QueryBuilders.geoPolygonQuery("location", points));
        request.source(builder);
        // 3. Execute
        SearchResponse response = client.search(request, RequestOptions.DEFAULT);
        // 4. Print the results
        for (SearchHit hit : response.getHits().getHits()) {
            System.out.println(hit.getSourceAsMap());
        }
    }
}