The nvidia-smi command explained

1. Introduction to nvidia-smi

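In deep learning and similar workloads, nvidia-smi is one of the commands we run most often. It shows how busy each GPU is, and it is essentially a must-know. Most users only ever run plain nvidia-smi, and that one command already covers the vast majority of situations. In the spirit of being thorough, though, this article surveys the relevant material and collects a number of other commonly used nvidia-smi options.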

2. GPUs supported by nvidia-smi

NVIDIA's SMI tool supports essentially every NVIDIA GPU released since 2011, including Tesla, Quadro, and GeForce devices from the Fermi architecture onward (Kepler, Maxwell, Pascal, Volta, Turing, Ampere, and so on).

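Supported products include:

Tesla: S1070, S2050, C1060, C2050/70, M2050/70/90, X2070/90, K10, K20, K20X, K40, K80, M40, P40, P100, V100, A100, H100

Quadro: 4000, 5000, 6000, 7000, M2070-Q, K series, M series, P series

RTX series

GeForce: varying levels of support, with fewer metrics available than on Tesla and Quadro products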

3. Commands supported by both desktop and server GPUs

(1) nvidia-smi

username@hostname:~# nvidia-smi

Mon Dec 5 08:48:49 2022
+-----------------------------------------------------------------------------+
| NVIDIA-SMI 450.172.01   Driver Version: 450.172.01   CUDA Version: 11.0     |
|-------------------------------+----------------------+----------------------+
| GPU  Name        Persistence-M| Bus-Id        Disp.A | Volatile Uncorr. ECC |
| Fan  Temp  Perf  Pwr:Usage/Cap|         Memory-Usage | GPU-Util  Compute M. |
|                               |                      |               MIG M. |
|===============================+======================+======================|
|   0  A100-SXM4-40GB      On   | 00000000:26:00.0 Off |                    0 |
| N/A   22C    P0    54W / 400W |      0MiB / 40537MiB |      0%      Default |
|                               |                      |             Disabled |
+-------------------------------+----------------------+----------------------+
|   1  A100-SXM4-40GB      On   | 00000000:2C:00.0 Off |                    0 |
| N/A   25C    P0    53W / 400W |      0MiB / 40537MiB |      0%      Default |
|                               |                      |             Disabled |
+-------------------------------+----------------------+----------------------+
|   2  A100-SXM4-40GB      On   | 00000000:66:00.0 Off |                    0 |
| N/A   25C    P0    50W / 400W |      0MiB / 40537MiB |      0%      Default |
|                               |                      |             Disabled |
+-------------------------------+----------------------+----------------------+
|   3  A100-SXM4-40GB      On   | 00000000:6B:00.0 Off |                    0 |
| N/A   23C    P0    51W / 400W |      0MiB / 40537MiB |      0%      Default |
|                               |                      |             Disabled |
+-------------------------------+----------------------+----------------------+
|   4  A100-SXM4-40GB      On   | 00000000:A2:00.0 Off |                    0 |
| N/A   23C    P0    57W / 400W |      0MiB / 40537MiB |      0%      Default |
|                               |                      |             Disabled |
+-------------------------------+----------------------+----------------------+
|   5  A100-SXM4-40GB      On   | 00000000:A7:00.0 Off |                    0 |
| N/A   25C    P0    52W / 400W |      0MiB / 40537MiB |      0%      Default |
|                               |                      |             Disabled |
+-------------------------------+----------------------+----------------------+

+-----------------------------------------------------------------------------+
| Processes:                                                                  |
|  GPU   GI   CI        PID   Type   Process name                  GPU Memory |
|        ID   ID                                                   Usage      |
|=============================================================================|
+-----------------------------------------------------------------------------+

The fields in the output above are explained below. Self-explanatory ones are skipped; only the less familiar ones are listed:

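Persistence-M (persistence mode): persistence mode lets the GPU respond to new jobs faster, at the cost of higher idle power draw. Jobs can still be launched with persistence mode off; the trade-off is that persistence mode uses more power, but new GPU applications take less time to start.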

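Fan: actively cooled cards usually report this value, whereas server cards are generally passively cooled and show N/A. The value ranges from 0 to 100% and is the fan speed the system is requesting; if the fan is blocked, the actual speed may not reach the displayed value. Some devices do not report a fan speed at all because they do not rely on their own fans for cooling and are instead kept cool by external equipment (some lab servers, for example, sit in air-conditioned rooms all year).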

Temp: GPU temperature in degrees Celsius.

Perf (performance state): ranges from P0 to P12, where P0 is maximum performance and P12 is minimum performance.

Disp.A: Display Active, indicates whether a display is initialized on the GPU.

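Volatile Uncorr. ECC: the number of uncorrectable ECC (error-correcting code) memory errors since the driver was last loaded. Only some GPUs support ECC (mainly data-center and professional cards; older cards lack it), and GPUs without ECC show N/A here.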

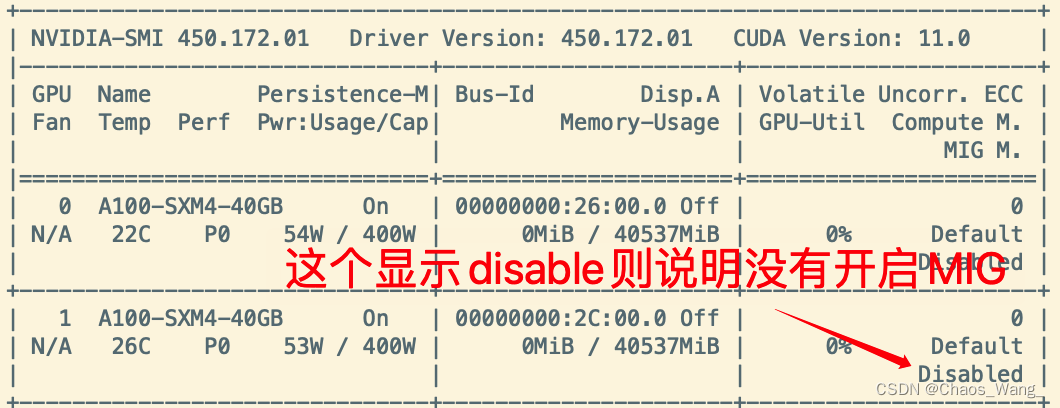

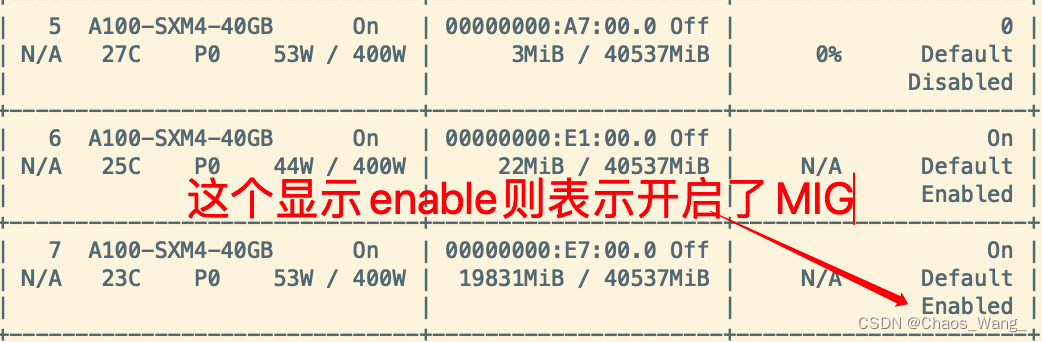
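The columns explained above can also be read in machine-readable form through the --query-gpu and --format options listed in the nvidia-smi -h output later in this article. Below is a minimal Python sketch; the field list is my own illustrative choice meant to mirror the summary columns (nvidia-smi --help-query-gpu prints the full set), and only run_query actually needs a machine with an NVIDIA driver.

```python
import csv
import io
import subprocess

# Illustrative field selection mirroring the summary columns explained above.
FIELDS = [
    "index", "name", "persistence_mode", "fan.speed", "temperature.gpu",
    "pstate", "power.draw", "memory.used", "memory.total", "utilization.gpu",
]


def build_query_cmd(fields=FIELDS):
    """argv for a CSV query without the header line and without units."""
    return ["nvidia-smi", "--query-gpu=" + ",".join(fields),
            "--format=csv,noheader,nounits"]


def parse_query_output(text, fields=FIELDS):
    """Turn nvidia-smi CSV output into one dict (field -> raw string) per GPU."""
    rows = []
    for record in csv.reader(io.StringIO(text)):
        if not record:
            continue  # skip blank lines
        rows.append({f: v.strip() for f, v in zip(fields, record)})
    return rows


def run_query(fields=FIELDS):
    """Run nvidia-smi (needs an NVIDIA driver) and parse the result."""
    out = subprocess.run(build_query_cmd(fields), capture_output=True,
                         text=True, check=True).stdout
    return parse_query_output(out, fields)
```

Each returned dict maps a field name to the raw string nvidia-smi printed, so numeric fields still need converting; values a card cannot report (such as the fan speed of a passively cooled server GPU) come back as placeholders rather than numbers.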

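MIG: Multi-Instance GPU, which partitions one physical GPU into several smaller GPUs. It is supported starting with the NVIDIA Ampere architecture.

The Multi-Instance GPU (MIG) feature allows a GPU (starting with the NVIDIA Ampere architecture) to be securely partitioned into up to seven separate GPU instances for CUDA applications, giving multiple users isolated GPU resources and improving overall GPU utilization.

This is especially useful for workloads that do not fully saturate the GPU's compute capacity, where users may want to run different workloads in parallel to maximize utilization.

With MIG enabled on some of the GPUs, the nvidia-smi output looks like this: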

Mon Dec 5 16:48:56 2022
+-----------------------------------------------------------------------------+
| NVIDIA-SMI 450.172.01   Driver Version: 450.172.01   CUDA Version: 11.0     |
|-------------------------------+----------------------+----------------------+
| GPU  Name        Persistence-M| Bus-Id        Disp.A | Volatile Uncorr. ECC |
| Fan  Temp  Perf  Pwr:Usage/Cap|         Memory-Usage | GPU-Util  Compute M. |
|                               |                      |               MIG M. |
|===============================+======================+======================|
|   0  A100-SXM4-40GB      On   | 00000000:26:00.0 Off |                    0 |
| N/A   22C    P0    54W / 400W |      0MiB / 40537MiB |      0%      Default |
|                               |                      |             Disabled |
+-------------------------------+----------------------+----------------------+
|   1  A100-SXM4-40GB      On   | 00000000:2C:00.0 Off |                    0 |
| N/A   24C    P0    53W / 400W |      0MiB / 40537MiB |      0%      Default |
|                               |                      |             Disabled |
+-------------------------------+----------------------+----------------------+
|   2  A100-SXM4-40GB      On   | 00000000:66:00.0 Off |                    0 |
| N/A   25C    P0    50W / 400W |      0MiB / 40537MiB |      0%      Default |
|                               |                      |             Disabled |
+-------------------------------+----------------------+----------------------+
|   3  A100-SXM4-40GB      On   | 00000000:6B:00.0 Off |                    0 |
| N/A   23C    P0    51W / 400W |      0MiB / 40537MiB |      0%      Default |
|                               |                      |             Disabled |
+-------------------------------+----------------------+----------------------+
|   4  A100-SXM4-40GB      On   | 00000000:A2:00.0 Off |                    0 |
| N/A   23C    P0    56W / 400W |   3692MiB / 40537MiB |      0%      Default |
|                               |                      |             Disabled |
+-------------------------------+----------------------+----------------------+
|   5  A100-SXM4-40GB      On   | 00000000:A7:00.0 Off |                    0 |
| N/A   25C    P0    52W / 400W |      0MiB / 40537MiB |      0%      Default |
|                               |                      |             Disabled |
+-------------------------------+----------------------+----------------------+
|   6  A100-SXM4-40GB      On   | 00000000:E1:00.0 Off |                   On |
| N/A   24C    P0    43W / 400W |     22MiB / 40537MiB |     N/A      Default |
|                               |                      |              Enabled |
+-------------------------------+----------------------+----------------------+
|   7  A100-SXM4-40GB      On   | 00000000:E7:00.0 Off |                   On |
| N/A   22C    P0    52W / 400W |  19831MiB / 40537MiB |     N/A      Default |
|                               |                      |              Enabled |
+-------------------------------+----------------------+----------------------+

+-----------------------------------------------------------------------------+
| MIG devices:                                                                |
+------------------+----------------------+-----------+-----------------------+
| GPU  GI  CI  MIG |         Memory-Usage |        Vol|         Shared        |
|      ID  ID  Dev |           BAR1-Usage | SM     Unc| CE  ENC  DEC  OFA  JPG|
|                  |                      |        ECC|                       |
|==================+======================+===========+=======================|
|  6    1   0   0  |     11MiB / 20096MiB | 42      0 |  3   0    2    0    0 |
|                  |      0MiB / 32767MiB |           |                       |
+------------------+----------------------+-----------+-----------------------+
|  6    2   0   1  |     11MiB / 20096MiB | 42      0 |  3   0    2    0    0 |
|                  |      0MiB / 32767MiB |           |                       |
+------------------+----------------------+-----------+-----------------------+
|  7    1   0   0  |     11MiB / 20096MiB | 42      0 |  3   0    2    0    0 |
|                  |      0MiB / 32767MiB |           |                       |
+------------------+----------------------+-----------+-----------------------+
|  7    2   0   1  |  19820MiB / 20096MiB | 42      0 |  3   0    2    0    0 |
|                  |      4MiB / 32767MiB |           |                       |
+------------------+----------------------+-----------+-----------------------+

+-----------------------------------------------------------------------------+
| Processes:                                                                  |
|  GPU   GI   CI        PID   Type   Process name                  GPU Memory |
|        ID   ID                                                   Usage      |
|=============================================================================|
|    4  N/A  N/A      22888      C   python                           3689MiB |
|    7    2    0      37648      C   ...vs/tf2.4-py3.8/bin/python    19805MiB |
+-----------------------------------------------------------------------------+

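In the output above, MIG has been used to split each of GPUs 6 and 7 into two instances, giving four virtual GPUs: GPU6:0, GPU6:1, GPU7:0, and GPU7:1.

Each instance has about 20 GB of memory (20096 MiB). MIG can split an A100 into at most seven instances; I will cover it in more detail in a later post.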

(2) nvidia-smi -h

Run nvidia-smi -h to print the command's help text:

username@hostname:$ nvidia-smi -h

NVIDIA System Management Interface -- v450.172.01

NVSMI provides monitoring information for Tesla and select Quadro devices.
The data is presented in either a plain text or an XML format, via stdout or a file.
NVSMI also provides several management operations for changing the device state.

Note that the functionality of NVSMI is exposed through the NVML C-based
library. See the NVIDIA developer website for more information about NVML.
Python wrappers to NVML are also available. The output of NVSMI is
not guaranteed to be backwards compatible; NVML and the bindings are backwards
compatible.

http://developer.nvidia.com/nvidia-management-library-nvml/
http://pypi.python.org/pypi/nvidia-ml-py/

Supported products:
- Full Support
    - All Tesla products, starting with the Kepler architecture
    - All Quadro products, starting with the Kepler architecture
    - All GRID products, starting with the Kepler architecture
    - GeForce Titan products, starting with the Kepler architecture
- Limited Support
    - All Geforce products, starting with the Kepler architecture

nvidia-smi [OPTION1 [ARG1]] [OPTION2 [ARG2]] ...

    -h,   --help                Print usage information and exit.

  LIST OPTIONS:
    -L,   --list-gpus           Display a list of GPUs connected to the system.
    -B,   --list-blacklist-gpus Display a list of blacklisted GPUs in the system.

  SUMMARY OPTIONS:
    <no arguments>              Show a summary of GPUs connected to the system.
    [plus any of]
    -i,   --id=                 Target a specific GPU.
    -f,   --filename=           Log to a specified file, rather than to stdout.
    -l,   --loop=               Probe until Ctrl+C at specified second interval.

  QUERY OPTIONS:
    -q,   --query               Display GPU or Unit info.
    [plus any of]
    -u,   --unit                Show unit, rather than GPU, attributes.
    -i,   --id=                 Target a specific GPU or Unit.
    -f,   --filename=           Log to a specified file, rather than to stdout.
    -x,   --xml-format          Produce XML output.
          --dtd                 When showing xml output, embed DTD.
    -d,   --display=            Display only selected information: MEMORY,
                                UTILIZATION, ECC, TEMPERATURE, POWER, CLOCK,
                                COMPUTE, PIDS, PERFORMANCE, SUPPORTED_CLOCKS,
                                PAGE_RETIREMENT, ACCOUNTING, ENCODER_STATS,
                                FBC_STATS, ROW_REMAPPER
                                Flags can be combined with comma e.g. ECC,POWER.
                                Sampling data with max/min/avg is also returned
                                for POWER, UTILIZATION and CLOCK display types.
                                Doesn't work with -u or -x flags.
    -l,   --loop=               Probe until Ctrl+C at specified second interval.
    -lms, --loop-ms=            Probe until Ctrl+C at specified millisecond interval.

  SELECTIVE QUERY OPTIONS:
    Allows the caller to pass an explicit list of properties to query.
    [one of]
    --query-gpu=                Information about GPU.
                                Call --help-query-gpu for more info.
    --query-supported-clocks=   List of supported clocks.
                                Call --help-query-supported-clocks for more info.
    --query-compute-apps=       List of currently active compute processes.
                                Call --help-query-compute-apps for more info.
    --query-accounted-apps=     List of accounted compute processes.
                                Call --help-query-accounted-apps for more info.
                                This query is not supported on vGPU host.
    --query-retired-pages=      List of device memory pages that have been retired.
                                Call --help-query-retired-pages for more info.
    --query-remapped-rows=      Information about remapped rows.
                                Call --help-query-remapped-rows for more info.
    [mandatory]
    --format=                   Comma separated list of format options:
                                  csv - comma separated values (MANDATORY)
                                  noheader - skip the first line with column headers
                                  nounits - don't print units for numerical
                                            values
    [plus any of]
    -i,   --id=                 Target a specific GPU or Unit.
    -f,   --filename=           Log to a specified file, rather than to stdout.
    -l,   --loop=               Probe until Ctrl+C at specified second interval.
    -lms, --loop-ms=            Probe until Ctrl+C at specified millisecond interval.

  DEVICE MODIFICATION OPTIONS:
    [any one of]
    -pm,  --persistence-mode=   Set persistence mode: 0/DISABLED, 1/ENABLED
    -e,   --ecc-config=         Toggle ECC support: 0/DISABLED, 1/ENABLED
    -p,   --reset-ecc-errors=   Reset ECC error counts: 0/VOLATILE, 1/AGGREGATE
    -c,   --compute-mode=       Set MODE for compute applications:
                                0/DEFAULT, 1/EXCLUSIVE_PROCESS,
                                2/PROHIBITED
          --gom=                Set GPU Operation Mode:
                                0/ALL_ON, 1/COMPUTE, 2/LOW_DP
    -r    --gpu-reset           Trigger reset of the GPU.
                                Can be used to reset the GPU HW state in situations
                                that would otherwise require a machine reboot.
                                Typically useful if a double bit ECC error has
                                occurred.
                                Reset operations are not guarenteed to work in
                                all cases and should be used with caution.
    -vm   --virt-mode=          Switch GPU Virtualization Mode:
                                Sets GPU virtualization mode to 3/VGPU or 4/VSGA
                                Virtualization mode of a GPU can only be set when
                                it is running on a hypervisor.
    -lgc  --lock-gpu-clocks=    Specifies <minGpuClock,maxGpuClock> clocks as a
                                pair (e.g. 1500,1500) that defines the range
                                of desired locked GPU clock speed in MHz.
                                Setting this will supercede application clocks
                                and take effect regardless if an app is running.
                                Input can also be a singular desired clock value
                                (e.g. <GpuClockValue>).
    -rgc  --reset-gpu-clocks
                                Resets the Gpu clocks to the default values.
    -ac   --applications-clocks= Specifies <memory,graphics> clocks as a
                                pair (e.g. 2000,800) that defines GPU's
                                speed in MHz while running applications on a GPU.
    -rac  --reset-applications-clocks
                                Resets the applications clocks to the default values.
    -acp  --applications-clocks-permission=
                                Toggles permission requirements for -ac and -rac commands:
                                0/UNRESTRICTED, 1/RESTRICTED
    -pl   --power-limit=        Specifies maximum power management limit in watts.
    -cc   --cuda-clocks=        Overrides or restores default CUDA clocks.
                                In override mode, GPU clocks higher frequencies when running CUDA applications.
                                Only on supported devices starting from the Volta series.
                                Requires administrator privileges.
                                0/RESTORE_DEFAULT, 1/OVERRIDE
    -am   --accounting-mode=    Enable or disable Accounting Mode: 0/DISABLED, 1/ENABLED
    -caa  --clear-accounted-apps
                                Clears all the accounted PIDs in the buffer.
          --auto-boost-default= Set the default auto boost policy to 0/DISABLED
                                or 1/ENABLED, enforcing the change only after the
                                last boost client has exited.
          --auto-boost-permission=
                                Allow non-admin/root control over auto boost mode:
                                0/UNRESTRICTED, 1/RESTRICTED
    -mig  --multi-instance-gpu= Enable or disable Multi Instance GPU: 0/DISABLED, 1/ENABLED
                                Requires root.
    [plus optional]
    -i,   --id=                 Target a specific GPU.
    -eow, --error-on-warning    Return a non-zero error for warnings.

  UNIT MODIFICATION OPTIONS:
    -t,   --toggle-led=         Set Unit LED state: 0/GREEN, 1/AMBER
    [plus optional]
    -i,   --id=                 Target a specific Unit.

  SHOW DTD OPTIONS:
          --dtd                 Print device DTD and exit.
    [plus optional]
    -f,   --filename=           Log to a specified file, rather than to stdout.
    -u,   --unit                Show unit, rather than device, DTD.

    --debug=                    Log encrypted debug information to a specified file.

  STATISTICS: (EXPERIMENTAL)
    stats                       Displays device statistics. "nvidia-smi stats -h" for more information.

  Device Monitoring:
    dmon                        Displays device stats in scrolling format.
                                "nvidia-smi dmon -h" for more information.
    daemon                      Runs in background and monitor devices as a daemon process.
                                This is an experimental feature. Not supported on Windows baremetal
                                "nvidia-smi daemon -h" for more information.
    replay                      Used to replay/extract the persistent stats generated by daemon.
                                This is an experimental feature.
                                "nvidia-smi replay -h" for more information.

  Process Monitoring:
    pmon                        Displays process stats in scrolling format.
                                "nvidia-smi pmon -h" for more information.

  TOPOLOGY:
    topo                        Displays device/system topology. "nvidia-smi topo -h" for more information.

  DRAIN STATES:
    drain                       Displays/modifies GPU drain states for power idling. "nvidia-smi drain -h" for more information.

  NVLINK:
    nvlink                      Displays device nvlink information. "nvidia-smi nvlink -h" for more information.

  CLOCKS:
    clocks                      Control and query clock information. "nvidia-smi clocks -h" for more information.

  ENCODER SESSIONS:
    encodersessions             Displays device encoder sessions information. "nvidia-smi encodersessions -h" for more information.

  FBC SESSIONS:
    fbcsessions                 Displays device FBC sessions information. "nvidia-smi fbcsessions -h" for more information.

  GRID vGPU:
    vgpu                        Displays vGPU information. "nvidia-smi vgpu -h" for more information.

  MIG:
    mig                         Provides controls for MIG management. "nvidia-smi mig -h" for more information.

Please see the nvidia-smi(1) manual page for more detailed information.
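The SELECTIVE QUERY OPTIONS above also cover processes. The Python sketch below is a hypothetical helper, not something shipped with nvidia-smi: it totals per-GPU process memory from --query-compute-apps. The field names gpu_bus_id, pid, process_name, and used_memory are assumptions to confirm with nvidia-smi --help-query-compute-apps on your driver.

```python
import csv
import io
import subprocess
from collections import defaultdict

# Assumed field names; confirm with `nvidia-smi --help-query-compute-apps`.
FIELDS = ["gpu_bus_id", "pid", "process_name", "used_memory"]


def build_apps_cmd():
    """argv listing compute processes as CSV without header or units."""
    return ["nvidia-smi", "--query-compute-apps=" + ",".join(FIELDS),
            "--format=csv,noheader,nounits"]


def memory_by_gpu(text):
    """Sum used_memory (MiB, since `nounits` drops the unit) per GPU bus ID."""
    totals = defaultdict(int)
    for record in csv.reader(io.StringIO(text)):
        if len(record) != len(FIELDS):
            continue  # blank or malformed line
        bus_id, _pid, _name, used = (v.strip() for v in record)
        if used.isdigit():  # skip placeholders such as "[N/A]"
            totals[bus_id] += int(used)
    return dict(totals)


def run():
    """Needs an NVIDIA driver; not called when this file is imported."""
    out = subprocess.run(build_apps_cmd(), capture_output=True,
                         text=True, check=True).stdout
    return memory_by_gpu(out)
```

Keying on the bus ID rather than the GPU index avoids confusion when CUDA and nvidia-smi enumerate devices in different orders.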

(3) nvidia-smi -L

Run nvidia-smi -L to list all GPUs and their UUIDs. On GPUs with MIG enabled, the MIG devices are listed under their parent GPU:

username@hostname:$ nvidia-smi -L

GPU 0: A100-SXM4-40GB (UUID: GPU-9f2df045-8650-7b2e-d442-cc0d7ba0150d)
GPU 1: A100-SXM4-40GB (UUID: GPU-ad117600-5d0e-557d-c81d-7c4d60555eaa)
GPU 2: A100-SXM4-40GB (UUID: GPU-087ca21d-0c14-66d0-3869-59ff65a48d58)
GPU 3: A100-SXM4-40GB (UUID: GPU-eb9943a0-d78e-4220-fdb2-e51f2b490064)
GPU 4: A100-SXM4-40GB (UUID: GPU-8302a1f7-a8be-753f-eb71-6f60c5de1703)
GPU 5: A100-SXM4-40GB (UUID: GPU-ee1be011-0d98-3d6f-8c89-b55c28966c63)
GPU 6: A100-SXM4-40GB (UUID: GPU-8b3c7f5b-3fb4-22c3-69c9-4dd6a9f31586)
  MIG 3g.20gb Device 0: (UUID: MIG-GPU-8b3c7f5b-3fb4-22c3-69c9-4dd6a9f31586/1/0)
  MIG 3g.20gb Device 1: (UUID: MIG-GPU-8b3c7f5b-3fb4-22c3-69c9-4dd6a9f31586/2/0)
GPU 7: A100-SXM4-40GB (UUID: GPU-fab40b5f-c286-603f-8909-cdb73e5ffd6c)
  MIG 3g.20gb Device 0: (UUID: MIG-GPU-fab40b5f-c286-603f-8909-cdb73e5ffd6c/1/0)
  MIG 3g.20gb Device 1: (UUID: MIG-GPU-fab40b5f-c286-603f-8909-cdb73e5ffd6c/2/0)

(4) nvidia-smi -q

Run nvidia-smi -q to print detailed information about every GPU. To show a single GPU, select it with the -i option:

username@hostname:$ nvidia-smi -q -i 0

==============NVSMI LOG==============

Timestamp : Mon Dec 5 17:31:45 2022
Driver Version : 450.172.01
CUDA Version : 11.0

Attached GPUs : 8
GPU 00000000:26:00.0
    Product Name : A100-SXM4-40GB
    Product Brand : Tesla
    Display Mode : Enabled
    Display Active : Disabled
    Persistence Mode : Enabled
    MIG Mode
        Current : Disabled
        Pending : Disabled
    Accounting Mode : Disabled
    Accounting Mode Buffer Size : 4000
    Driver Model
        Current : N/A
        Pending : N/A
    Serial Number : 1564720004631
    GPU UUID : GPU-9f2df045-8650-7b2e-d442-cc0d7ba0150d
    Minor Number : 2
    VBIOS Version : 92.00.19.00.10
    MultiGPU Board : No
    Board ID : 0x2600
    GPU Part Number : 692-2G506-0200-002
    Inforom Version
        Image Version : G506.0200.00.04
        OEM Object : 2.0
        ECC Object : 6.16
        Power Management Object : N/A
    GPU Operation Mode
        Current : N/A
        Pending : N/A
    GPU Virtualization Mode
        Virtualization Mode : None
        Host VGPU Mode : N/A
    IBMNPU
        Relaxed Ordering Mode : N/A
    PCI
        Bus : 0x26
        Device : 0x00
        Domain : 0x0000
        Device Id : 0x20B010DE
        Bus Id : 00000000:26:00.0
        Sub System Id : 0x134F10DE
        GPU Link Info
            PCIe Generation
                Max : 4
                Current : 4
            Link Width
                Max : 16x
                Current : 16x
        Bridge Chip
            Type : N/A
            Firmware : N/A
        Replays Since Reset : 0
        Replay Number Rollovers : 0
        Tx Throughput : 0 KB/s
        Rx Throughput : 0 KB/s
    Fan Speed : N/A
    Performance State : P0
    Clocks Throttle Reasons
        Idle : Active
        Applications Clocks Setting : Not Active
        SW Power Cap : Not Active
        HW Slowdown : Not Active
            HW Thermal Slowdown : Not Active
            HW Power Brake Slowdown : Not Active
        Sync Boost : Not Active
        SW Thermal Slowdown : Not Active
        Display Clock Setting : Not Active
    FB Memory Usage
        Total : 40537 MiB
        Used : 0 MiB
        Free : 40537 MiB
    BAR1 Memory Usage
        Total : 65536 MiB
        Used : 29 MiB
        Free : 65507 MiB
    Compute Mode : Default
    Utilization
        Gpu : 0 %
        Memory : 0 %
        Encoder : 0 %
        Decoder : 0 %
    Encoder Stats
        Active Sessions : 0
        Average FPS : 0
        Average Latency : 0
    FBC Stats
        Active Sessions : 0
        Average FPS : 0
        Average Latency : 0
    Ecc Mode
        Current : Enabled
        Pending : Enabled
    ECC Errors
        Volatile
            SRAM Correctable : 0
            SRAM Uncorrectable : 0
            DRAM Correctable : 0
            DRAM Uncorrectable : 0
        Aggregate
            SRAM Correctable : 0
            SRAM Uncorrectable : 0
            DRAM Correctable : 0
            DRAM Uncorrectable : 0
    Retired Pages
        Single Bit ECC : N/A
        Double Bit ECC : N/A
        Pending Page Blacklist : N/A
    Remapped Rows
        Correctable Error : 0
        Uncorrectable Error : 0
        Pending : No
        Remapping Failure Occurred : No
        Bank Remap Availability Histogram
            Max : 640 bank(s)
            High : 0 bank(s)
            Partial : 0 bank(s)
            Low : 0 bank(s)
            None : 0 bank(s)
    Temperature
        GPU Current Temp : 26 C
        GPU Shutdown Temp : 92 C
        GPU Slowdown Temp : 89 C
        GPU Max Operating Temp : 85 C
        Memory Current Temp : 38 C
        Memory Max Operating Temp : 95 C
    Power Readings
        Power Management : Supported
        Power Draw : 55.79 W
        Power Limit : 400.00 W
        Default Power Limit : 400.00 W
        Enforced Power Limit : 400.00 W
        Min Power Limit : 100.00 W
        Max Power Limit : 400.00 W
    Clocks
        Graphics : 210 MHz
        SM : 210 MHz
        Memory : 1215 MHz
        Video : 585 MHz
    Applications Clocks
        Graphics : 1095 MHz
        Memory : 1215 MHz
    Default Applications Clocks
        Graphics : 1095 MHz
        Memory : 1215 MHz
    Max Clocks
        Graphics : 1410 MHz
        SM : 1410 MHz
        Memory : 1215 MHz
        Video : 1290 MHz
    Max Customer Boost Clocks
        Graphics : 1410 MHz
    Clock Policy
        Auto Boost : N/A
        Auto Boost Default : N/A
    Processes : None
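The -q report is indented "Key : Value" text, so it can be turned into nested dictionaries. A minimal Python sketch, assuming (as in the output above) that a section is a line without " : " whose children are indented deeper, and that every leaf line contains " : ". Combining it with the -d option from the help text, for example nvidia-smi -q -i 0 -d TEMPERATURE, keeps the input small.

```python
def parse_query_text(text):
    """Parse `nvidia-smi -q` text into nested dicts.

    Lines without " : " open a section; "Key : Value" lines are leaves.
    """
    root = {}
    stack = [(-1, root)]  # (indentation, dict) pairs; root is never popped
    for raw in text.splitlines():
        stripped = raw.strip()
        if not stripped or stripped.startswith("="):
            continue  # blank lines and the "====NVSMI LOG====" banner
        indent = len(raw) - len(raw.lstrip(" "))
        while indent <= stack[-1][0]:
            stack.pop()  # leave sections that are not ancestors of this line
        parent = stack[-1][1]
        if " : " in stripped:
            key, value = stripped.split(" : ", 1)  # values may contain ':'
            parent[key.strip()] = value.strip()
        else:
            section = {}
            parent[stripped] = section
            stack.append((indent, section))
    return root
```

Splitting only on the first " : " keeps values such as the timestamp intact, and stripping the key also handles real output, where keys are padded with spaces so that the colons line up.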

(5) nvidia-smi -l [second]

nvidia-smi -l [second] refreshes the display every [second] seconds. A 1-second interval is a common choice for monitoring GPU utilization, for example:

nvidia-smi -l 1
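-l also works together with the selective query options (the help text lists -l and -lms under SELECTIVE QUERY OPTIONS), which is handy for logging utilization from a script. A sketch in Python; monitor_cmd and iter_samples are hypothetical helper names, and the default field list is an illustrative choice.

```python
import csv

DEFAULT_FIELDS = ("index", "utilization.gpu", "memory.used")


def monitor_cmd(interval_s, fields=DEFAULT_FIELDS):
    """argv that prints one CSV block per interval until the process is stopped.

    -l takes whole seconds; fractional intervals go to -lms in milliseconds.
    """
    base = ["nvidia-smi", "--query-gpu=" + ",".join(fields),
            "--format=csv,noheader,nounits"]
    if float(interval_s).is_integer():
        return base + ["-l", str(int(interval_s))]
    return base + ["-lms", str(round(interval_s * 1000))]


def iter_samples(lines, fields=DEFAULT_FIELDS):
    """Yield one dict per non-blank CSV line, e.g. from a Popen stdout stream."""
    for record in csv.reader(lines):
        if record:
            yield {f: v.strip() for f, v in zip(fields, record)}
```

For example, start proc = subprocess.Popen(monitor_cmd(1), stdout=subprocess.PIPE, text=True), loop over iter_samples(proc.stdout), and stop it with proc.terminate(), the scripted counterpart of pressing Ctrl+C.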

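(6) nvidia-smi -pm

On Linux, you can put GPUs into persistence mode, which keeps the NVIDIA driver loaded even when no application is accessing the cards. This is particularly useful when running a series of short jobs. Persistence mode draws more power on each idle GPU, but it avoids the considerable delay that otherwise occurs every time a GPU application starts. It is also required if you have assigned specific clock speeds or power limits to the GPUs, because those changes are lost when the NVIDIA driver is unloaded. Enable persistence mode on all GPUs with the following command (root privileges are required):

sudo nvidia-smi -pm 1

You can also enable persistence mode on a single GPU:

sudo nvidia-smi -pm 1 -i 0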
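Before a batch of short jobs, a script can check which GPUs still have persistence mode off and build the matching per-GPU commands. A Python sketch, assuming its input is the output of nvidia-smi --query-gpu=index,persistence_mode --format=csv,noheader; the helper names are hypothetical.

```python
import csv
import io


def gpus_without_persistence(text):
    """GPU indices whose persistence_mode is not "Enabled".

    `text` is assumed to be the output of
    nvidia-smi --query-gpu=index,persistence_mode --format=csv,noheader
    """
    missing = []
    for record in csv.reader(io.StringIO(text)):
        if len(record) == 2 and record[1].strip() != "Enabled":
            missing.append(int(record[0]))
    return missing


def enable_cmds(indices):
    """One root command per GPU, mirroring `sudo nvidia-smi -pm 1 -i N`."""
    return [["sudo", "nvidia-smi", "-pm", "1", "-i", str(i)] for i in indices]
```

Returning argv lists instead of running them keeps the check side-effect free; the caller decides whether to execute the commands with subprocess.run.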

(7) nvidia-smi dmon

Monitors overall GPU usage, refreshing every second.

(8) nvidia-smi pmon

Monitors per-process GPU usage, refreshing every second.
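dmon and pmon print '#'-prefixed header lines followed by whitespace-separated columns. The Python parser below assumes that each header block is two '#' lines (column names, then units) and that '-' marks a value that is not available; the exact columns vary with GPU model and driver version, so it reads the names from the header instead of hard-coding them.

```python
def parse_scrolling(lines):
    """Parse `nvidia-smi dmon` / `pmon` style output into a list of dicts.

    Assumes each header block is two '#' lines (column names, then units)
    and that '-' marks a value that is not available.
    """
    columns, rows, prev_was_header = None, [], False
    for line in lines:
        line = line.strip()
        if not line:
            continue
        if line.startswith("#"):
            if not prev_was_header:  # first '#' line of a block: column names
                columns = line.lstrip("#").split()
            prev_was_header = True
            continue
        prev_was_header = False
        if columns is None:
            continue  # data before any header; nothing to label it with
        # maxsplit keeps spaces inside the last column (e.g. a command name)
        values = line.split(None, len(columns) - 1)
        rows.append({c: (None if v == "-" else v) for c, v in zip(columns, values)})
    return rows
```

Re-reading the column names at every header block means the parser keeps working if the header is printed again partway through a long-running session.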

(9)…

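4. Commands supported only by server GPUs

(1) Viewing the system/GPU topology and NVLink with nvidia-smi

To make proper use of the more advanced NVIDIA GPU features (such as GPUDirect), the system topology must be configured correctly. Topology refers to how the various system devices (GPUs, InfiniBand HCAs, storage controllers, and so on) are connected to each other and to the system's CPUs. Some topology types reduce performance or even make certain features unavailable. To help diagnose such problems, nvidia-smi supports topology and connectivity queries:

nvidia-smi topo --matrix # show the system/GPU topology

nvidia-smi nvlink --status # query the status of each NVLink connection

nvidia-smi nvlink --capabilities # query the capabilities of each NVLink connection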
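In nvidia-smi topo --matrix output, each cell describes how two devices are connected: X means self, NV# means a bonded set of # NVLinks, and codes such as SYS, NODE, PHB, PXB, and PIX denote PCIe/CPU paths. A Python sketch that extracts the NVLink-connected GPU pairs, assuming the header row starts with the GPU column names and any affinity or NIC columns come after them.

```python
def nvlink_pairs(matrix_text):
    """Return (gpu_a, gpu_b, link) for each GPU pair whose cell starts with "NV".

    Assumes the header row begins with GPU column names (GPU0, GPU1, ...)
    followed by other columns such as "CPU Affinity".
    """
    lines = [l for l in matrix_text.splitlines() if l.strip()]
    gpus = []
    for tok in lines[0].split():
        if not tok.startswith("GPU"):
            break  # first non-GPU column ends the device list
        gpus.append(tok)
    pairs = []
    for line in lines[1:]:
        tokens = line.split()
        if tokens[0] not in gpus:
            continue  # legend lines, NIC rows, etc.
        i = gpus.index(tokens[0])
        for j, cell in enumerate(tokens[1:1 + len(gpus)]):
            if j > i and cell.startswith("NV"):  # upper triangle: each pair once
                pairs.append((tokens[0], gpus[j], cell))
    return pairs
```

Only the upper triangle of the matrix is read because the matrix is symmetric, so every pair is reported once.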

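(2) GPU partitioning (MIG)

For details, see my post on NVIDIA's server GPU Multi-Instance GPU (MIG) technology.

Enabling MIG

MIG is normally disabled by default and has to be turned on manually. You can check whether it is enabled with nvidia-smi; for more on that command, see my earlier post, "nvidia-smi explained, with some advanced tips".

The figure above shows the output with MIG disabled, and the figure below shows it with MIG enabled.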

If MIG is not enabled, turn it on with:

sudo nvidia-smi -i [GPU ID] -mig 1

This enables MIG on one specific GPU. For example, to enable MIG on the first GPU:

sudo nvidia-smi -i 0 -mig 1

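In this particular DGX example, the nvsm and dcgm services must be stopped, MIG mode enabled on the desired GPU, and the monitoring services then restored:

sudo systemctl stop nvsm

sudo systemctl stop dcgm

sudo nvidia-smi -i 0 -mig 1

sudo systemctl start nvsm

sudo systemctl start dcgm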
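After running -mig 1, the requested mode can show up only as pending until the GPU is reset (the -q output earlier in this article prints both MIG Mode Current and Pending). A Python sketch that flags such GPUs, assuming the mig.mode.current and mig.mode.pending query fields are available on your driver (check nvidia-smi --help-query-gpu); the helper names are hypothetical.

```python
import csv
import io


def mig_modes(text):
    """Map GPU index -> (current, pending) MIG mode.

    `text` is assumed to be the output of
    nvidia-smi --query-gpu=index,mig.mode.current,mig.mode.pending --format=csv,noheader
    """
    modes = {}
    for record in csv.reader(io.StringIO(text)):
        if len(record) == 3:
            idx, current, pending = (v.strip() for v in record)
            modes[int(idx)] = (current, pending)
    return modes


def pending_change(modes):
    """GPUs whose requested (pending) MIG mode is not active yet."""
    return sorted(i for i, (cur, pend) in modes.items() if cur != pend)
```

GPUs without MIG support report the same placeholder for both fields, so they are not flagged.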

nvidia-smi mig -lgipp shows the possible placements of each GPU instance profile on the MIG-enabled GPUs, that is, the ways those GPUs can be partitioned into instances:

username@hostname:~$ sudo nvidia-smi mig -lgipp

GPU 6 Profile ID 19 Placements: {0,1,2,3,4,5,6}:1
GPU 6 Profile ID 14 Placements: {0,2,4}:2
GPU 6 Profile ID 9 Placements: {0,4}:4
GPU 6 Profile ID 5 Placement : {0}:4
GPU 6 Profile ID 0 Placement : {0}:8
GPU 7 Profile ID 19 Placements: {0,1,2,3,4,5,6}:1
GPU 7 Profile ID 14 Placements: {0,2,4}:2
GPU 7 Profile ID 9 Placements: {0,4}:4
GPU 7 Profile ID 5 Placement : {0}:4
GPU 7 Profile ID 0 Placement : {0}:8
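My reading of the -lgipp format is {valid start slots}:number of slots one instance occupies, which is consistent with the Total counts in the -lgip table that follows (7, 3, 2, 1, 1). Under that assumption, a greedy pass over the start slots gives the maximum number of instances of a single profile. A Python sketch:

```python
import re

# Matches both "Placements:" and "Placement :" as printed above.
LINE_RE = re.compile(
    r"GPU\s+(\d+)\s+Profile ID\s+(\d+)\s+Placements?\s*:\s*\{([\d,]+)\}:(\d+)")


def parse_placements(text):
    """{(gpu, profile_id): (start_slots, size)} from `nvidia-smi mig -lgipp`."""
    result = {}
    for m in LINE_RE.finditer(text):
        starts = [int(s) for s in m.group(3).split(",")]
        result[(int(m.group(1)), int(m.group(2)))] = (starts, int(m.group(4)))
    return result


def max_instances(starts, size):
    """Greedy count of non-overlapping placements [start, start + size)."""
    count, free_from = 0, 0
    for s in sorted(starts):
        if s >= free_from:
            count += 1
            free_from = s + size
    return count
```

Because all placements of one profile have the same size, taking the earliest start that does not overlap the previous one is optimal, so the greedy count is the true maximum for that profile on an empty GPU.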

nvidia-smi mig -lgip lists the instance profiles supported by each MIG-enabled GPU. There are five types: 1g.5gb (up to 7 instances of 4.75 GiB), 2g.10gb (up to 3 instances of 9.75 GiB), 3g.20gb (up to 2 instances of 19.62 GiB), 4g.20gb (1 instance of 19.62 GiB), and 7g.40gb (1 instance of 39.50 GiB).

username@hostname:~$ sudo nvidia-smi mig -lgip

+-----------------------------------------------------------------------------+
| GPU instance profiles:                                                      |
| GPU   Name          ID    Instances   Memory     P2P    SM    DEC   ENC     |
|                           Free/Total   GiB              CE    JPEG  OFA     |
|=============================================================================|
|   6   MIG 1g.5gb    19       0/7      4.75       No     14      0     0     |
|                                                          1      0     0     |
+-----------------------------------------------------------------------------+
|   6   MIG 2g.10gb   14       0/3      9.75       No     28      1     0     |
|                                                          2      0     0     |
+-----------------------------------------------------------------------------+
|   6   MIG 3g.20gb    9       0/2      19.62      No     42      2     0     |
|                                                          3      0     0     |
+-----------------------------------------------------------------------------+
|   6   MIG 4g.20gb    5       0/1      19.62      No     56      2     0     |
|                                                          4      0     0     |
+-----------------------------------------------------------------------------+
|   6   MIG 7g.40gb    0       0/1      39.50      No     98      5     0     |
|                                                          7      1     1     |
+-----------------------------------------------------------------------------+
|   7   MIG 1g.5gb    19       0/7      4.75       No     14      0     0     |
|                                                          1      0     0     |
+-----------------------------------------------------------------------------+
|   7   MIG 2g.10gb   14       0/3      9.75       No     28      1     0     |
|                                                          2      0     0     |
+-----------------------------------------------------------------------------+
|   7   MIG 3g.20gb    9       0/2      19.62      No     42      2     0     |
|                                                          3      0     0     |
+-----------------------------------------------------------------------------+
|   7   MIG 4g.20gb    5       0/1      19.62      No     56      2     0     |
|                                                          4      0     0     |
+-----------------------------------------------------------------------------+
|   7   MIG 7g.40gb    0       0/1      39.50      No     98      5     0     |
|                                                          7      1     1     |
+-----------------------------------------------------------------------------+

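Creating MIG instances

Create MIG instances with:

sudo nvidia-smi mig -cgi [profile],[profile],... -C

Each [profile] is a GPU instance profile, given either by its ID (the ID column of sudo nvidia-smi mig -lgip) or by its name (the Name column); ID 9 and 3g.20gb, for example, refer to the same profile. Each entry in the comma-separated list creates one GPU instance, and -C also creates a compute instance inside each new GPU instance.

For example, the following command creates two instances of profile 9 (3g.20gb), i.e. two GPU instances with 19.62 GiB of memory each:

sudo nvidia-smi mig -cgi 9,3g.20gb -C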
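Since an A100-40GB has 7 compute slices and 8 memory slices, a requested list of profiles can be sanity-checked before calling -cgi. A Python sketch under these assumptions: compute slices are read from the Ng prefix of each profile name, memory slices from the -lgipp placement sizes above, and the name/ID table is copied from this article's -lgip output, so it applies only to this GPU model. Passing the check does not guarantee that nvidia-smi will accept the layout.

```python
# Per-profile costs for the A100-40GB in this article (illustrative, not an API):
# name -> (profile ID from -lgip, compute slices from the "Ng" prefix,
#          memory slices from the -lgipp placement size)
PROFILES = {
    "1g.5gb": (19, 1, 1),
    "2g.10gb": (14, 2, 2),
    "3g.20gb": (9, 3, 4),
    "4g.20gb": (5, 4, 4),
    "7g.40gb": (0, 7, 8),
}
ID_TO_NAME = {pid: name for name, (pid, _, _) in PROFILES.items()}
COMPUTE_SLICES, MEMORY_SLICES = 7, 8


def normalize(profile):
    """Accept a profile ID (9 or "9") or a name ("3g.20gb"); return the name."""
    text = str(profile)
    return ID_TO_NAME[int(text)] if text.isdigit() else text


def cgi_command(profiles):
    """Build `nvidia-smi mig -cgi ... -C` after a coarse slice-budget check.

    The check is necessary but not sufficient: placement rules can still
    make nvidia-smi reject a combination that fits the budget.
    """
    names = [normalize(p) for p in profiles]
    compute = sum(PROFILES[n][1] for n in names)
    memory = sum(PROFILES[n][2] for n in names)
    if compute > COMPUTE_SLICES or memory > MEMORY_SLICES:
        raise ValueError(f"needs {compute}/{COMPUTE_SLICES} compute and "
                         f"{memory}/{MEMORY_SLICES} memory slices")
    return ["sudo", "nvidia-smi", "mig", "-cgi",
            ",".join(str(p) for p in profiles), "-C"]
```

The profiles are passed through unchanged, so IDs and names can be mixed exactly as in the 9,3g.20gb example.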

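5. Other handy tools

5.1 gpustat

Install it with:

pip install gpustat

Check GPU status with:

gpustat # default view, color-coded

watch -n 1 -c gpustat --color # refresh every second, showing only the current state

gpustat -i # keep refreshing the display (interval mode)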
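gpustat can also emit JSON with gpustat --json, which is convenient for picking an idle GPU in a script. A Python sketch; the key names (gpus, index, utilization.gpu, memory.used) are assumed from gpustat's JSON output, and the thresholds are arbitrary examples.

```python
import json


def idle_gpus(gpustat_json, max_util=5, max_mem_mib=500):
    """Indices of GPUs that look idle in `gpustat --json` output.

    Assumed keys: top-level "gpus", and per GPU "index", "utilization.gpu"
    (percent) and "memory.used" (MiB). Thresholds are arbitrary examples.
    """
    data = json.loads(gpustat_json)
    idle = []
    for gpu in data.get("gpus", []):
        util = gpu.get("utilization.gpu") or 0  # treat missing/None as 0 (an assumption)
        mem = gpu.get("memory.used") or 0
        if util <= max_util and mem <= max_mem_mib:
            idle.append(gpu["index"])
    return idle
```

Usage might look like idle_gpus(subprocess.run(["gpustat", "--json"], capture_output=True, text=True).stdout), with the first returned index passed to CUDA_VISIBLE_DEVICES.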

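5.2 nvitop

Install it with:

pip install nvitop

It has three display modes:

- auto (default)

- compact

- full

Check GPU status with:

nvitop # default view

nvitop -m full # show all information

Suggestions for other useful commands are welcome in the comments.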

References

[1] nvidia-smi explained, https://blog.csdn.net/kunhe0512/article/details/126265050

[2] A summary of common nvidia-smi options, https://raelum.blog.csdn.net/article/details/126914188

[3] The nvidia-smi command explained, https://blog.csdn.net/m0_60721514/article/details/125241141

[4] NVIDIA Multi-Instance GPU User Guide, https://docs.nvidia.com/datacenter/tesla/mig-user-guide/index.html

[5] [Tools] How to monitor GPU usage elegantly?, https://blog.csdn.net/qq_16763983/article/details/126994939