接触强化学习大概有半年了,也了解了一些算法,一些简单的算法在gym框架也实现了,那么结合仿真平台Carla该怎么用呢?由于比较熟悉gym框架,就偷个懒先从这个开始写代码。

项目地址:https://github.com/cjy1992/gym-carla

文章目录

- 一、环境配置

- 1.1 基本配置

- 1.2 配置工作环境

- 1.3 运行测试

- 二、环境解读

- 2.1 test.py--超参数设置

- 2.2 环境介绍

- 2.2.1 动作空间

- 2.2.2 状态空间

- 2.2.3 test.py主函数

- 2.3.4 Q-learning

- 2.3.5 实验结果

一、环境配置

1.1 基本配置

- Ubuntu 16.04(作者在Ubuntu 20.04测试成功)

- Anaconda

- Carla 0.96

1.2 配置工作环境

- 建立pyhon3.6的环境

$ conda create -n py36 python=3.6

- git代码

$ git clone https://github.com/cjy1992/gym-carla.git

- 进入该路径,

cd XXX

$ pip install -r requiremtns.txt

$ pip install -e .

-

下载

Carla0.9.6版本https://github.com/carla-simulator/carla/releases/tag/0.9.6

-

添加环境变量(否则会出现

No module named 'carla')-

方法一:

$ conda activate py36 $ export PYTHONPATH=$PYTHONPATH:/home/shy/CARLA_0.9.6/PythonAPI/carla/dist/carla-0.9.6-py3.5-linux-x86_64.egg -

方法二:在环境变量配置文件中添加上述环境变量

-

方法三:安装

easy_install后,easy_install XXX.egg即可 -

方法四:在主函数代码里添加这句即可

import sys try:sys.path.append('/home/shy/CARLA_0.9.6/PythonAPI/carla/dist/carla-0.9.6-py3.5-linux-x86_64.egg')方法五:

https://github.com/carla-simulator/carla/issues/1466

-

1.3 运行测试

- 打开CARLA的目录,右键终端启动Carla

$ ./CarlaUE4.sh -windowed -carla-port=2000

也可以启动无界面模式(提高运行速度)

$ DISPLAY= ./CarlaUE4.sh -opengl -carla-port=2000

- 打开该项目的目录,右键终端输入

python test.py

二、环境解读

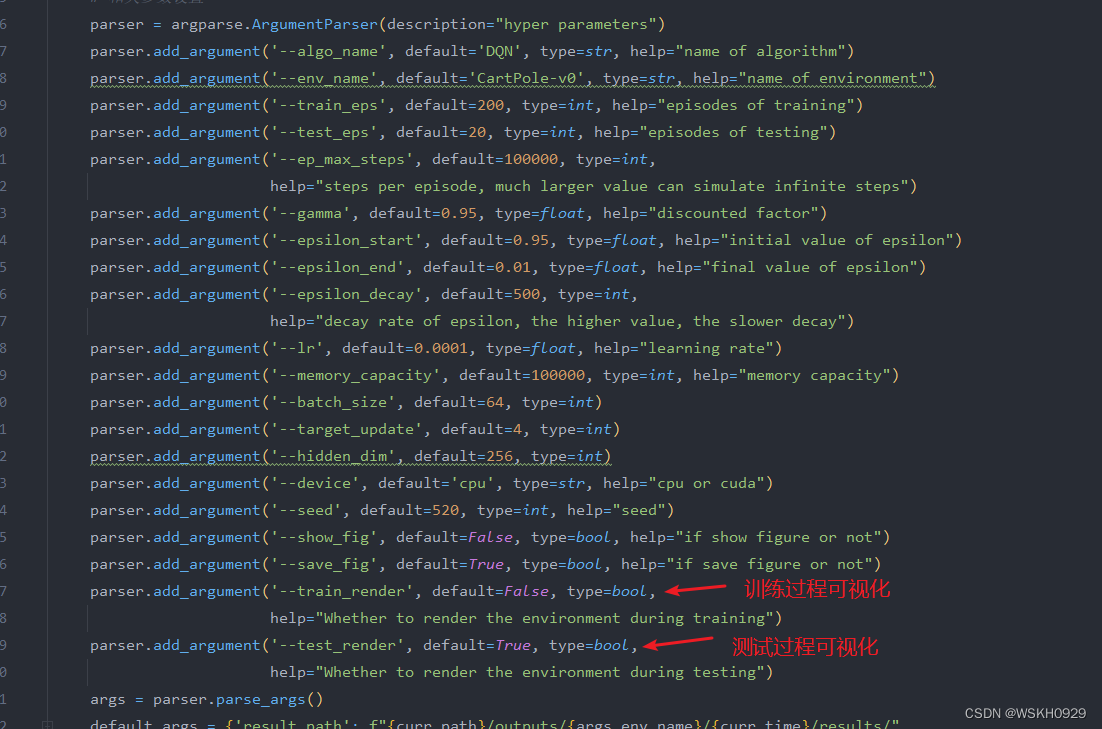

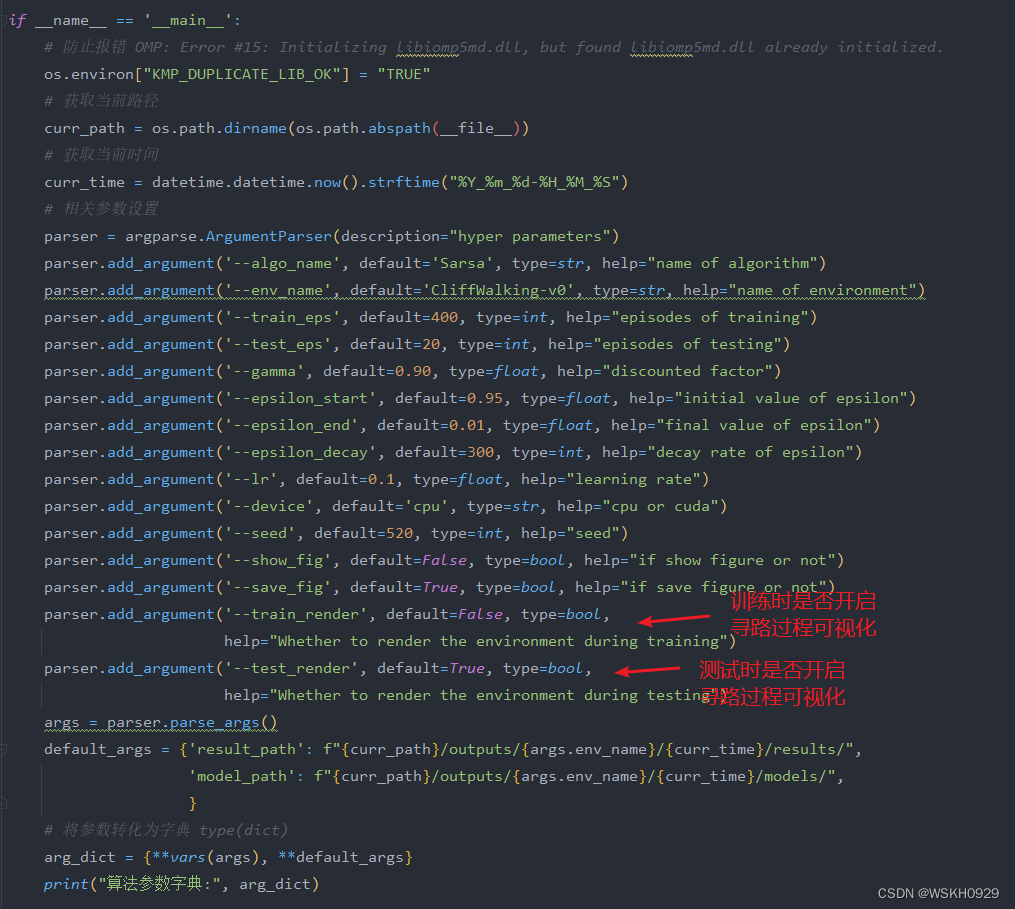

2.1 test.py–超参数设置

#!/usr/bin/env python# Copyright (c) 2019: Jianyu Chen (jianyuchen@berkeley.edu).

#

# This work is licensed under the terms of the MIT license.

# For a copy, see <https://opensource.org/licenses/MIT>.import gym

import sys

try:sys.path.append('/home/shy/CARLA_0.9.6/PythonAPI/carla/dist/carla-0.9.6-py3.5-linux-x86_64.egg') # 手动添加环境变量

except IndexError:pass

import gym_carla

import carladef main():# parameters for the gym_carla environmentparams = {'number_of_vehicles': 100,'number_of_walkers': 0,'display_size': 512, # screen size of bird-eye render'max_past_step': 1, # the number of past steps to draw'dt': 0.1, # time interval between two frames'discrete': False, # whether to use discrete control space'discrete_acc': [-3.0, 0.0, 3.0], # discrete value of accelerations'discrete_steer': [-0.2, 0.0, 0.2], # discrete value of steering angles'continuous_accel_range': [-3.0, 3.0], # continuous acceleration range'continuous_steer_range': [-0.3, 0.3], # continuous steering angle range'ego_vehicle_filter': 'vehicle.lincoln*', # filter for defining ego vehicle'port': 2000, # connection port'town': 'Town03', # which town to simulate'task_mode': 'random', # mode of the task, [random, roundabout (only for Town03)]'max_time_episode': 1000, # maximum timesteps per episode'max_waypt': 12, # maximum number of waypoints'obs_range': 32, # observation range (meter)'lidar_bin': 0.125, # bin size of lidar sensor (meter)'d_behind': 12, # distance behind the ego vehicle (meter)'out_lane_thres': 2.0, # threshold for out of lane'desired_speed': 30, # desired speed (m/s)'max_ego_spawn_times': 200, # maximum times to spawn ego vehicle'display_route': True, # whether to render the desired route'pixor_size': 64, # size of the pixor labels'pixor': False, # whether to output PIXOR observation}2.2 环境介绍

2.2.1 动作空间

包括两个动作,分别是油门和方向,并且有离散和连续两种情况。

- 离散空间:

'discrete_acc': [-3.0, 0.0, 3.0], # discrete value of accelerations

'discrete_steer': [-0.2, 0.0, 0.2], # discrete value of steering angles

- 连续空间

'continuous_accel_range': [-3.0, 3.0], # continuous acceleration range

'continuous_steer_range': [-0.3, 0.3], # continuous steering angle range

2.2.2 状态空间

包括四个部分,分别是鸟瞰图,雷达,相机,以及车辆的当前状态。

如果输出它们的维度,则为:

# BIRDEYE shape is (256, 256, 3)

# LIDAR shape is (256, 256, 3)

# CAMERA shape is (256, 256, 3)

# STATE shape is (4,)

状态的代码如下,分别表示与车道线的横向距离,与车道线的夹角,当前速度,和前方车辆的距离。

# State observationego_trans = self.ego.get_transform()ego_x = ego_trans.location.xego_y = ego_trans.location.yego_yaw = ego_trans.rotation.yaw/180*np.pilateral_dis, w = get_preview_lane_dis(self.waypoints, ego_x, ego_y)delta_yaw = np.arcsin(np.cross(w, np.array(np.array([np.cos(ego_yaw), np.sin(ego_yaw)]))))v = self.ego.get_velocity()speed = np.sqrt(v.x**2 + v.y**2)state = np.array([lateral_dis, - delta_yaw, speed, self.vehicle_front])

2.2.3 test.py主函数

# Set gym-carla environmentenv = gym.make('carla-v0', params=params)obs = env.reset() # 重置环境while True:action = [2.0, 0.0] #此时只执行前进动作,没有转向动作obs,r,done,info = env.step(action) #状态,奖励,是否完成,info,done=Falseif done: #如果这个episode结束了,done=True,比如碰撞了压过车道线多少了等情况obs = env.reset() # 重置环境if __name__ == '__main__':main()

如果试着写一个Q-learning的话,框架是什么样的呢?显然,这里的obs应该选取obs[states],前三个状态图像适合做深度网络,比如图片输入到CNN这种,结合类似DQN的方法,第四个状态包含了4个信息,与车道线的横向距离,与车道线的夹角,当前速度,和前方车辆的距离。

为了方便写代码,就选取了一个单独的状态,做一个Q-table

2.3.4 Q-learning

- 动作空间,选取离散空间,即动作为

[acc,steer],一共9种组合。 - 进一步建立函数

action输入 0 − 8 0-8 0−8,则选择对应离散的9个动作。

'discrete_acc': [-3.0, 0.0, 3.0], # discrete value of accelerations

'discrete_steer': [-0.2, 0.0, 0.2], # discrete value of steering angles

- 状态空间,采取自身的前两个状态,即

obs[states][0],分别表示和车道线的距离。由于距离可能为负数和小数,那么就直接取整处理了,并且给一个number。 - 创建一个Q值表,维度为 9 × 9 9\times 9 9×9

#!/usr/bin/env python# Copyright (c) 2019: Jianyu Chen (jianyuchen@berkeley.edu).

#

# This work is licensed under the terms of the MIT license.

# For a copy, see <https://opensource.org/licenses/MIT>.import gym

import sys

import numpy as np

import matplotlib.pyplot as plttry:sys.path.append('/home/shy/CARLA_0.9.6/PythonAPI/carla/dist/carla-0.9.6-py3.5-linux-x86_64.egg')

except IndexError:pass

import gym_carla

import carladef select_action(action_number):if action_number == 0:real_action = [1, -0.2]elif action_number == 1:real_action = [1, 0]elif action_number == 2:real_action = [1, 0.2]elif action_number == 3:real_action = [2, -0.2]elif action_number == 4:real_action = [2, 0]elif action_number == 5:real_action = [2, 0.2]elif action_number == 6:real_action = [3.0, -0.2]elif action_number == 7:real_action = [3.0, 0]elif action_number == 8:real_action = [3.0, 0.2]return real_actiondef discrete_state(obs):distance = np.floor(obs['state'][0])if distance < -3:distance_number = 0elif distance == -3:distance_number = 1elif distance == -2:distance_number = 2elif distance == -1:distance_number = 3elif distance == 0:distance_number = 4elif distance == 1:distance_number = 5elif distance == 2:distance_number = 6elif distance == 3:distance_number = 7else:distance_number = 8return distance_numberdef main():# parameters for the gym_carla environmentparams = {'number_of_vehicles': 100,'number_of_walkers': 0,'display_size': 512, # screen size of bird-eye render'max_past_step': 1, # the number of past steps to draw'dt': 0.1, # time interval between two frames'discrete': False, # whether to use discrete control space'discrete_acc': [-3.0, 0.0, 3.0], # discrete value of accelerations'discrete_steer': [-0.2, 0.0, 0.2], # discrete value of steering angles'continuous_accel_range': [-3.0, 3.0], # continuous acceleration range'continuous_steer_range': [-0.3, 0.3], # continuous steering angle range'ego_vehicle_filter': 'vehicle.lincoln*', # filter for defining ego vehicle'port': 2000, # connection port'town': 'Town03', # which town to simulate'task_mode': 'random', # mode of the task, [random, roundabout (only for Town03)]'max_time_episode': 1000, # maximum timesteps per episode'max_waypt': 12, # maximum number of waypoints'obs_range': 32, # observation range (meter)'lidar_bin': 0.125, # bin size of lidar sensor (meter)'d_behind': 12, # distance behind the ego vehicle (meter)'out_lane_thres': 2.0, # threshold for out of lane'desired_speed': 10, # desired speed (m/s)'max_ego_spawn_times': 200, # maximum times to spawn ego vehicle'display_route': True, # whether to render the desired route'pixor_size': 64, # size of the pixor labels'pixor': False, # whether to output PIXOR observation'learning_rate': 0.1,'discount': 0.9,'epsilon': 0.8,}env = gym.make('carla-v0', params=params)env.reset()q_table = np.zeros([9, 9]) # 创建一个空的Q值表action_number = 7 # 选择初始动作为油门,不转向reward_list = []for episode in range(10000):if np.random.random() > params['epsilon']:action = select_action(action_number)else:action = select_action(np.random.randint(0, 8))print("# Episode{} start!".format(episode))print("choose_action ", action)obs, reward, done, info = env.step(action) # 根据初始动作观察环境状态,此时done=Falsereward_list.append(reward)s = discrete_state(obs)print("# the reward is", reward)print("# the state distance is", s)if not done:max_future_q = np.max(q_table[s, :])q_table[s, action_number] = (1 - params["learning_rate"]) * q_table[s, action_number] + params["learning_rate"] * (reward + params["discount"] * max_future_q)action_number = np.argmax(q_table[s, :])print("new_action number",action_number)else:env.reset()return q_table, reward_listif __name__ == '__main__':q_table, reward_list = main()print(q_table)操作后,可以选择np.save保存这个表,然后下次直接np.load这个初始表,替换np.zeros那个表即可。

2.3.5 实验结果

非常烂!奖励函数的话波动非常剧烈,不太好。

不过可以明显看出来随着episode的增加,似乎比开始的策略走的好一些。

一个环境的初始策略演示视频

有时候也会出现一个bug,意思是传感器还没有销毁。看对应的代码中reset()函数中销毁了,应该是速度太快了,还没有销毁完下一次生成就来了,可以尝试sleep(0.1),或者重新启动这个程序,也可以每次的episode设置小一些,然后用训练好的表继续读进去反复训练。

就这样,下一次尝试写一个DQN,继续熟悉环境!