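These are notes on some of the weird errors I ran into while installing AIStation.

A while back I ran a compatibility test between an RTX 3090 and a server, and never tore the test environment down afterwards. A university AI lab deployment that needs AIStation (Inspur's AI development platform) came up recently, so on a whim I decided to stand up a copy in this ready-made test environment for fun. The newest 3090 paired with the newest AIStation V3.0. Woohoo, liftoff!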

Contents

- Test environment

- Getting started

- Step 2 error

- Step 9 error

- Step 11 error

- Step 7 error

- After a successful install

Test environment

- AIStation V3.0:

- GeForce RTX 3090: feast your eyes on my precious

- NF5280M5:

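Getting started

I'll skip the hardware build. Surely nobody out there can't install RAM, CPUs, disks, a RAID card, a GPU, NICs and expansion cards, configure RAID, and install an OS... right? Right? (doge)

OK, we are now in the actual deployment phase (see the deployment manual for the exact steps), and the first error is about to hit us at step 2! After that come errors at step 9, step 11, and step 7...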

第2步报错

-

报错信息

-

报错原因

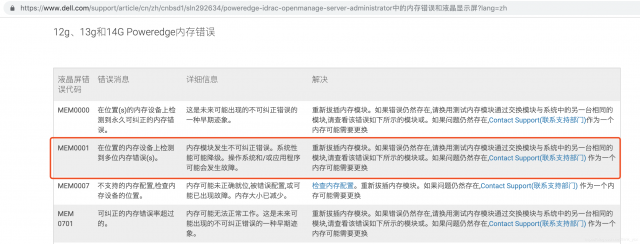

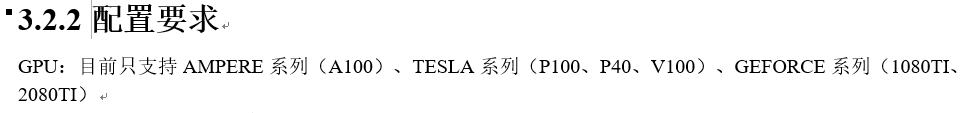

很明显能知道是安装显卡驱动报错TASK [driver : nvidia gpu driver | install driver]仔细一看配置要求,哦豁,不支持3090

-

解决方案

linux系统安装gpu驱动主要注意俩点:- kernel-devel&kernel-headers要与实际内核版本匹配,都是3.10.0-1127

- GPU驱动要和实际型号匹配
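The first of those two points can be checked mechanically before running the installer. The following is a minimal sketch, assuming an RPM-based system like the CentOS 7 node in this post; has_matching_kernel_devel is a hypothetical helper name, not something that ships with AIStation.

```shell
# Hypothetical helper (not part of AIStation): succeed only if the package
# list on stdin contains a kernel-devel package built for exactly the given
# kernel release string.
has_matching_kernel_devel() {
    # -x: match the whole line, -F: treat dots literally, -q: exit status only
    grep -qxF "kernel-devel-$1"
}

# On a real node one might run:
#   rpm -qa | has_matching_kernel_devel "$(uname -r)" && echo "kernel-devel matches"
```

Feeding the package list through stdin keeps the check independent of rpm itself, so the same function works on a saved listing.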

Checking the kernel packages and the bundled driver on the node:

    [root@node1 aistation]# cat /proc/version
    Linux version 3.10.0-1127.el7.x86_64 (mockbuild@kbuilder.bsys.centos.org) (gcc version 4.8.5 20150623 (Red Hat 4.8.5-39) (GCC) ) #1 SMP Tue Mar 31 23:36:51 UTC 2020
    [root@node1 aistation]# rpm -qa | grep kernel
    kernel-tools-3.10.0-1127.el7.x86_64
    kernel-tools-libs-3.10.0-1127.el7.x86_64
    kernel-3.10.0-1127.el7.x86_64
    kernel-headers-3.10.0-1127.19.1.el7.x86_64
    kernel-devel-3.10.0-1127.el7.x86_64

    [root@node1 ~]# cd /home/packages/gpu_driver
    [root@node1 gpu_driver]# ll
    total 402564
    -rw-r--r--. 1 root root  86253524 Jul 14  2020 datacenter-gpu-manager-1.7.2-1.x86_64.rpm
    -rw-r--r--. 1 root root   1052036 Nov  3 13:57 nvidia-fabricmanager-450-450.80.02-1.x86_64.rpm
    -rw-r--r--. 1 root root    373768 Nov  3 13:57 nvidia-fabricmanager-devel-450-450.80.02-1.x86_64.rpm
    -rwxr-xr-x. 1 root root 183481072 Jan 13 15:09 NVIDIA-Linux-x86_64-450.80.02.run

As the output shows, the kernel side checks out (kernel-devel matches the running 3.10.0-1127 kernel), whereas the driver NVIDIA's website offers for the GeForce RTX 3090 is NVIDIA-Linux-x86_64-455.45.01.run. So that is where the problem lies. The deployment document never says the 3090 is supported, but never mind! Upload the 3090 driver, then rename it to the file name the installer expects by default:

    [root@node1 gpu_driver]# ll
    total 402564
    -rw-r--r--. 1 root root  86253524 Jul 14  2020 datacenter-gpu-manager-1.7.2-1.x86_64.rpm
    -rw-r--r--. 1 root root   1052036 Nov  3 13:57 nvidia-fabricmanager-450-450.80.02-1.x86_64.rpm
    -rw-r--r--. 1 root root    373768 Nov  3 13:57 nvidia-fabricmanager-devel-450-450.80.02-1.x86_64.rpm
    -rwxr-xr-x. 1 root root 141055124 Nov  3 13:57 NVIDIA-Linux-x86_64-450.80.02.run
    -rwxr-xr-x. 1 root root 183481072 Jan 13 15:09 NVIDIA-Linux-x86_64-455.45.01.run

    [root@node1 gpu_driver]# mv NVIDIA-Linux-x86_64-450.80.02.run NVIDIA-Linux-x86_64-450.80.02.run.bak
    [root@node1 gpu_driver]# mv NVIDIA-Linux-x86_64-455.45.01.run NVIDIA-Linux-x86_64-450.80.02.run
    [root@node1 gpu_driver]# ll
    total 402564
    -rw-r--r--. 1 root root  86253524 Jul 14  2020 datacenter-gpu-manager-1.7.2-1.x86_64.rpm
    -rw-r--r--. 1 root root   1052036 Nov  3 13:57 nvidia-fabricmanager-450-450.80.02-1.x86_64.rpm
    -rw-r--r--. 1 root root    373768 Nov  3 13:57 nvidia-fabricmanager-devel-450-450.80.02-1.x86_64.rpm
    -rwxr-xr-x. 1 root root 183481072 Jan 13 15:09 NVIDIA-Linux-x86_64-450.80.02.run
    -rwxr-xr-x. 1 root root 141055124 Nov  3 13:57 NVIDIA-Linux-x86_64-450.80.02.run.bak

Run the install step again... success!!!

    [root@node1 gpu_driver]# nvidia-smi
    Wed Jan 13 21:31:16 2021
    +-----------------------------------------------------------------------------+
    | NVIDIA-SMI 455.45.01    Driver Version: 455.45.01    CUDA Version: 11.1     |
    |-------------------------------+----------------------+----------------------+
    | GPU  Name        Persistence-M| Bus-Id        Disp.A | Volatile Uncorr. ECC |
    | Fan  Temp  Perf  Pwr:Usage/Cap|         Memory-Usage | GPU-Util  Compute M. |
    |                               |                      |               MIG M. |
    |===============================+======================+======================|
    |   0  GeForce RTX 3090    Off  | 00000000:AF:00.0 Off |                  N/A |
    | 30%   16C    P8     7W / 350W |      0MiB / 24268MiB |      0%      Default |
    |                               |                      |                  N/A |
    +-------------------------------+----------------------+----------------------+

    +-----------------------------------------------------------------------------+
    | Processes:                                                                  |
    |  GPU   GI   CI        PID   Type   Process name                  GPU Memory |
    |        ID   ID                                                   Usage      |
    |=============================================================================|
    |  No running processes found                                                 |
    +-----------------------------------------------------------------------------+
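The rename trick and the follow-up check can be wrapped into two small functions. This is only a sketch: swap_installer and driver_is are hypothetical helper names introduced here, and the assumption (taken from the listing above) is that the deployment scripts look for one fixed installer file name.

```shell
# Hypothetical helper: put a newer installer in place of the file name the
# deployment scripts expect, keeping the original as <name>.bak.
swap_installer() {
    local dir="$1" expected="$2" replacement="$3"
    if [ ! -f "$dir/$replacement" ]; then
        echo "replacement installer not found: $dir/$replacement" >&2
        return 1
    fi
    # Back up the bundled installer only if it is actually there.
    if [ -f "$dir/$expected" ]; then
        mv "$dir/$expected" "$dir/$expected.bak" || return 1
    fi
    mv "$dir/$replacement" "$dir/$expected"
}

# Hypothetical helper: compare the first version string on stdin with the
# expected driver version.
driver_is() {
    local expected="$1" actual
    actual=$(head -n 1)
    [ "$actual" = "$expected" ]
}

# On the node from this post, usage might look like:
#   swap_installer /home/packages/gpu_driver \
#       NVIDIA-Linux-x86_64-450.80.02.run NVIDIA-Linux-x86_64-455.45.01.run
#   nvidia-smi --query-gpu=driver_version --format=csv,noheader | driver_is 455.45.01
```

Keeping the .bak copy means the bundled 450.80.02 installer can be restored with a single mv if the newer driver misbehaves.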

Step 9 error

- Error message

    TASK [image : load kolla images] **************************************************************************************************************************************
    fatal: [node1]: FAILED! => {"ansible_job_id": "664877348652.39512", "changed": true, "cmd": "cd /opt/aistation/kolla_images && bash -x loadimages.sh 192.168.0.170:5000 eb5a7c0df3494817845d1fcd21133afa kolla_images_list inspur-kollaimages.tar.gz", "delta": "0:00:32.406170", "end": "2021-01-13 15:35:38.628654", "finished": 1, "msg": "non-zero return code", "rc": 1, "start": "2021-01-13 15:35:06.222484", "stderr": "+ '[' 4 -ne 4 ']'\n+ registryaddress=192.168.0.170:5000\n+ registry_admin_password=eb5a7c0df3494817845d1fcd21133afa\n+ images_list_file=kolla_images_list\n+ images_file=inspur-kollaimages.tar.gz\n+ echo 'start pushing image to docker registry'\n+ docker load\n++ cat kolla_images_list\n+ images=com.inspur/centos-source-mariadb:aistation.0.0.200\n+ echo eb5a7c0df3494817845d1fcd21133afa\n+ docker login -u admin --password-stdin 192.168.0.170:5000\nError response from daemon: Get http://192.168.0.170:5000/v2/: dial tcp 192.168.0.170:5000: connect: connection refused\n+ for image in '${images[@]}'\n+ newImageName=192.168.0.170:5000/com.inspur/centos-source-mariadb:aistation.0.0.200\n+ echo 192.168.0.170:5000/com.inspur/centos-source-mariadb:aistation.0.0.200\n+ docker tag com.inspur/centos-source-mariadb:aistation.0.0.200 192.168.0.170:5000/com.inspur/centos-source-mariadb:aistation.0.0.200\n+ docker push 192.168.0.170:5000/com.inspur/centos-source-mariadb:aistation.0.0.200\nGet http://192.168.0.170:5000/v2/: dial tcp 192.168.0.170:5000: connect: connection refused", "stderr_lines": ["+ '[' 4 -ne 4 ']'", "+ registryaddress=192.168.0.170:5000", "+ registry_admin_password=eb5a7c0df3494817845d1fcd21133afa", "+ images_list_file=kolla_images_list", "+ images_file=inspur-kollaimages.tar.gz", "+ echo 'start pushing image to docker registry'", "+ docker load", "++ cat kolla_images_list", "+ images=com.inspur/centos-source-mariadb:aistation.0.0.200", "+ echo eb5a7c0df3494817845d1fcd21133afa", "+ docker login -u admin --password-stdin 192.168.0.170:5000", "Error response from daemon: Get http://192.168.0.170:5000/v2/: dial tcp 192.168.0.170:5000: connect: connection refused", "+ for image in '${images[@]}'", "+ newImageName=192.168.0.170:5000/com.inspur/centos-source-mariadb:aistation.0.0.200", "+ echo 192.168.0.170:5000/com.inspur/centos-source-mariadb:aistation.0.0.200", "+ docker tag com.inspur/centos-source-mariadb:aistation.0.0.200 192.168.0.170:5000/com.inspur/centos-source-mariadb:aistation.0.0.200", "+ docker push 192.168.0.170:5000/com.inspur/centos-source-mariadb:aistation.0.0.200", "Get http://192.168.0.170:5000/v2/: dial tcp 192.168.0.170:5000: connect: connection refused"], "stdout": "start pushing image to docker registry\nLoaded image: com.inspur/centos-source-mariadb:aistation.0.0.200\n192.168.0.170:5000/com.inspur/centos-source-mariadb:aistation.0.0.200\nThe push refers to repository [192.168.0.170:5000/com.inspur/centos-source-mariadb]", "stdout_lines": ["start pushing image to docker registry", "Loaded image: com.inspur/centos-source-mariadb:aistation.0.0.200", "192.168.0.170:5000/com.inspur/centos-source-mariadb:aistation.0.0.200", "The push refers to repository [192.168.0.170:5000/com.inspur/centos-source-mariadb]"]}

    NO MORE HOSTS LEFT ****************************************************************************************************************************************************
    to retry, use: --limit @/home/deploy-script/common/kolla_mariadb/cluster.retry

    PLAY RECAP ************************************************************************************************************************************************************
    node1 : ok=7 changed=5 unreachable=0 failed=1

- Solution

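Just run the installation again and it works. (Simple, right? But I burned a lot of effort in between and still couldn't fix it. In the end I gave up on step 9 and went straight to installing step 10, clicked too fast and accidentally ran the step 9 install again, and this time it succeeded...)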
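The log shows docker login and docker push both failing with "connection refused" against 192.168.0.170:5000, and a plain re-run succeeding. One possible reading, and it is only a guess that this post never confirms, is that the registry was not yet listening when the task first ran. Under that assumption, a small retry wrapper could wait for the registry before the step is re-run. The retry function below is a hypothetical helper, not part of the AIStation deployment scripts.

```shell
# Hypothetical helper: run a command up to <attempts> times, sleeping
# <delay> seconds between failed attempts. Succeeds as soon as the command does.
retry() {
    local attempts="$1" delay="$2" i=1
    shift 2
    while [ "$i" -le "$attempts" ]; do
        if "$@"; then
            return 0
        fi
        # Do not sleep after the final failed attempt.
        if [ "$i" -lt "$attempts" ]; then
            sleep "$delay"
        fi
        i=$((i + 1))
    done
    return 1
}

# Usage sketch: wait until something answers HTTP on the registry port.
# Without -f, curl exits 0 on any HTTP response (even 401) and non-zero
# when the connection is refused.
#   retry 10 3 curl -s -o /dev/null http://192.168.0.170:5000/v2/
```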

Step 11 error

- Error message

The installation had finished and reached the verification step, and that is when this error showed up...

It is plain to see that k8s is what's broken, so what actually needs fixing is the step 7 error.

    PLAY RECAP ************************************************************************************************************************************************************
    node1 : ok=49 changed=37 unreachable=0 failed=0

    + sleep 5
    + kubectl get pod -n aistation
    Error from server (Timeout): the server was unable to return a response in the time allotted, but may still be processing the request (get pods)
    [root@AIStationV3test aistation2.0]# kubectl get pod -A -o wide
    Error from server (Timeout): the server was unable to return a response in the time allotted, but may still be processing the request (get pods)

    [root@AIStationV3test aistation2.0]# bash health-check.sh
    Error from server (Timeout): the server was unable to return a response in the time allotted, but may still be processing the request (get pods)
    Error from server (Timeout): the server was unable to return a response in the time allotted, but may still be processing the request (get pods)
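Since every kubectl call above ends in a server-side timeout, a quick way to tell "API server is down or stuck" apart from "one query is slow" is to hit the health endpoint with a short client timeout. The sketch below assumes kubectl is on the PATH and already configured for this cluster; apiserver_ready and the KUBECTL override variable are hypothetical names introduced here.

```shell
# Hypothetical helper: ask the API server for /healthz with a short client
# timeout (default 10s). KUBECTL can be overridden to point at another
# binary, which also makes the function easy to exercise without a cluster.
apiserver_ready() {
    "${KUBECTL:-kubectl}" --request-timeout="${1:-10s}" get --raw /healthz >/dev/null 2>&1
}

# Usage sketch:
#   if apiserver_ready 5s; then echo "API server answers"; else echo "API server not responding"; fi
```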

Step 7 error

- Error message

    [root@AIStationV3test aistation2.0]# systemctl status kubelet
    ● kubelet.service - Kubernetes Kubelet Server
       Loaded: loaded (/etc/systemd/system/kubelet.service; enabled; vendor preset: disabled)
       Active: active (running) since Wed 2021-01-13 15:14:04 CST; 1h 12min ago
         Docs: https://github.com/GoogleCloudPlatform/kubernetes
     Main PID: 77402 (kubelet)
        Tasks: 0
       Memory: 21.9M
       CGroup: /system.slice/kubelet.service
               ‣ 77402 /usr/local/bin/kubelet --logtostderr=true --v=2 --address=192.168.0.170 --node-ip=192.168.0.170 --hostname-override=node1 --allow-privileged=true...

    Jan 13 16:26:06 node1 kubelet[77402]: W0113 16:26:06.298288 77402 container.go:523] Failed to update stats for container "/system.slice/docker-8b92c965f0879f6b5c4...
    Jan 13 16:26:06 node1 kubelet[77402]: E0113 16:26:06.707414 77402 fsHandler.go:118] failed to collect filesystem stats - rootDiskErr: could not stat "/v...f7ff6191d9
    Jan 13 16:26:07 node1 kubelet[77402]: I0113 16:26:07.264780 77402 kubelet.go:1932] SyncLoop (PLEG): "metrics-server-7c5c656d5d-dprj8_kube-system(35b2338c-556f-11e...
    Jan 13 16:26:07 node1 kubelet[77402]: E0113 16:26:07.266211 77402 pod_workers.go:190] Error syncing pod 35b2338c-556f-11eb-9e0d-b4055d088f2a ("metrics-server-7c5c...
    Jan 13 16:26:07 node1 kubelet[77402]: E0113 16:26:07.797865 77402 pod_workers.go:190] Error syncing pod 9d33ac32-5575-11eb-9e0d-b4055d088f2a ("alert-engine-5b6dff...
    Jan 13 16:26:08 node1 kubelet[77402]: E0113 16:26:08.797593 77402 pod_workers.go:190] Error syncing pod 1eede667-5576-11eb-9e0d-b4055d088f2a ("aistation-api-gatew...
    Jan 13 16:26:08 node1 kubelet[77402]: W0113 16:26:08.998309 77402 status_manager.go:485] Failed to get status for pod "ibase-service-74684b8c9f-sd9s8_ai...c9f-sd9s8)
    Jan 13 16:26:09 node1 kubelet[77402]: W0113 16:26:09.481292 77402 container.go:523] Failed to update stats for container "/system.slice/docker-e5af4a80ae2eb868188...
    Jan 13 16:26:09 node1 kubelet[77402]: W0113 16:26:09.818149 77402 container.go:523] Failed to update stats for container "/system.slice/docker-84ea2dd832b0f05adfc...
    Jan 13 16:26:09 node1 kubelet[77402]: E0113 16:26:09.985407 77402 kubelet_node_status.go:385] Error updating node status, will retry: error getting node...des node1)
    Hint: Some lines were ellipsized, use -l to show in full.

What followed was a round of restart, then status:

    [root@AIStationV3test aistation2.0]# systemctl restart kubelet
    [root@AIStationV3test aistation2.0]# systemctl status kubelet
    ● kubelet.service - Kubernetes Kubelet Server
       Loaded: loaded (/etc/systemd/system/kubelet.service; enabled; vendor preset: disabled)
       Active: active (running) since Wed 2021-01-13 16:26:25 CST; 1s ago
         Docs: https://github.com/GoogleCloudPlatform/kubernetes
      Process: 103130 ExecStartPre=/bin/mkdir -p /var/lib/kubelet/volume-plugins (code=exited, status=0/SUCCESS)
     Main PID: 103133 (kubelet)
        Tasks: 45
       Memory: 40.5M
       CGroup: /system.slice/kubelet.service
               └─103133 /usr/local/bin/kubelet --logtostderr=true --v=2 --address=192.168.0.170 --node-ip=192.168.0.170 --hostname-override=node1 --allow-privileged=tru...

    Jan 13 16:26:26 node1 kubelet[103133]: I0113 16:26:26.340248 103133 remote_image.go:50] parsed scheme: ""
    Jan 13 16:26:26 node1 kubelet[103133]: I0113 16:26:26.340263 103133 remote_image.go:50] scheme "" not registered, fallback to default scheme
    Jan 13 16:26:26 node1 kubelet[103133]: I0113 16:26:26.340389 103133 asm_amd64.s:1337] ccResolverWrapper: sending new addresses to cc: [{/var/run/dockersh...0 <nil>}]
    Jan 13 16:26:26 node1 kubelet[103133]: I0113 16:26:26.340440 103133 clientconn.go:796] ClientConn switching balancer to "pick_first"
    Jan 13 16:26:26 node1 kubelet[103133]: I0113 16:26:26.340447 103133 asm_amd64.s:1337] ccResolverWrapper: sending new addresses to cc: [{/var/run/dockersh...0 <nil>}]
    Jan 13 16:26:26 node1 kubelet[103133]: I0113 16:26:26.340482 103133 clientconn.go:796] ClientConn switching balancer to "pick_first"
    Jan 13 16:26:26 node1 kubelet[103133]: I0113 16:26:26.340571 103133 balancer_conn_wrappers.go:131] pickfirstBalancer: HandleSubConnStateChange: 0xc000d5d...CONNECTING
    Jan 13 16:26:26 node1 kubelet[103133]: I0113 16:26:26.340573 103133 balancer_conn_wrappers.go:131] pickfirstBalancer: HandleSubConnStateChange: 0xc00029a...CONNECTING
    Jan 13 16:26:26 node1 kubelet[103133]: I0113 16:26:26.341816 103133 balancer_conn_wrappers.go:131] pickfirstBalancer: HandleSubConnStateChange: 0xc000d5d950, READY
    Jan 13 16:26:26 node1 kubelet[103133]: I0113 16:26:26.344265 103133 balancer_conn_wrappers.go:131] pickfirstBalancer: HandleSubConnStateChange: 0xc00029acd0, READY
    Hint: Some lines were ellipsized, use -l to show in full.

    [root@AIStationV3test aistation2.0]# kubectl get nodes --show-labels
    Error from server (Timeout): the server was unable to return a response in the time allotted, but may still be processing the request (get nodes)

    [root@AIStationV3test install_config]# systemctl status kubelet -l
    ● kubelet.service - Kubernetes Kubelet Server
       Loaded: loaded (/etc/systemd/system/kubelet.service; enabled; vendor preset: disabled)
       Active: active (running) since Wed 2021-01-13 16:42:52 CST; 4min 2s ago
         Docs: https://github.com/GoogleCloudPlatform/kubernetes
      Process: 57554 ExecStartPre=/bin/mkdir -p /var/lib/kubelet/volume-plugins (code=exited, status=0/SUCCESS)
     Main PID: 57556 (kubelet)
        Tasks: 0
       Memory: 63.5M
       CGroup: /system.slice/kubelet.service
               ‣ 57556 /usr/local/bin/kubelet --logtostderr=true --v=2 --address=192.168.0.170 --node-ip=192.168.0.170 --hostname-override=node1 --allow-privileged=true --tls-cipher-suites=TLS_ECDHE_ECDSA_WITH_CHACHA20_POLY1305,TLS_ECDHE_RSA_WITH_AES_128_GCM_SHA256 --bootstrap-kubeconfig=/etc/kubernetes/bootstrap-kubelet.conf --kubeconfig=/etc/kubernetes/kubelet.conf --authentication-token-webhook --enforce-node-allocatable= --client-ca-file=/etc/kubernetes/ssl/ca.crt --rotate-certificates --pod-manifest-path=/etc/kubernetes/manifests --pod-infra-container-image=192.168.0.170:5000/com.inspur/pause-amd64:3.1 --node-status-update-frequency=10s --cgroup-driver=systemd --cgroups-per-qos=False --max-pods=110 --anonymous-auth=false --read-only-port=0 --fail-swap-on=True --runtime-cgroups=/systemd/system.slice --kubelet-cgroups=/systemd/system.slice --cluster-dns=10.233.0.3 --cluster-domain=cluster.local --resolv-conf=/etc/resolv.conf --node-labels= --eviction-hard= --image-gc-high-threshold=100 --image-gc-low-threshold=99 --kube-reserved cpu=100m --system-reserved cpu=100m --registry-burst=110 --registry-qps=110 --network-plugin=cni --cni-conf-dir=/etc/cni/net.d --cni-bin-dir=/opt/cni/bin --volume-plugin-dir=/var/lib/kubelet/volume-plugins

    Jan 13 16:46:54 node1 kubelet[57556]: E0113 16:46:54.255588 57556 eviction_manager.go:247] eviction manager: failed to get summary stats: failed to get node info: node "node1" not found
    Jan 13 16:46:54 node1 kubelet[57556]: E0113 16:46:54.268144 57556 kubelet.go:2246] node "node1" not found
    Jan 13 16:46:54 node1 kubelet[57556]: E0113 16:46:54.368355 57556 kubelet.go:2246] node "node1" not found
    Jan 13 16:46:54 node1 kubelet[57556]: E0113 16:46:54.468645 57556 kubelet.go:2246] node "node1" not found
    Jan 13 16:46:54 node1 kubelet[57556]: E0113 16:46:54.568872 57556 kubelet.go:2246] node "node1" not found
    Jan 13 16:46:54 node1 kubelet[57556]: E0113 16:46:54.669081 57556 kubelet.go:2246] node "node1" not found
    Jan 13 16:46:54 node1 kubelet[57556]: E0113 16:46:54.769283 57556 kubelet.go:2246] node "node1" not found
    Jan 13 16:46:54 node1 kubelet[57556]: E0113 16:46:54.869529 57556 kubelet.go:2246] node "node1" not found
    Jan 13 16:46:54 node1 kubelet[57556]: E0113 16:46:54.969820 57556 kubelet.go:2246] node "node1" not found
    Jan 13 16:46:55 node1 kubelet[57556]: E0113 16:46:55.069981 57556 kubelet.go:2246] node "node1" not found

The two key messages I kept chasing:

    Error from server (Timeout): the server was unable to return a response in the time allotted, but may still be processing the request (get pods)
    kubelet.go:2246] node "node1" not found
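To get a feel for how persistently the kubelet reports the second message, the journal can be filtered and counted. This is a small sketch, assuming the kubelet runs as the systemd unit kubelet (as the status output above indicates); count_node_not_found is a hypothetical helper name.

```shell
# Hypothetical helper: count log lines on stdin that report the given node
# as "not found", and print the count.
count_node_not_found() {
    # grep -c prints 0 but exits non-zero when nothing matches; "|| true"
    # keeps the helper's own exit status clean in that case.
    grep -cF "node \"$1\" not found" || true
}

# Usage sketch on the node:
#   journalctl -u kubelet --since "10 min ago" | count_node_not_found node1
```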

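I tried every fix I could find on Google, Bing and Baidu and still couldn't solve it. Finally I went and asked the R&D team, and R&D said:

- Solution

Reboot is the cure-all!!!

In the end, rebooting the server fixed the problem...

I was already on my way to a full reinstall when the reboot trick suddenly came to mind. We always joke that a reboot fixes 80% of problems, and this time it really did...

And then the installation succeeded.

What a wonderfully strange world.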

After a successful install

This is what it looks like