Contents

1. Overview

1.1 JobHistory server

1.2 Spark HistoryServer

2. Integration configuration

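1. Overview

1.1 JobHistory server

To review how past jobs ran, a history server has to be configured. Once it is in place, the logs of finished jobs can be opened from the YARN ResourceManager UI at xxxx:8088.

1.2 Spark HistoryServer

The HistoryServer service lets users inspect the execution details of historical (already completed) applications through the Spark UI, including job, stage, and task information. It is built on Spark event log files, so event logging must be switched on (spark.eventLog.enabled). As with JobHistory, the history of finished applications can then be reached from the YARN UI at xxxx:8088.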

2. Integration configuration

yarn-site.xml

<property>
  <name>yarn.log.server.url</name>
  <value>http://xxxxx:19888/jobhistory/logs</value>
</property>

mapred-site.xml

<property>
  <name>mapreduce.jobhistory.address</name>
  <value>xxxxx:10020</value>
</property>
<property>
  <name>mapreduce.jobhistory.webapp.address</name>
  <value>xxxxx:19888</value>
</property>

The complete service_ddl.json for YARN follows:

cat YARN/service_ddl.json

{"name": "YARN","label": "YARN","description": "分布式资源调度与管理平台","version": "3.3.3","sortNum": 2,"dependencies":["HDFS"],"packageName": "hadoop-3.3.3.tar.gz","decompressPackageName": "hadoop-3.3.3","roles": [{"name": "ResourceManager","label": "ResourceManager","roleType": "master","cardinality": "1","runAs": {"user": "yarn","group": "hadoop"},"sortNum": 1,"logFile": "logs/hadoop-yarn-resourcemanager-${host}.log","jmxPort": 9323,"startRunner": {"timeout": "60","program": "control_hadoop.sh","args": ["start","resourcemanager"]},"stopRunner": {"timeout": "600","program": "control_hadoop.sh","args": ["stop","resourcemanager"]},"statusRunner": {"timeout": "60","program": "control_hadoop.sh","args": ["status","resourcemanager"]},"restartRunner": {"timeout": "60","program": "control_hadoop.sh","args": ["restart","resourcemanager"]},"externalLink": {"name": "ResourceManager UI","label": "ResourceManager UI","url": "http://${host}:8088/ui2"}},{"name": "NodeManager","label": "NodeManager","roleType": "worker","cardinality": "1","runAs": {"user": "yarn","group": "hadoop"},"sortNum": 2,"logFile": "logs/hadoop-yarn-nodemanager-${host}.log","jmxPort": 9324,"startRunner": {"timeout": "60","program": "control_hadoop.sh","args": ["start","nodemanager"]},"stopRunner": {"timeout": "600","program": "control_hadoop.sh","args": ["stop","nodemanager"]},"statusRunner": {"timeout": "60","program": "control_hadoop.sh","args": ["status","nodemanager"]}},{"name": "HistoryServer","label": "HistoryServer","roleType": "master","cardinality": "1","runAs": {"user": "mapred","group": "hadoop"},"sortNum": 3,"logFile": "logs/hadoop-mapred-historyserver-${host}.log","jmxPort": 9325,"startRunner": {"timeout": "60","program": "control_hadoop.sh","args": ["start","historyserver"]},"stopRunner": {"timeout": "600","program": "control_hadoop.sh","args": ["stop","historyserver"]},"statusRunner": {"timeout": "60","program": "control_hadoop.sh","args": ["status","historyserver"]}},{"name": "YarnClient","label": 
"YarnClient","roleType": "client","cardinality": "1","runAs": {"user": "yarn","group": "hadoop"}}],"configWriter": {"generators": [{"filename": "yarn-site.xml","configFormat": "xml","outputDirectory": "etc/hadoop/","includeParams": ["yarn.nodemanager.resource.cpu-vcores","yarn.nodemanager.resource.memory-mb","yarn.scheduler.minimum-allocation-mb","yarn.scheduler.minimum-allocation-vcores","yarn.log.server.url","yarn.nodemanager.aux-services","yarn.log-aggregation-enable","yarn.resourcemanager.ha.enabled","yarn.resourcemanager.hostname","yarn.resourcemanager.address","yarn.resourcemanager.webapp.address","yarn.resourcemanager.scheduler.address","yarn.resourcemanager.resource-tracker.address","yarn.resourcemanager.store.class","yarn.application.classpath","yarn.nodemanager.local-dirs","yarn.nodemanager.log-dirs","yarn.nodemanager.address","yarn.nodemanager.resource.count-logical-processors-as-cores","yarn.nodemanager.resource.detect-hardware-capabilities","yarn.nodemanager.resource.pcores-vcores-multiplier","yarn.resourcemanager.am.max-attempts","yarn.node-labels.enabled","yarn.node-labels.fs-store.root-dir","yarn.resourcemanager.principal","yarn.resourcemanager.keytab","yarn.nodemanager.principal","yarn.nodemanager.keytab","yarn.nodemanager.container-executor.class","yarn.nodemanager.linux-container-executor.group","yarn.nodemanager.linux-container-executor.path","custom.yarn.site.xml"]},{"filename": "mapred-site.xml","configFormat": "xml","outputDirectory": "etc/hadoop/","includeParams": ["mapreduce.jobhistory.keytab","mapreduce.jobhistory.principal","mapreduce.cluster.local.dir","mapreduce.jobhistory.address","mapreduce.jobhistory.webapp.address","custom.mapred.site.xml"]}]},"parameters": [{"name": "yarn.node-labels.fs-store.root-dir","label": "Node Label存储目录","description": "Node Label存储目录","required": true,"type": "input","value": "hdfs://${dfs.nameservices}/user/yarn/nodeLabels","configurableInWizard": true,"hidden": false,"defaultValue": 
"hdfs://${dfs.nameservices}/user/yarn/nodeLabels"},{"name": "yarn.nodemanager.resource.cpu-vcores","label": "nodemanager虚拟核数","description": "nodemanager虚拟核数","required": true,"type": "input","value": "","configurableInWizard": true,"hidden": false,"defaultValue": "-1"},{"name": "yarn.nodemanager.resource.memory-mb","label": "Nodemanaer节点上YARN可使用的物理内存总量","description": "Nodemanaer节点上YARN可使用的物理内存总量","required": true,"type": "input","value": "","configurableInWizard": true,"hidden": false,"defaultValue": "2048"},{"name": "yarn.scheduler.minimum-allocation-mb","label": "最小可分配容器的大小","description": "最小可分配容器的大小","required": true,"type": "input","value": "","configurableInWizard": true,"hidden": false,"defaultValue": "1024"}, {"name": "yarn.scheduler.minimum-allocation-vcores","label": "nodemanager最小虚拟核数","description": "nodemanager最小虚拟核数","required": true,"type": "input","value": "","configurableInWizard": true,"hidden": false,"defaultValue": "1"},{"name": "yarn.nodemanager.resource.count-logical-processors-as-cores","label": "是否将物理核数作为虚拟核数","description": "是否将物理核数作为虚拟核数","required": true,"type": "switch","value": "","configurableInWizard": true,"hidden": false,"defaultValue": true},{"name": "yarn.nodemanager.resource.detect-hardware-capabilities","label": "是否让yarn自己检测硬件进行配置","description": "是否让yarn自己检测硬件进行配置","required": true,"type": "switch","value": "","configurableInWizard": true,"hidden": false,"defaultValue": true},{"name": "yarn.nodemanager.resource.pcores-vcores-multiplier","label": "虚拟核数与物理核数比例","description": "","required": true,"type": "input","value": "","configurableInWizard": true,"hidden": false,"defaultValue": "0.75"},{"name": "yarn.resourcemanager.am.max-attempts","label": "AM重试次数","description": "","required": true,"type": "input","value": "","configurableInWizard": true,"hidden": false,"defaultValue": "4"},{"name": "yarn.nodemanager.aux-services","label": "yarn服务机制","description": "yarn服务机制","required": true,"type": "input","value": 
"mapreduce_shuffle","configurableInWizard": true,"hidden": false,"defaultValue": "mapreduce_shuffle"},{"name": "yarn.log-aggregation-enable","label": "是否开启yarn日志聚合","description": "开启yarn日志聚合","required": true,"type": "switch","value":"","configurableInWizard": true,"hidden": false,"defaultValue": true},{"name": "yarn.resourcemanager.ha.enabled","label": "是否启用resourcemanager ha","description": "是否启用resourcemanager ha","configType": "ha","required": true,"type": "switch","value":"","configurableInWizard": true,"hidden": false,"defaultValue": false},{"name": "yarn.resourcemanager.hostname","label": "rm主机名","description": "","configType": "ha","required": true,"type": "input","value":"","configurableInWizard": true,"hidden": false,"defaultValue": "${host}"},{"name": "yarn.resourcemanager.webapp.address","label": "rm web地址","description": "","required": true,"type": "input","value":"","configurableInWizard": true,"hidden": false,"defaultValue": "${host}:8088"},{"name": "yarn.resourcemanager.address","label": "ResourceManager对客户端暴露的地址rm","description": "","required": true,"type": "input","value":"","configurableInWizard": true,"hidden": true,"defaultValue": "${host}:8032"},{"name": "yarn.resourcemanager.scheduler.address","label": "ResourceManager对ApplicationMaster暴露的访问地址rm","description": "","required": true,"type": "input","value":"","configurableInWizard": true,"hidden": true,"defaultValue": "${host}:8030"},{"name": "yarn.resourcemanager.resource-tracker.address","label": "ResourceManager对NodeManager暴露的地址rm","description": "","configType": "ha","required": true,"type": "input","value":"","configurableInWizard": true,"hidden": true,"defaultValue": "${host}:8031"},{"name": "yarn.resourcemanager.store.class","label": "yarn状态信息存储类","description": "","configType": "ha","required": true,"type": "input","value":"","configurableInWizard": true,"hidden": false,"defaultValue": "org.apache.hadoop.yarn.server.resourcemanager.recovery.ZKRMStateStore"},{"name": 
"yarn.nodemanager.address","label": "nodemanager地址","description": "","required": true,"type": "input","value":"","configurableInWizard": true,"hidden": false,"defaultValue": "0.0.0.0:45454"},{"name": "yarn.log.server.url","label": "historyserver地址","description": "","required": true,"type": "input","value":"","configurableInWizard": true,"hidden": false,"defaultValue": "http://${historyserverHost}:19888/jobhistory/logs"},{"name": "yarn.log-aggregation.retain-seconds","label": "日志保留时长","description": "","required": true,"type": "input","value":"","configurableInWizard": true,"hidden": false,"defaultValue": "2592000"},{"name": "yarn.nodemanager.remote-app-log-dir","label": "日志保留位置","description": "","required": true,"type": "input","value":"","configurableInWizard": true,"hidden": false,"defaultValue": "hdfs://${dfs.nameservices}/user/yarn/yarn-logs/"},{"name": "yarn.nodemanager.local-dirs","label": "NodeManager本地存储目录","description": "NodeManager本地存储目录,可配置多个,按逗号分隔","required": true,"configType": "path","separator": ",","type": "multiple","value": ["/data/nm"],"configurableInWizard": true,"hidden": false,"defaultValue": ""},{"name": "yarn.nodemanager.log-dirs","label": "NodeManager日志存储目录","description": "NodeManager日志存储目录,可配置多个,按逗号分隔","required": true,"configType": "path","separator": ",","type": "multiple","value": ["/data/nm/userlogs"],"configurableInWizard": true,"hidden": false,"defaultValue": ""},{"name": "mapreduce.cluster.local.dir","label": "MapReduce本地存储目录","description": "MapReduce本地存储目录","required": true,"configType": "path","separator": ",","type": "input","value": "/data/mapred/local","configurableInWizard": true,"hidden": false,"defaultValue": "/data/mapred/local"},{"name": "mapreduce.jobhistory.address","label": "MapReduceJobHistoryServer地址","description": "MapReduceJobHistoryServer地址","required": true,"type": "input","value":"","configurableInWizard": true,"hidden": false,"defaultValue": "${host}:10020"},{"name": 
"mapreduce.jobhistory.webapp.address","label": "MapReduceJobHistoryServerWeb-UI地址","description": "MapReduceJobHistoryServerWeb-UI地址","required": true,"type": "input","value":"","configurableInWizard": true,"hidden": false,"defaultValue": "${host}:19888"},{"name": "yarn.application.classpath","label": "yarn应用程序加载的classpath","description": "yarn应用程序加载的classpath","required": true,"separator": ",","type": "multiple","value":["${HADOOP_HOME}/etc/hadoop","${HADOOP_HOME}/share/hadoop/common/lib/*","${HADOOP_HOME}/share/hadoop/common/*","${HADOOP_HOME}/share/hadoop/hdfs","${HADOOP_HOME}/share/hadoop/hdfs/lib/*","${HADOOP_HOME}/share/hadoop/hdfs/*","${HADOOP_HOME}/share/hadoop/mapreduce/lib/*","${HADOOP_HOME}/share/hadoop/mapreduce/*","${HADOOP_HOME}/share/hadoop/yarn","${HADOOP_HOME}/share/hadoop/yarn/lib/*","${HADOOP_HOME}/share/hadoop/yarn/*"],"configurableInWizard": true,"hidden": false,"defaultValue": ["${HADOOP_HOME}/etc/hadoop","${HADOOP_HOME}/share/hadoop/common/lib/*","${HADOOP_HOME}/share/hadoop/common/*","${HADOOP_HOME}/share/hadoop/hdfs","${HADOOP_HOME}/share/hadoop/hdfs/lib/*","${HADOOP_HOME}/share/hadoop/hdfs/*","${HADOOP_HOME}/share/hadoop/mapreduce/lib/*","${HADOOP_HOME}/share/hadoop/mapreduce/*","${HADOOP_HOME}/share/hadoop/yarn","${HADOOP_HOME}/share/hadoop/yarn/lib/*","${HADOOP_HOME}/share/hadoop/yarn/*"]},{"name": "yarn.resourcemanager.principal","label": "ResourceManager服务的Kerberos主体","description": "","required": false,"configType": "kb","configWithKerberos": true,"type": "input","value": "rm/_HOST@HADOOP.COM","configurableInWizard": true,"hidden": true,"defaultValue": "rm/_HOST@HADOOP.COM"},{"name": "yarn.resourcemanager.keytab","label": "ResourceManager服务的Kerberos密钥文件路径","description": "","required": false,"configType": "kb","configWithKerberos": true,"type": "input","value": "/etc/security/keytab/rm.service.keytab","configurableInWizard": true,"hidden": true,"defaultValue": "/etc/security/keytab/rm.service.keytab"},{"name": 
"yarn.nodemanager.principal","label": "NodeManager服务的Kerberos主体","description": "","required": false,"configType": "kb","configWithKerberos": true,"type": "input","value": "nm/_HOST@HADOOP.COM","configurableInWizard": true,"hidden": true,"defaultValue": "nm/_HOST@HADOOP.COM"},{"name": "yarn.nodemanager.keytab","label": "NodeManager服务的Kerberos密钥文件路径","description": "","required": false,"configType": "kb","configWithKerberos": true,"type": "input","value": "/etc/security/keytab/nm.service.keytab","configurableInWizard": true,"hidden": true,"defaultValue": "/etc/security/keytab/nm.service.keytab"},{"name": "mapreduce.jobhistory.principal","label": "JobHistory服务的Kerberos主体","description": "","required": false,"configType": "kb","configWithKerberos": true,"type": "input","value": "jhs/_HOST@HADOOP.COM","configurableInWizard": true,"hidden": true,"defaultValue": "jhs/_HOST@HADOOP.COM"},{"name": "mapreduce.jobhistory.keytab","label": "JobHistory服务的Kerberos密钥文件路径","description": "","required": false,"configType": "kb","configWithKerberos": true,"type": "input","value": "/etc/security/keytab/jhs.service.keytab","configurableInWizard": true,"hidden": true,"defaultValue": "/etc/security/keytab/jhs.service.keytab"},{"name": "yarn.nodemanager.container-executor.class","label": "使用LinuxContainerExecutor管理Container","description": "","required": false,"configType": "kb","configWithKerberos": true,"type": "input","value": "org.apache.hadoop.yarn.server.nodemanager.LinuxContainerExecutor","configurableInWizard": true,"hidden": true,"defaultValue": "org.apache.hadoop.yarn.server.nodemanager.LinuxContainerExecutor"},{"name": "yarn.nodemanager.linux-container-executor.group","label": "NodeManager的启动用户的所属组","description": "","required": false,"configType": "kb","configWithKerberos": true,"type": "input","value": "hadoop","configurableInWizard": true,"hidden": true,"defaultValue": "hadoop"},{"name": "yarn.nodemanager.linux-container-executor.path","label": 
"LinuxContainerExecutor脚本路径","description": "","required": false,"configType": "kb","configWithKerberos": true,"type": "input","value": "${HADOOP_HOME}/bin/container-executor","configurableInWizard": true,"hidden": true,"defaultValue": "${HADOOP_HOME}/bin/container-executor"},{"name": "yarn.node-labels.enabled","label": "启用YARN标签调度","description": "开启 YARN Node Labels","required": true,"type": "switch","value": "","configurableInWizard": true,"hidden": false,"defaultValue": false},{"name": "enableKerberos","label": "启用Kerberos认证","description": "启用Kerberos认证","required": true,"type": "switch","value": false,"configurableInWizard": true,"hidden": false,"defaultValue": false},{"name": "custom.yarn.site.xml","label": "自定义配置yarn-site.xml","description": "自定义配置","configType": "custom","required": false,"type": "multipleWithKey","value": [],"configurableInWizard": true,"hidden": false,"defaultValue": ""},{"name": "custom.mapred.site.xml","label": "自定义配置mapred-site.xml","description": "自定义配置","configType": "custom","required": false,"type": "multipleWithKey","value": [],"configurableInWizard": true,"hidden": false,"defaultValue": ""}]

}

The complete service_ddl.json for SPARK3 follows:

cat SPARK3/service_ddl.json

{"name": "SPARK3","label": "Spark3","description": "分布式计算系统","version": "3.1.3","sortNum": 7,"dependencies":[],"packageName": "spark-3.1.3.tar.gz","decompressPackageName": "spark-3.1.3","roles": [{"name": "SparkClient3","label": "SparkClient3","roleType": "client","cardinality": "1","logFile": "logs/hadoop-${user}-datanode-${host}.log"},{"name": "SparkHistoryServer","label": "SparkHistoryServer","roleType": "master","runAs": {"user": "hdfs","group": "hadoop"},"cardinality": 1,"logFile": "logs/spark-HistoryServer-${host}.out","jmxPort": 9094,"startRunner": {"timeout": "60","program": "control_histroy_server.sh","args": ["start","histroyserver"]},"stopRunner": {"timeout": "600","program": "control_histroy_server.sh","args": ["stop","histroyserver"]},"statusRunner": {"timeout": "60","program": "control_histroy_server.sh","args": ["status","histroyserver"]},"restartRunner": {"timeout": "60","program": "control_histroy_server.sh","args": ["restart","histroyserver"]},"externalLink": {"name": "SparkHistoryServer Ui","label": "SparkHistoryServer Ui","url": "http://${host}:18081"}}],"configWriter": {"generators": [{"filename": "spark-env.sh","configFormat": "custom","templateName": "spark-env.ftl","outputDirectory": "conf","includeParams": ["SPARK_DIST_CLASSPATH","HADOOP_CONF_DIR","YARN_CONF_DIR","custom.spark.env.sh"]},{"filename": "spark-defaults.conf","configFormat": "properties2","outputDirectory": "conf","includeParams": ["spark.eventLog.dir","spark.history.fs.logDirectory","spark.eventLog.enabled","spark.master","spark.history.ui.port","spark.yarn.historyServer.address","spark.yarn.queue","spark.history.fs.cleaner.enabled","spark.history.fs.cleaner.interval","spark.history.fs.cleaner.maxAge","custom.spark.defaults.conf"]}]},"parameters": [{"name": "spark.eventLog.dir","label": "eventLog输出的hdfs路径","description": "eventLog输出的hdfs路径","required": true,"type": "input","value":"","configurableInWizard": true,"hidden": false,"defaultValue": 
"hdfs://${host}:8020/spark3-history"},{"name": "spark.history.fs.logDirectory","label": "Spark历史日志HDFS目录","description": "Spark历史日志HDFS目录","required": true,"type": "input","value":"","configurableInWizard": true,"hidden": false,"defaultValue": "hdfs://${host}:8020/spark3-history"},{"name": "spark.eventLog.enabled","label": "开启spark事件日志","description": "开启spark事件日志","required": true,"type": "input","value": "","configurableInWizard": true,"hidden": false,"defaultValue": "true"},{"name": "spark.master","label": "开启sparkmaster","description": "开启sparkmaster","required": true,"type": "input","value": "","configurableInWizard": true,"hidden": false,"defaultValue": "yarn"},{"name": "spark.history.ui.port","label": "sparkhistoryweb端口","description": "sparkhistoryweb端口","required": true,"type": "input","value": "18081","configurableInWizard": true,"hidden": false,"defaultValue": "18081"},{"name": "spark.yarn.queue","label": "指定提交到Yarn的资源池","description": "指定提交到Yarn的资源池","required": true,"type": "input","value": "","configurableInWizard": true,"hidden": false,"defaultValue": "default"},{"name": "spark.yarn.historyServer.address","label": "application的日志访问地址","description": "application的日志访问地址","required": true,"type": "input","value":"","configurableInWizard": true,"hidden": false,"defaultValue": "${host}:18081"},{"name": "spark.history.fs.cleaner.enabled","label": "sparkhistory日志是否定时清除","description": "sparkhistory日志是否定时清除","required": true,"type": "input","value": "","configurableInWizard": true,"hidden": false,"defaultValue": "true"},{"name": "spark.history.fs.cleaner.interval","label": "history-server的日志检查间隔","description": "history-server的日志检查间隔","required": true,"type": "input","value": "","configurableInWizard": true,"hidden": false,"defaultValue": "1d"},{"name": "spark.history.fs.cleaner.maxAge","label": "history-server日志生命周期","description": "history-server日志生命周期","required": true,"type": "input","value": "","configurableInWizard": true,"hidden": 
false,"defaultValue": "30d"},{"name": "SPARK_DIST_CLASSPATH","label": "spark加载Classpath路径","description": "","required": true,"configType": "map","type": "input","value": "","configurableInWizard": true,"hidden": false,"defaultValue": "$(${HADOOP_HOME}/bin/hadoop classpath)"},{"name": "HADOOP_CONF_DIR","label": "Hadoop配置文件目录","description": "","configType": "map","required": true,"type": "input","value": "","configurableInWizard": true,"hidden": false,"defaultValue": "${HADOOP_HOME}/etc/hadoop"},{"name": "YARN_CONF_DIR","label": "Yarn配置文件目录","description": "","configType": "map","required": true,"type": "input","value": "","configurableInWizard": true,"hidden": false,"defaultValue": "${HADOOP_HOME}/etc/hadoop"},{"name": "custom.spark.env.sh","label": "自定义配置spark-env.sh","description": "自定义配置spark-env.sh","configType": "custom","required": false,"type": "multipleWithKey","value": [{"SPARK_CLASSPATH":"${INSTALL_PATH}/spark-3.1.3/carbonlib/*"}],"configurableInWizard": true,"hidden": false,"defaultValue": ""},{"name": "custom.spark.defaults.conf","label": "自定义配置spark-defaults.conf","description": "自定义配置","configType": "custom","required": false,"type": "multipleWithKey","value": [],"configurableInWizard": true,"hidden": false,"defaultValue": ""}]

}

Spark HistoryServer start/stop script

cat spark-3.1.3/control_histroy_server.sh

#!/bin/bash
#
# Licensed to the Apache Software Foundation (ASF) under one or more
# contributor license agreements. See the NOTICE file distributed with
# this work for additional information regarding copyright ownership.
# The ASF licenses this file to You under the Apache License, Version 2.0
# (the "License"); you may not use this file except in compliance with
# the License. You may obtain a copy of the License at
#
# http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.
#

usage="Usage: start.sh (start|stop|status|restart) <command> "

# if no args specified, show usage
if [ $# -le 1 ]; then
  echo $usage
  exit 1
fi

startStop=$1
shift
command=$1
SH_DIR=`dirname $0`

export LOG_DIR=$SH_DIR/logs
export PID_DIR=$SH_DIR/pid
export HOSTNAME=`hostname`

if [ ! -d "$LOG_DIR" ]; then
  mkdir $LOG_DIR
fi

source /etc/profile.d/datasophon-env.sh

# Create the event log directory on HDFS if it does not exist yet
sudo -u hdfs /opt/datasophon/hdfs/bin/hdfs dfs -test -d /spark3-history
if [ $? -ne 0 ]; then
  source /etc/profile.d/datasophon-env.sh
  sudo -u hdfs /opt/datasophon/hdfs/bin/hdfs dfs -mkdir -p /spark3-history
  sudo -u hdfs /opt/datasophon/hdfs/bin/hdfs dfs -chown -R hdfs:hadoop /spark3-history/
  sudo -u hdfs /opt/datasophon/hdfs/bin/hdfs dfs -chmod -R 777 /spark3-history/
fi

start(){
  [ -w "$PID_DIR" ] || mkdir -p "$PID_DIR"
  # Count running Spark HistoryServer processes
  ifOrNot=`ps -ef |grep HistoryServer | grep spark |grep -v "grep" |wc -l`
  if [ 1 == $ifOrNot ]
  then
    echo "$command is running "
    exit 1
  else
    echo "$command is not running"
  fi
  echo starting $command, logging
  exec_command="$SH_DIR/sbin/start-history-server.sh"
  echo " $exec_command"
  $exec_command
}

stop(){
  ifOrNot=`ps -ef |grep HistoryServer | grep spark |grep -v "grep" |wc -l`
  if [ 1 == $ifOrNot ];then
    # Exclude the MapReduce JobHistoryServer from the jps listing
    ifStop=`jps | grep -E 'HistoryServer' | grep -v 'Job*' | awk '{print $1}'`
    if [ ! -z "$ifStop" ]; then
      echo "stop $command "
      kill -9 $ifStop
    fi
  else
    echo "$command is not running"
    exit 1
  fi
}

status(){
  ifOrNot=`ps -ef |grep HistoryServer | grep spark |grep -v "grep" |wc -l`
  if [ 1 == $ifOrNot ]
  then
    echo "$command is running "
  else
    echo "$command is not running"
    exit 1
  fi
}

restart(){
  stop
  sleep 10
  start
}

case $startStop in
  (start)
    start
    ;;
  (stop)
    stop
    ;;
  (status)
    status
    ;;
  (restart)
    restart
    ;;
  (*)
    echo $usage
    exit 1
    ;;
esac

echo "End $startStop $command."
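The script decides whether the server is up by counting `ps` lines that contain both "HistoryServer" and "spark" and comparing the count to exactly 1. A slightly stricter variant is sketched below, as an illustration rather than as part of the DataSophon script: it matches on Spark's HistoryServer main class (`org.apache.spark.deploy.history.HistoryServer`) and treats any count of one or more as running. The function names are hypothetical, and the process listing is read from stdin so the logic can be tried without a cluster.

```shell
#!/bin/bash
# Sketch only: helper names below are illustrative, not part of DataSophon.
SPARK_HS_CLASS='org.apache.spark.deploy.history.HistoryServer'

# Reads a `ps -ef`-style listing on stdin and prints how many lines belong to
# a Spark HistoryServer JVM. Lines of grep processes are filtered out, as in
# the original script. "|| true" hides grep's non-zero exit on zero matches.
count_spark_history_servers() {
  grep -F "$SPARK_HS_CLASS" | grep -v -c 'grep' || true
}

# Succeeds when at least one Spark HistoryServer line is present on stdin.
is_spark_history_server_running() {
  local n
  n=$(count_spark_history_servers)
  # -ge 1 rather than "== 1": a stray second instance still counts as running.
  [ "$n" -ge 1 ]
}

# Usage on a real host:
#   ps -ef | is_spark_history_server_running && echo "running"
```

Because the pattern is the fully qualified class name, the MapReduce JobHistoryServer process is not counted, so no separate exclusion of "Job" entries is needed.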

After repackaging Spark, deploy and install it through DataSophon:

Select the node for SparkHistoryServer

Select the nodes for SparkClient

Service configuration

Installation complete

The spark-defaults.conf file after installation

cat spark3/conf/spark-defaults.conf

spark.eventLog.dir hdfs://windp-aio:8020/spark3-history

spark.history.fs.logDirectory hdfs://windp-aio:8020/spark3-history

spark.eventLog.enabled true

spark.master yarn

spark.history.ui.port 18081

spark.yarn.historyServer.address windp-aio:18081

spark.yarn.queue default

spark.history.fs.cleaner.enabled true

spark.history.fs.cleaner.interval 1d

spark.history.fs.cleaner.maxAge 30d
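The history server can usually only show applications whose event logs are written to the directory it scans, so `spark.eventLog.dir` and `spark.history.fs.logDirectory` are expected to agree, as they do in the file above. A minimal sketch of that check follows. The helper names are hypothetical, and the parsing assumes the simple "key, whitespace, value" layout shown here, with no spaces inside values.

```shell
#!/bin/bash
# Sketch only: conf_get and check_event_log_conf are illustrative helpers.

# Print the value of KEY from a spark-defaults.conf style file.
# If the key appears more than once, the last occurrence wins.
conf_get() {
  local file=$1 key=$2
  awk -v k="$key" '$1 == k { v = $2 } END { if (v != "") print v }' "$file"
}

# Verify that event logging is on and that writer and reader use one directory.
check_event_log_conf() {
  local file=$1 enabled write_dir read_dir
  enabled=$(conf_get "$file" spark.eventLog.enabled)
  write_dir=$(conf_get "$file" spark.eventLog.dir)
  read_dir=$(conf_get "$file" spark.history.fs.logDirectory)
  if [ "$enabled" != "true" ]; then
    echo "spark.eventLog.enabled is not true"
    return 1
  fi
  if [ -z "$write_dir" ] || [ "$write_dir" != "$read_dir" ]; then
    echo "event log dir mismatch: '$write_dir' vs '$read_dir'"
    return 1
  fi
  echo "ok: $write_dir"
}

# Usage, assuming the install path used in this article:
#   check_event_log_conf /opt/datasophon/spark3/conf/spark-defaults.conf
```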

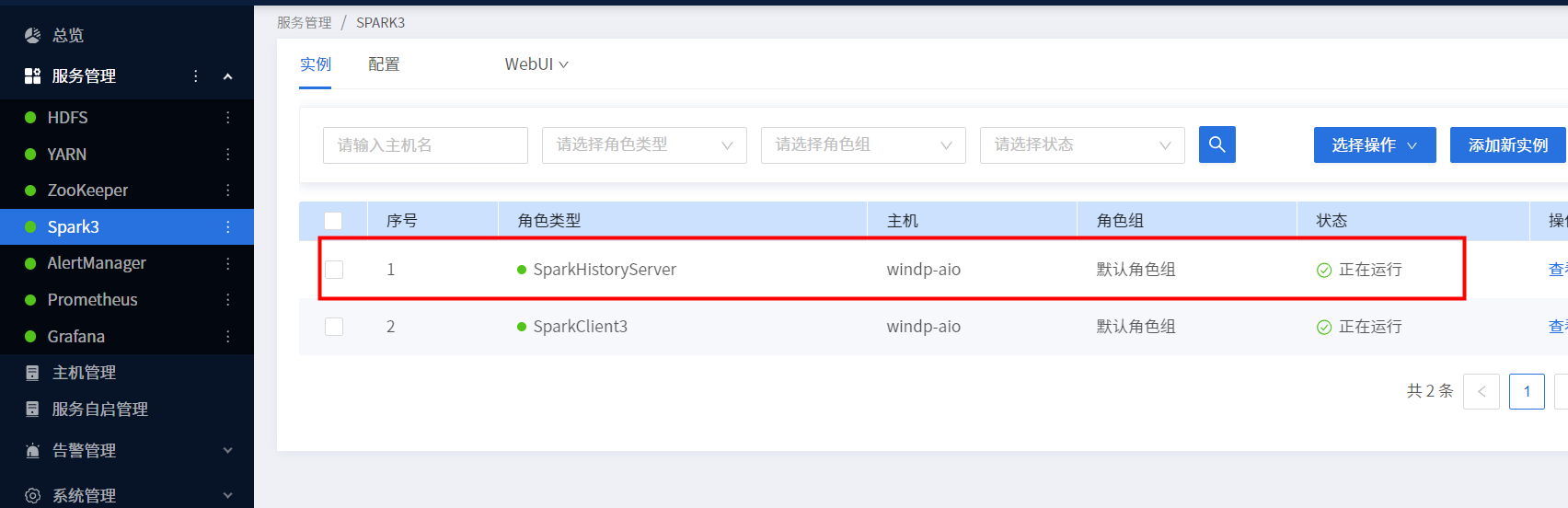

DataSophon page

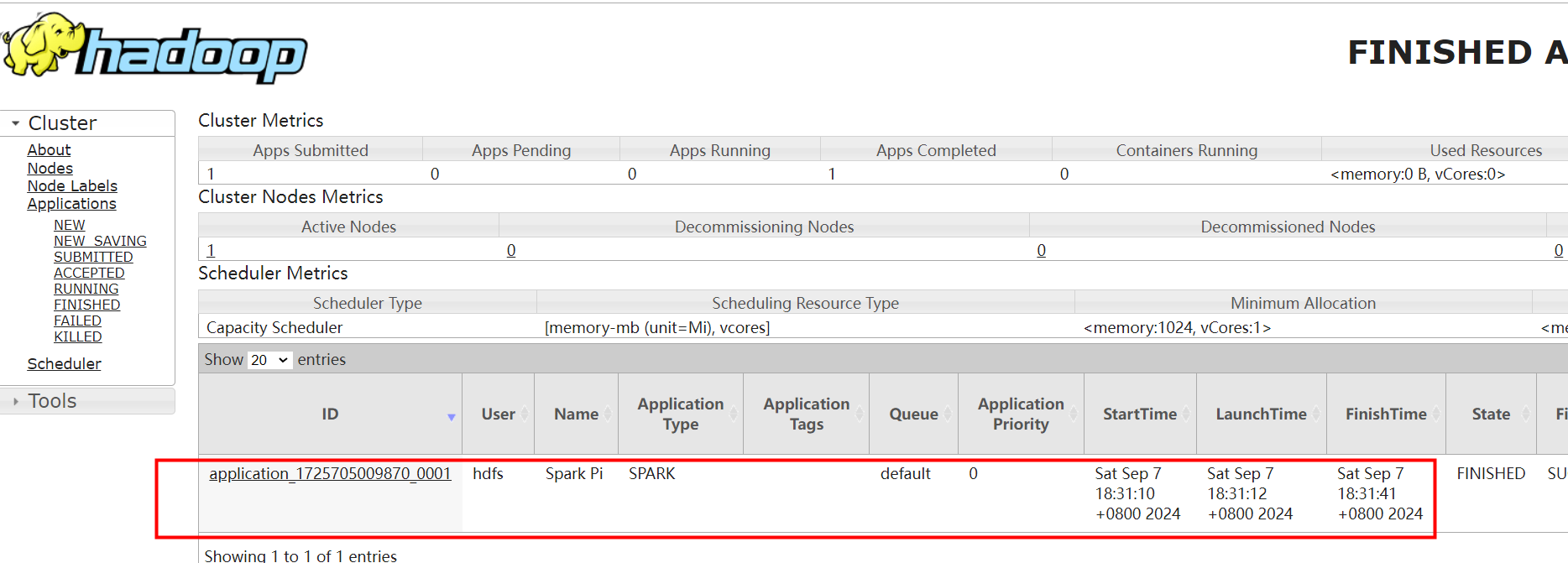

Submit a spark-examples test job

spark-submit \
--class org.apache.spark.examples.SparkPi \
--master yarn \
--executor-memory 1G \
--num-executors 2 \
/opt/datasophon/spark3/examples/jars/spark-examples_2.12-3.1.3.jar \
10

Check in YARN

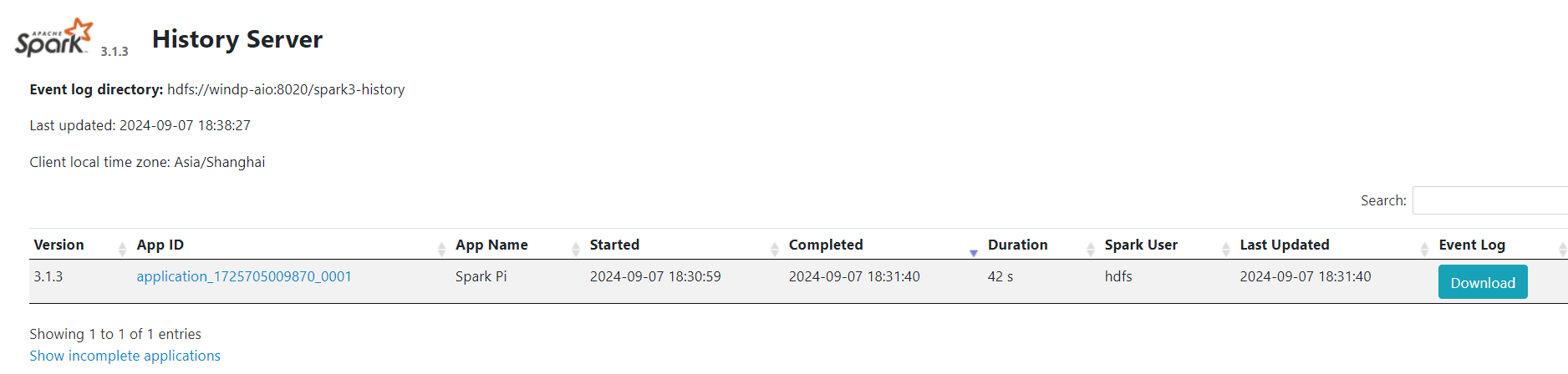

Check in Spark
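Besides the web UI, the finished SparkPi run can also be looked up from the command line through the history server's REST API under `/api/v1/applications`. The sketch below only builds the URL; the helper name is hypothetical, and the default port 18081 is the `spark.history.ui.port` value used in this article.

```shell
#!/bin/bash
# Sketch only: history_api_url is an illustrative helper.

# Print the REST URL that lists completed applications on a history server.
# $1 = host, $2 = port (defaults to 18081, this article's spark.history.ui.port)
history_api_url() {
  local host=$1 port=${2:-18081}
  printf 'http://%s:%s/api/v1/applications?status=completed\n' "$host" "$port"
}

# Usage (needs curl and a running history server):
#   curl -s "$(history_api_url windp-aio)"
```

If the integration works, the JSON returned by that request should contain an entry for the submitted test job.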

The integration is now complete.
