Flutter in Practice: Displaying Images with External Textures

In Flutter, you create an external-texture widget with the Texture widget. Texture is used to display video or canvas content that is rendered on the native side. Its only required parameter is textureId.
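A Texture has no intrinsic size and expands to fill its parent, so it is usually wrapped in a sized box. The following is a minimal sketch of that idea; `TextureBox` and its default sizes are hypothetical names chosen for illustration, and the optional `filterQuality` argument is shown only to make clear that textureId is not the sole constructor parameter.

```dart
import 'package:flutter/widgets.dart';

/// Hypothetical wrapper that gives an external texture a fixed layout size.
class TextureBox extends StatelessWidget {
  const TextureBox({
    Key? key,
    required this.textureId,
    this.width = 200,
    this.height = 200,
  }) : super(key: key);

  /// ID returned by the platform's texture registry.
  final int textureId;
  final double width;
  final double height;

  @override
  Widget build(BuildContext context) {
    return SizedBox(
      width: width,
      height: height,
      child: Texture(
        textureId: textureId,
        // Optional: sampling quality used when the texture is scaled.
        filterQuality: FilterQuality.medium,
      ),
    );
  }
}
```

Without the SizedBox, the texture would be stretched to whatever constraints its parent passes down.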

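With external textures, the data carrier between Flutter and native code is a PixelBuffer. The native data source (camera, player, and so on) writes its data into the PixelBuffer; Flutter takes the PixelBuffer, converts it into an OpenGL ES texture, and hands it to Skia for drawing. (This describes the OpenGL ES and Skia pipeline; newer iOS engines render through Metal, but the PixelBuffer hand-off is the same.)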

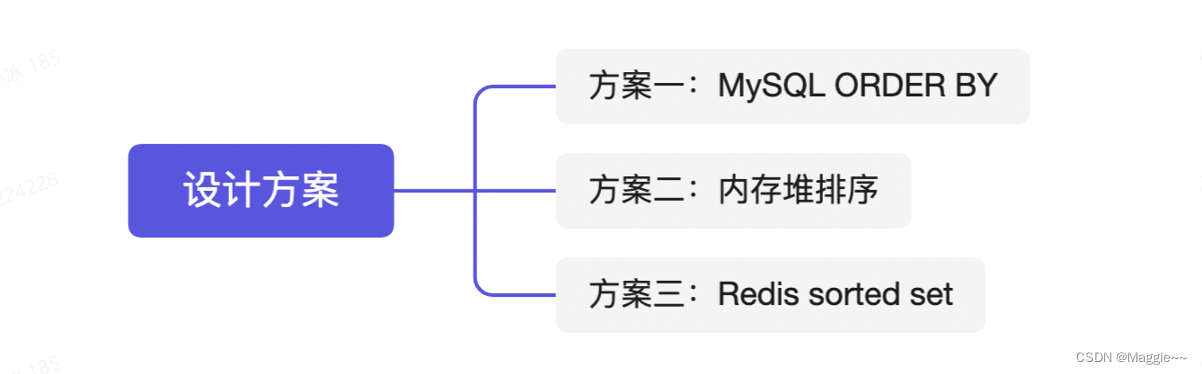

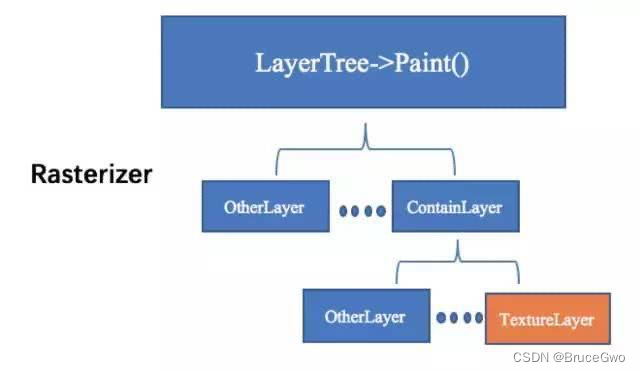

The Flutter rendering framework is shown in the figure above.

A simplified architecture diagram of the LayerTree.

This article analyzes external textures in detail: https://juejin.im/post/5b7b9051e51d45388b6aeceb

1. Using Texture

```dart
import 'package:flutter/material.dart';

void main() {
  runApp(MyApp());
}

class MyApp extends StatelessWidget {
  @override
  Widget build(BuildContext context) {
    return MaterialApp(
      home: Scaffold(
        appBar: AppBar(
          title: Text('External texture example'),
        ),
        body: Center(
          child: Texture(
            // This should be the texture ID received over a platform channel.
            textureId: 12345,
          ),
        ),
      ),
    );
  }
}
```

The textureId here is a placeholder. In real use you need to obtain the actual texture ID over a platform channel. For example, if the texture comes from native Android code, you might use a MethodChannel to send the texture ID to the Dart code.
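As a rough illustration of fetching the ID over a platform channel, the sketch below requests a texture ID once and shows the Texture only after the ID arrives. The channel name `demo_texture_channel`, the method name `createTexture`, and the `ChannelTexture` widget are all hypothetical; the native side would have to register a handler under the same names and reply with an integer.

```dart
import 'package:flutter/services.dart';
import 'package:flutter/widgets.dart';

// Hypothetical channel name; it must match the name used on the native side.
const MethodChannel _channel = MethodChannel('demo_texture_channel');

/// Asks the platform for a texture ID; returns null if the call fails.
Future<int?> requestTextureId() async {
  try {
    return await _channel.invokeMethod<int>('createTexture');
  } on PlatformException {
    return null; // The native handler reported an error.
  } on MissingPluginException {
    return null; // No native handler is registered for this channel.
  }
}

class ChannelTexture extends StatefulWidget {
  const ChannelTexture({Key? key}) : super(key: key);

  @override
  State<ChannelTexture> createState() => _ChannelTextureState();
}

class _ChannelTextureState extends State<ChannelTexture> {
  // Created once so that rebuilds do not request a new texture.
  late final Future<int?> _textureId = requestTextureId();

  @override
  Widget build(BuildContext context) {
    return FutureBuilder<int?>(
      future: _textureId,
      builder: (context, snapshot) {
        final id = snapshot.data;
        if (id == null) {
          // Still loading, or the request failed.
          return const SizedBox.shrink();
        }
        return Texture(textureId: id);
      },
    );
  }
}
```

Holding the future in the State object, rather than calling `requestTextureId()` inside `build`, avoids creating a new native texture on every rebuild.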

2. Displaying Images with an External Texture

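As described above, the carrier between Flutter and native code is a PixelBuffer. The Flutter side hands image downloading and caching over to SDWebImage on iOS: SDWebImage downloads the image and produces a UIImage, which is then converted into a CVPixelBufferRef.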
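To get a feel for what a decoded image costs in memory once it sits in a pixel buffer, here is a rough sketch of the arithmetic. It assumes 4 bytes per pixel (as in a 32-bit BGRA buffer) and ignores any row padding the platform may add; `pixelBufferBytes` is a hypothetical helper, not part of any SDK.

```dart
/// Bytes needed for a pixel buffer of the given size, assuming a fixed
/// number of bytes per pixel and no per-row padding.
int pixelBufferBytes(int widthPx, int heightPx, {int bytesPerPixel = 4}) {
  if (widthPx <= 0 || heightPx <= 0) {
    throw ArgumentError('width and height must be positive');
  }
  return widthPx * heightPx * bytesPerPixel;
}

void main() {
  final bytes = pixelBufferBytes(1080, 1920);
  print(bytes); // 8294400
  print((bytes / (1024 * 1024)).toStringAsFixed(2)); // 7.91 (MiB)
}
```

A full-screen image therefore takes several megabytes regardless of how small the compressed file was, which is one reason to pass a target size to the native side instead of always decoding at full resolution.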

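To do this, define an SDTexturePresenter class that implements the FlutterTexture protocol. SDTexturePresenter downloads the image through SDWebImage and converts it into a CVPixelBufferRef. The Flutter side calls into iOS over a channel, and the iOS plugin registers the presenter by calling registerTexture: on its NSObject<FlutterTextureRegistry> *textures object.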
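On the Dart side, every texture that is created should eventually be released again. The sketch below shows one possible way to pair the two calls. `TextureSession` and `Invoke` are hypothetical names; the method names `create` and `dispose` and the argument keys follow the channel contract used in this article. It assumes the native handler replies to every call, including `dispose`.

```dart
/// Sends one method call to the platform and returns its reply.
typedef Invoke = Future<Object?> Function(
    String method, Map<String, Object?> arguments);

/// Hypothetical helper that pairs each 'create' with exactly one 'dispose'.
class TextureSession {
  TextureSession(this._invoke);

  final Invoke _invoke;
  int? _textureId;
  bool _disposed = false;

  int? get textureId => _textureId;

  /// Asks the platform to register a texture for [imageUrl].
  Future<int?> create(String imageUrl,
      {double width = 0, double height = 0}) async {
    if (_disposed) return null;
    if (_textureId != null) return _textureId;
    final reply = await _invoke('create', {
      'imageUrl': imageUrl,
      'width': width,
      'height': height,
      'asGif': false,
    });
    final id = reply is int ? reply : null;
    if (id == null) return null;
    if (_disposed) {
      // dispose() ran while the request was in flight: release right away.
      await _invoke('dispose', {'textureId': id});
      return null;
    }
    _textureId = id;
    return id;
  }

  /// Asks the platform to unregister the texture. Safe to call repeatedly.
  Future<void> dispose() async {
    if (_disposed) return;
    _disposed = true;
    final id = _textureId;
    _textureId = null;
    if (id != null) {
      await _invoke('dispose', {'textureId': id});
    }
  }
}

Future<void> main() async {
  final calls = <String>[];
  // Fake platform side for illustration: always replies with texture ID 3.
  final session = TextureSession((method, arguments) async {
    calls.add(method);
    return method == 'create' ? 3 : null;
  });
  print(await session.create('https://example.com/a.png', width: 100, height: 100)); // 3
  await session.dispose();
  await session.dispose(); // The second call is a no-op.
  print(calls); // [create, dispose]
}
```

In a Flutter app the fake would be replaced by something like `(method, arguments) => channel.invokeMethod<Object?>(method, arguments)`, with `channel` being the MethodChannel shared with the native plugin.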

- A Texture-based image widget on the Flutter side

```dart
import 'package:flutter/material.dart';
import 'package:flutter/services.dart';

enum NetworkImageBoxFit { Fill, AspectFit, AspectFill }

class NetworkImageWidget extends StatefulWidget {
  const NetworkImageWidget({
    Key? key,
    required this.imageUrl,
    this.boxFit = NetworkImageBoxFit.AspectFill,
    this.width = 0,
    this.height = 0,
    this.placeholder,
    this.errorHolder,
  }) : super(key: key);

  final String imageUrl;
  final NetworkImageBoxFit boxFit;
  final double width;
  final double height;
  final Widget? placeholder;
  final Widget? errorHolder;

  @override
  _NetworkImageWidgetState createState() => _NetworkImageWidgetState();
}

class _NetworkImageWidgetState extends State<NetworkImageWidget> {
  // Any name works, as long as both sides use the same one.
  final MethodChannel _channel = MethodChannel('sd_texture_channel');

  // IDs returned by the system are >= 0; -1 means the texture is not loaded yet.
  int textureId = -1;

  @override
  void initState() {
    super.initState();
    newTexture();
  }

  @override
  void dispose() {
    if (textureId >= 0) {
      _channel.invokeMethod('dispose', {'textureId': textureId});
    }
    super.dispose();
  }

  BoxFit textureBoxFit(NetworkImageBoxFit imageBoxFit) {
    if (imageBoxFit == NetworkImageBoxFit.Fill) {
      return BoxFit.fill;
    }
    if (imageBoxFit == NetworkImageBoxFit.AspectFit) {
      return BoxFit.contain;
    }
    if (imageBoxFit == NetworkImageBoxFit.AspectFill) {
      return BoxFit.cover;
    }
    return BoxFit.fill;
  }

  Widget showTextureWidget(BuildContext context) {
    return Container(
      color: Colors.white,
      width: widget.width,
      height: widget.height,
      child: Texture(textureId: textureId),
    );
  }

  Future<void> newTexture() async {
    final int? aTextureId = await _channel.invokeMethod<int>('create', {
      'imageUrl': widget.imageUrl, // remote URL or local image name
      'width': widget.width,
      'height': widget.height,
      // Whether the image is a GIF. The platform side could also detect this itself.
      'asGif': false,
    });
    if (aTextureId == null) {
      return;
    }
    if (!mounted) {
      // The widget was removed while the request was in flight: release the texture.
      _channel.invokeMethod('dispose', {'textureId': aTextureId});
      return;
    }
    setState(() {
      textureId = aTextureId;
    });
  }

  @override
  Widget build(BuildContext context) {
    return textureId >= 0 ? showTextureWidget(context) : showDefault();
  }

  // Shown until the texture is ready: placeholder, then errorHolder, then a blank box.
  Widget showDefault() {
    return widget.placeholder ??
        widget.errorHolder ??
        Container(
          color: Colors.white,
          width: widget.width,
          height: widget.height,
        );
  }
}
```

- Downloading the image on iOS and converting it to a CVPixelBufferRef

SDTexturePresenter.h

```objc
#import <Foundation/Foundation.h>
#import <Flutter/Flutter.h>

@interface SDTexturePresenter : NSObject <FlutterTexture>

@property (copy, nonatomic) void (^updateBlock)(void);

- (instancetype)initWithImageStr:(NSString *)imageStr size:(CGSize)size asGif:(Boolean)asGif;
- (void)dispose;

@end
```

SDTexturePresenter.m

```objc
//
//  SDTexturePresenter.m
//  Pods
//
//  Created by xhw on 2020/5/15.
//

#import "SDTexturePresenter.h"
#import <Foundation/Foundation.h>
#import <UIKit/UIKit.h>
#import <CoreVideo/CoreVideo.h>
#import <ImageIO/ImageIO.h>
#import <SDWebImage/SDWebImageDownloader.h>
#import <SDWebImage/SDWebImageManager.h>

static uint32_t bitmapInfoWithPixelFormatType(OSType inputPixelFormat, bool hasAlpha) {
    if (inputPixelFormat == kCVPixelFormatType_32BGRA) {
        uint32_t bitmapInfo = kCGImageAlphaPremultipliedFirst | kCGBitmapByteOrder32Host;
        if (!hasAlpha) {
            bitmapInfo = kCGImageAlphaNoneSkipFirst | kCGBitmapByteOrder32Host;
        }
        return bitmapInfo;
    } else if (inputPixelFormat == kCVPixelFormatType_32ARGB) {
        uint32_t bitmapInfo = kCGImageAlphaPremultipliedFirst | kCGBitmapByteOrder32Big;
        return bitmapInfo;
    } else {
        NSLog(@"Unsupported pixel format");
        return 0;
    }
}

// Returns YES if the image has an alpha channel.
static BOOL CGImageRefContainsAlpha(CGImageRef imageRef) {
    if (!imageRef) {
        return NO;
    }
    CGImageAlphaInfo alphaInfo = CGImageGetAlphaInfo(imageRef);
    BOOL hasAlpha = !(alphaInfo == kCGImageAlphaNone ||
                      alphaInfo == kCGImageAlphaNoneSkipFirst ||
                      alphaInfo == kCGImageAlphaNoneSkipLast);
    return hasAlpha;
}

@interface SDTexturePresenter ()

@property (nonatomic) CVPixelBufferRef target;
@property (nonatomic, assign) CGSize size;
@property (nonatomic, assign) CGSize imageSize;  // actual image size in px
@property (nonatomic, assign) Boolean useExSize; // whether to use the externally supplied size
@property (nonatomic, assign) Boolean asGif;     // whether the image is a GIF

// GIF playback state
@property (nonatomic, strong) CADisplayLink *displayLink;
@property (nonatomic, strong) NSMutableArray<NSDictionary *> *images;
@property (nonatomic, assign) int now_index;             // index of the frame being shown
@property (nonatomic, assign) CGFloat can_show_duration; // time left before the next frame

@end

@implementation SDTexturePresenter

- (instancetype)initWithImageStr:(NSString *)imageStr size:(CGSize)size asGif:(Boolean)asGif {
    self = [super init];
    if (self) {
        self.size = size;
        self.asGif = asGif;
        self.useExSize = YES; // use the externally supplied size by default
        self.images = [NSMutableArray array];
        if ([imageStr hasPrefix:@"http://"] || [imageStr hasPrefix:@"https://"]) {
            [self loadImageWithStrFromWeb:imageStr];
        } else {
            [self loadImageWithStrForLocal:imageStr];
        }
    }
    return self;
}

- (void)dealloc {
    CVPixelBufferRelease(_target);
}

// Takes ownership of the new buffer and releases the previous one.
- (void)replaceTarget:(CVPixelBufferRef)newTarget {
    @synchronized (self) {
        CVPixelBufferRelease(_target);
        _target = newTarget;
    }
}

// The "copy" in the name means the caller owns the returned buffer and releases it.
// Return an extra retain here and keep our own reference until dispose or dealloc.
- (CVPixelBufferRef)copyPixelBuffer {
    @synchronized (self) {
        return CVPixelBufferRetain(_target);
    }
}

- (void)dispose {
    self.displayLink.paused = YES;
    [self.displayLink invalidate];
    self.displayLink = nil;
    [self replaceTarget:NULL];
}

// Draws the image into a newly created 32BGRA pixel buffer. The caller owns the result.
- (CVPixelBufferRef)CVPixelBufferRefFromUiImage:(UIImage *)img size:(CGSize)size {
    CGImageRef image = [img CGImage];
    if (!image) {
        return NULL;
    }
    CGFloat frameWidth = size.width;
    CGFloat frameHeight = size.height;
    // Fall back to the image's own size when no size was passed in,
    // or when the external size should not be used.
    if (frameWidth <= 0 || frameHeight <= 0 || !self.useExSize) {
        frameWidth = CGImageGetWidth(image);
        frameHeight = CGImageGetHeight(image);
    }
    BOOL hasAlpha = CGImageRefContainsAlpha(image);

    NSDictionary *options = @{
        (NSString *)kCVPixelBufferCGImageCompatibilityKey : @YES,
        (NSString *)kCVPixelBufferCGBitmapContextCompatibilityKey : @YES,
        (NSString *)kCVPixelBufferIOSurfacePropertiesKey : @{},
    };
    CVPixelBufferRef pxbuffer = NULL;
    CVReturn status = CVPixelBufferCreate(kCFAllocatorDefault, frameWidth, frameHeight,
                                          kCVPixelFormatType_32BGRA,
                                          (__bridge CFDictionaryRef)options, &pxbuffer);
    if (status != kCVReturnSuccess || pxbuffer == NULL) {
        return NULL;
    }

    CVPixelBufferLockBaseAddress(pxbuffer, 0);
    void *pxdata = CVPixelBufferGetBaseAddress(pxbuffer);
    CGColorSpaceRef rgbColorSpace = CGColorSpaceCreateDeviceRGB();
    uint32_t bitmapInfo = bitmapInfoWithPixelFormatType(kCVPixelFormatType_32BGRA, (bool)hasAlpha);
    CGContextRef context = CGBitmapContextCreate(pxdata, frameWidth, frameHeight, 8,
                                                 CVPixelBufferGetBytesPerRow(pxbuffer),
                                                 rgbColorSpace, bitmapInfo);
    if (context) {
        CGContextDrawImage(context, CGRectMake(0, 0, frameWidth, frameHeight), image);
        CGContextRelease(context);
    }
    CGColorSpaceRelease(rgbColorSpace);
    CVPixelBufferUnlockBaseAddress(pxbuffer, 0);
    return pxbuffer;
}

#pragma mark - image

- (void)loadImageWithStrForLocal:(NSString *)imageStr {
    if (self.asGif) {
        [self sd_GIFImagesWithLocalNamed:imageStr];
    } else {
        UIImage *image = [UIImage imageNamed:imageStr];
        [self replaceTarget:[self CVPixelBufferRefFromUiImage:image size:self.size]];
    }
}

- (void)loadImageWithStrFromWeb:(NSString *)imageStr {
    __weak typeof(self) weakSelf = self;
    [[SDWebImageDownloader sharedDownloader] downloadImageWithURL:[NSURL URLWithString:imageStr]
                                                        completed:^(UIImage * _Nullable image, NSData * _Nullable data, NSError * _Nullable error, BOOL finished) {
        __strong typeof(weakSelf) strongSelf = weakSelf;
        if (!strongSelf || !image) {
            return;
        }
        if (strongSelf.asGif && image.images.count > 0) {
            for (UIImage *uiImage in image.images) {
                NSDictionary *dic = @{@"duration" : @(image.duration / image.images.count),
                                      @"image" : uiImage};
                [strongSelf.images addObject:dic];
            }
            [strongSelf startGifDisplay];
        } else {
            [strongSelf replaceTarget:[strongSelf CVPixelBufferRefFromUiImage:image size:strongSelf.size]];
            if (strongSelf.updateBlock) {
                strongSelf.updateBlock();
            }
        }
    }];
}

- (void)updategif:(CADisplayLink *)displayLink {
    if (self.images.count == 0) {
        self.displayLink.paused = YES;
        [self.displayLink invalidate];
        self.displayLink = nil;
        return;
    }
    self.can_show_duration -= displayLink.duration;
    if (self.can_show_duration <= 0) {
        NSDictionary *dic = [self.images objectAtIndex:self.now_index];
        [self replaceTarget:[self CVPixelBufferRefFromUiImage:[dic objectForKey:@"image"] size:self.size]];
        if (self.updateBlock) {
            self.updateBlock();
        }
        self.now_index += 1;
        if (self.now_index >= self.images.count) {
            self.now_index = 0; // loop back to the first frame
        }
        self.can_show_duration = ((NSNumber *)[dic objectForKey:@"duration"]).floatValue;
    }
}

- (void)startGifDisplay {
    // The display link retains its target, so dispose must be called to stop it.
    self.displayLink = [CADisplayLink displayLinkWithTarget:self selector:@selector(updategif:)];
    [self.displayLink addToRunLoop:[NSRunLoop currentRunLoop] forMode:NSRunLoopCommonModes];
}

- (void)sd_GifImagesWithLocalData:(NSData *)data {
    if (!data) {
        return;
    }
    CGImageSourceRef source = CGImageSourceCreateWithData((__bridge CFDataRef)data, NULL);
    if (!source) {
        return;
    }
    size_t count = CGImageSourceGetCount(source);
    if (count <= 1) {
        // Not animated: show it as a still image.
        UIImage *stillImage = [[UIImage alloc] initWithData:data];
        [self replaceTarget:[self CVPixelBufferRefFromUiImage:stillImage size:self.size]];
        CFRelease(source);
        return;
    }
    for (size_t i = 0; i < count; i++) {
        CGImageRef image = CGImageSourceCreateImageAtIndex(source, i, NULL);
        if (!image) {
            continue;
        }
        UIImage *uiImage = [UIImage imageWithCGImage:image
                                               scale:[UIScreen mainScreen].scale
                                         orientation:UIImageOrientationUp];
        NSDictionary *dic = @{@"duration" : @([self sd_frameDurationAtIndex:i source:source]),
                              @"image" : uiImage};
        [_images addObject:dic];
        CGImageRelease(image);
    }
    CFRelease(source);
    [self startGifDisplay];
}

- (float)sd_frameDurationAtIndex:(NSUInteger)index source:(CGImageSourceRef)source {
    float frameDuration = 0.1f;
    CFDictionaryRef cfFrameProperties = CGImageSourceCopyPropertiesAtIndex(source, index, nil);
    if (!cfFrameProperties) {
        return frameDuration;
    }
    NSDictionary *frameProperties = (__bridge NSDictionary *)cfFrameProperties;
    NSDictionary *gifProperties = frameProperties[(NSString *)kCGImagePropertyGIFDictionary];
    NSNumber *delayTimeUnclampedProp = gifProperties[(NSString *)kCGImagePropertyGIFUnclampedDelayTime];
    if (delayTimeUnclampedProp) {
        frameDuration = [delayTimeUnclampedProp floatValue];
    } else {
        NSNumber *delayTimeProp = gifProperties[(NSString *)kCGImagePropertyGIFDelayTime];
        if (delayTimeProp) {
            frameDuration = [delayTimeProp floatValue];
        }
    }
    // Frames with a delay of 10 ms or less are shown for 100 ms instead.
    if (frameDuration < 0.011f) {
        frameDuration = 0.100f;
    }
    CFRelease(cfFrameProperties);
    return frameDuration;
}

- (void)sd_GIFImagesWithLocalNamed:(NSString *)name {
    if ([name hasSuffix:@".gif"]) {
        name = [name substringToIndex:name.length - 4];
    }
    CGFloat scale = [UIScreen mainScreen].scale;
    NSBundle *bundle = [NSBundle mainBundle];
    NSData *data = nil;
    // Prefer the @3x or @2x variant on Retina screens, then fall back to the plain name.
    if (scale > 2.0f) {
        NSString *path = [bundle pathForResource:[name stringByAppendingString:@"@3x"] ofType:@"gif"];
        data = [NSData dataWithContentsOfFile:path];
    }
    if (!data && scale > 1.0f) {
        NSString *path = [bundle pathForResource:[name stringByAppendingString:@"@2x"] ofType:@"gif"];
        data = [NSData dataWithContentsOfFile:path];
    }
    if (!data) {
        NSString *path = [bundle pathForResource:name ofType:@"gif"];
        data = [NSData dataWithContentsOfFile:path];
    }
    if (data) {
        [self sd_GifImagesWithLocalData:data];
    }
}

@end
```

- Returning the textureId to the Flutter side over the channel

SDTexturePlugin.h

```objc
#import <Flutter/Flutter.h>

@interface SDTexturePlugin : NSObject <FlutterPlugin>

+ (void)registerWithRegistrar:(NSObject<FlutterPluginRegistrar> *)registrar;

@end
```

SDTexturePlugin.m

```objc
#import "SDTexturePlugin.h"
#import "SDTexturePresenter.h"

@interface SDTexturePlugin ()

@property (nonatomic, strong) NSMutableDictionary<NSNumber *, SDTexturePresenter *> *renders;
@property (nonatomic, strong) NSObject<FlutterTextureRegistry> *textures;

@end

@implementation SDTexturePlugin

- (instancetype)initWithTextures:(NSObject<FlutterTextureRegistry> *)textures {
    self = [super init];
    if (self) {
        _renders = [[NSMutableDictionary alloc] init];
        _textures = textures;
    }
    return self;
}

+ (void)registerWithRegistrar:(NSObject<FlutterPluginRegistrar> *)registrar {
    FlutterMethodChannel *channel =
        [FlutterMethodChannel methodChannelWithName:@"sd_texture_channel"
                                    binaryMessenger:[registrar messenger]];
    SDTexturePlugin *instance = [[SDTexturePlugin alloc] initWithTextures:[registrar textures]];
    [registrar addMethodCallDelegate:instance channel:channel];
}

- (void)handleMethodCall:(FlutterMethodCall *)call result:(FlutterResult)result {
    NSLog(@"call method:%@ arguments:%@", call.method, call.arguments);
    if ([@"create" isEqualToString:call.method] || [@"acreate" isEqualToString:call.method]) {
        NSString *imageStr = call.arguments[@"imageUrl"];
        id asGifArg = call.arguments[@"asGif"];
        Boolean asGif = [asGifArg isKindOfClass:[NSNumber class]] && [asGifArg boolValue];
        // Flutter passes logical sizes; convert them to physical pixels.
        CGFloat scale = [UIScreen mainScreen].scale;
        CGFloat width = [call.arguments[@"width"] floatValue] * scale;
        CGFloat height = [call.arguments[@"height"] floatValue] * scale;
        CGSize size = CGSizeMake(width, height);

        SDTexturePresenter *render = [[SDTexturePresenter alloc] initWithImageStr:imageStr
                                                                            size:size
                                                                           asGif:asGif];
        int64_t textureId = [self.textures registerTexture:render];
        // Use a weak reference so the block does not keep the plugin alive.
        __weak typeof(self) weakSelf = self;
        render.updateBlock = ^{
            [weakSelf.textures textureFrameAvailable:textureId];
        };
        NSLog(@"handleMethodCall textureId:%lld", textureId);
        [_renders setObject:render forKey:@(textureId)];
        result(@(textureId));
    } else if ([@"dispose" isEqualToString:call.method]) {
        NSNumber *textureId = call.arguments[@"textureId"];
        if ([textureId isKindOfClass:[NSNumber class]]) {
            SDTexturePresenter *render = [_renders objectForKey:textureId];
            [_renders removeObjectForKey:textureId];
            [render dispose];
            [self.textures unregisterTexture:textureId.longLongValue];
        }
        // Always reply so the Dart-side Future completes.
        result(nil);
    } else {
        result(FlutterMethodNotImplemented);
    }
}

@end
```

3. Summary

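This post showed how to display images in Flutter through an external texture.

These are study notes. Keep improving a little every day.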