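Llama 3 blog series

Deploying large models locally on Windows with Llama 3 + LangGraph (1)

Deploying large models locally on Windows with Llama 3 + LangGraph (2)

Deploying large models locally on Windows with Llama 3 + LangGraph (3)

Deploying large models locally on Windows with Llama 3 + LangGraph (4)

Deploying large models locally on Windows with Llama 3 + LangGraph (5)

Deploying large models locally on Windows with Llama 3 + LangGraph (6)

Deploying large models locally on Windows with Llama 3 + LangGraph (7)

Deploying large models locally on Windows with Llama 3 + LangGraph (8)

Deploying large models locally on Windows with Llama 3 + LangGraph (9)

Deploying large models locally on Windows with Llama 3 + LangGraph (10)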

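Demystifying core technologies for building secure GenAI/LLMs: adversarial attacks on large models (1)

Demystifying core technologies for building secure GenAI/LLMs: adversarial attacks on large models (2)

Demystifying core technologies for building secure GenAI/LLMs: adversarial attacks on large models (3)

Demystifying core technologies for building secure GenAI/LLMs: adversarial attacks on large models (4)

Demystifying core technologies for building secure GenAI/LLMs: adversarial attacks on large models (5)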

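Hello, GPT-4o!

Large model tokenizers: visualizing the tokenizer (GPT-4o)

Large model tokenizers: the Byte Pair Encoding (BPE) algorithm explained with examples

Large model tokenizers: Byte Pair Encoding (BPE) source code analysis

Large models: the self-attention mechanism (Self-Attention) (1)

Large models: the self-attention mechanism (Self-Attention) (2)

Large models: the self-attention mechanism (Self-Attention) (3)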

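Deploying large models locally on Windows with Llama 3 + LangGraph (11)

Building secure, trustworthy enterprise AI applications with the Llama 3 model family: Code Llama (1)

Building secure, trustworthy enterprise AI applications with the Llama 3 model family: Code Llama (2)

Building secure, trustworthy enterprise AI applications with the Llama 3 model family: Code Llama (3)

Building secure, trustworthy enterprise AI applications with the Llama 3 model family: Code Llama (4)

Building secure, trustworthy enterprise AI applications with the Llama 3 model family: Code Llama (5)

Building secure, trustworthy enterprise AI applications with the Llama 3 model family: protecting LLM conversations with Llama Guard (1)

Building secure, trustworthy enterprise AI applications with the Llama 3 model family: protecting LLM conversations with Llama Guard (2)

Building secure, trustworthy enterprise AI applications with the Llama 3 model family: protecting LLM conversations with Llama Guard (3)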

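Large models: an in-depth look at Transformer positional encoding (Positional Embedding)

Large models: an in-depth look at Transformer Layer Normalization (1)

Large models: an in-depth look at Transformer Layer Normalization (2)

Large models: an in-depth look at Transformer Layer Normalization (3)

Large models: writing Meta's Llama 3 step by step in PyTorch (1) A starting point for beginners

Large models: writing Meta's Llama 3 step by step in PyTorch (2) A walkthrough of matrix operations

Large models: writing Meta's Llama 3 step by step in PyTorch (3) Initializing an embedding layer

Large models: writing Meta's Llama 3 step by step in PyTorch (4) Precomputing RoPE frequencies

Large models: writing Meta's Llama 3 step by step in PyTorch (5) Precomputing the causal mask

Large models: writing Meta's Llama 3 step by step in PyTorch (6) The first normalization: Root Mean Square normalization (RMSNorm)

Large models: writing Meta's Llama 3 step by step in PyTorch (7) Initializing multi-query attention

Large models: writing Meta's Llama 3 step by step in PyTorch (8) Rotary position embeddings

Large models: writing Meta's Llama 3 step by step in PyTorch (9) Computing self-attention

Large models: writing Meta's Llama 3 step by step in PyTorch (10) Residual connections and the SwiGLU FFN

Large models: writing Meta's Llama 3 step by step in PyTorch (11) The output probability distribution and loss computation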

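Large models: writing working code for Meta's Llama 3 in PyTorch (1) Loading a simplified tokenizer and setting parameters

Large models: writing working code for Meta's Llama 3 in PyTorch (2) RoPE and the attention mechanism

Large models: writing working code for Meta's Llama 3 in PyTorch (3) FeedForward and Residual Layers

Large models: writing working code for Meta's Llama 3 in PyTorch (4) Building the Llama3 model class itself

Large models: writing working code for Meta's Llama 3 in PyTorch (5) Training and testing your own minLlama3

Large models: writing working code for Meta's Llama 3 in PyTorch (6) Loading a trained miniLlama3 model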

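Building secure, trustworthy enterprise AI applications with the Llama 3 model family: protecting LLM conversations with Llama Guard (4)

Building secure, trustworthy enterprise AI applications with the Llama 3 model family: protecting LLM conversations with Llama Guard (5)

Building secure, trustworthy enterprise AI applications with the Llama 3 model family: protecting LLM conversations with Llama Guard (6)

Building secure, trustworthy enterprise AI applications with the Llama 3 model family: protecting LLM conversations with Llama Guard (7)

Building secure, trustworthy enterprise AI applications with the Llama 3 model family: protecting LLM conversations with Llama Guard (8)

Building secure, trustworthy enterprise AI applications with the Llama 3 model family: CyberSecEval 2, a benchmark for quantifying LLM security and capabilities (1)

Building secure, trustworthy enterprise AI applications with the Llama 3 model family: CyberSecEval 2, a benchmark for quantifying LLM security and capabilities (2)

Building secure, trustworthy enterprise AI applications with the Llama 3 model family: CyberSecEval 2, a benchmark for quantifying LLM security and capabilities (3)

Building secure, trustworthy enterprise AI applications with the Llama 3 model family: CyberSecEval 2, a benchmark for quantifying LLM security and capabilities (4)

Building secure, trustworthy enterprise AI applications with the Llama 3 model family: Code Shield (1) Introduction to Code Shield

Building secure, trustworthy enterprise AI applications with the Llama 3 model family: Code Shield (2) Preventing LLMs from generating insecure code

Building secure, trustworthy enterprise AI applications with the Llama 3 model family: Code Shield (3) Code Shield code examples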

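The Llama model family: fine-tuning a pretrained Llama 3 language model with Supervised Fine-Tuning (SFT) (1) Introduction to LLaMA-Factory

The Llama model family: fine-tuning a pretrained Llama 3 language model with Supervised Fine-Tuning (SFT) (2) LLaMA-Factory training methods and datasets

Large models with Ollama: unleashing the power of large language models on your local machine

The Llama model family: fine-tuning a pretrained Llama 3 language model with Supervised Fine-Tuning (SFT) (3) Fine-tuning through the Web UI

The Llama model family: fine-tuning a pretrained Llama 3 language model with Supervised Fine-Tuning (SFT) (4) Fine-tuning from the command line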

Fine-tuning from the command line

import json

args = dict(
    stage="sft",                       # do supervised fine-tuning
    do_train=True,
    model_name_or_path="unsloth/llama-3-8b-Instruct-bnb-4bit",  # use bnb-4bit-quantized Llama-3-8B-Instruct model
    dataset="identity,alpaca_en_demo", # use alpaca and identity datasets
    template="llama3",                 # use llama3 prompt template
    finetuning_type="lora",            # use LoRA adapters to save memory
    lora_target="all",                 # attach LoRA adapters to all linear layers
    output_dir="llama3_lora",          # the path to save LoRA adapters
    per_device_train_batch_size=2,     # the batch size
    gradient_accumulation_steps=4,     # the gradient accumulation steps
    lr_scheduler_type="cosine",        # use cosine learning rate scheduler
    logging_steps=10,                  # log every 10 steps
    warmup_ratio=0.1,                  # use warmup scheduler
    save_steps=1000,                   # save checkpoint every 1000 steps
    learning_rate=5e-5,                # the learning rate
    num_train_epochs=3.0,              # the epochs of training
    max_samples=500,                   # use 500 examples in each dataset
    max_grad_norm=1.0,                 # clip gradient norm to 1.0
    quantization_bit=4,                # use 4-bit QLoRA
    loraplus_lr_ratio=16.0,            # use LoRA+ algorithm with lambda=16.0
    use_unsloth=True,                  # use UnslothAI's LoRA optimization for 2x faster training
    fp16=True,                         # use float16 mixed precision training
)

json.dump(args, open("train_llama3.json", "w", encoding="utf-8"), indent=2)

%cd /content/LLaMA-Factory/

!llamafactory-cli train train_llama3.json

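- import json: imports Python's json module, which provides functions for reading and writing JSON data.

- args = dict(...): creates a dictionary named args whose key-value pairs define the parameters of the training run:
  - stage="sft": sets the training stage to supervised fine-tuning (SFT).
  - do_train=True: runs training.
  - model_name_or_path="unsloth/llama-3-8b-Instruct-bnb-4bit": the model to fine-tune, a Llama-3-8B-Instruct model pre-quantized to 4 bits with bitsandbytes.
  - dataset="identity,alpaca_en_demo": the datasets to train on, identity and alpaca_en_demo.
  - template="llama3": the prompt template used to format each example.
  - finetuning_type="lora": the fine-tuning method, LoRA (Low-Rank Adaptation).
  - lora_target="all": attaches LoRA adapters to all linear layers.
  - output_dir="llama3_lora": the directory where the LoRA adapters are saved.
  - per_device_train_batch_size=2: the training batch size on each device.
  - gradient_accumulation_steps=4: the number of steps over which gradients are accumulated before each optimizer update.
  - lr_scheduler_type="cosine": uses a cosine learning-rate schedule.
  - logging_steps=10: writes a log entry every 10 steps.
  - warmup_ratio=0.1: the fraction of total training steps used for learning-rate warmup.
  - save_steps=1000: saves a checkpoint every 1000 steps.
  - learning_rate=5e-5: the learning rate.
  - num_train_epochs=3.0: the number of training epochs.
  - max_samples=500: the maximum number of examples taken from each dataset.
  - max_grad_norm=1.0: clips the gradient norm to at most 1.0.
  - quantization_bit=4: uses 4-bit quantization of the base model (QLoRA).
  - loraplus_lr_ratio=16.0: enables LoRA+ with a learning-rate ratio (lambda) of 16.0; in LoRA+ the B matrices are trained with a learning rate lambda times that of the A matrices.
  - use_unsloth=True: enables Unsloth's LoRA optimizations for faster training.
  - fp16=True: uses float16 mixed-precision training (the Tesla T4 GPU in the log below does not support bfloat16).

- json.dump(args, open("train_llama3.json", "w", encoding="utf-8"), indent=2): uses json.dump() to write the args dictionary to a file named train_llama3.json. The file is opened in write mode with UTF-8 encoding, and indent=2 pretty-prints the JSON so it is easy to read.

- %cd /content/LLaMA-Factory/: changes the current working directory to /content/LLaMA-Factory/ (an IPython magic command).

- !llamafactory-cli train train_llama3.json: runs the train subcommand of the llamafactory-cli tool with train_llama3.json as its argument. The ! prefix runs a shell command from a Jupyter notebook. This starts the training job with the parameters defined in train_llama3.json.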
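The %cd and ! lines only work inside Jupyter/IPython. A minimal sketch of the same two steps in a plain Python script follows, assuming llamafactory-cli is installed on PATH and the LLaMA-Factory checkout lives at /content/LLaMA-Factory as in the notebook. The helper names write_config, train_command, and launch_training are hypothetical and not part of LLaMA-Factory.

```python
import json
import subprocess
from pathlib import Path


def write_config(args: dict, path: str) -> Path:
    """Write the training arguments as pretty-printed UTF-8 JSON.

    The with-block closes (and flushes) the file explicitly instead of
    relying on the garbage collector, as json.dump(args, open(...)) does.
    """
    p = Path(path).resolve()
    with p.open("w", encoding="utf-8") as f:
        json.dump(args, f, indent=2)
    return p


def train_command(config_path: str) -> list[str]:
    """Build the argv for `llamafactory-cli train <config>` (no shell needed).

    The path is made absolute so it still resolves after changing directory.
    """
    return ["llamafactory-cli", "train", str(Path(config_path).resolve())]


def launch_training(config_path: str, workdir: str = "/content/LLaMA-Factory") -> None:
    """Rough equivalent of `%cd workdir` followed by `!llamafactory-cli train config`.

    cwd=workdir changes directory only for the child process; check=True
    raises CalledProcessError if training exits with a non-zero status.
    """
    subprocess.run(train_command(config_path), cwd=workdir, check=True)
```

Usage: cfg = write_config(args, "train_llama3.json") followed by launch_training(str(cfg)), with args being the dictionary defined above. Running from the LLaMA-Factory directory presumably matters because relative paths, such as the directory holding the identity and alpaca_en_demo dataset definitions, are resolved against it.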

The log published on the official site is as follows:

/content/LLaMA-Factory

2024-05-18 13:59:50.424077: E external/local_xla/xla/stream_executor/cuda/cuda_dnn.cc:9261] Unable to register cuDNN factory: Attempting to register factory for plugin cuDNN when one has already been registered

2024-05-18 13:59:50.424155: E external/local_xla/xla/stream_executor/cuda/cuda_fft.cc:607] Unable to register cuFFT factory: Attempting to register factory for plugin cuFFT when one has already been registered

2024-05-18 13:59:50.548022: E external/local_xla/xla/stream_executor/cuda/cuda_blas.cc:1515] Unable to register cuBLAS factory: Attempting to register factory for plugin cuBLAS when one has already been registered

2024-05-18 13:59:52.887533: W tensorflow/compiler/tf2tensorrt/utils/py_utils.cc:38] TF-TRT Warning: Could not find TensorRT

05/18/2024 13:59:57 - WARNING - llamafactory.hparams.parser - We recommend enable `upcast_layernorm` in quantized training.

05/18/2024 13:59:57 - INFO - llamafactory.hparams.parser - Process rank: 0, device: cuda:0, n_gpu: 1, distributed training: False, compute dtype: torch.float16

/usr/local/lib/python3.10/dist-packages/huggingface_hub/file_download.py:1132: FutureWarning: `resume_download` is deprecated and will be removed in version 1.0.0. Downloads always resume when possible. If you want to force a new download, use `force_download=True`.
  warnings.warn(

tokenizer_config.json: 100% 51.1k/51.1k [00:00<00:00, 19.6MB/s]

tokenizer.json: 100% 9.09M/9.09M [00:00<00:00, 13.7MB/s]

special_tokens_map.json: 100% 459/459 [00:00<00:00, 3.85MB/s]

[INFO|tokenization_utils_base.py:2087] 2024-05-18 13:59:58,903 >> loading file tokenizer.json from cache at /root/.cache/huggingface/hub/models--unsloth--llama-3-8b-Instruct-bnb-4bit/snapshots/2950abc9d0b34ddd43fd52bbf0d7dca82807ce96/tokenizer.json

[INFO|tokenization_utils_base.py:2087] 2024-05-18 13:59:58,903 >> loading file added_tokens.json from cache at None

[INFO|tokenization_utils_base.py:2087] 2024-05-18 13:59:58,903 >> loading file special_tokens_map.json from cache at /root/.cache/huggingface/hub/models--unsloth--llama-3-8b-Instruct-bnb-4bit/snapshots/2950abc9d0b34ddd43fd52bbf0d7dca82807ce96/special_tokens_map.json

[INFO|tokenization_utils_base.py:2087] 2024-05-18 13:59:58,903 >> loading file tokenizer_config.json from cache at /root/.cache/huggingface/hub/models--unsloth--llama-3-8b-Instruct-bnb-4bit/snapshots/2950abc9d0b34ddd43fd52bbf0d7dca82807ce96/tokenizer_config.json

[WARNING|logging.py:314] 2024-05-18 13:59:59,296 >> Special tokens have been added in the vocabulary, make sure the associated word embeddings are fine-tuned or trained.

05/18/2024 13:59:59 - INFO - llamafactory.data.template - Replace eos token: <|eot_id|>

05/18/2024 13:59:59 - INFO - llamafactory.data.loader - Loading dataset identity.json...

Generating train split: 91 examples [00:00, 5252.40 examples/s]

Converting format of dataset: 100% 91/91 [00:00<00:00, 7062.42 examples/s]

05/18/2024 13:59:59 - INFO - llamafactory.data.loader - Loading dataset alpaca_en_demo.json...

Generating train split: 1000 examples [00:00, 101255.44 examples/s]

Converting format of dataset: 100% 500/500 [00:00<00:00, 45283.12 examples/s]

Running tokenizer on dataset: 100% 591/591 [00:00<00:00, 1534.82 examples/s]

input_ids:

[128000, 128006, 9125, 128007, 271, 2675, 527, 264, 11190, 18328, 13, 128009, 128006, 882, 128007, 271, 6151, 128009, 128006, 78191, 128007, 271, 9906, 0, 358, 1097, 445, 81101, 12, 18, 11, 459, 15592, 18328, 8040, 555, 445, 8921, 4940, 17367, 13, 2650, 649, 358, 7945, 499, 3432, 30, 128009]

inputs:

<|begin_of_text|><|start_header_id|>system<|end_header_id|>You are a helpful assistant.<|eot_id|><|start_header_id|>user<|end_header_id|>hi<|eot_id|><|start_header_id|>assistant<|end_header_id|>Hello! I am Llama-3, an AI assistant developed by LLaMA Factory. How can I assist you today?<|eot_id|>

label_ids:

[-100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, 9906, 0, 358, 1097, 445, 81101, 12, 18, 11, 459, 15592, 18328, 8040, 555, 445, 8921, 4940, 17367, 13, 2650, 649, 358, 7945, 499, 3432, 30, 128009]

labels:

Hello! I am Llama-3, an AI assistant developed by LLaMA Factory. How can I assist you today?<|eot_id|>

config.json: 100% 1.15k/1.15k [00:00<00:00, 9.54MB/s]

[INFO|configuration_utils.py:726] 2024-05-18 14:00:00,782 >> loading configuration file config.json from cache at /root/.cache/huggingface/hub/models--unsloth--llama-3-8b-Instruct-bnb-4bit/snapshots/2950abc9d0b34ddd43fd52bbf0d7dca82807ce96/config.json

[INFO|configuration_utils.py:789] 2024-05-18 14:00:00,783 >> Model config LlamaConfig {"_name_or_path": "unsloth/llama-3-8b-Instruct-bnb-4bit","architectures": ["LlamaForCausalLM"],"attention_bias": false,"attention_dropout": 0.0,"bos_token_id": 128000,"eos_token_id": 128009,"hidden_act": "silu","hidden_size": 4096,"initializer_range": 0.02,"intermediate_size": 14336,"max_position_embeddings": 8192,"model_type": "llama","num_attention_heads": 32,"num_hidden_layers": 32,"num_key_value_heads": 8,"pretraining_tp": 1,"quantization_config": {"_load_in_4bit": true,"_load_in_8bit": false,"bnb_4bit_compute_dtype": "bfloat16","bnb_4bit_quant_type": "nf4","bnb_4bit_use_double_quant": true,"llm_int8_enable_fp32_cpu_offload": false,"llm_int8_has_fp16_weight": false,"llm_int8_skip_modules": null,"llm_int8_threshold": 6.0,"load_in_4bit": true,"load_in_8bit": false,"quant_method": "bitsandbytes"},"rms_norm_eps": 1e-05,"rope_scaling": null,"rope_theta": 500000.0,"tie_word_embeddings": false,"torch_dtype": "bfloat16","transformers_version": "4.40.2","use_cache": true,"vocab_size": 128256

}

05/18/2024 14:00:00 - INFO - llamafactory.model.utils.quantization - Loading ?-bit BITSANDBYTES-quantized model.

🦥 Unsloth: Will patch your computer to enable 2x faster free finetuning.

[INFO|configuration_utils.py:726] 2024-05-18 14:00:01,407 >> loading configuration file config.json from cache at /root/.cache/huggingface/hub/models--unsloth--llama-3-8b-Instruct-bnb-4bit/snapshots/2950abc9d0b34ddd43fd52bbf0d7dca82807ce96/config.json

[INFO|configuration_utils.py:789] 2024-05-18 14:00:01,410 >> Model config LlamaConfig {"_name_or_path": "unsloth/llama-3-8b-Instruct-bnb-4bit","architectures": ["LlamaForCausalLM"],"attention_bias": false,"attention_dropout": 0.0,"bos_token_id": 128000,"eos_token_id": 128009,"hidden_act": "silu","hidden_size": 4096,"initializer_range": 0.02,"intermediate_size": 14336,"max_position_embeddings": 8192,"model_type": "llama","num_attention_heads": 32,"num_hidden_layers": 32,"num_key_value_heads": 8,"pretraining_tp": 1,"quantization_config": {"_load_in_4bit": true,"_load_in_8bit": false,"bnb_4bit_compute_dtype": "bfloat16","bnb_4bit_quant_type": "nf4","bnb_4bit_use_double_quant": true,"llm_int8_enable_fp32_cpu_offload": false,"llm_int8_has_fp16_weight": false,"llm_int8_skip_modules": null,"llm_int8_threshold": 6.0,"load_in_4bit": true,"load_in_8bit": false,"quant_method": "bitsandbytes"},"rms_norm_eps": 1e-05,"rope_scaling": null,"rope_theta": 500000.0,"tie_word_embeddings": false,"torch_dtype": "bfloat16","transformers_version": "4.40.2","use_cache": true,"vocab_size": 128256

}

==((====))==  Unsloth: Fast Llama patching release 2024.5
   \\   /|    GPU: Tesla T4. Max memory: 14.748 GB. Platform = Linux.
O^O/ \_/ \    Pytorch: 2.2.1+cu121. CUDA = 7.5. CUDA Toolkit = 12.1.
\        /    Bfloat16 = FALSE. Xformers = 0.0.25. FA = False.
 "-____-"     Free Apache license: http://github.com/unslothai/unsloth

[INFO|configuration_utils.py:726] 2024-05-18 14:00:01,510 >> loading configuration file config.json from cache at /root/.cache/huggingface/hub/models--unsloth--llama-3-8b-Instruct-bnb-4bit/snapshots/2950abc9d0b34ddd43fd52bbf0d7dca82807ce96/config.json

[INFO|configuration_utils.py:789] 2024-05-18 14:00:01,511 >> Model config LlamaConfig {"_name_or_path": "unsloth/llama-3-8b-Instruct-bnb-4bit","architectures": ["LlamaForCausalLM"],"attention_bias": false,"attention_dropout": 0.0,"bos_token_id": 128000,"eos_token_id": 128009,"hidden_act": "silu","hidden_size": 4096,"initializer_range": 0.02,"intermediate_size": 14336,"max_position_embeddings": 8192,"model_type": "llama","num_attention_heads": 32,"num_hidden_layers": 32,"num_key_value_heads": 8,"pretraining_tp": 1,"quantization_config": {"_load_in_4bit": true,"_load_in_8bit": false,"bnb_4bit_compute_dtype": "bfloat16","bnb_4bit_quant_type": "nf4","bnb_4bit_use_double_quant": true,"llm_int8_enable_fp32_cpu_offload": false,"llm_int8_has_fp16_weight": false,"llm_int8_skip_modules": null,"llm_int8_threshold": 6.0,"load_in_4bit": true,"load_in_8bit": false,"quant_method": "bitsandbytes"},"rms_norm_eps": 1e-05,"rope_scaling": null,"rope_theta": 500000.0,"tie_word_embeddings": false,"torch_dtype": "bfloat16","transformers_version": "4.40.2","use_cache": true,"vocab_size": 128256

}

[INFO|configuration_utils.py:726] 2024-05-18 14:00:01,711 >> loading configuration file config.json from cache at /root/.cache/huggingface/hub/models--unsloth--llama-3-8b-Instruct-bnb-4bit/snapshots/2950abc9d0b34ddd43fd52bbf0d7dca82807ce96/config.json

[INFO|configuration_utils.py:789] 2024-05-18 14:00:01,712 >> Model config LlamaConfig {"_name_or_path": "unsloth/llama-3-8b-Instruct-bnb-4bit","architectures": ["LlamaForCausalLM"],"attention_bias": false,"attention_dropout": 0.0,"bos_token_id": 128000,"eos_token_id": 128009,"hidden_act": "silu","hidden_size": 4096,"initializer_range": 0.02,"intermediate_size": 14336,"max_position_embeddings": 8192,"model_type": "llama","num_attention_heads": 32,"num_hidden_layers": 32,"num_key_value_heads": 8,"pretraining_tp": 1,"quantization_config": {"_load_in_4bit": true,"_load_in_8bit": false,"bnb_4bit_compute_dtype": "bfloat16","bnb_4bit_quant_type": "nf4","bnb_4bit_use_double_quant": true,"llm_int8_enable_fp32_cpu_offload": false,"llm_int8_has_fp16_weight": false,"llm_int8_skip_modules": null,"llm_int8_threshold": 6.0,"load_in_4bit": true,"load_in_8bit": false,"quant_method": "bitsandbytes"},"rms_norm_eps": 1e-05,"rope_scaling": null,"rope_theta": 500000.0,"tie_word_embeddings": false,"torch_dtype": "float16","transformers_version": "4.40.2","use_cache": true,"vocab_size": 128256

}

model.safetensors: 100% 5.70G/5.70G [00:35<00:00, 162MB/s]

[INFO|modeling_utils.py:3429] 2024-05-18 14:00:37,611 >> loading weights file model.safetensors from cache at /root/.cache/huggingface/hub/models--unsloth--llama-3-8b-Instruct-bnb-4bit/snapshots/2950abc9d0b34ddd43fd52bbf0d7dca82807ce96/model.safetensors

[INFO|modeling_utils.py:1494] 2024-05-18 14:00:37,747 >> Instantiating LlamaForCausalLM model under default dtype torch.float16.

[INFO|configuration_utils.py:928] 2024-05-18 14:00:37,753 >> Generate config GenerationConfig {"bos_token_id": 128000,"eos_token_id": 128009

}

[INFO|modeling_utils.py:4170] 2024-05-18 14:01:03,794 >> All model checkpoint weights were used when initializing LlamaForCausalLM.

[INFO|modeling_utils.py:4178] 2024-05-18 14:01:03,794 >> All the weights of LlamaForCausalLM were initialized from the model checkpoint at unsloth/llama-3-8b-Instruct-bnb-4bit.

If your task is similar to the task the model of the checkpoint was trained on, you can already use LlamaForCausalLM for predictions without further training.

generation_config.json: 100% 131/131 [00:00<00:00, 844kB/s]

[INFO|configuration_utils.py:883] 2024-05-18 14:01:04,017 >> loading configuration file generation_config.json from cache at /root/.cache/huggingface/hub/models--unsloth--llama-3-8b-Instruct-bnb-4bit/snapshots/2950abc9d0b34ddd43fd52bbf0d7dca82807ce96/generation_config.json

[INFO|configuration_utils.py:928] 2024-05-18 14:01:04,017 >> Generate config GenerationConfig {"bos_token_id": 128000,"eos_token_id": [128001,128009]

}

tokenizer_config.json: 100% 51.1k/51.1k [00:00<00:00, 628kB/s]

tokenizer.json: 100% 9.09M/9.09M [00:00<00:00, 13.7MB/s]

special_tokens_map.json: 100% 459/459 [00:00<00:00, 3.85MB/s]

[INFO|tokenization_utils_base.py:2087] 2024-05-18 14:01:05,847 >> loading file tokenizer.json from cache at huggingface_tokenizers_cache/models--unsloth--llama-3-8b-Instruct-bnb-4bit/snapshots/2950abc9d0b34ddd43fd52bbf0d7dca82807ce96/tokenizer.json

[INFO|tokenization_utils_base.py:2087] 2024-05-18 14:01:05,847 >> loading file added_tokens.json from cache at None

[INFO|tokenization_utils_base.py:2087] 2024-05-18 14:01:05,847 >> loading file special_tokens_map.json from cache at huggingface_tokenizers_cache/models--unsloth--llama-3-8b-Instruct-bnb-4bit/snapshots/2950abc9d0b34ddd43fd52bbf0d7dca82807ce96/special_tokens_map.json

[INFO|tokenization_utils_base.py:2087] 2024-05-18 14:01:05,848 >> loading file tokenizer_config.json from cache at huggingface_tokenizers_cache/models--unsloth--llama-3-8b-Instruct-bnb-4bit/snapshots/2950abc9d0b34ddd43fd52bbf0d7dca82807ce96/tokenizer_config.json

[INFO|tokenization_utils_base.py:2087] 2024-05-18 14:01:05,947 >> loading file tokenizer.json from cache at huggingface_tokenizers_cache/models--unsloth--llama-3-8b-Instruct-bnb-4bit/snapshots/2950abc9d0b34ddd43fd52bbf0d7dca82807ce96/tokenizer.json

[INFO|tokenization_utils_base.py:2087] 2024-05-18 14:01:05,947 >> loading file added_tokens.json from cache at None

[INFO|tokenization_utils_base.py:2087] 2024-05-18 14:01:05,947 >> loading file special_tokens_map.json from cache at huggingface_tokenizers_cache/models--unsloth--llama-3-8b-Instruct-bnb-4bit/snapshots/2950abc9d0b34ddd43fd52bbf0d7dca82807ce96/special_tokens_map.json

[INFO|tokenization_utils_base.py:2087] 2024-05-18 14:01:05,947 >> loading file tokenizer_config.json from cache at huggingface_tokenizers_cache/models--unsloth--llama-3-8b-Instruct-bnb-4bit/snapshots/2950abc9d0b34ddd43fd52bbf0d7dca82807ce96/tokenizer_config.json

[WARNING|logging.py:314] 2024-05-18 14:01:06,354 >> Special tokens have been added in the vocabulary, make sure the associated word embeddings are fine-tuned or trained.

05/18/2024 14:01:07 - INFO - llamafactory.model.utils.checkpointing - Gradient checkpointing enabled.

05/18/2024 14:01:07 - INFO - llamafactory.model.adapter - Upcasting trainable params to float32.

05/18/2024 14:01:07 - INFO - llamafactory.model.adapter - Fine-tuning method: LoRA

05/18/2024 14:01:07 - INFO - llamafactory.model.utils.misc - Found linear modules: gate_proj,o_proj,up_proj,v_proj,down_proj,k_proj,q_proj

[WARNING|logging.py:329] 2024-05-18 14:01:07,723 >> Unsloth 2024.5 patched 32 layers with 32 QKV layers, 32 O layers and 32 MLP layers.

05/18/2024 14:01:07 - INFO - llamafactory.model.loader - trainable params: 20971520 || all params: 8051232768 || trainable%: 0.2605

[INFO|trainer.py:626] 2024-05-18 14:01:07,793 >> Using auto half precision backend

05/18/2024 14:01:08 - INFO - llamafactory.train.utils - Using LoRA+ optimizer with loraplus lr ratio 16.00.

[WARNING|logging.py:329] 2024-05-18 14:01:08,161 >> ==((====))==  Unsloth - 2x faster free finetuning | Num GPUs = 1
   \\   /|    Num examples = 591 | Num Epochs = 3
O^O/ \_/ \    Batch size per device = 2 | Gradient Accumulation steps = 4
\        /    Total batch size = 8 | Total steps = 222
 "-____-"     Number of trainable parameters = 20,971,520

{'loss': 1.3241, 'grad_norm': 0.7079651355743408, 'learning_rate': 2.173913043478261e-05, 'epoch': 0.14}

{'loss': 1.1962, 'grad_norm': 0.9579208493232727, 'learning_rate': 4.347826086956522e-05, 'epoch': 0.27}

{'loss': 1.0155, 'grad_norm': 0.647014319896698, 'learning_rate': 4.98475042744222e-05, 'epoch': 0.41}

{'loss': 1.0735, 'grad_norm': 0.8048399090766907, 'learning_rate': 4.910506156279029e-05, 'epoch': 0.54}

{'loss': 1.0134, 'grad_norm': 1.2250347137451172, 'learning_rate': 4.7763104379936555e-05, 'epoch': 0.68}

{'loss': 1.008, 'grad_norm': 0.9024384021759033, 'learning_rate': 4.585500840294794e-05, 'epoch': 0.81}

{'loss': 1.0718, 'grad_norm': 1.1396949291229248, 'learning_rate': 4.342822968779448e-05, 'epoch': 0.95}

{'loss': 0.851, 'grad_norm': 1.457767367362976, 'learning_rate': 4.054312439471239e-05, 'epoch': 1.08}

{'loss': 0.7271, 'grad_norm': 0.5212425589561462, 'learning_rate': 3.727144767643984e-05, 'epoch': 1.22}

{'loss': 0.7867, 'grad_norm': 0.7233308553695679, 'learning_rate': 3.369456906329956e-05, 'epoch': 1.35}

{'loss': 0.804, 'grad_norm': 1.7029380798339844, 'learning_rate': 2.990144873009946e-05, 'epoch': 1.49}

{'loss': 0.7019, 'grad_norm': 0.9318327903747559, 'learning_rate': 2.5986424976906322e-05, 'epoch': 1.62}

{'loss': 0.6922, 'grad_norm': 1.2409173250198364, 'learning_rate': 2.2046867951027303e-05, 'epoch': 1.76}

{'loss': 0.7369, 'grad_norm': 0.6366718411445618, 'learning_rate': 1.8180757964234924e-05, 'epoch': 1.89}

{'loss': 0.6066, 'grad_norm': 0.6996042728424072, 'learning_rate': 1.4484248634655401e-05, 'epoch': 2.03}

{'loss': 0.5477, 'grad_norm': 0.976839542388916, 'learning_rate': 1.1049275460163999e-05, 'epoch': 2.16}

{'loss': 0.5469, 'grad_norm': 0.6803128719329834, 'learning_rate': 7.961269300209159e-06, 'epoch': 2.3}

{'loss': 0.5508, 'grad_norm': 0.6420938968658447, 'learning_rate': 5.297031633820193e-06, 'epoch': 2.43}

{'loss': 0.5453, 'grad_norm': 1.154207468032837, 'learning_rate': 3.1228244380351602e-06, 'epoch': 2.57}

{'loss': 0.4323, 'grad_norm': 0.6154763698577881, 'learning_rate': 1.4927221931831131e-06, 'epoch': 2.7}

{'loss': 0.4889, 'grad_norm': 0.8464673757553101, 'learning_rate': 4.472670021254899e-07, 'epoch': 2.84}

{'loss': 0.517, 'grad_norm': 0.8681368231773376, 'learning_rate': 1.2460271845654569e-08, 'epoch': 2.97}

100% 222/222 [23:48<00:00, 5.67s/it]

[INFO|<string>:474] 2024-05-18 14:24:56,760 >> Training completed. Do not forget to share your model on huggingface.co/models =)

{'train_runtime': 1428.5992, 'train_samples_per_second': 1.241, 'train_steps_per_second': 0.155, 'train_loss': 0.780267754922042, 'epoch': 3.0}

100% 222/222 [23:48<00:00, 6.44s/it]

[INFO|trainer.py:3305] 2024-05-18 14:24:56,763 >> Saving model checkpoint to llama3_lora

[INFO|configuration_utils.py:726] 2024-05-18 14:24:57,091 >> loading configuration file config.json from cache at /root/.cache/huggingface/hub/models--unsloth--llama-3-8b-Instruct-bnb-4bit/snapshots/2950abc9d0b34ddd43fd52bbf0d7dca82807ce96/config.json

[INFO|configuration_utils.py:789] 2024-05-18 14:24:57,092 >> Model config LlamaConfig {"_name_or_path": "meta-llama/Meta-Llama-3-8B-Instruct","architectures": ["LlamaForCausalLM"],"attention_bias": false,"attention_dropout": 0.0,"bos_token_id": 128000,"eos_token_id": 128009,"hidden_act": "silu","hidden_size": 4096,"initializer_range": 0.02,"intermediate_size": 14336,"max_position_embeddings": 8192,"model_type": "llama","num_attention_heads": 32,"num_hidden_layers": 32,"num_key_value_heads": 8,"pretraining_tp": 1,"quantization_config": {"_load_in_4bit": true,"_load_in_8bit": false,"bnb_4bit_compute_dtype": "bfloat16","bnb_4bit_quant_type": "nf4","bnb_4bit_use_double_quant": true,"llm_int8_enable_fp32_cpu_offload": false,"llm_int8_has_fp16_weight": false,"llm_int8_skip_modules": null,"llm_int8_threshold": 6.0,"load_in_4bit": true,"load_in_8bit": false,"quant_method": "bitsandbytes"},"rms_norm_eps": 1e-05,"rope_scaling": null,"rope_theta": 500000.0,"tie_word_embeddings": false,"torch_dtype": "bfloat16","transformers_version": "4.40.2","use_cache": true,"vocab_size": 128256

}

[INFO|tokenization_utils_base.py:2488] 2024-05-18 14:24:57,297 >> tokenizer config file saved in llama3_lora/tokenizer_config.json

[INFO|tokenization_utils_base.py:2497] 2024-05-18 14:24:57,297 >> Special tokens file saved in llama3_lora/special_tokens_map.json

***** train metrics *****
  epoch                    =        3.0
  total_flos               = 17013792GF
  train_loss               =     0.7803
  train_runtime            = 0:23:48.59
  train_samples_per_second =      1.241
  train_steps_per_second   =      0.155

[INFO|modelcard.py:450] 2024-05-18 14:24:57,468 >> Dropping the following result as it does not have all the necessary fields:

{'task': {'name': 'Causal Language Modeling', 'type': 'text-generation'}}
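The input_ids / label_ids sample near the top of the log shows how an SFT example is prepared. The conversation is rendered with the llama3 template, and every prompt token's label is set to -100, so only the assistant reply, including its closing <|eot_id|> (128009), contributes to the loss. PyTorch's CrossEntropyLoss skips targets equal to its default ignore_index of -100. The following is a simplified sketch in plain Python, not LLaMA-Factory's implementation. The helpers format_llama3, prompt_length, and build_labels are hypothetical. The assistant-header token IDs (128006, 78191, 128007, 271) are read off this one log sample.

```python
IGNORE_INDEX = -100  # default ignore_index of torch.nn.CrossEntropyLoss

# input_ids of the sample printed in the training log above
SAMPLE_INPUT_IDS = [
    128000, 128006, 9125, 128007, 271, 2675, 527, 264, 11190, 18328, 13, 128009,
    128006, 882, 128007, 271, 6151, 128009, 128006, 78191, 128007, 271,
    9906, 0, 358, 1097, 445, 81101, 12, 18, 11, 459, 15592, 18328, 8040, 555,
    445, 8921, 4940, 17367, 13, 2650, 649, 358, 7945, 499, 3432, 30, 128009,
]


def format_llama3(messages):
    """Render [{"role": ..., "content": ...}] in the Llama 3 chat format."""
    text = "<|begin_of_text|>"
    for m in messages:
        # Each turn: role header, a blank line, the content, then <|eot_id|>.
        text += (
            f"<|start_header_id|>{m['role']}<|end_header_id|>\n\n"
            f"{m['content']}<|eot_id|>"
        )
    return text


def prompt_length(input_ids, header=(128006, 78191, 128007, 271)):
    """Index just past the last assistant header (start_header, 'assistant',
    end_header, blank line); everything before it counts as prompt."""
    n = len(header)
    for start in range(len(input_ids) - n, -1, -1):
        if tuple(input_ids[start:start + n]) == header:
            return start + n
    raise ValueError("assistant header not found")


def build_labels(input_ids, prompt_len):
    """Copy input_ids, masking the first prompt_len tokens with IGNORE_INDEX."""
    if not 0 <= prompt_len <= len(input_ids):
        raise ValueError("prompt_len out of range")
    return [IGNORE_INDEX] * prompt_len + list(input_ids[prompt_len:])
```

For the logged sample, prompt_length(SAMPLE_INPUT_IDS) is 22, and build_labels reproduces the logged label_ids: 22 entries of -100 followed by the reply tokens 9906, 0, 358, ..., 128009.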

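Meaning of the Llama 3 model configuration parameters:

- _name_or_path: the model name or path of the model files, here "unsloth/llama-3-8b-Instruct-bnb-4bit", i.e. the "llama-3-8b-Instruct" model quantized to 4 bits.

- architectures: the model architecture class; here only "LlamaForCausalLM", meaning the model is set up as a causal language model (next-token prediction).

- attention_bias: whether the query/key/value/output projection layers of the attention block use bias terms; false here, so they do not.

- attention_dropout: the dropout rate applied in the attention mechanism; 0.0 here, i.e. no dropout.

- bos_token_id: the ID of the beginning-of-sequence token (<|begin_of_text|>); 128000 here.

- eos_token_id: the ID of the end-of-sequence token; 128009 here, which is <|eot_id|> (compare the last entry of input_ids in the log above).

- hidden_act: the activation function used in the feed-forward (MLP) layers; "silu" here.

- hidden_size: the dimension of the hidden states; 4096 here.

- initializer_range: the standard deviation of the normal distribution used to initialize the weights; 0.02 here.

- intermediate_size: the dimension of the intermediate layer of the feed-forward (MLP) block; 14336 here.

- max_position_embeddings: the maximum number of positions (sequence length) the model supports; 8192 here.

- model_type: the model type; "llama" here.

- num_attention_heads: the number of attention (query) heads; 32 here.

- num_hidden_layers: the number of Transformer decoder layers; 32 here.

- num_key_value_heads: the number of key/value heads used by grouped-query attention (GQA); 8 here, so every 4 query heads share one key/value head.

- pretraining_tp: the tensor parallelism (TP) degree used during pretraining; 1 here.

- quantization_config: the quantization settings: 4-bit rather than 8-bit loading, the compute dtype (bfloat16), the quantization type (nf4), whether double quantization is used (true), and the quantization method (bitsandbytes).

- rms_norm_eps: the epsilon of RMSNorm (Root Mean Square Normalization); 1e-05 here.

- rope_scaling: the scaling configuration for RoPE (rotary position embeddings); not set (null) here.

- rope_theta: the base frequency theta of RoPE; 500000.0 here.

- tie_word_embeddings: whether the input embedding and the output layer share their weights; false here.

- torch_dtype: the PyTorch data type of the weights; "bfloat16" here.

- transformers_version: the version of the transformers library; "4.40.2" here.

- use_cache: whether to cache past key/value states (the KV cache) during generation; true here.

- vocab_size: the vocabulary size; 128256 here.

These parameters define the model's concrete configuration, including its architecture, quantization settings, activation function, number of layers, and vocabulary size.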
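Several of these numbers combine into useful derived quantities. The sketch below uses the values from the LlamaConfig above; LlamaDims and the helper functions are hypothetical names. The KV-cache figure assumes 16-bit (fp16/bf16) cache entries and a single sequence. The LoRA count additionally assumes rank r = 8 (the args dictionary does not set a rank, and 8 is assumed to be LLaMA-Factory's default). It also assumes adapters on the seven linear modules listed in the log (q/k/v/o_proj and gate/up/down_proj). Under those assumptions it reproduces the logged trainable-parameter count of 20,971,520.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LlamaDims:
    """Sizes copied from the LlamaConfig printed in the log."""
    hidden_size: int = 4096
    intermediate_size: int = 14336
    num_attention_heads: int = 32
    num_key_value_heads: int = 8
    num_hidden_layers: int = 32
    max_position_embeddings: int = 8192


def head_dim(c: LlamaDims) -> int:
    # Each attention head works on hidden_size / num_attention_heads dimensions.
    return c.hidden_size // c.num_attention_heads


def gqa_group_size(c: LlamaDims) -> int:
    # Number of query heads sharing one key/value head (grouped-query attention).
    return c.num_attention_heads // c.num_key_value_heads


def kv_cache_bytes_per_token(c: LlamaDims, bytes_per_value: int = 2) -> int:
    # One K and one V vector per layer per KV head; 2 bytes per value in fp16/bf16.
    return 2 * c.num_hidden_layers * c.num_key_value_heads * head_dim(c) * bytes_per_value


def lora_trainable_params(c: LlamaDims, rank: int = 8) -> int:
    h, i = c.hidden_size, c.intermediate_size
    kv = c.num_key_value_heads * head_dim(c)  # output width of k_proj / v_proj
    # (in_features, out_features) of each linear layer that receives a LoRA adapter
    shapes = {
        "q_proj": (h, h), "k_proj": (h, kv), "v_proj": (h, kv), "o_proj": (h, h),
        "gate_proj": (h, i), "up_proj": (h, i), "down_proj": (i, h),
    }
    # LoRA adds A (rank x in_features) and B (out_features x rank) to each layer.
    per_layer = sum(rank * (d_in + d_out) for d_in, d_out in shapes.values())
    return per_layer * c.num_hidden_layers
```

With the defaults, head_dim is 128, each KV head serves 4 query heads, and the KV cache costs 131,072 bytes (128 KiB) per token. That is 1 GiB for a full 8192-token context. The 8 KV heads are what keep this figure 4 times smaller than with 32.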

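Num examples = 591: the training set contains 591 examples: 91 from identity plus 500 from alpaca_en_demo (max_samples=500 caps the 1,000-example alpaca_en_demo at 500).

Num Epochs = 3: training runs for 3 epochs.

Batch size per device = 2: the batch size on each device is 2.

Gradient Accumulation steps = 4: gradients are accumulated over 4 steps before each optimizer update.

Total batch size = 8: the effective batch size is 8 (batch size per device × gradient accumulation steps × number of devices = 2 × 4 × 1).

Total steps = 222: the total number of optimizer steps is 222 (591 examples ÷ 8 per step ≈ 73.9, i.e. 74 steps per epoch, × 3 epochs).

Number of trainable parameters = 20,971,520: the number of trainable (LoRA) parameters is 20,971,520, about 0.26% of all parameters.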
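The step count and the learning_rate column of the loss log can be reproduced by hand. The sketch below is plain Python and assumes the step accounting and the linear-warmup-plus-cosine-decay formula used by the Hugging Face Trainer, with warmup steps = ceil(warmup_ratio × total steps). The function names are hypothetical. With 591 examples, batch size 2, gradient accumulation 4, 3 epochs, and warmup_ratio 0.1, it gives 222 total steps and 23 warmup steps.

```python
import math


def total_batch_size(per_device: int, grad_accum: int, num_devices: int = 1) -> int:
    # Examples consumed per optimizer step.
    return per_device * grad_accum * num_devices


def total_steps(num_examples: int, per_device: int, grad_accum: int, epochs: float) -> int:
    # Single device: micro-batches per epoch, then optimizer updates per epoch.
    micro_batches = math.ceil(num_examples / per_device)
    updates_per_epoch = max(micro_batches // grad_accum, 1)
    return math.ceil(epochs * updates_per_epoch)


def warmup_steps(total: int, warmup_ratio: float) -> int:
    return math.ceil(total * warmup_ratio)


def cosine_lr(step: int, base_lr: float, warmup: int, total: int) -> float:
    # Linear warmup from 0 to base_lr over the first `warmup` steps ...
    if step < warmup:
        return base_lr * step / max(1, warmup)
    # ... then half a cosine wave from base_lr down to 0 at step `total`.
    progress = (step - warmup) / max(1, total - warmup)
    return base_lr * max(0.0, 0.5 * (1.0 + math.cos(math.pi * progress)))
```

For example, cosine_lr(10, 5e-5, 23, 222) is 5e-5 × 10/23 ≈ 2.1739e-05, the first logged learning rate. cosine_lr(220, 5e-5, 23, 222) ≈ 1.246e-08 matches the last logged value, just before the schedule reaches 0 at step 222.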

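Large model technology sharing

Online advanced seminar: "Enterprise-Grade Generative AI LLMs: Technology, Algorithms, and Hands-On Case Studies"

Module 1: The essence of Generative AI, its technical core, and the engineering practice lifecycle explained

Module 2: Inside industrial-grade prompting, with a hands-on, end-to-end LLM-based meeting assistant

Module 3: The three Llama 2 models explained, with hands-on building of a secure, reliable intelligent dialogue system

Module 4: The five core problems of GenAI/LLMs in production, with hands-on building of robust applications

Module 5: LLM application development: Agentic-based application techniques and hands-on case studies

Module 6: LLM fine-tuning and model quantization techniques with hands-on case studies

Module 7: Efficient fine-tuning of large models: PEFT algorithms, techniques, workflow, and advanced code practice

Module 8: LLM alignment techniques and workflow, with hands-on text toxicity analysis

Module 9: Demystifying Red Teaming, a core technique for building secure GenAI/LLMs, in practice

Module 10: Building trustworthy, secure, private enterprise large models: Responsible AI in practice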

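In-depth analysis of Llama 3 key technologies and building Responsible AI: algorithms and hands-on development

1. The Llama open-source model family: technologies, tools, and multimodality explained. Participants will gain an in-depth understanding of what is new in Meta Llama 3, such as its breakthroughs in language model technology, and learn how to build trust and safety AI with Llama 3. They will study the five technical branches and tools of Llama 3 in detail, as well as a hands-on case of Llama instruction fine-tuning on AWS.

2. Demystifying the distinctive architecture of the Llama 3 foundation model and its code: an in-depth look at techniques in Llama 3 such as the tiktoken-based tokenizer, the KV Cache, and Grouped-Query Attention. Project 2 dissects the Llama 3 source code line by line to deepen understanding.

3. Demystifying the core architecture of the Llama 3 foundation model and its code: the SwiGLU Activation Function, the FeedForward Block, the Encoder Block, and more. Project 3 covers the Llama 3 inference code to strengthen practical understanding.

4. Hands-on Responsible AI with LangGraph on Llama 3: Project 4 builds a LangGraph-based Responsible AI project on Llama 3. Participants will learn LangGraph's three core components, how it runs, and its workflow steps, strengthening their practical Responsible AI skills.

5. Inside the Llama model family's techniques for building secure, trustworthy enterprise AI applications: an in-depth look at the key technologies required, such as Code Llama and Llama Guard. Project 5 builds an upgraded version of the secure, reliable conversational AI project, strengthening practical understanding of security.

6. Fine-tuning techniques and algorithms for the Llama model family: participants will learn fine-tuning techniques and algorithms such as Supervised Fine-Tuning (SFT), reward models, the PPO algorithm, and the DPO algorithm. Project 6 implements PPO and DPO by hand to deepen understanding and application skills.

7. Demystifying reinforcement learning from feedback in the Llama model family: an in-depth study of RLAIF (reinforcement learning from AI feedback) and RLHF (reinforcement learning from human feedback). Project 7 implements Constitutional AI based on RLAIF.

8. DPO in Llama 3: principles, algorithm, components, implementation, and advanced variants. Learn how Llama 3 combines PPO and DPO, analyze how DPO works, examine its key algorithmic components in detail, and implement and test DPO from scratch in the comprehensive Project 8. The course also covers the advanced DPO variants Iterative DPO and IPO.

9. Safety design and implementation in the Llama model family: in this module, participants will learn about topics such as Safety in Pretraining and Safety Fine-Tuning, and apply them to developing secure, reliable GenAI/LLM projects.

10. Building a trustworthy, secure, private enterprise Responsible AI system with Llama 3: master Constitutional AI and Red Teaming with Llama 3.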

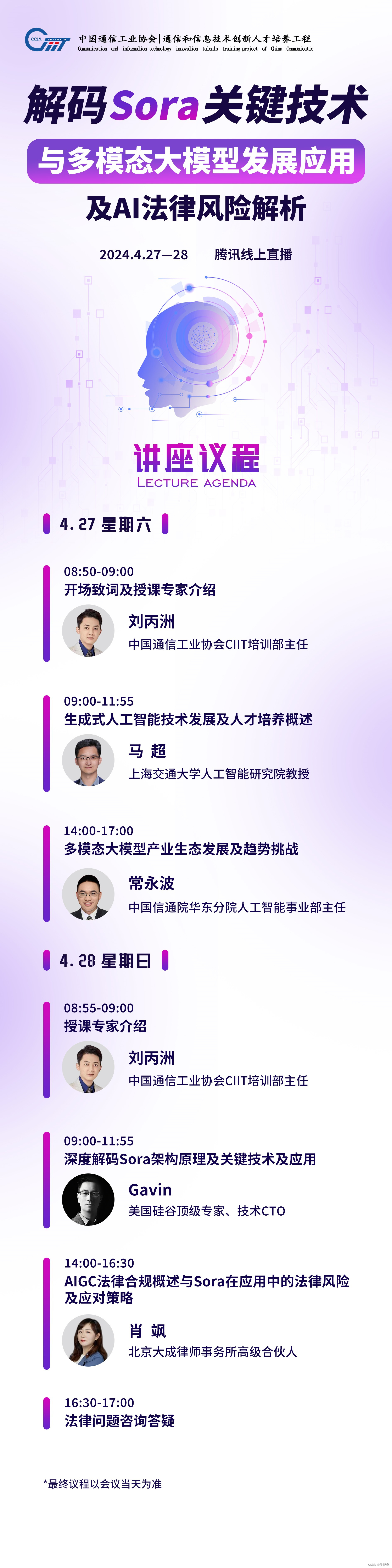

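Decoding Sora: architecture, technology, and applications

I. Why is Sora a milestone on the road to AGI?

1. Explore the key shift from large language models (LLMs) to large vision models (LVMs) and its role in achieving artificial general intelligence (AGI).

2. Present successful examples of combining visual data and text data, and analyze the key role Sora plays in this process.

3. Explain in detail how Sora generates video content with 3D consistency from text instructions.

4. Analyze the technical path by which Sora generates high-fidelity content from images or videos.

5. Discuss Sora's practical value in different application scenarios, along with its challenges and limitations.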

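II. Decoding the principles of Sora's architecture

1. The DiT (Diffusion Transformer) architecture explained.

2. How does DiT help Sora produce consistent, realistic, and imaginative video content?

3. Why a Transformer, rather than an architecture such as U-Net, was chosen as the core network for diffusion.

4. The principle and workflow of patchification in DiT, and why it matters for processing video and image data.

5. The conditional diffusion process explained, and its role in content generation.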

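III. Demystifying Sora's key technologies

1. How Sora uses Transformer and diffusion techniques to understand interactions between objects, and why this matters for simulating complex interactive scenes.

2. Why spacetime patches are the core of Sora's technology, and how they improve its video generation capabilities.

3. Spacetime latent patches explained: their key role in video compression and generation.

4. How the Sora simulator uses spacetime patches to build digital and physical worlds, and its ability to simulate changes in the real world.

5. How Sora faithfully generates content according to the user's input text: the technology and innovations behind it.

6. Why Sora generates content from abstract concepts rather than from specific pixels, and how this affects the quality and diversity of what it generates.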