Preparation

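- Prepare scenario-specific fine-tuning data: about 1,000 samples for the training set and about 300 for the test set (preferably in English). The dataset format looks like this: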

```json
// Discriminative (classification) task
[
  {"instruction": "# Goal\nYou are a senior Python programmer. Please check whether there is any risk of an endless loop in the code snippet.\n# Code\n```python\nn = 0\nwhile n < 100:\n    s = get_token()\n```", "input": "", "output": "{\"label\":1}"},
  // ...
]

// Generative (text-generation) task
[
  {"instruction": "# Goal\nYou are a senior Python programmer. Please write a binary search algorithm.", "input": "", "output": "def binary_search(arr, target):\n    left = 0\n    right = len(arr) - 1\n    while left <= right:\n        mid = left + (right - left) // 2\n        if arr[mid] == target:\n            return mid\n        elif arr[mid] > target:\n            right = mid - 1\n        else:\n            left = mid + 1\n    return -1"},
  // ...
]
```
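To get from one labeled pool to the roughly 1,000/300 train/test split described above, it can help to check that every record carries the `instruction`/`input`/`output` keys and then hold out the test set with a fixed seed. The sketch below is only an illustration: `validate_sample` and `split_samples` are hypothetical helper names, and the seed 3407 is borrowed from the training script so the split is reproducible.

```python
import json
import random


def validate_sample(sample: dict, task: str = "generate") -> None:
    """Raise ValueError if a sample lacks the instruction/input/output keys."""
    missing = [key for key in ("instruction", "input", "output") if key not in sample]
    if missing:
        raise ValueError(f"missing keys: {missing}")
    if task == "classify":
        # Classification targets are JSON strings such as '{"label":1}'
        label = json.loads(sample["output"])["label"]
        if label not in (0, 1):
            raise ValueError(f"unexpected label: {label!r}")


def split_samples(samples, test_size=300, seed=3407):
    """Shuffle a copy with a fixed seed; return (train, test) with test_size samples held out."""
    shuffled = list(samples)
    random.Random(seed).shuffle(shuffled)
    return shuffled[test_size:], shuffled[:test_size]


if __name__ == "__main__":
    # Made-up pool of 1,300 classification samples, for illustration only
    pool = [
        {"instruction": f"sample {i}", "input": "", "output": json.dumps({"label": i % 2})}
        for i in range(1300)
    ]
    for sample in pool:
        validate_sample(sample, task="classify")
    train, test = split_samples(pool)
    print(len(train), len(test))  # 1000 300
```

The train and test lists can then be written out with `json.dump` as the `.json` files the later scripts read.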

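unsloth is a relatively lightweight framework, well suited to developers who want to build a custom fine-tuning pipeline from scratch. This hands-on tutorial is based on unsloth:

https://github.com/unslothai/unsloth

The repository contains many fine-tuning tutorials, quantized LLMs, and datasets you can reuse. Other mainstream fine-tuning frameworks are more full-featured and offer better visualization:

- https://github.com/huggingface/trl

- https://github.com/OpenAccess-AI-Collective/axolotl

- https://github.com/hiyouga/LLaMA-Factory

- https://github.com/modelscope/swift

- ...and many others; the ecosystem is thriving.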

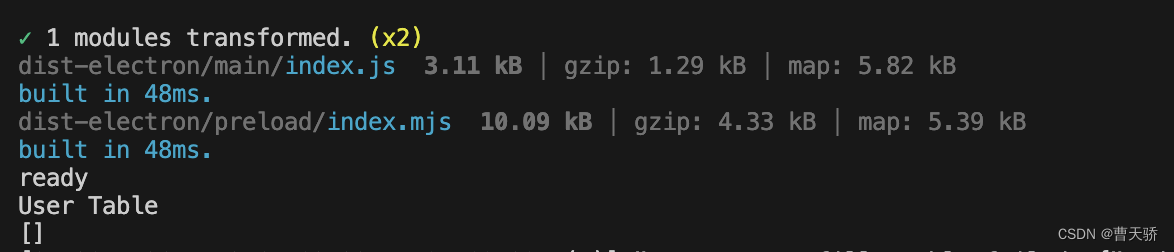

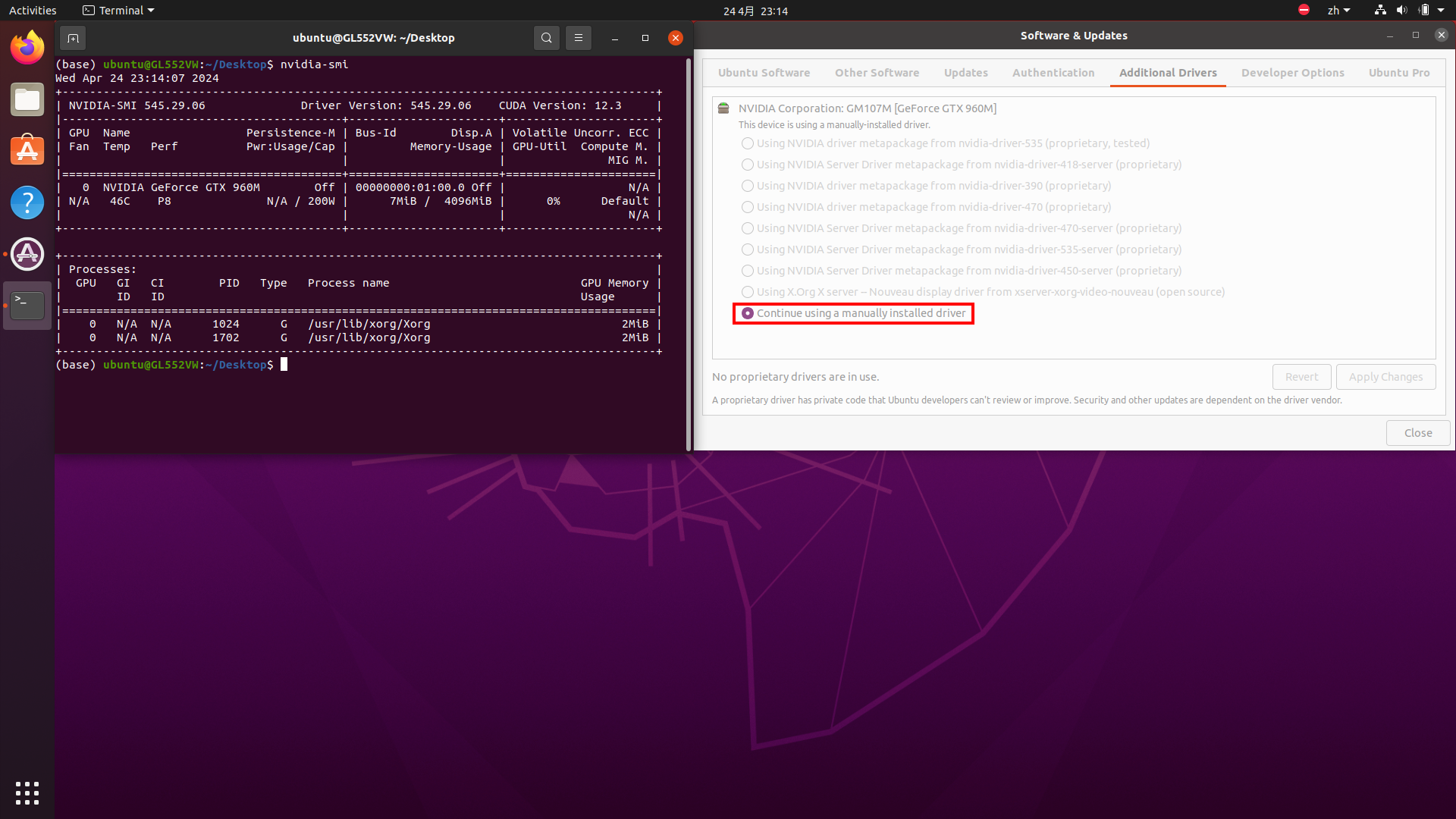

- Python environment setup:

Check the GPU's CUDA compute capability:

```python
import torch

# Returns (major, minor), e.g. (8, 0) for an A100
major_version, minor_version = torch.cuda.get_device_capability()
print(major_version)
```

Install the packages that match your GPU:

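```bash
# unsloth itself, which the training script imports
pip3 install unsloth

# major_version >= 8: Ampere, Ada Lovelace or Hopper GPUs (RTX 30xx, RTX 40xx, A100, H100, L40, ...)
pip3 install --no-deps packaging ninja einops flash-attn xformers trl peft accelerate bitsandbytes

# major_version < 8: older GPUs such as V100, Tesla T4, RTX 20xx (flash-attn is left out)
pip3 install --no-deps xformers trl peft accelerate bitsandbytes

# Extra packages used by the evaluation scripts
pip3 install pandas jieba rouge_chinese nltk
```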
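The choice between the two install commands depends only on the major compute capability: the first one adds flash-attn, whose FlashAttention-2 kernels target compute capability 8.x and newer. A minimal sketch of that decision, where `install_command` is a hypothetical helper and the major version is the value printed by the `torch.cuda.get_device_capability()` snippet:

```python
# The two commands from the installation step
AMPERE_OR_NEWER = ("pip3 install --no-deps packaging ninja einops flash-attn "
                   "xformers trl peft accelerate bitsandbytes")
OLDER_GPU = "pip3 install --no-deps xformers trl peft accelerate bitsandbytes"


def install_command(major_version: int) -> str:
    """Pick the pip command from the GPU's major compute capability.

    flash-attn (FlashAttention-2) targets compute capability 8.x and newer,
    so it is only part of the command when major_version >= 8.
    """
    return AMPERE_OR_NEWER if major_version >= 8 else OLDER_GPU


if __name__ == "__main__":
    # e.g. 7 = V100 / T4 / RTX 20xx, 8 = A100 / RTX 30xx / RTX 40xx, 9 = H100
    for major in (7, 8, 9):
        print(major, install_command(major))
```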

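If imports fail, the cause is most likely a package version conflict. If reinstalling the affected packages does not resolve it, create a fresh conda environment and install the packages one by one, following the error messages.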

LoRA fine-tuning

- Step 1: convert the samples from JSON to JSONL with the script cover_alpaca2jsonl.py:

```python
import argparse
import json

from tqdm import tqdm


def format_example(example: dict) -> dict:
    """Turn one Alpaca-style sample into a prompt ("context") and an answer ("target")."""
    context = f"Instruction: {example['instruction']}\n"
    if example.get("input"):
        context += f"Input: {example['input']}\n"
    context += "Answer: "
    target = example["output"]
    return {"context": context, "target": target}


def main():
    parser = argparse.ArgumentParser()
    parser.add_argument("--data_path", type=str, default="data/train_data/samples_train_llama.json")
    parser.add_argument("--save_path", type=str, default="data/train_data/samples_train_llama.jsonl")
    args = parser.parse_args()

    with open(args.data_path, encoding="utf-8") as f:
        examples = json.load(f)

    # Write one JSON object per line (JSONL)
    with open(args.save_path, "w", encoding="utf-8") as f:
        for example in tqdm(examples, desc="formatting.."):
            f.write(json.dumps(format_example(example), ensure_ascii=False) + "\n")


if __name__ == "__main__":
    main()
```

- Step 2: tokenize the JSONL file into a dataset folder, which prepares the data for the custom loss function used later, with the script tokenize_dataset_rows.py:

```python
import argparse
import json

import datasets
import transformers
from tqdm import tqdm


def preprocess(tokenizer, config, example, max_seq_length, version):
    """Tokenize one JSONL record; seq_len marks where the prompt ends and the answer begins."""
    if version == "v1":
        prompt = example["context"]
        target = example["target"]
        prompt_ids = tokenizer.encode(prompt, max_length=max_seq_length, truncation=True)
        target_ids = tokenizer.encode(target, max_length=max_seq_length, truncation=True,
                                      add_special_tokens=False)
        input_ids = prompt_ids + target_ids + [config.eos_token_id]
        return {"input_ids": input_ids, "seq_len": len(prompt_ids)}
    if version == "v2":
        # Only for tokenizers that implement build_prompt (e.g. ChatGLM2)
        query = example["context"]
        target = example["target"]
        prompt = tokenizer.build_prompt(query, None)
        a_ids = tokenizer.encode(text=prompt, add_special_tokens=True, truncation=True,
                                 max_length=max_seq_length)
        b_ids = tokenizer.encode(text=target, add_special_tokens=False, truncation=True,
                                 max_length=max_seq_length)
        input_ids = a_ids + b_ids + [tokenizer.eos_token_id]
        return {"input_ids": input_ids, "seq_len": len(a_ids)}
    raise ValueError(f"unknown version: {version}")


def read_jsonl(path, max_seq_length, base_model_path, version="v1", skip_overlength=False):
    tokenizer = transformers.AutoTokenizer.from_pretrained(base_model_path, trust_remote_code=True)
    config = transformers.AutoConfig.from_pretrained(base_model_path, trust_remote_code=True)
    with open(path, "r", encoding="utf-8") as f:
        for line in tqdm(f.readlines()):
            example = json.loads(line)
            feature = preprocess(tokenizer, config, example, max_seq_length, version)
            # Drop samples whose prompt + answer + EOS exceed max_seq_length
            if skip_overlength and len(feature["input_ids"]) > max_seq_length:
                continue
            yield feature


def main():
    parser = argparse.ArgumentParser()
    parser.add_argument("--jsonl_path", type=str, default="data/train_data/samples_train_llama.jsonl")
    parser.add_argument("--save_path", type=str, default="data/llama3_train_tokenize")
    parser.add_argument("--max_seq_length", type=int, default=5000)
    # type=bool would turn any non-empty string (even "False") into True;
    # BooleanOptionalAction (Python 3.9+) provides --skip_overlength / --no-skip_overlength
    parser.add_argument("--skip_overlength", action=argparse.BooleanOptionalAction, default=True)
    parser.add_argument("--base_model", type=str, default="path/to/your/downloaded/Meta-Llama-3-8B")
    parser.add_argument("--version", type=str, default="v1")
    args = parser.parse_args()

    dataset = datasets.Dataset.from_generator(
        lambda: read_jsonl(args.jsonl_path, args.max_seq_length, args.base_model,
                           args.version, args.skip_overlength)
    )
    dataset.save_to_disk(args.save_path)


if __name__ == "__main__":
    main()
```
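The `seq_len` field stored by `preprocess` is what later lets the collator exclude the prompt from the loss. The toy sketch below uses made-up token ids and hypothetical helpers `build_features` and `build_labels`. It assumes a Hugging Face causal LM that shifts labels by one position internally, so the labels can simply be the input ids with prompt and padding positions set to -100, the value PyTorch's cross-entropy ignores by default.

```python
def build_features(prompt_ids, target_ids, eos_id):
    """Concatenate prompt + target + EOS and remember where the prompt ends."""
    input_ids = prompt_ids + target_ids + [eos_id]
    return {"input_ids": input_ids, "seq_len": len(prompt_ids)}


def build_labels(feature, longest, ignore_index=-100):
    """Mask prompt positions and right padding so only answer tokens (and EOS) are scored."""
    ids, seq_len = feature["input_ids"], feature["seq_len"]
    return [ignore_index] * seq_len + ids[seq_len:] + [ignore_index] * (longest - len(ids))


if __name__ == "__main__":
    # Toy ids; a real tokenizer produces much larger ones
    feat = build_features(prompt_ids=[1, 11, 12], target_ids=[21, 22], eos_id=2)
    print(feat)                           # {'input_ids': [1, 11, 12, 21, 22, 2], 'seq_len': 3}
    print(build_labels(feat, longest=8))  # [-100, -100, -100, 21, 22, 2, -100, -100]
```

Only the answer tokens and the EOS token end up with real labels, so the model is never trained to reproduce the instruction text.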

- Step 3: fine-tune Llama 3 with LoRA and the custom loss:

```python
"""
Llama 3 fine-tuning
"""
# unsloth should be imported before transformers/trl/peft so that its patches take effect
from unsloth import FastLanguageModel

import os
from dataclasses import dataclass

import datasets
import torch
from datasets import load_dataset
from torch.utils.tensorboard import SummaryWriter
from transformers import PreTrainedTokenizerBase, Trainer, TrainingArguments
from transformers.integrations import TensorBoardCallback
from transformers.trainer import TRAINING_ARGS_NAME


@dataclass
class DataCollator:
    tokenizer: PreTrainedTokenizerBase

    def __call__(self, features: list) -> dict:
        len_ids = [len(feature["input_ids"]) for feature in features]
        longest = max(len_ids)
        # Llama 3 ships without a pad token; padded positions are masked out of the loss anyway
        pad_id = self.tokenizer.pad_token_id
        if pad_id is None:
            pad_id = self.tokenizer.eos_token_id
        input_ids, labels_list = [], []
        for ids_l, feature in sorted(zip(len_ids, features), key=lambda x: -x[0]):
            ids = feature["input_ids"]
            seq_len = feature["seq_len"]
            # Only the answer tokens contribute to the loss: prompt and padding get -100.
            # The model shifts labels by one position internally, so labels align with input_ids.
            labels = [-100] * seq_len + ids[seq_len:] + [-100] * (longest - ids_l)
            ids = ids + [pad_id] * (longest - ids_l)
            input_ids.append(torch.LongTensor(ids))
            labels_list.append(torch.LongTensor(labels))
        return {"input_ids": torch.stack(input_ids), "labels": torch.stack(labels_list)}


class ModifiedTrainer(Trainer):
    """Custom trainer."""

    def compute_loss(self, model, inputs, return_outputs=False, **kwargs):
        # The loss covers the answer labels only (see DataCollator);
        # **kwargs absorbs extra arguments such as num_items_in_batch in newer transformers
        outputs = model(input_ids=inputs["input_ids"], labels=inputs["labels"])
        return (outputs.loss, outputs) if return_outputs else outputs.loss

    def save_model(self, output_dir=None, _internal_call=False):
        output_dir = output_dir or self.args.output_dir
        os.makedirs(output_dir, exist_ok=True)
        torch.save(self.args, os.path.join(output_dir, TRAINING_ARGS_NAME))
        # For a PEFT model this writes the LoRA weights plus adapter_config.json,
        # which PeftModel.from_pretrained needs at inference time
        self.model.save_pretrained(output_dir)


class Llama3Finetune(object):
    """Llama 3 fine-tuning."""

    def train(self):
        """Training entry point."""
        writer = SummaryWriter()
        model, tokenizer = FastLanguageModel.from_pretrained(
            model_name="path/to/your/downloaded/Meta-Llama-3-8B",
            max_seq_length=5000,
            dtype=None,  # None = auto-detect (bf16 on Ampere and newer, otherwise fp16)
            load_in_4bit=True,
        )
        # Alternative that skips the tokenization step and loads the raw dataset directly:
        # dataset = self.load_dataset(tokenizer.eos_token)
        dataset = datasets.load_from_disk("data/llama3_train_tokenize")

        # LoRA configuration
        model = FastLanguageModel.get_peft_model(
            model,
            r=16,  # LoRA rank: any number > 0; suggested 8, 16, 32, 64, 128
            target_modules=["q_proj", "k_proj", "v_proj", "o_proj",
                            "gate_proj", "up_proj", "down_proj"],
            lora_alpha=16,
            lora_dropout=0,  # any value is supported, but 0 is optimized
            # "unsloth" uses about 30% less VRAM and fits 2x larger batch sizes
            use_gradient_checkpointing="unsloth",  # True or "unsloth" for very long contexts
            random_state=3407,
            use_rslora=False,  # rank-stabilized LoRA is supported
            loftq_config=None,  # and so is LoftQ
            inference_mode=False,
        )

        # Alternative: trl's SFTTrainer on the raw "text" dataset (needs `from trl import SFTTrainer`
        # and dataset = self.load_dataset(tokenizer.eos_token); the signature varies across trl versions)
        # trainer = SFTTrainer(
        #     model=model,
        #     tokenizer=tokenizer,
        #     train_dataset=dataset,
        #     dataset_text_field="text",
        #     max_seq_length=5000,
        #     dataset_num_proc=2,
        #     packing=False,  # can make training 5x faster for short sequences
        #     args=TrainingArguments(
        #         per_device_train_batch_size=2,
        #         gradient_accumulation_steps=4,
        #         warmup_steps=5,
        #         max_steps=1,
        #         learning_rate=2e-4,
        #         fp16=not torch.cuda.is_bf16_supported(),
        #         bf16=torch.cuda.is_bf16_supported(),
        #         logging_steps=1,
        #         optim="adamw_8bit",
        #         weight_decay=0.01,
        #         lr_scheduler_type="linear",
        #         seed=3407,
        #         output_dir="lora_trained_llama3",
        #         # num_train_epochs=3
        #     ),
        # )

        # Trainer with the custom loss
        train_args = TrainingArguments(
            remove_unused_columns=False,  # keep the seq_len column for DataCollator
            per_device_train_batch_size=2,
            gradient_accumulation_steps=4,
            warmup_steps=5,
            # max_steps=52000,
            learning_rate=2e-4,
            fp16=not torch.cuda.is_bf16_supported(),
            bf16=torch.cuda.is_bf16_supported(),
            logging_steps=100,
            optim="adamw_torch",
            weight_decay=0.01,
            lr_scheduler_type="linear",
            seed=3407,
            output_dir="lora_trained_llama3",
            num_train_epochs=10,
        )
        trainer = ModifiedTrainer(
            model=model,
            train_dataset=dataset,
            args=train_args,
            callbacks=[TensorBoardCallback(writer)],
            data_collator=DataCollator(tokenizer),
        )
        # Start training
        trainer.train()
        writer.close()
        # Save the fine-tuned LoRA adapter
        trainer.save_model("lora_trained_llama3")

    def load_dataset(self, eos_token):
        """Load the raw JSON dataset (inside this method, load_dataset is datasets.load_dataset)."""
        dataset = load_dataset("json", data_files="data/train_data/samples_train_llama.json", split="train")
        dataset = dataset.map(lambda x: self.formatting_data(x, eos_token), batched=True)
        return dataset

    def formatting_data(self, samples, eos_token):
        """Format samples into Alpaca-style prompts."""
        prompt_frame = """### Instruction:
{}

### Input:
{}

### Response:
{}"""
        texts = []
        for instruction, inp, output in zip(samples["instruction"], samples["input"], samples["output"]):
            texts.append(prompt_frame.format(instruction, inp, output) + eos_token)
        return {"text": texts}


if __name__ == "__main__":
    Llama3Finetune().train()
```
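With `r=16` and `lora_alpha=16` as configured in `get_peft_model`, each targeted linear layer gains two small matrices, A (r × in_features) and B (out_features × r), and PEFT scales their product by `lora_alpha / r` (or `lora_alpha / sqrt(r)` when `use_rslora=True`). The arithmetic sketch below counts the resulting trainable parameters. The Llama-3-8B dimensions it hard-codes (hidden size 4096, MLP size 14336, 32 layers, 8 key/value heads of size 128) are assumptions taken from the model's published config; adjust them for a different checkpoint.

```python
def lora_params(in_features: int, out_features: int, r: int) -> int:
    """LoRA adds A (r x in_features) and B (out_features x r) beside a frozen weight."""
    return r * (in_features + out_features)


def llama3_8b_lora_params(r: int = 16) -> int:
    # Assumed Llama-3-8B dimensions
    hidden, intermediate, layers = 4096, 14336, 32
    kv_dim = 8 * 128  # num_key_value_heads * head_dim (grouped-query attention)
    per_layer = (
        lora_params(hidden, hidden, r)          # q_proj
        + lora_params(hidden, kv_dim, r)        # k_proj
        + lora_params(hidden, kv_dim, r)        # v_proj
        + lora_params(hidden, hidden, r)        # o_proj
        + lora_params(hidden, intermediate, r)  # gate_proj
        + lora_params(hidden, intermediate, r)  # up_proj
        + lora_params(intermediate, hidden, r)  # down_proj
    )
    return per_layer * layers


def lora_scaling(lora_alpha: float, r: int, use_rslora: bool = False) -> float:
    """Factor applied to B @ A before it is added to the frozen layer's output."""
    return lora_alpha / (r ** 0.5) if use_rslora else lora_alpha / r


if __name__ == "__main__":
    print(llama3_8b_lora_params(16))  # 41943040
    print(lora_scaling(16, 16))       # 1.0
```

That is about 41.9M trainable parameters, roughly 0.5% of the ~8B base weights, which is why only a small adapter needs to be saved after training.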

Reference implementations:

- Alpaca + Llama-3 8b full example.ipynb;

- Parameter-efficient LoRA fine-tuning of ChatGLM-6B from scratch (including a custom loss function);

- The unsloth framework: https://github.com/unslothai/unsloth;

Evaluation

Evaluating the classification model

Run the model on the test set and compute the classification precision and recall with the script classifier_inference.py:

```python
import json
from collections import Counter

import pandas as pd
import torch
from peft import PeftModel
from tqdm import tqdm
from transformers import AutoModelForCausalLM, AutoTokenizer, BitsAndBytesConfig

from cover_alpaca2jsonl import format_example

device = torch.device("cuda:0") if torch.cuda.is_available() else torch.device("cpu")


class ClassifyInference(object):
    """Run the classification fine-tuned model on the test set and compute precision/recall."""

    @classmethod
    def eval_on_classify_test_set(cls, test_file_path, basic_model_path, lora_model_path):
        """Evaluate the model on the test set."""
        # AutoModelForCausalLM (not AutoModel) so the model has an LM head and can generate
        model = AutoModelForCausalLM.from_pretrained(
            basic_model_path,
            trust_remote_code=True,
            quantization_config=BitsAndBytesConfig(load_in_8bit=True),
            device_map="auto",
        )
        tokenizer = AutoTokenizer.from_pretrained(basic_model_path, trust_remote_code=True)
        model = PeftModel.from_pretrained(model, lora_model_path)
        model.eval()
        with open(test_file_path, "r", encoding="utf-8") as f:
            test_cases = json.load(f)
        model_predicts, sample_labels = [], []
        with torch.no_grad():
            for item in tqdm(test_cases, desc="Inference process: "):
                # Build the prompt exactly as during training
                input_text = format_example(item)["context"]
                input_ids = torch.LongTensor([tokenizer.encode(input_text)]).to(device)
                predict_label = cls.get_multi_predict(model, input_ids, tokenizer)
                gold = item["output"].replace("`", "").replace("json", "").replace("\n", "").strip()
                sample_label = int(json.loads(gold)["label"])
                model_predicts.append(predict_label)
                sample_labels.append(sample_label)
        res_df = pd.DataFrame({"predict": model_predicts, "label": sample_labels})
        cls.evaluate_by_df_for_classify_task(res_df, target_label=1)

    @classmethod
    def evaluate_by_df_for_classify_task(cls, res_df, target_label=1, class_id="all"):
        """Compute precision, recall and F1 for target_label from a DataFrame of predictions."""
        if res_df is None or len(res_df) <= 0:
            print("no results to evaluate for class: {}".format(class_id))
            return
        against_label = 0 if target_label == 1 else 1
        # True positives, false negatives and false positives for target_label
        tar_to_tar = len(res_df[(res_df["predict"] == target_label) & (res_df["label"] == target_label)])
        tar_to_aga = len(res_df[(res_df["predict"] == against_label) & (res_df["label"] == target_label)])
        aga_to_tar = len(res_df[(res_df["predict"] == target_label) & (res_df["label"] == against_label)])
        precision = tar_to_tar / (tar_to_tar + aga_to_tar) if tar_to_tar + aga_to_tar > 0 else 0
        recall = tar_to_tar / (tar_to_tar + tar_to_aga) if tar_to_tar + tar_to_aga > 0 else 0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall > 0 else 0
        print("class: {}, precision: {}/{}={}, recall: {}/{}={}, f1: {}".format(
            class_id, tar_to_tar, tar_to_tar + aga_to_tar, precision,
            tar_to_tar, tar_to_tar + tar_to_aga, recall, f1))

    @classmethod
    def get_multi_predict(cls, model, input_ids, tokenizer, round_count=3):
        """Predict round_count times and return the majority label.

        With greedy decoding (do_sample=False) every round gives the same output;
        the vote only matters if sampling is enabled.
        """
        predict_labels = []
        for _ in range(round_count):
            out = model.generate(input_ids=input_ids, max_new_tokens=4090, do_sample=False)
            # Decode only the newly generated tokens, without the prompt or special tokens
            predict = tokenizer.decode(out[0][input_ids.shape[1]:], skip_special_tokens=True)
            predict = predict.replace("\nEND", "").strip()
            predict_labels.append(cls.parse_predict_label(predict))
        return Counter(predict_labels).most_common(1)[0][0]

    @classmethod
    def parse_predict_label(cls, predict):
        """Parse the predicted label; output that cannot be parsed counts as 0."""
        try:
            predict = predict.replace("json", "").replace("`", "").replace("\n", "").strip()
            return int(json.loads(predict)["label"])
        except Exception:
            return 0


if __name__ == "__main__":
    ClassifyInference.eval_on_classify_test_set(
        test_file_path="data/sample_test.json",
        basic_model_path="path/to/your/local/Meta-Llama-3-8B",
        lora_model_path="lora_trained_llama3",
    )
```
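`parse_predict_label` only strips the word `json`, backticks and newlines before calling `json.loads`, so any extra text the model emits after the JSON object makes parsing fail and the sample is silently counted as label 0, which can distort precision and recall. One possible alternative, sketched below with a hypothetical `parse_label` helper, is to extract the first flat `{...}` object with a regular expression before parsing; it assumes the label object contains no nested braces.

```python
import json
import re

# First brace-delimited object without nested braces, e.g. {"label": 1}
_JSON_OBJECT = re.compile(r"\{[^{}]*\}")


def parse_label(text: str, default: int = 0) -> int:
    """Extract "label" from output such as '```json\n{"label": 1}\n```' or '{"label":1} END'."""
    match = _JSON_OBJECT.search(text)
    if match is None:
        return default
    try:
        return int(json.loads(match.group(0))["label"])
    except (ValueError, KeyError, TypeError):
        # Malformed JSON, missing "label", or a non-integer label
        return default


if __name__ == "__main__":
    print(parse_label('```json\n{"label": 1}\n```'))             # 1
    print(parse_label('{"label":1}\nThe loop never increments n'))  # 1
    print(parse_label("no json here"))                            # 0
```

Keeping `default` explicit makes it easy to count how many outputs fell back to it, which is worth reporting next to the precision and recall.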

Evaluating the generative model

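For the generative model, each generated answer is compared with the target using BLEU, an n-gram precision-based similarity score, and ROUGE, which measures n-gram overlap with an emphasis on recall (the script reports the ROUGE F1 score). Script: generator_inference.py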

```python
import json

import jieba
import numpy as np
import torch
from nltk.translate.bleu_score import SmoothingFunction, sentence_bleu
from peft import PeftModel
from rouge_chinese import Rouge
from tqdm import tqdm
from transformers import AutoModelForCausalLM, AutoTokenizer, BitsAndBytesConfig

from cover_alpaca2jsonl import format_example

device = torch.device("cuda:0") if torch.cuda.is_available() else torch.device("cpu")


class GenerateInference(object):
    """Evaluate the generation fine-tuned model on the test set."""

    @classmethod
    def eval_on_generate_test_set(cls, test_file_path, basic_model_path, lora_model_path, language="en"):
        """Evaluate the model on the test set and print the mean ROUGE/BLEU scores."""
        # AutoModelForCausalLM (not AutoModel) so the model has an LM head and can generate
        model = AutoModelForCausalLM.from_pretrained(
            basic_model_path,
            trust_remote_code=True,
            quantization_config=BitsAndBytesConfig(load_in_8bit=True),
            device_map="auto",
        )
        tokenizer = AutoTokenizer.from_pretrained(basic_model_path, trust_remote_code=True)
        model = PeftModel.from_pretrained(model, lora_model_path)
        model.eval()
        with open(test_file_path, "r", encoding="utf-8") as f:
            test_cases = json.load(f)
        bleu_4, rouge_1, rouge_2, rouge_l = [], [], [], []
        with torch.no_grad():
            for item in tqdm(test_cases, desc="Inference process: "):
                input_text = format_example(item)["context"]
                input_ids = torch.LongTensor([tokenizer.encode(input_text)]).to(device)
                predict_answers = cls.get_multi_predict_answers(model, input_ids, tokenizer)
                cur_rouge_1, cur_rouge_2, cur_rouge_l, cur_bleu_4 = cls.get_multi_eval_metrics(
                    predict_answers, item["output"], language)
                rouge_1.append(cur_rouge_1)
                rouge_2.append(cur_rouge_2)
                rouge_l.append(cur_rouge_l)
                bleu_4.append(cur_bleu_4)
        print(f"rouge-1: {np.mean(rouge_1)}")
        print(f"rouge-2: {np.mean(rouge_2)}")
        print(f"rouge-l: {np.mean(rouge_l)}")
        print(f"bleu-4: {np.mean(bleu_4)}")

    @staticmethod
    def tokenize(text, language="en"):
        """Split text into tokens: jieba for Chinese, whitespace for English."""
        # jieba.cut returns a one-shot generator, so materialize it as a list;
        # splitting English on whitespace avoids scoring individual characters
        return list(jieba.cut(text)) if language == "zh" else text.split()

    @classmethod
    def get_multi_eval_metrics(cls, predict_answers, label_answer, language="en"):
        """Compute the 4 metrics for one sample, averaged over its predictions."""
        score_dic = {"rouge-1": [], "rouge-2": [], "rouge-l": [], "bleu-4": []}
        label_tokens = cls.tokenize(label_answer, language)
        rouge = Rouge()
        for predict_answer in predict_answers:
            predict_tokens = cls.tokenize(predict_answer, language)
            if not predict_tokens or not label_tokens:
                # Rouge.get_scores may raise on empty text; score such answers as 0
                for score_values in score_dic.values():
                    score_values.append(0.0)
                continue
            rouge_res = rouge.get_scores(" ".join(predict_tokens), " ".join(label_tokens))[0]
            for rouge_name, rouge_value in rouge_res.items():
                score_dic[rouge_name].append(round(rouge_value["f"] * 100, 6))
            # sentence_bleu takes a list of reference token lists and one hypothesis token list
            bleu_4 = sentence_bleu([label_tokens], predict_tokens,
                                   smoothing_function=SmoothingFunction().method3)
            score_dic["bleu-4"].append(round(bleu_4 * 100, 6))
        return tuple(float(np.mean(score_dic[name])) for name in ("rouge-1", "rouge-2", "rouge-l", "bleu-4"))

    @classmethod
    def get_multi_predict_answers(cls, model, input_ids, tokenizer, round_count=3):
        """Generate round_count answers (identical under greedy decoding unless do_sample=True)."""
        predict_answers = []
        for _ in range(round_count):
            out = model.generate(input_ids=input_ids, max_new_tokens=4090, do_sample=False)
            # Decode only the newly generated tokens, without the prompt or special tokens
            predict_ans = tokenizer.decode(out[0][input_ids.shape[1]:], skip_special_tokens=True)
            predict_answers.append(predict_ans.replace("\nEND", "").strip())
        return predict_answers


if __name__ == "__main__":
    GenerateInference.eval_on_generate_test_set(
        test_file_path="path/to/your/test/samples_test.json",
        basic_model_path="path/to/your/downloaded/Meta-Llama-3-8B",
        lora_model_path="lora_trained_llama3",  # must match the output dir used in training
    )
```
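To make ROUGE concrete, the sketch below computes ROUGE-N precision, recall and F1 from clipped n-gram counts; the F1 value corresponds to the `"f"` field the evaluation script averages. It is a simplified illustration (no ROUGE-L, no stemming), and `rouge_chinese` may differ in tokenization details, so treat it as intuition rather than a drop-in replacement. The `ngrams` and `rouge_n` names are hypothetical.

```python
from collections import Counter


def ngrams(tokens, n):
    """Count the n-grams (as tuples) in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def rouge_n(pred_tokens, ref_tokens, n=1):
    """Return (precision, recall, f1) of n-gram overlap between prediction and reference."""
    pred, ref = ngrams(pred_tokens, n), ngrams(ref_tokens, n)
    # Counter & keeps the minimum count, so an n-gram matches at most as often as it occurs in both
    overlap = sum((pred & ref).values())
    precision = overlap / max(sum(pred.values()), 1)
    recall = overlap / max(sum(ref.values()), 1)
    f1 = 2 * precision * recall / (precision + recall) if precision + recall > 0 else 0.0
    return precision, recall, f1


if __name__ == "__main__":
    ref = "the cat sat on the mat".split()
    pred = "the cat sat on a mat".split()
    # 5 of the 6 unigrams overlap, so precision = recall = f1 = 5/6
    print(rouge_n(pred, ref, n=1))
    # 3 of the 5 bigrams overlap, so precision = recall = f1 = 0.6
    print(rouge_n(pred, ref, n=2))
```

Recall divides by the reference's n-gram count and precision by the prediction's, which is why a short answer can score high precision but low recall.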