1. Prometheus

Get the default configuration file by starting a temporary container and copying the file out of it

docker run -d -p 9090:9090 --name prometheus prom/prometheus

mkdir -p /app/prometheus

docker cp prometheus:/etc/prometheus/prometheus.yml /app/prometheus/prometheus.yml

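Stop and remove the old container (for example with docker rm -f prometheus), then start a new one with the configuration file mounted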

docker run -d --name prometheus -p 9090:9090 -v /app/prometheus/prometheus.yml:/etc/prometheus/prometheus.yml prom/prometheus

Access Prometheus

http://XX.xx.xx.xx:9090
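Besides opening the page in a browser, the server can be checked from a script. The following is a minimal sketch, assuming Prometheus's built-in `/-/healthy` endpoint is reachable from where the script runs; the helper names `health_url` and `is_healthy` are illustrative and not part of the original setup.

```python
import urllib.error
import urllib.request


def health_url(host: str, port: int = 9090) -> str:
    """Build the URL of Prometheus's built-in health endpoint."""
    return f"http://{host}:{port}/-/healthy"


def is_healthy(url: str, timeout: float = 3.0) -> bool:
    """Return True if the endpoint answers with HTTP 200, False otherwise."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status == 200
    except (urllib.error.URLError, OSError):
        # Connection refused, timeout, DNS failure, or a non-2xx response.
        return False
```

For example, `is_healthy(health_url("localhost"))` would report whether a Prometheus container published on port 9090 of the local machine is up.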

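2. Grafana

Dashboard IDs can be looked up in the Grafana dashboard catalog; search for "CN" to find Chinese-language dashboards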

https://grafana.com/grafana/dashboards/

Create the host directory that will be mounted into the Grafana container

mkdir -p /app/grafana

chmod 777 -R /app/grafana

Start Grafana

docker run -d -p 3000:3000 --name=grafana -v /app/grafana:/var/lib/grafana grafana/grafana

The default username and password are admin / admin

3. Monitoring the server with node-exporter

docker run -d --name node-exporter -p 9100:9100 -v /etc/localtime:/etc/localtime -v /etc/timezone:/etc/timezone prom/node-exporter

Add the IP and port at which node-exporter is reachable (the public IP, or an internal IP if Prometheus can reach it) to Prometheus's prometheus.yml file

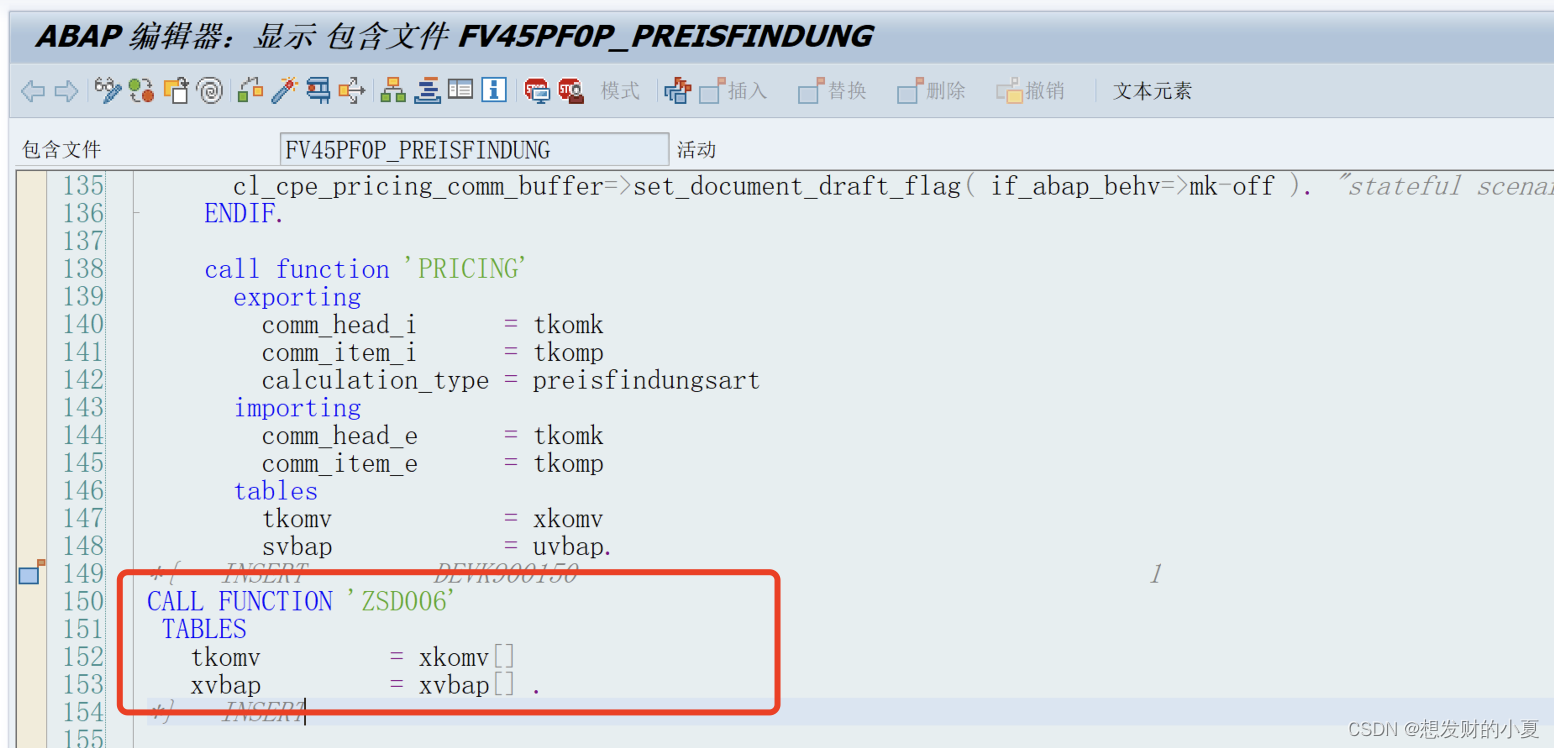

      - job_name: 'linux'
        static_configs:
          - targets: ['106.54.220.184:9100']

Restart the Prometheus container, then check the targets in Prometheus

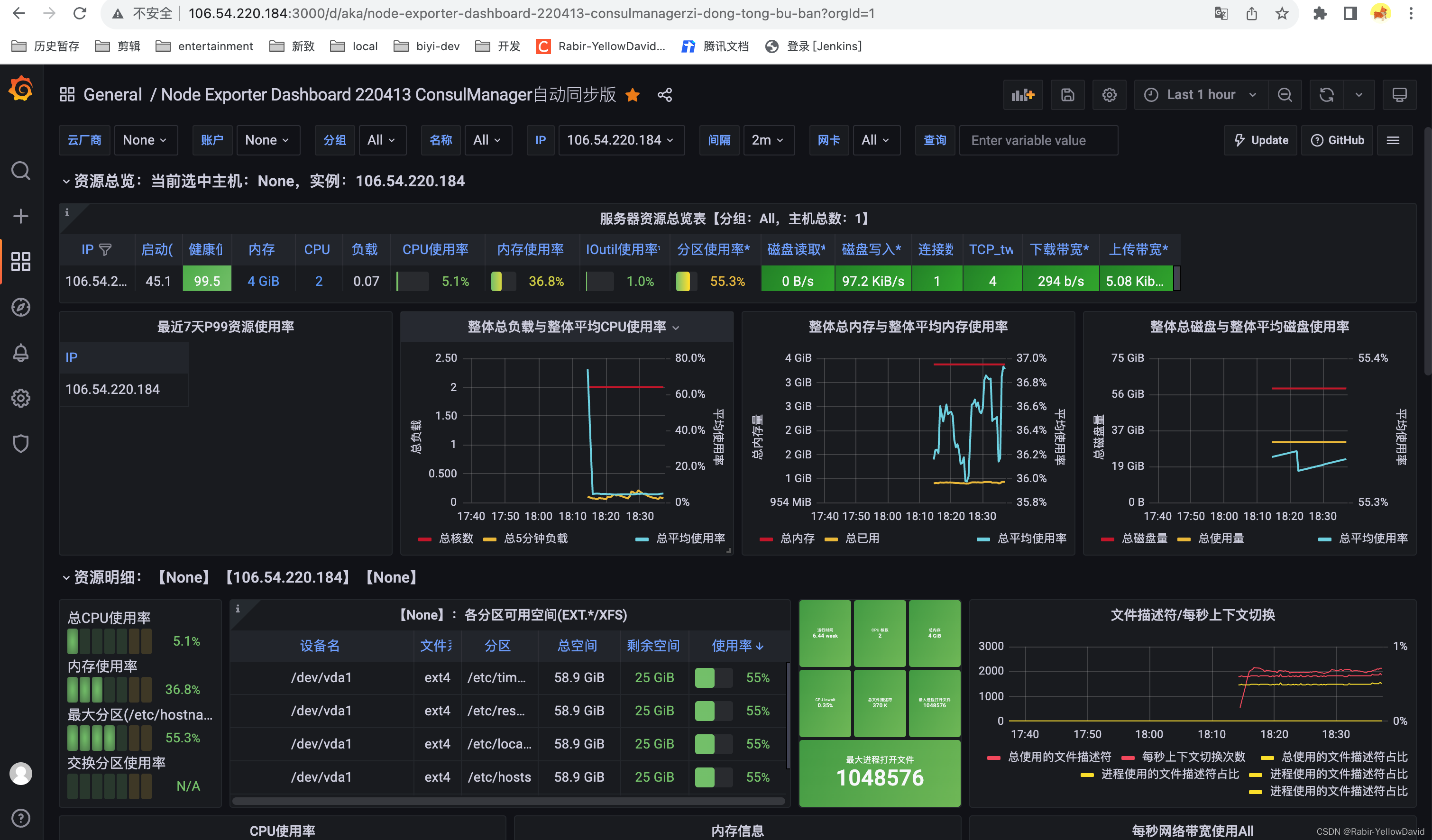

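Dashboard: Node Exporter Dashboard 220413 ConsulManager自动同步版

4. Monitoring MySQL with mysqld-exporter

On the MySQL server to be monitored, create a read-only user; the exporter logs in as this user to collect database information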

CREATE USER 'mysqlexporter'@'106.54.220.184' IDENTIFIED BY 'mysqlexporter' WITH MAX_USER_CONNECTIONS 3;

GRANT PROCESS, REPLICATION CLIENT, SELECT ON *.* TO 'mysqlexporter'@'106.54.220.184';

flush privileges;

Start the MySQL exporter. Command template:

docker run -d --name mysql-exporter -p 9104:9104 -e DATA_SOURCE_NAME="username:password@(ip:3306)/mysql" prom/mysqld-exporter

With the user created above:

docker run -d --name mysql-exporter -p 9104:9104 -e DATA_SOURCE_NAME="mysqlexporter:mysqlexporter@(ip:3306)/mysql" prom/mysqld-exporter
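The connection string follows the pattern `user:password@(host:port)/database`. As a small sketch, assuming the exporter image in use reads `DATA_SOURCE_NAME` as in the commands above, the string and the `docker run` arguments can be assembled in a script so the credentials are not typed by hand. The helper names `build_dsn` and `docker_run_args` are hypothetical.

```python
from typing import List


def build_dsn(user: str, password: str, host: str,
              port: int = 3306, database: str = "mysql") -> str:
    """Build a DATA_SOURCE_NAME value in the user:password@(host:port)/db form."""
    return f"{user}:{password}@({host}:{port})/{database}"


def docker_run_args(dsn: str, name: str = "mysql-exporter", port: int = 9104) -> List[str]:
    """Return the docker command as an argument list (no shell quoting needed)."""
    return [
        "docker", "run", "-d",
        "--name", name,
        "-p", f"{port}:{port}",
        "-e", f"DATA_SOURCE_NAME={dsn}",
        "prom/mysqld-exporter",
    ]
```

The returned list can be handed to `subprocess.run(args, check=True)` on a host where Docker is installed.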

Add the IP and port at which mysqld-exporter is reachable (the public IP, or an internal IP if Prometheus can reach it) to Prometheus's prometheus.yml file

      - job_name: 'mysql'
        static_configs:
          - targets: ['ip:9104']

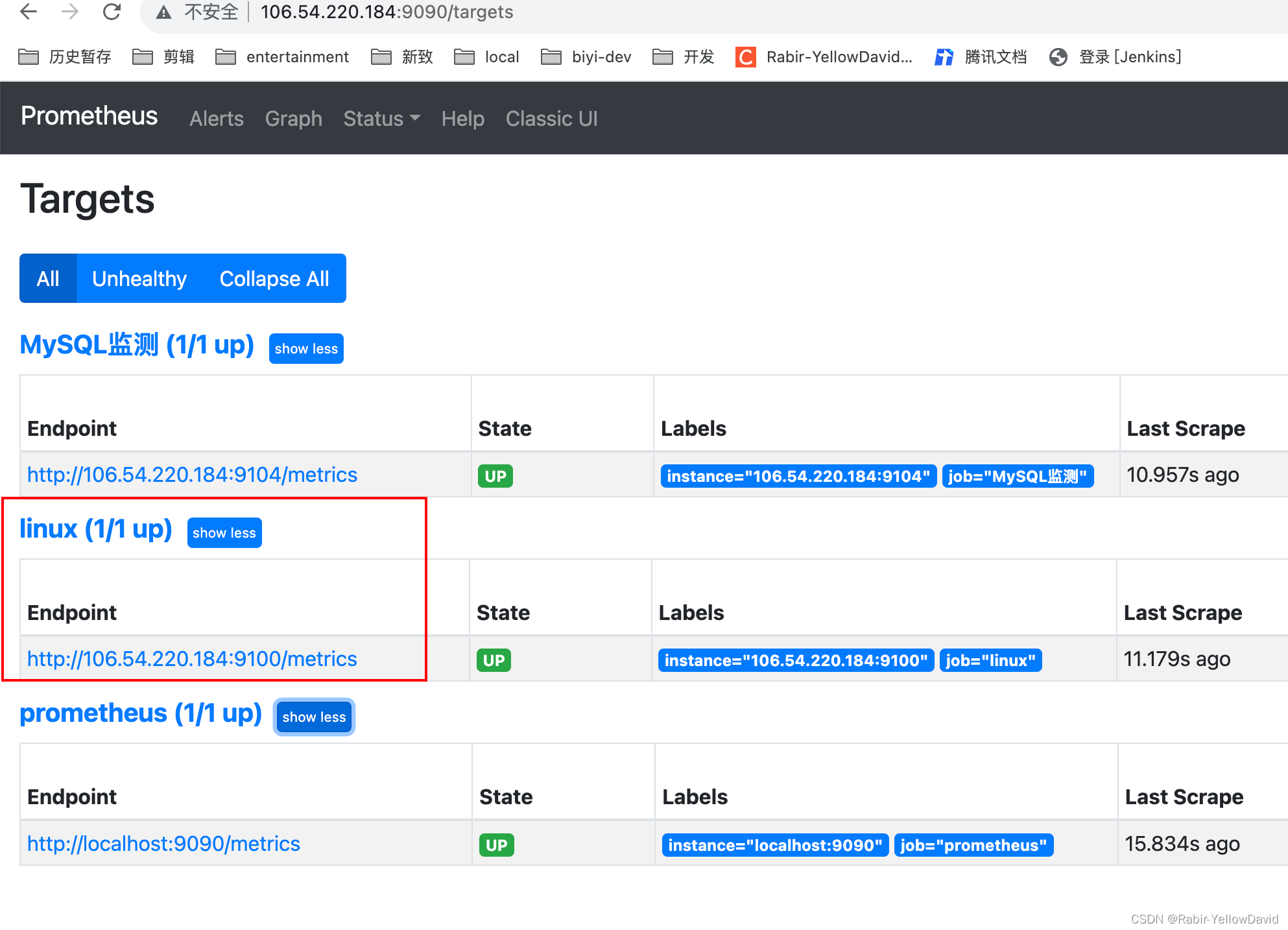

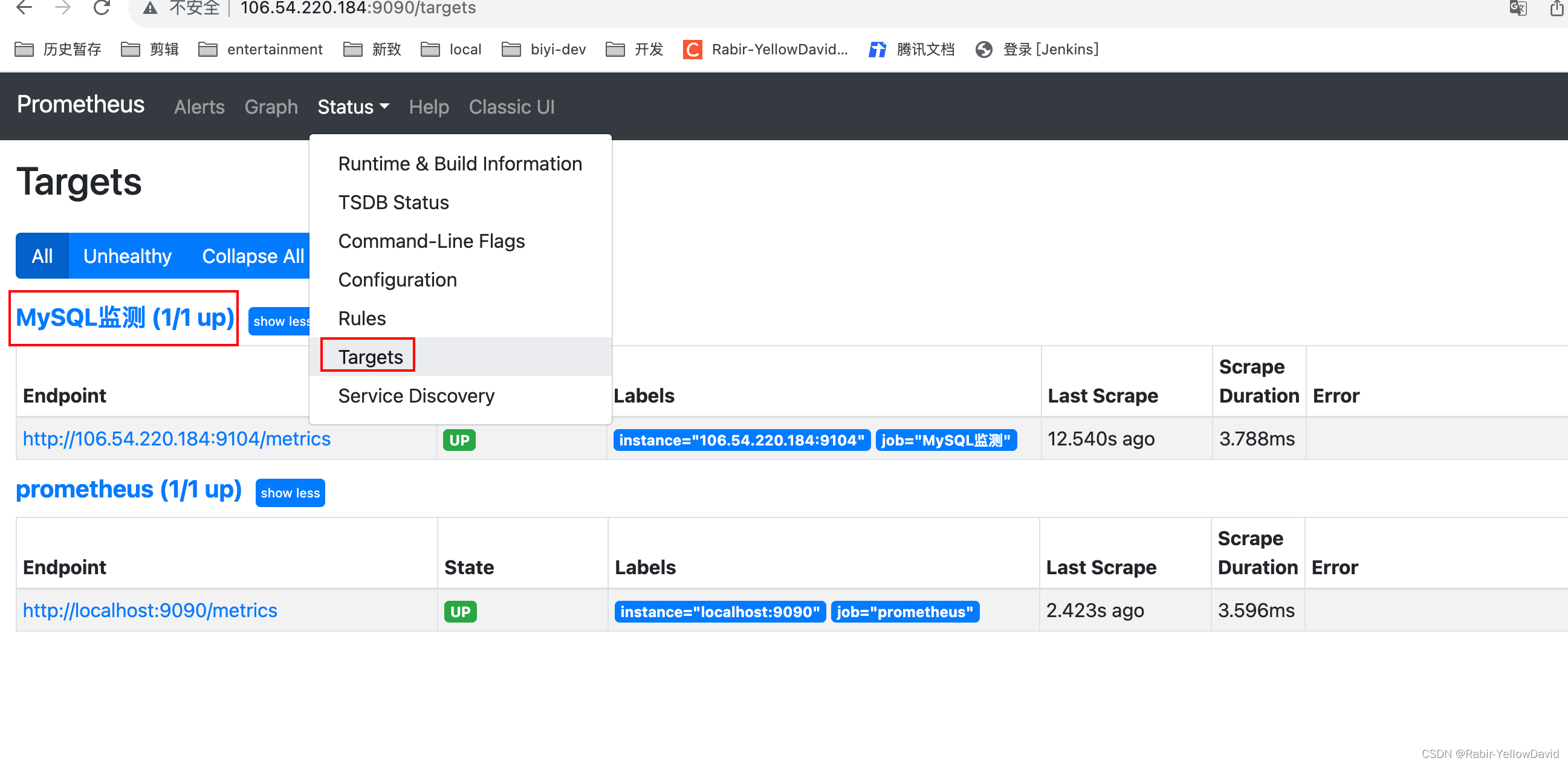

Check in Prometheus that the new target has been added

Configure the Prometheus data source in Grafana

Configuration -> Data Sources -> Add data source -> Prometheus
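The same check can be done without the web UI through Prometheus's HTTP API, which lists scrape targets at `/api/v1/targets`. The sketch below assumes that endpoint is reachable and groups the active targets by job; the function names are illustrative.

```python
import json
import urllib.request
from typing import Dict, List, Tuple


def fetch_targets(base_url: str, timeout: float = 5.0) -> dict:
    """GET /api/v1/targets from a Prometheus server and decode the JSON body."""
    with urllib.request.urlopen(f"{base_url}/api/v1/targets", timeout=timeout) as resp:
        return json.load(resp)


def summarize_targets(payload: dict) -> Dict[str, List[Tuple[str, str]]]:
    """Group active targets by job name as (instance, health) pairs."""
    summary: Dict[str, List[Tuple[str, str]]] = {}
    for target in payload.get("data", {}).get("activeTargets", []):
        labels = target.get("labels", {})
        job = labels.get("job", "unknown")
        instance = labels.get("instance", "unknown")
        health = target.get("health", "unknown")
        summary.setdefault(job, []).append((instance, health))
    return summary
```

Calling `summarize_targets(fetch_targets("http://localhost:9090"))` on the Prometheus host should list the `mysql` job once the exporter has been scraped; a health value other than `up` usually points at a wrong address or a firewall rule.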

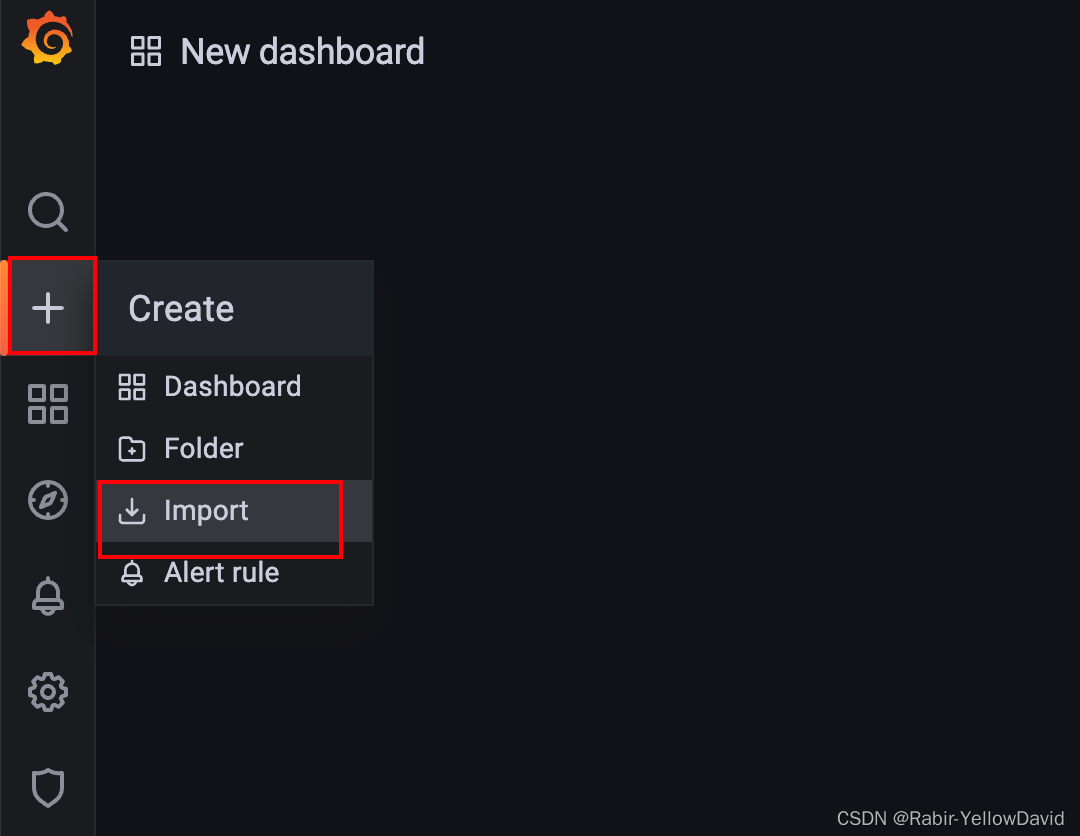

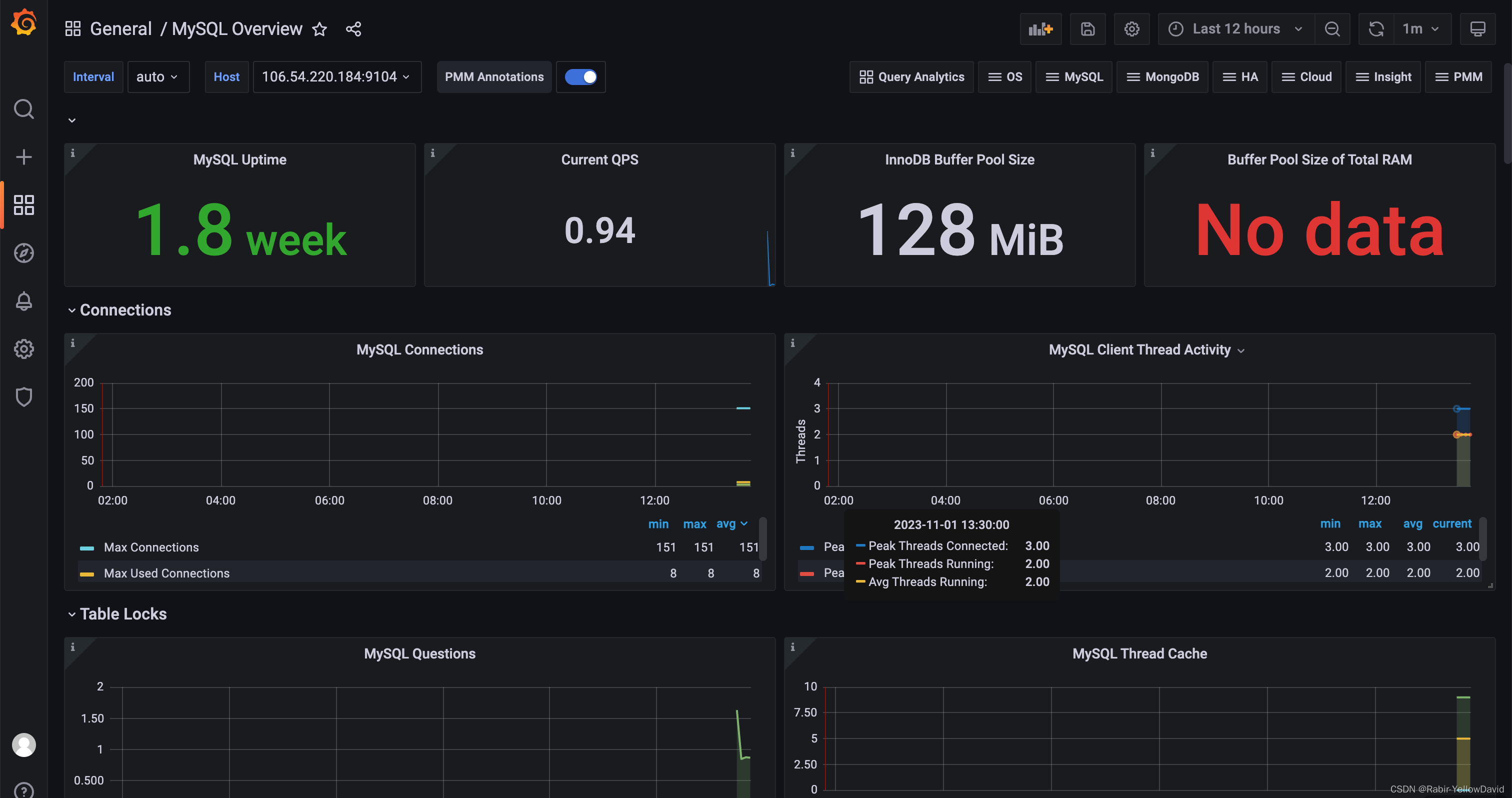
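The data source can also be created through Grafana's HTTP API instead of the UI. This is a hedged sketch, assuming the default admin / admin credentials are still in place and that basic authentication is enabled; the data source name and the URLs passed in are placeholders chosen by the caller.

```python
import base64
import json
import urllib.request


def datasource_payload(name: str, prometheus_url: str, is_default: bool = True) -> dict:
    """Build the JSON body for POST /api/datasources."""
    return {
        "name": name,
        "type": "prometheus",
        "url": prometheus_url,
        "access": "proxy",  # Grafana's backend queries Prometheus, not the browser
        "isDefault": is_default,
    }


def create_datasource(grafana_url: str, user: str, password: str,
                      payload: dict, timeout: float = 5.0) -> int:
    """Send the payload to Grafana with basic auth and return the HTTP status."""
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    request = urllib.request.Request(
        f"{grafana_url}/api/datasources",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Basic {token}",
        },
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        return resp.status
```

The Prometheus URL in the payload must be reachable from inside the Grafana container, so `localhost` only works if both services share a network namespace.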

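Create -> Import, then enter a dashboard ID. MySQL dashboard ID: 8919; Chinese-language MySQL dashboard ID: 17320

5. Monitoring the JVM of a Spring Boot application with Prometheus

Add the Prometheus dependencies to the application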

    <dependency>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-actuator</artifactId>
    </dependency>
    <dependency>
        <groupId>io.micrometer</groupId>
        <artifactId>micrometer-registry-prometheus</artifactId>
        <scope>runtime</scope>
    </dependency>

Update the application configuration file

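    # Prometheus monitoring configuration
    management:
      endpoint:
        metrics:
          enabled: true # enable the metrics endpoint
        prometheus:
          enabled: true # enable the Prometheus endpoint
      metrics:
        export:
          prometheus:
            enabled: true
        tags:
          application: ruoyi-dev # application name attached to the collected metrics
      endpoints:
        web:
          exposure:
            include: '*' # expose all endpoints

Allow the metrics endpoint through the application's access control so that Prometheus can request it without logging in

If the application has a context path, include it in the URL, for example /api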

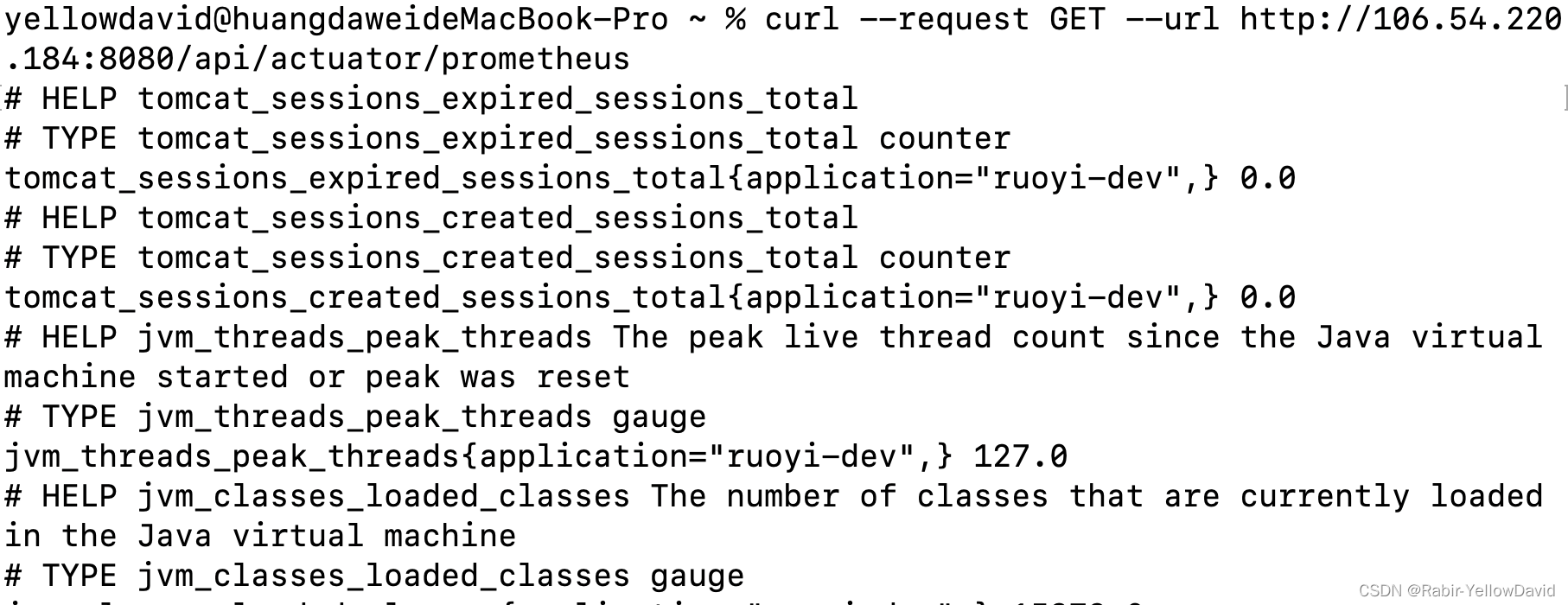

curl --request GET --url http://106.54.220.184:8080/api/actuator/prometheus

Without a context path

curl --request GET --url http://ip:port/actuator/prometheus

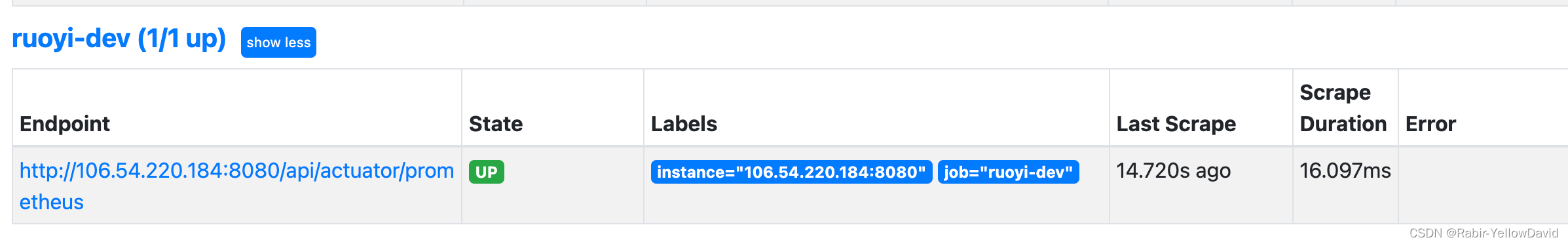
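The endpoint returns plain text in the Prometheus exposition format: comment lines starting with `#`, then one `name{labels} value` sample per line. The sketch below is a simplified parser for inspecting that output in a script; it assumes label values contain no unbalanced braces and is not a full implementation of the format. The function name `parse_metrics` is illustrative.

```python
import re
from typing import Dict, List, Tuple

# name, optional {labels}, value, optional timestamp
_SAMPLE = re.compile(
    r'^([a-zA-Z_:][a-zA-Z0-9_:]*)(?:\{(.*)\})?\s+(\S+)(?:\s+-?\d+)?$'
)
_LABEL = re.compile(r'([a-zA-Z_][a-zA-Z0-9_]*)="((?:[^"\\]|\\.)*)"')

Sample = Tuple[str, Dict[str, str], float]


def parse_metrics(text: str) -> List[Sample]:
    """Parse exposition-format text into (name, labels, value) tuples."""
    samples: List[Sample] = []
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and HELP/TYPE comments
        match = _SAMPLE.match(line)
        if match is None:
            continue  # ignore lines this simplified parser cannot read
        name, label_text, value = match.groups()
        labels = dict(_LABEL.findall(label_text or ""))
        samples.append((name, labels, float(value)))
    return samples
```

Feeding it the body returned by the curl command above and filtering on a metric name makes it easy to confirm that the `application` tag from the configuration file is present on the JVM metrics.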

Add the application as a scrape target in Prometheus

      - job_name: "ruoyi-dev"
        # metrics_path defaults to '/metrics'
        # scheme defaults to 'http'.
        metrics_path: /api/actuator/prometheus
        static_configs:
          - targets: ["106.54.220.184:8080"]
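All of the jobs in this guide share the same shape: a job name, an optional `metrics_path`, and a list of static targets. As an illustration only, a small helper (hypothetical name `render_scrape_job`) can generate such an entry with the indentation expected under `scrape_configs`, which avoids the indentation mistakes that are easy to make when editing prometheus.yml by hand.

```python
from typing import List, Optional


def render_scrape_job(job_name: str, targets: List[str],
                      metrics_path: Optional[str] = None) -> str:
    """Render one scrape_configs entry as YAML text."""
    lines = [f'  - job_name: "{job_name}"']
    if metrics_path is not None:
        # Only needed when the metrics are not served at the default /metrics.
        lines.append(f"    metrics_path: {metrics_path}")
    lines.append("    static_configs:")
    quoted = ", ".join(f'"{target}"' for target in targets)
    lines.append(f"      - targets: [{quoted}]")
    return "\n".join(lines) + "\n"
```

The returned text is meant to be appended below the `scrape_configs:` key; Prometheus still has to be restarted or reloaded before it picks up the change.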

Backup of prometheus.yml (the default configuration plus the jobs added above)

    # my global config
    global:
      scrape_interval: 15s # Set the scrape interval to every 15 seconds. Default is every 1 minute.
      evaluation_interval: 15s # Evaluate rules every 15 seconds. The default is every 1 minute.
      # scrape_timeout is set to the global default (10s).

    # Alertmanager configuration
    alerting:
      alertmanagers:
        - static_configs:
            - targets:
              # - alertmanager:9093

    # Load rules once and periodically evaluate them according to the global 'evaluation_interval'.
    rule_files:
      # - "first_rules.yml"
      # - "second_rules.yml"

    # A scrape configuration containing exactly one endpoint to scrape:
    # Here it's Prometheus itself.
    scrape_configs:
      # The job name is added as a label `job=<job_name>` to any timeseries scraped from this config.
      - job_name: "prometheus"
        # metrics_path defaults to '/metrics'
        # scheme defaults to 'http'.
        # Monitor the local Prometheus server
        static_configs:
          - targets: ["localhost:9090"]

      # Monitor Linux
      - job_name: 'Linux-184'
        static_configs:
          - targets: ['106.54.220.184:9100']

      # Monitor MySQL
      - job_name: "MySQL-184"
        static_configs:
          - targets: ["106.54.220.184:9104"]

      # Monitor the JVM
      - job_name: "ruoyi-dev"
        metrics_path: /api/actuator/prometheus
        static_configs:
          - targets: ["106.54.220.184:8080"]