Contents: Preparation | Preparation: configure yum repositories, etc. | Preparation: install Docker | Install kubeadm | References

cat >> /etc/hosts <<EOF

192.168.100.30 k8s-01

192.168.100.31 k8s-02

EOF

hostnamectl set-hostname k8s-01 # change the hostname on every machine accordingly

hostnamectl set-hostname k8s-02

bash
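If the `cat >> /etc/hosts` step at the top is run again, it appends the same lines a second time. Below is a minimal idempotent sketch, assuming a POSIX shell and awk. `add_host_entry` is a hypothetical helper name, not part of any tool.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append "IP NAME" to a hosts file only if that IP is
# not already mapped to that name, so re-running the step is harmless.
add_host_entry() {
  local file="$1" ip="$2" name="$3"
  if ! awk -v ip="$ip" -v name="$name" '
      $1 == ip { for (i = 2; i <= NF; i++) if ($i == name) found = 1 }
      END { exit !found }' "$file"; then
    printf '%s %s\n' "$ip" "$name" >> "$file"
  fi
}

# Usage (same entries as above):
# add_host_entry /etc/hosts 192.168.100.30 k8s-01
# add_host_entry /etc/hosts 192.168.100.31 k8s-02
```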

# Passwordless SSH only needs to be set up on k8s-01. The host password used here is 123456Asda; replace it with your own.

wget -O /etc/yum.repos.d/epel.repo http://mirrors.aliyun.com/repo/epel-7.repo

curl -o /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo

yum install -y expect

sed -i "s@PermitRootLogin no@PermitRootLogin yes@g" /etc/ssh/sshd_config

sed -i "s@PasswordAuthentication no@PasswordAuthentication yes@g" /etc/ssh/sshd_config

systemctl restart sshd

# Distribute the public key

ssh-keygen -t rsa -P "" -f /root/.ssh/id_rsa

for i in k8s-01 k8s-02; do
  expect -c "
  spawn ssh-copy-id -i /root/.ssh/id_rsa.pub root@$i
  expect {
    \"*yes/no*\" {send \"yes\r\"; exp_continue}
    \"*password*\" {send \"123456Asda\r\"; exp_continue}
    \"*Password*\" {send \"123456Asda\r\";}
  }"
done
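To confirm that the key distribution worked, use `ssh -o BatchMode=yes`. With that option ssh does not fall back to a password prompt, so it fails fast on any host that still asks for one. This is a sketch; `check_ssh_nopass` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: report, per host, whether root can log in without a
# password. BatchMode=yes makes ssh fail instead of prompting.
check_ssh_nopass() {
  local host rc=0
  for host in "$@"; do
    if ssh -o BatchMode=yes -o ConnectTimeout=5 "root@$host" true; then
      echo "OK $host"
    else
      echo "FAIL $host"
      rc=1
    fi
  done
  return "$rc"
}

# Usage:
# check_ssh_nopass k8s-01 k8s-02
```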

systemctl stop firewalld

systemctl disable firewalld

iptables -F && iptables -X && iptables -F -t nat && iptables -X -t nat

iptables -P FORWARD ACCEPT

swapoff -a

sed -i '/ swap / s/^\(.*\)$/#\1/g' /etc/fstab

setenforce 0

sed -i 's/^SELINUX=.*/SELINUX=disabled/' /etc/selinux/config
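`swapoff -a` only affects the running system. The `sed` on `/etc/fstab` is what makes the change survive a reboot, but running that exact `sed` twice adds a second `#` to the swap line. The sketch below has two parts:

* an idempotent variant that skips lines already commented out;
* a check against `/proc/swaps`, which always has one header line.

Both function names are hypothetical, and GNU `sed -i` is assumed.

```shell
#!/usr/bin/env bash
# Hypothetical helper: comment out fstab lines containing " swap ",
# skipping lines that already start with '#', so re-running is safe.
comment_swap_entries() {
  sed -i '/^[^#].* swap / s/^/#/' "${1:-/etc/fstab}"
}

# Hypothetical helper: succeed only if no swap area is active.
# /proc/swaps has one header line; each further line is an active swap area.
swap_is_off() {
  [ "$(wc -l < "${1:-/proc/swaps}")" -le 1 ]
}

# Usage:
# swapoff -a && comment_swap_entries /etc/fstab && swap_is_off && echo "swap is off"
```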

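# The official recommendation is a kernel newer than 3.10; this part is optional

rpm --import https://www.elrepo.org/RPM-GPG-KEY-elrepo.org

rpm -Uvh http://www.elrepo.org/elrepo-release-7.0-3.el7.elrepo.noarch.rpm

# By default this installs the latest (mainline) kernel
yum --enablerepo=elrepo-kernel install kernel-ml

# Change the kernel boot order
grub2-set-default 0 && grub2-mkconfig -o /etc/grub2.cfg

# Use the command below to confirm that the default kernel now points to the one installed above
grubby --default-kernel

# The output here should be the upgraded kernel
reboot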
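After the reboot, `uname -r` shows the kernel that is actually running. The sketch below compares it against a minimum version using GNU `sort -V`. Stock CentOS 7 ships a 3.10.0 kernel, so a threshold such as 4.0 (an arbitrary example value) tells whether the upgraded kernel is running. The function name is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if version $1 >= version $2.
# sort -V orders version strings naturally; if $2 sorts first
# (or the two are equal), then $1 is at least $2.
version_ge() {
  [ "$(printf '%s\n' "$1" "$2" | sort -V | head -n1)" = "$2" ]
}

# Usage (4.0 is only an example threshold):
# version_ge "$(uname -r)" 4.0 && echo "running upgraded kernel $(uname -r)"
```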

# (Alternatively, wait until all initialization steps are finished and reboot then.)

curl -o /etc/yum.repos.d/CentOS-Base.repo https://mirrors.aliyun.com/repo/Centos-7.repo

wget -O /etc/yum.repos.d/epel.repo http://mirrors.aliyun.com/repo/epel-7.repo

yum clean all

yum makecache

yum -y install gcc gcc-c++ make autoconf libtool-ltdl-devel gd-devel freetype-devel libxml2-devel libjpeg-devel libpng-devel openssh-clients openssl-devel curl-devel bison patch libmcrypt-devel libmhash-devel ncurses-devel binutils compat-libstdc++-33 elfutils-libelf elfutils-libelf-devel glibc glibc-common glibc-devel libgcj libtiff pam-devel libicu libicu-devel gettext-devel libaio-devel libaio libgcc libstdc++ libstdc++-devel unixODBC unixODBC-devel numactl-devel glibc-headers sudo bzip2 mlocate flex lrzsz sysstat lsof setuptool system-config-network-tui system-config-firewall-tui ntsysv ntp pv lz4 dos2unix unix2dos rsync dstat iotop innotop mytop telnet iftop expect cmake nc gnuplot screen xorg-x11-utils xorg-x11-xinit rdate bc expat-devel compat-expat1 tcpdump sysstat man nmap curl lrzsz elinks finger bind-utils traceroute mtr ntpdate zip unzip vim wget net-tools

modprobe br_netfilter

modprobe ip_conntrack

cat > kubernetes.conf <<EOF

net.bridge.bridge-nf-call-iptables=1

net.bridge.bridge-nf-call-ip6tables=1

net.ipv4.ip_forward=1

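# Avoid swap; only allow it when the system is out of memory
vm.swappiness=0

# Do not check whether enough physical memory is available
vm.overcommit_memory=1

# Do not panic on OOM; let the OOM killer handle it
vm.panic_on_oom=0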

fs.inotify.max_user_instances=8192

fs.inotify.max_user_watches=1048576

fs.file-max=52706963

fs.nr_open=52706963

net.ipv6.conf.all.disable_ipv6=1

net.netfilter.nf_conntrack_max=2310720

EOF
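Each key in this file corresponds to a file under `/proc/sys`, with dots becoming slashes (`net.ipv4.ip_forward` is `/proc/sys/net/ipv4/ip_forward`). Once the file has been applied, the live values can be compared against it. Keys under `net.bridge` only exist after `br_netfilter` is loaded. This is a sketch: `verify_sysctl` is a hypothetical helper, and its optional second argument exists only so it can be tried against a fake directory tree.

```shell
#!/usr/bin/env bash
# Hypothetical helper: compare key=value lines of a sysctl config file with
# the live values under /proc/sys (or under $2, e.g. for testing).
# Prints OK / MISMATCH / MISSING per key; returns non-zero on any problem.
verify_sysctl() {
  local conf="$1" root="${2:-/proc/sys}" rc=0 line key want have path
  while IFS= read -r line || [ -n "$line" ]; do
    line="${line%%#*}"                                    # strip comments
    case "$line" in *=*) ;; *) continue ;; esac           # only key=value lines
    key="$(printf '%s' "${line%%=*}" | tr -d ' \t')"      # keys contain no spaces
    want="$(printf '%s\n' "${line#*=}" | awk '{ $1 = $1; print }')"  # trim, squeeze
    path="$root/$(printf '%s' "$key" | tr . /)"
    if [ ! -f "$path" ]; then
      echo "MISSING $key"; rc=1; continue
    fi
    have="$(awk '{ $1 = $1; print; exit }' "$path")"      # tabs -> single spaces
    if [ "$have" = "$want" ]; then
      echo "OK $key=$have"
    else
      echo "MISMATCH $key want=$want have=$have"; rc=1
    fi
  done < "$conf"
  return "$rc"
}

# Usage, after sysctl -p:
# verify_sysctl /etc/sysctl.d/kubernetes.conf
```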

cp kubernetes.conf /etc/sysctl.d/kubernetes.conf

sysctl -p /etc/sysctl.d/kubernetes.conf

# Distribute to all nodes

for i in k8s-02; do
  scp kubernetes.conf root@$i:/etc/sysctl.d/
  ssh root@$i sysctl -p /etc/sysctl.d/kubernetes.conf
done

cat > /etc/sysconfig/modules/ipvs.modules <<EOF

#!/bin/bash

modprobe -- ip_vs

modprobe -- ip_vs_rr

modprobe -- ip_vs_wrr

modprobe -- ip_vs_sh

modprobe -- nf_conntrack

EOF

chmod 755 /etc/sysconfig/modules/ipvs.modules && bash /etc/sysconfig/modules/ipvs.modules && lsmod | grep -e ip_vs -e nf_conntrack # check that the required kernel modules are loaded

yum install ipset -y

yum install ipvsadm -y
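`lsmod | grep` only prints the modules it finds, so a missing module is easy to overlook. The sketch below names the missing ones instead. `missing_modules` is a hypothetical helper that reads `lsmod` output on stdin.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `lsmod` output on stdin and print every module
# name given as an argument that is not listed. Returns 1 if any are missing.
missing_modules() {
  local loaded m rc=0
  loaded="$(awk 'NR > 1 { print $1 }')"   # first column, header line skipped
  for m in "$@"; do
    if ! printf '%s\n' "$loaded" | grep -qx -- "$m"; then
      echo "$m"
      rc=1
    fi
  done
  return "$rc"
}

# Usage:
# lsmod | missing_modules br_netfilter ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack
```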

timedatectl set-timezone Asia/Shanghai

timedatectl set-local-rtc 0

systemctl restart rsyslog

systemctl restart crond

export VERSION=19.03

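curl -fsSL "https://get.docker.com/" | bash -s -- --mirror Aliyun

On all machines, configure registry mirrors and set Docker's startup options to use the systemd cgroup driver. Using systemd is the official recommendation; see https://kubernetes.io/docs/setup/cri/

mkdir -p /etc/docker/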

cat > /etc/docker/daemon.json <<EOF
{
  "exec-opts": ["native.cgroupdriver=systemd"],
  "registry-mirrors": [
    "https://fz5yth0r.mirror.aliyuncs.com",
    "https://dockerhub.mirrors.nwafu.edu.cn/",
    "https://mirror.ccs.tencentyun.com",
    "https://docker.mirrors.ustc.edu.cn/",
    "https://reg-mirror.qiniu.com",
    "http://hub-mirror.c.163.com/",
    "https://registry.docker-cn.com"
  ],
  "storage-driver": "overlay2",
  "storage-opts": ["overlay2.override_kernel_check=true"],
  "log-driver": "json-file",
  "log-opts": {"max-size": "100m", "max-file": "3"}
}
EOF
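Docker will not start if `daemon.json` is not valid JSON, so it can be worth checking the file before `systemctl enable --now docker`. This sketch assumes a `python3` or `python` interpreter is available for `-m json.tool`. `validate_daemon_json` is a hypothetical name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: check that daemon.json parses as JSON and that it
# sets the systemd cgroup driver.
validate_daemon_json() {
  local f="${1:-/etc/docker/daemon.json}" py
  py="$(command -v python3 || command -v python)" || { echo "no python found" >&2; return 2; }
  if ! "$py" -m json.tool "$f" > /dev/null; then
    echo "invalid JSON: $f" >&2
    return 1
  fi
  if ! grep -q 'native.cgroupdriver=systemd' "$f"; then
    echo "cgroup driver is not set to systemd in $f" >&2
    return 1
  fi
}

# Usage:
# validate_daemon_json /etc/docker/daemon.json && systemctl enable --now docker
# docker info --format '{{.CgroupDriver}}'   # should print: systemd
```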

systemctl enable --now docker

cat <<EOF >/etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=0

EOF

# Install on the master node

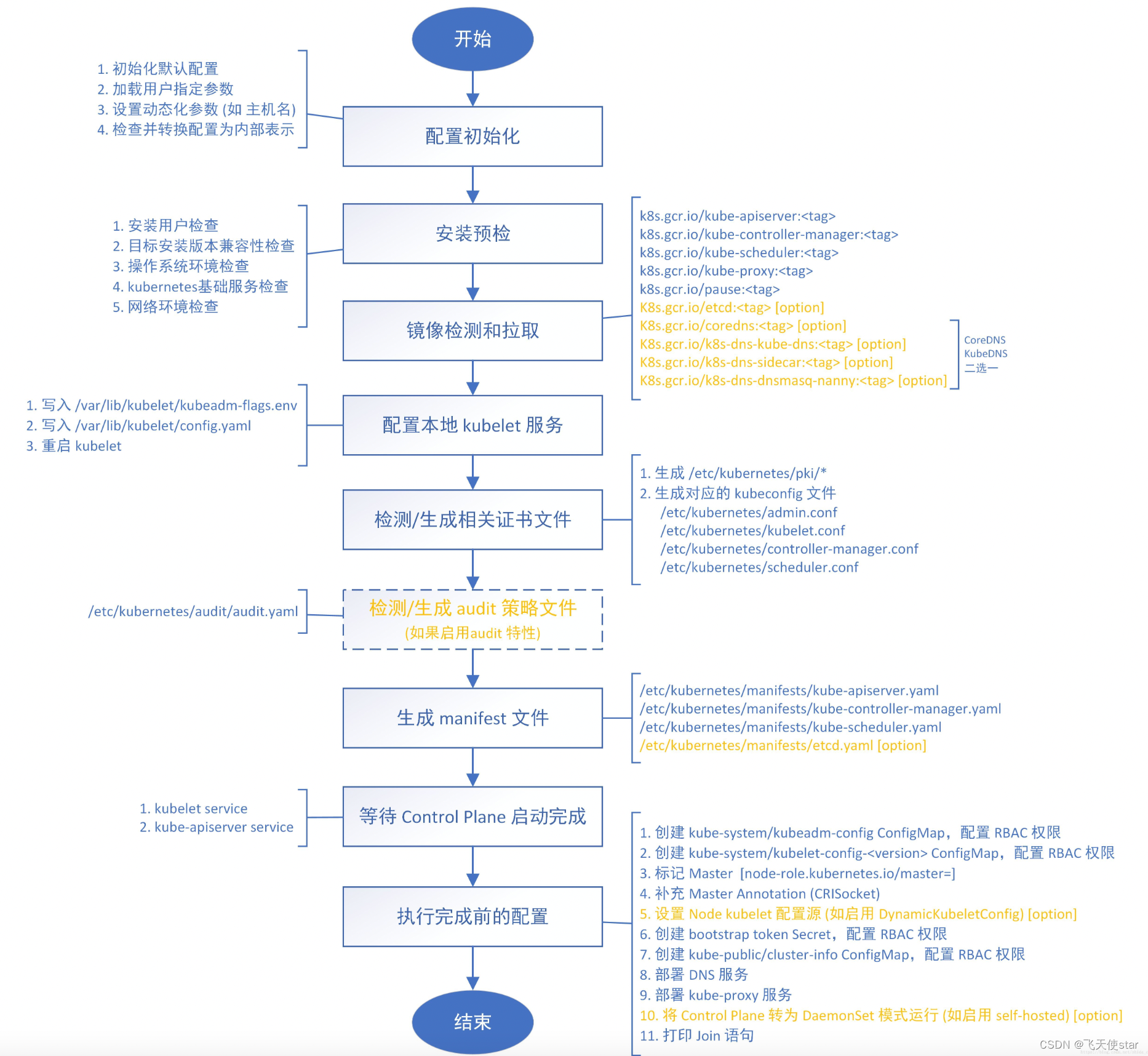

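yum install -y \
  kubeadm-1.18.3 \
  kubectl-1.18.3 \
  kubelet-1.18.3 \
  --disableexcludes=kubernetes && \
  systemctl enable kubelet

kubeadm: installs (bootstraps) the cluster

kubectl: accesses the apiserver from the command line

kubelet: creates, stops, and otherwise manages the containers belonging to each Pod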
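All three packages are pinned to 1.18.3 here, so a quick check that each node really got that version can save debugging later. This sketch assumes the output format of `rpm -q --qf '%{NAME} %{VERSION}\n'`. `check_k8s_pkg_versions` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read "NAME VERSION" lines on stdin and report every
# package whose version differs from the expected one given as $1.
check_k8s_pkg_versions() {
  local want="$1" name ver rc=0
  while read -r name ver; do
    if [ "$ver" != "$want" ]; then
      echo "$name: have $ver, want $want"
      rc=1
    fi
  done
  return "$rc"
}

# Usage on the master (drop kubectl on worker nodes):
# rpm -q --qf '%{NAME} %{VERSION}\n' kubeadm kubelet kubectl | check_k8s_pkg_versions 1.18.3
```

A package that is not installed also shows up as a mismatch, because rpm prints a `package ... is not installed` line instead of a version.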

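Worker nodes do not need kubectl. kubectl is a client that reads a kubeconfig and talks to the api-server to operate the cluster, which is generally not done from worker nodes.

# Install on worker nodes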

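yum install -y \
  kubeadm-1.18.3 \
  kubelet-1.18.3 \
  --disableexcludes=kubernetes && \
  systemctl enable kubelet

# The worker-node install would normally run on every node, but the master already has these packages, so here it is only run on k8s-02.

With multiple masters, you can use keepalived to make the api-server highly available; cloud environments usually use a cloud load balancer for this instead. With a single master, skip this, since it is a single point anyway.

kubeadm config print init-defaults > kubeadm-init.yaml

Edit kubeadm-init.yaml: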

Configure it with your own IPs, mainly the master's IP. You can copy mine, but then your hostnames etc. must be the same as mine.

apiVersion: kubeadm.k8s.io/v1beta2
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: abcdef.0123456789abcdef
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 192.168.100.30
  bindPort: 6443
nodeRegistration:
  criSocket: /var/run/dockershim.sock
  name: k8s-01
  taints:
  - effect: NoSchedule
    key: node-role.kubernetes.io/master
---
apiServer:
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta2
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controllerManager: {}
dns:
  type: CoreDNS
etcd:
  local:
    dataDir: /var/lib/etcd
imageRepository: registry.aliyuncs.com/google_containers
kind: ClusterConfiguration
kubernetesVersion: v1.18.0
networking:
  dnsDomain: cluster.local
  serviceSubnet: 10.96.0.0/12
  podSubnet: "10.244.0.0/16"
scheduler: {}
---
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
featureGates:
  SupportIPVSProxyMode: true

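mode: ipvs

# Alternatively, generate the default init configuration file
kubeadm config print init-defaults > kubeadm.yaml

# Then edit the generated configuration file:
advertiseAddress: 192.168.100.30   # master node IP address
kubernetesVersion: v1.18.2   # version to install
imageRepository: registry.aliyuncs.com/google_containers   # use the Aliyun image mirror
networking:
  podSubnet: "10.244.0.0/16"   # add this line to set the Pod network CIDR

# Append the following at the end to switch kube-proxy's default mode to IPVS:
---
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
featureGates:
  SupportIPVSProxyMode: true
mode: ipvs

That is the configuration for a single-master installation.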

kubeadm init --config kubeadm-init.yaml --dry-run

kubeadm config images list --config kubeadm-init.yaml

kubeadm config images pull --config kubeadm-init.yaml
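A typo in `advertiseAddress`, such as an address with an extra octet, only surfaces once `kubeadm init` runs. The sketch below is a pre-check. It pulls the first `advertiseAddress:` value out of the config with awk and validates it as a dotted-quad IPv4 address. Both function names are hypothetical, and the awk match assumes the `key: value` layout shown above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if $1 is a dotted-quad IPv4 address
# (exactly four numeric fields of at most 3 digits, each <= 255).
is_ipv4() {
  printf '%s\n' "$1" | awk -F. '
    NF == 4 {
      for (i = 1; i <= 4; i++)
        if ($i !~ /^[0-9]+$/ || length($i) > 3 || $i + 0 > 255) exit 1
      ok = 1
    }
    END { exit !ok }'
}

# Hypothetical helper: print advertiseAddress from a kubeadm config file,
# failing if it is absent or not a valid IPv4 address.
check_advertise_address() {
  local addr
  addr="$(awk '/advertiseAddress:/ { print $2; exit }' "$1")"
  if [ -z "$addr" ]; then
    echo "no advertiseAddress in $1" >&2
    return 1
  fi
  if ! is_ipv4 "$addr"; then
    echo "invalid advertiseAddress: $addr" >&2
    return 1
  fi
  echo "$addr"
}

# Usage:
# check_advertise_address kubeadm-init.yaml && kubeadm init --config kubeadm-init.yaml --upload-certs
```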

kubeadm init --config kubeadm-init.yaml --upload-certs

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

  mkdir -p $HOME/.kube
  sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
  sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.
Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
  https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.100.30:6443 --token abcdef.0123456789abcdef \
    --discovery-token-ca-cert-hash sha256:7af6d307bf991b433a85d9cf188ac652c6233fb7348409a808a6fe1c1bcbfd01

On the master node, run:

mkdir -p $HOME/.kube

cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

chown $(id -u):$(id -g) $HOME/.kube/config

To add a worker node, run on it:

kubeadm join 192.168.100.30:6443 --token abcdef.0123456789abcdef \
    --discovery-token-ca-cert-hash sha256:7af6d307bf991b433a85d9cf188ac652c6233fb7348409a808a6fe1c1bcbfd01
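The bootstrap token in kubeadm-init.yaml has `ttl: 24h0m0s`, so the join command printed above stops working after a day. On the master, `kubeadm token create --print-join-command` prints a fresh one. The `--discovery-token-ca-cert-hash` value can also be recomputed from the cluster CA certificate with the openssl pipeline given in the kubeadm documentation. The sketch below wraps that pipeline in a hypothetical function. It assumes an RSA CA key, which is what kubeadm generates by default.

```shell
#!/usr/bin/env bash
# Hypothetical helper: compute the value for --discovery-token-ca-cert-hash,
# i.e. the SHA-256 of the CA certificate's DER-encoded public key.
# Assumes an RSA CA key (the `openssl rsa` step only handles RSA).
ca_cert_hash() {
  local cert="${1:-/etc/kubernetes/pki/ca.crt}" hex
  hex="$(openssl x509 -pubkey -in "$cert" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //')"
  echo "sha256:$hex"
}

# Usage on the master:
# ca_cert_hash /etc/kubernetes/pki/ca.crt
# kubeadm token create --print-join-command   # prints a complete, fresh join command
```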

wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

# The Pod CIDR in kube-flannel must match the Pod CIDR configured in Kubernetes

# kube-flannel's default Pod CIDR is 10.244.0.0/16
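Because the two CIDRs must match, it helps to compare them before applying the manifest. The sketch below checks the `podSubnet` in the kubeadm config against the `"Network"` value in flannel's `net-conf.json`. The grep/awk patterns assume the layouts used in this article: a `podSubnet:` line in the YAML and `"Network": "..."` in the flannel manifest. `check_pod_cidr` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: compare podSubnet in a kubeadm config with the
# "Network" field of flannel's net-conf.json inside kube-flannel.yml.
check_pod_cidr() {
  local kubeadm_cfg="$1" flannel_yml="$2" pod_cidr flannel_cidr
  # e.g.  podSubnet: "10.244.0.0/16"  ->  10.244.0.0/16
  pod_cidr="$(awk '/podSubnet:/ { gsub(/"/, "", $2); print $2; exit }' "$kubeadm_cfg")"
  # e.g.  "Network": "10.244.0.0/16",  ->  10.244.0.0/16
  flannel_cidr="$(grep -o '"Network": *"[^"]*"' "$flannel_yml" | head -n1 | sed 's/.*"\([^"]*\)"$/\1/')"
  if [ -n "$pod_cidr" ] && [ "$pod_cidr" = "$flannel_cidr" ]; then
    echo "OK $pod_cidr"
  else
    echo "MISMATCH kubeadm=$pod_cidr flannel=$flannel_cidr" >&2
    return 1
  fi
}

# Usage:
# check_pod_cidr kubeadm-init.yaml kube-flannel.yml && kubectl create -f kube-flannel.yml
```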

kubectl create -f kube-flannel.yml

kubectl -n kube-system get pod -o wide

cat <<EOF | kubectl apply -f -
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx
spec:
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - image: nginx:alpine
        name: nginx
        ports:
        - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: nginx
spec:
  selector:
    app: nginx
  type: NodePort
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80
    nodePort: 30001
---
apiVersion: v1
kind: Pod
metadata:
  name: busybox
  namespace: default
spec:
  containers:
  - name: busybox
    image: abcdocker9/centos:v1
    command:
    - sleep
    - "3600"
    imagePullPolicy: IfNotPresent
  restartPolicy: Always
EOF

[root@k8s-01 ~]# kubectl get pod,svc

NAME                        READY   STATUS    RESTARTS   AGE
pod/busybox                 1/1     Running   0          40s
pod/nginx-97499b967-jzxwg   1/1     Running   0          40s

NAME                 TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)        AGE
service/kubernetes   ClusterIP   10.96.0.1        <none>        443/TCP        16m
service/nginx        NodePort    10.104.210.165   <none>        80:30001/TCP   40s

Use nslookup to check whether the name resolves to an address:

[root@k8s-01 ~]# kubectl exec -ti busybox -- nslookup kubernetes

Server: 10.96.0.10
Address: 10.96.0.10#53

Name: kubernetes.default.svc.cluster.local
Address: 10.96.0.1

Test whether the nginx Service and Pod networking inside the cluster work:
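The Service ClusterIP (10.104.210.165) and Pod IP (10.244.1.2) used in the check that follows are specific to this cluster. The sketch below looks them up with `kubectl ... -o jsonpath` instead. It assumes the `nginx` Service and the `app=nginx` label from the manifest above. `nginx_targets` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the nginx Service ClusterIP and the IP of the
# first Pod labelled app=nginx, one per line.
nginx_targets() {
  local svc_ip pod_ip
  svc_ip="$(kubectl get svc nginx -o jsonpath='{.spec.clusterIP}')" || return 1
  pod_ip="$(kubectl get pod -l app=nginx -o jsonpath='{.items[0].status.podIP}')" || return 1
  printf '%s\n%s\n' "$svc_ip" "$pod_ip"
}

# Usage: curl each target from every node and print the HTTP status code.
# for ip in $(nginx_targets); do
#   for node in k8s-01 k8s-02; do
#     ssh "root@$node" "curl -s -o /dev/null -w '%{http_code}\n' $ip"
#   done
# done
```

The remote command is passed to ssh as one string so that the quotes around the `-w` format survive on the remote shell.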

for i in k8s-01 k8s-02; do
  ssh root@$i curl -s 10.104.210.165 # nginx svc ip
  ssh root@$i curl -s 10.244.1.2     # pod ip
done

Or try opening it from the external network:

[root@k8s-01 ~]# curl 192.168.100.31:30001

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

html { color-scheme: light dark; }

body { width: 35em; margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif; }

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p><p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p><p><em>Thank you for using nginx.</em></p>

</body>

</html>

Binary installation: https://blog.csdn.net/startfefesfe/article/details/132408330?spm=1001.2014.3001.5501

Other installation methods: https://blog.csdn.net/startfefesfe/article/details/132339032?spm=1001.2014.3001.5501

https://i4t.com/4732.html

https://www.cnblogs.com/xhyan/p/13591309.html