1. Start the streaming server first: ./mediamtx

2. Start pushing the stream: ffmpeg -re -stream_loop -1 -i /Users/hackerx/Desktop/test.mp4 -c copy -rtsp_transport tcp -f rtsp rtsp://127.0.0.1:8554/stream

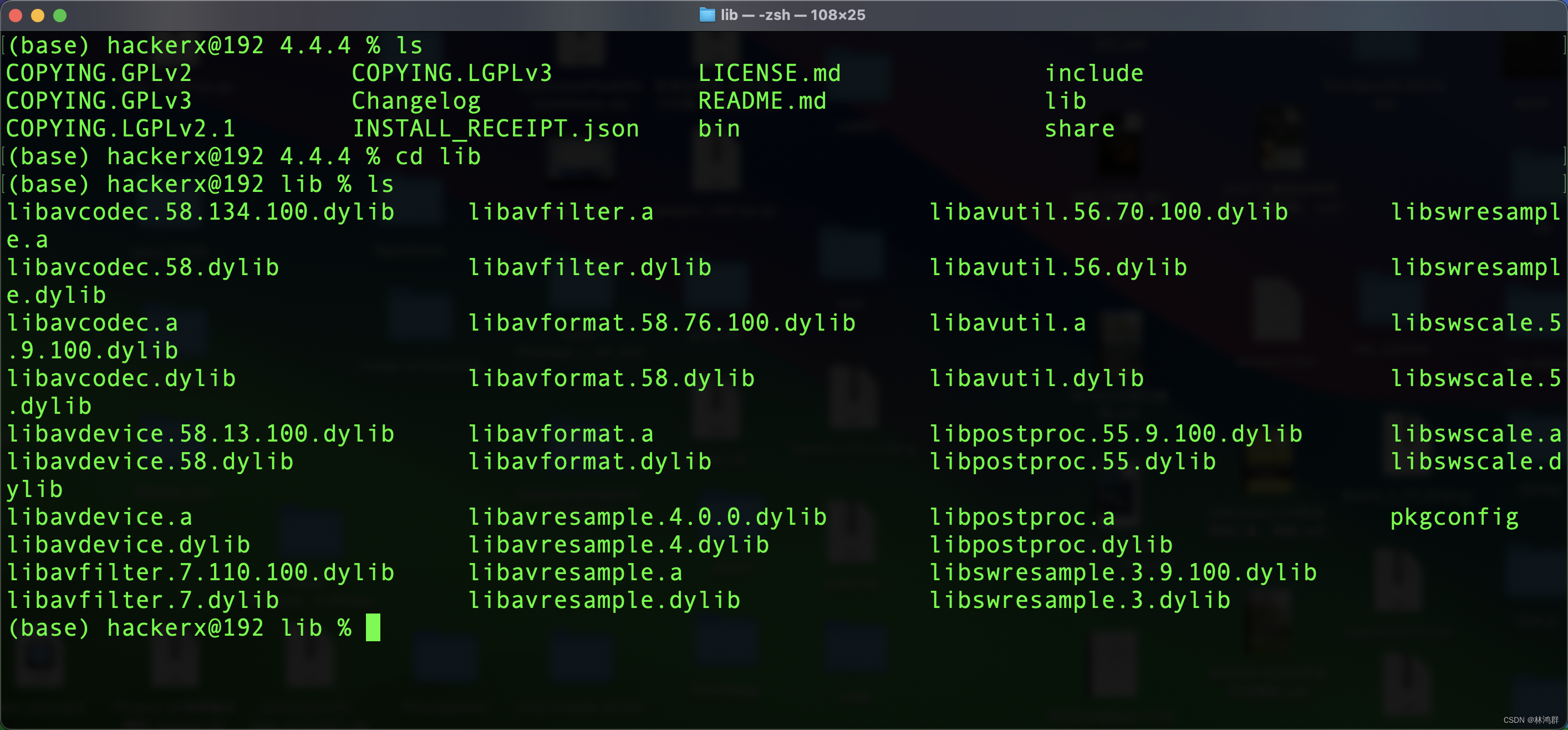

3. Install ffmpeg 4.4

brew install ffmpeg@4

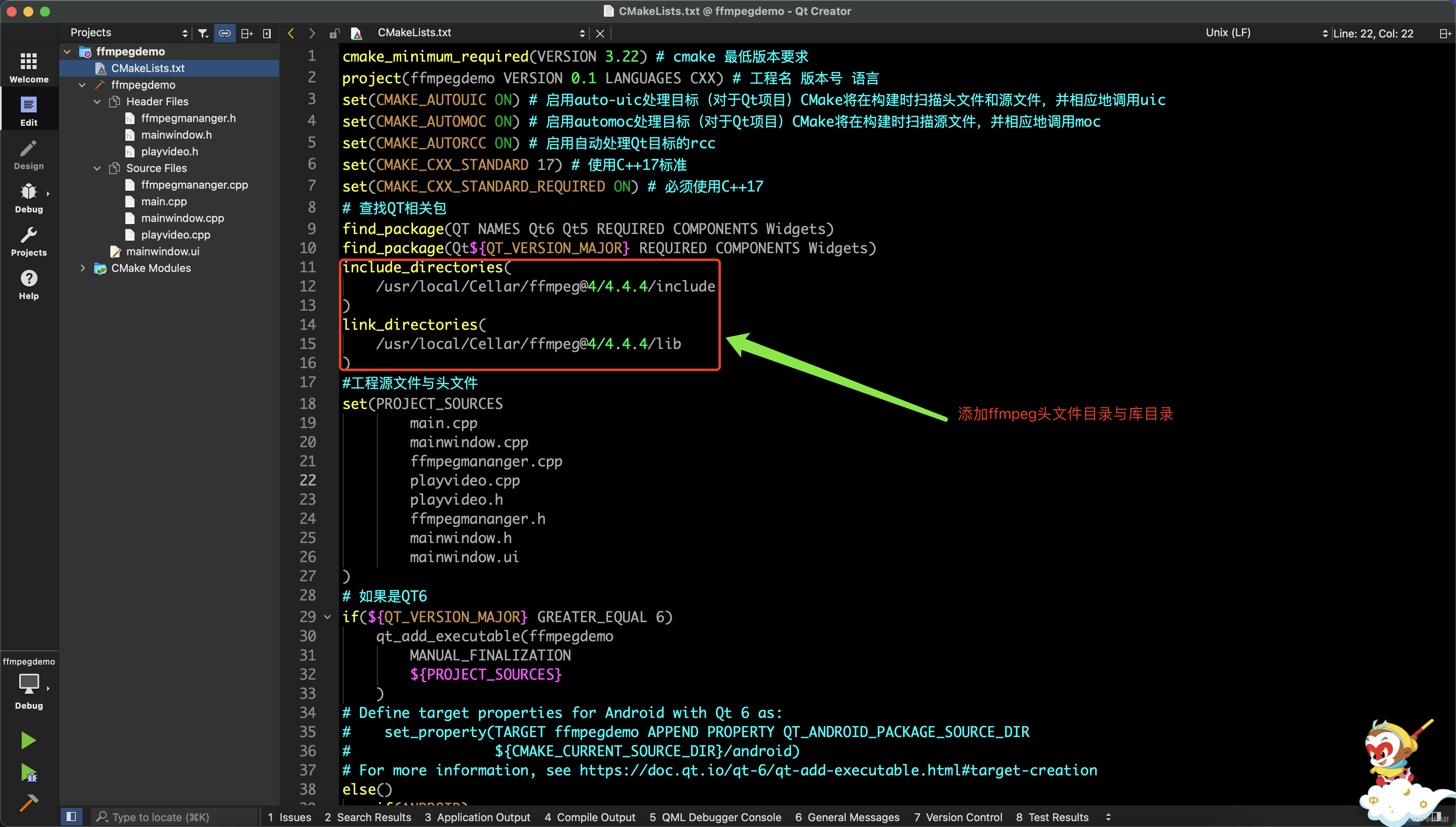
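Because this walkthrough targets FFmpeg 4.4, it can help to confirm at runtime which libavcodec the application actually linked against, especially when several FFmpeg versions are installed side by side. `avcodec_version()` returns a packed integer, and FFmpeg 4.x releases ship libavcodec major version 58. The sketch below only shows how that integer can be decoded; `LibVersion`, `decodeLibVersion` and `isFfmpeg4Avcodec` are hypothetical helper names, not part of FFmpeg or of this project.

```cpp
#include <cassert>

// Hypothetical helper type: the three components packed into the
// integer returned by avcodec_version() or avformat_version().
struct LibVersion {
    unsigned majorVer;
    unsigned minorVer;
    unsigned microVer;
};

// FFmpeg packs library versions as (major << 16) | (minor << 8) | micro.
inline LibVersion decodeLibVersion(unsigned packed)
{
    return LibVersion{packed >> 16, (packed >> 8) & 0xFFu, packed & 0xFFu};
}

// FFmpeg 4.x releases ship libavcodec with major version 58.
inline bool isFfmpeg4Avcodec(unsigned packed)
{
    return decodeLibVersion(packed).majorVer == 58;
}
```

In the application this would be called as `decodeLibVersion(avcodec_version())`, with `avcodec_version()` declared in `libavcodec/avcodec.h`.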

4. Add the ffmpeg header and library directories

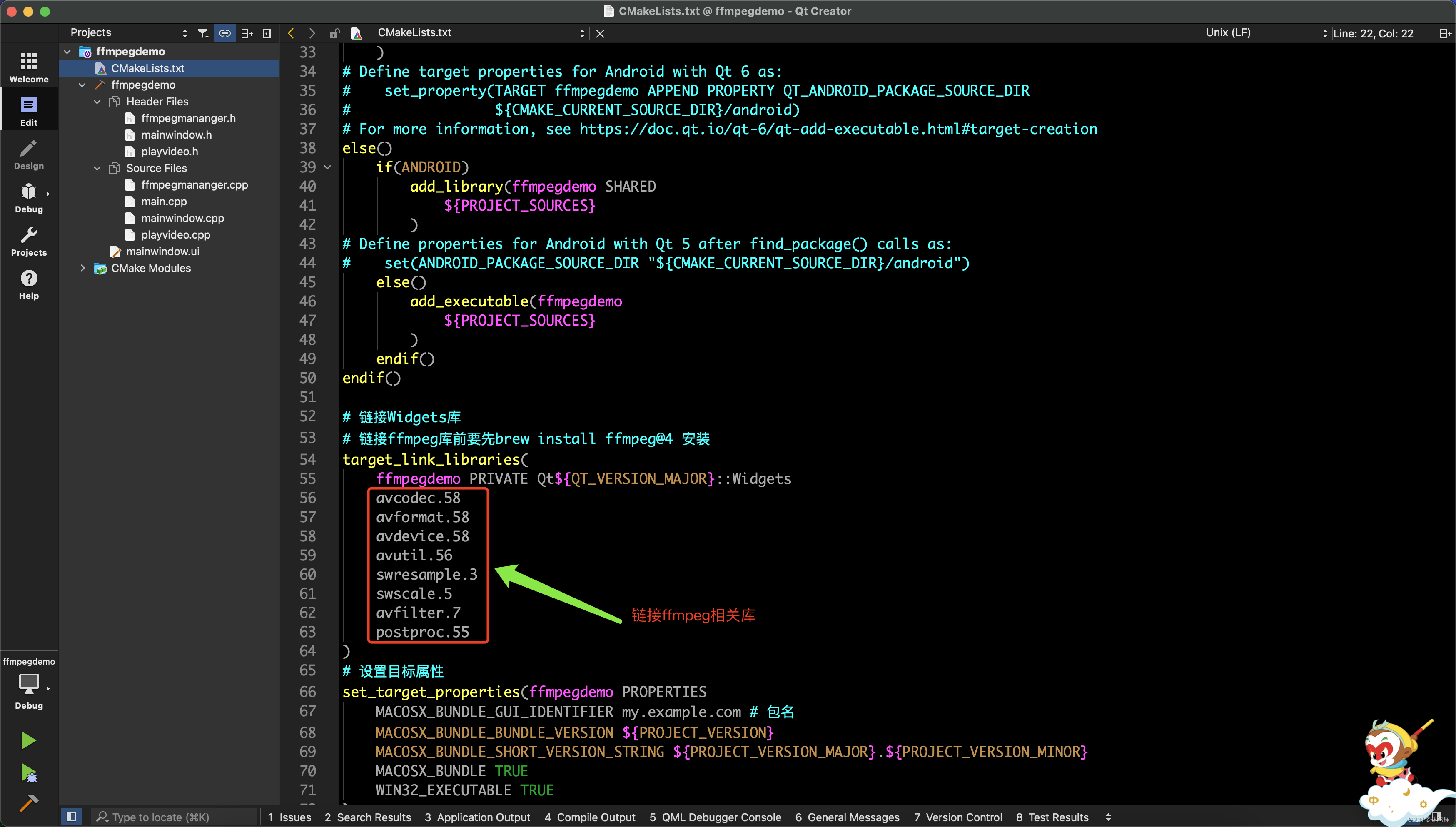

5. Link the ffmpeg libraries

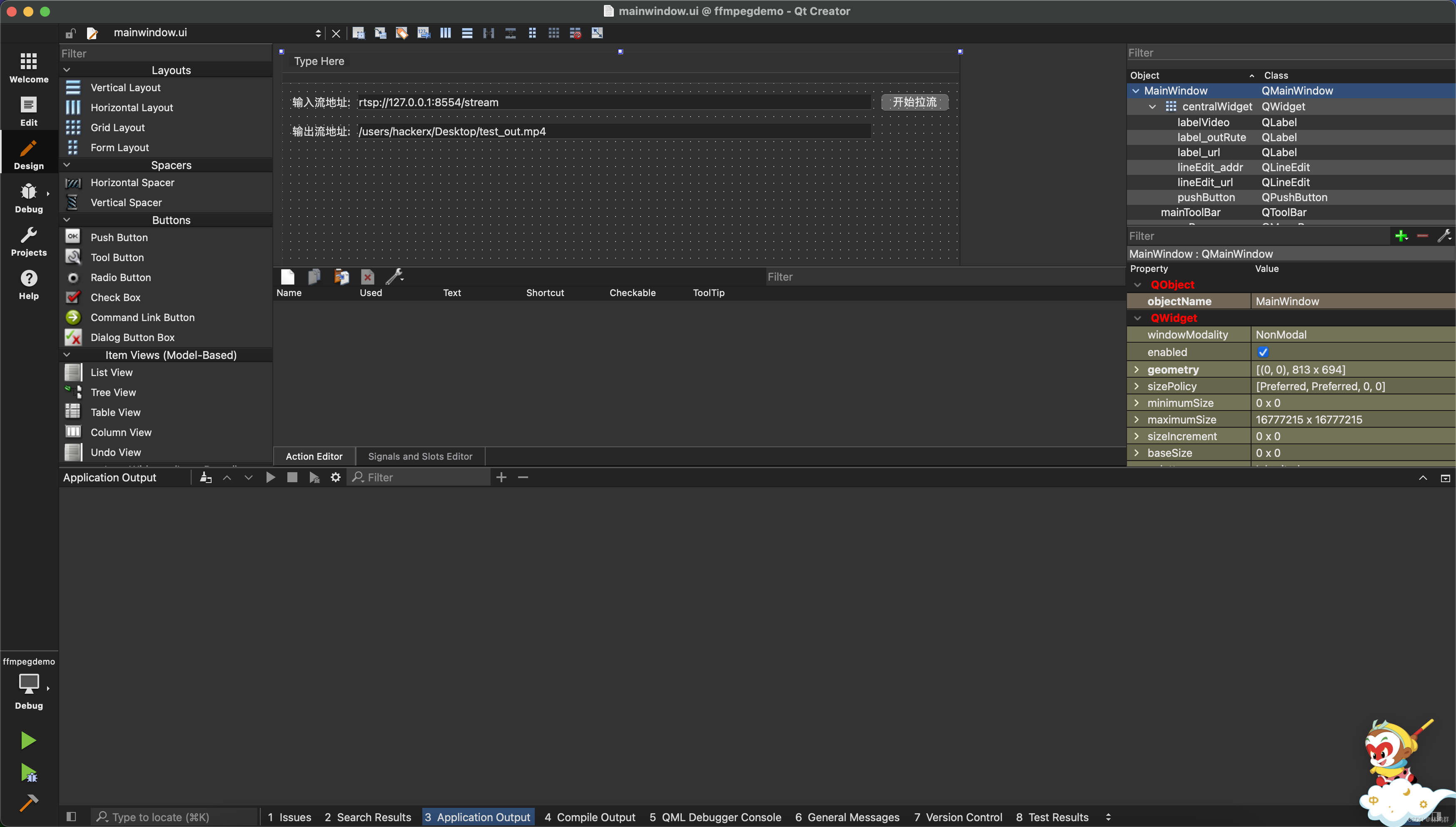

6. Design the UI

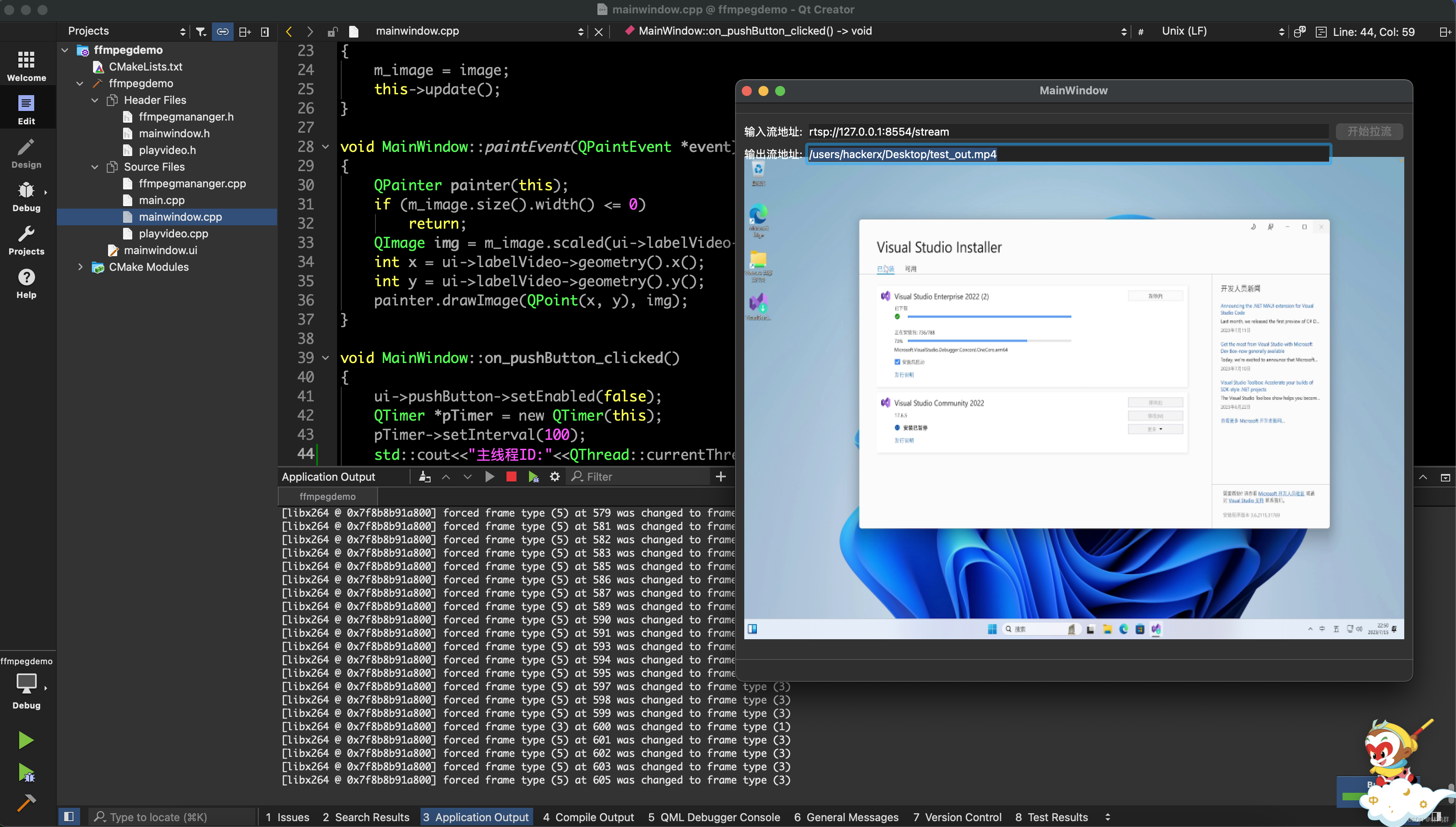

7. Pull the stream

ffmpegmananger.cpp:

#include "ffmpegmananger.h"

#include <QThread>

// Constructor
ffmpegMananger::ffmpegMananger(QObject *parent) : QObject{parent}
{
    m_pInFmtCtx = nullptr;       // input format context
    m_pTsFmtCtx = nullptr;       // output format context
    m_strInputStreamUrl = "";    // input stream URL
    m_strOutputStreamPath = "";  // output path
    m_ifRec = false;
}

// Destructor

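ffmpegMananger::~ffmpegMananger()
{
    // A context opened with avformat_open_input() is released with avformat_close_input()
    avformat_close_input(&m_pInFmtCtx);
    // Release the output format context
    avformat_free_context(m_pTsFmtCtx);
}

// Set the input stream URL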

void ffmpegMananger::getRtspAddress(QString url)
{
    this->m_strInputStreamUrl = url;
}

// Set the output path

void ffmpegMananger::getOutputAddress(QString path)
{
    this->m_strOutputStreamPath = path;
    printf("Output path: %s\n", m_strOutputStreamPath.toStdString().c_str());
}

// Create the output context and add one video stream configured from the encoder
void ffmpegMananger::setOutputCtx(AVCodecContext *encCtx, AVFormatContext **pTsFmtCtx, int &nVideoIdx_out)

{
    const std::string outPath = m_strOutputStreamPath.toStdString();
    avformat_alloc_output_context2(pTsFmtCtx, nullptr, nullptr, outPath.c_str());
    if (!*pTsFmtCtx) {  // check the allocated context, not the pointer to it
        printf("Failed to create the output context: avformat_alloc_output_context2\n");
        return;
    }
    if (avio_open(&((*pTsFmtCtx)->pb), outPath.c_str(), AVIO_FLAG_READ_WRITE) < 0) {
        avformat_free_context(*pTsFmtCtx);
        *pTsFmtCtx = nullptr;  // let the caller detect the failure
        printf("Failed to open the output: avio_open\n");
        return;
    }
    AVStream *out_stream = avformat_new_stream(*pTsFmtCtx, encCtx->codec);
    if (!out_stream) {
        printf("Failed to create the output stream: avformat_new_stream\n");
        return;
    }
    nVideoIdx_out = out_stream->index;
    avcodec_parameters_from_context(out_stream->codecpar, encCtx);
    printf("Output stream info:\n");
    av_dump_format(*pTsFmtCtx, 0, outPath.c_str(), 1);
    printf("----------------------------\n");
}

// Pull the stream, display it, and re-encode it to the output file

int ffmpegMananger::ffmepgInput()
{
    int nRet = 0;
    std::string temp = m_strInputStreamUrl.toStdString();
    const char *pUrl = temp.c_str();
    printf("Input stream URL: %s\n", pUrl);

    // Resources owned by this function; cleanup() releases them on every exit path
    AVCodecContext *pInCodecCtx = nullptr;  // decoder context
    AVCodecContext *encCtx = nullptr;       // encoder context
    AVFrame *pFrame = nullptr;              // decoded frame
    AVFrame *pFrameRGB = nullptr;           // RGB32 frame used for display
    AVPacket *packet = nullptr;             // packet read from the input
    AVPacket *newpkt = nullptr;             // packet produced by the encoder
    SwsContext *m_SwsContext = nullptr;
    uint8_t *m_OutBuffer = nullptr;
    auto cleanup = [&]() {
        sws_freeContext(m_SwsContext);
        av_free(m_OutBuffer);
        av_frame_free(&pFrame);
        av_frame_free(&pFrameRGB);
        av_packet_free(&packet);
        av_packet_free(&newpkt);
        avcodec_free_context(&pInCodecCtx);
        avcodec_free_context(&encCtx);
    };

    // Demuxer options
    AVDictionary *dict = nullptr;
    av_dict_set(&dict, "rtsp_transport", "tcp", 0);
    av_dict_set(&dict, "stimeout", "10000000", 0);
    av_dict_set(&dict, "buffer_size", "1024000", 0);

    // Open the input stream
    nRet = avformat_open_input(&m_pInFmtCtx, pUrl, nullptr, &dict);
    av_dict_free(&dict);  // free any options that were not consumed
    if (nRet < 0) {
        printf("Failed to open the input stream\n");
        return nRet;
    }
    avformat_find_stream_info(m_pInFmtCtx, nullptr);
    printf("Input stream info:\n");
    av_dump_format(m_pInFmtCtx, 0, pUrl, 0);
    printf("---------------------------\n");

    // Index of the video stream
    int nVideo_indx = av_find_best_stream(m_pInFmtCtx, AVMEDIA_TYPE_VIDEO, -1, -1, nullptr, 0);
    if (nVideo_indx < 0) {
        avformat_close_input(&m_pInFmtCtx);
        printf("Failed to find the video stream: av_find_best_stream\n");
        return -1;
    }

    // Find the decoder
    auto pInCodec = avcodec_find_decoder(m_pInFmtCtx->streams[nVideo_indx]->codecpar->codec_id);
    if (nullptr == pInCodec) {
        printf("Failed to find the decoder: avcodec_find_decoder\n");
        return -1;
    }

    // Allocate the decoder context and copy the stream parameters into it
    pInCodecCtx = avcodec_alloc_context3(pInCodec);
    nRet = avcodec_parameters_to_context(pInCodecCtx, m_pInFmtCtx->streams[nVideo_indx]->codecpar);
    if (nRet < 0) {
        cleanup();
        printf("Failed to set the decoder parameters: avcodec_parameters_to_context\n");
        return -1;
    }

    // Open the decoder
    if (avcodec_open2(pInCodecCtx, pInCodec, nullptr) < 0) {
        cleanup();
        printf("Failed to open the decoder: avcodec_open2\n");
        return -1;
    }

    // Print the video resolution
    printf("Video width: %d\n", pInCodecCtx->width);
    printf("Video height: %d\n", pInCodecCtx->height);

    int frame_index = 0;   // index used when an encoded packet has no PTS
    int count = 0;         // number of decoded frames
    int packet_count = 0;  // number of packets written

    // Allocate frames and packets (av_packet_alloc() already initializes the packet)
    pFrame = av_frame_alloc();
    pFrameRGB = av_frame_alloc();
    newpkt = av_packet_alloc();
    packet = av_packet_alloc();

    // Scaler that converts decoded frames to RGB32 at the same resolution
    m_SwsContext = sws_getContext(pInCodecCtx->width, pInCodecCtx->height, pInCodecCtx->pix_fmt,
                                  pInCodecCtx->width, pInCodecCtx->height, AV_PIX_FMT_RGB32,
                                  SWS_BICUBIC, nullptr, nullptr, nullptr);
    int bytes = av_image_get_buffer_size(AV_PIX_FMT_RGB32, pInCodecCtx->width, pInCodecCtx->height, 1);
    m_OutBuffer = (uint8_t *)av_malloc(bytes);

    // Point pFrameRGB at the allocated buffer (replaces the deprecated avpicture_fill)
    av_image_fill_arrays(pFrameRGB->data, pFrameRGB->linesize, m_OutBuffer,
                         AV_PIX_FMT_RGB32, pInCodecCtx->width, pInCodecCtx->height, 1);

    // Open the encoder
    openEncoder(pInCodecCtx->width, pInCodecCtx->height, &encCtx);
    if (encCtx == nullptr || !avcodec_is_open(encCtx)) {
        cleanup();
        return -1;
    }

    // Set up the output context
    int videoindex_out = 0;
    setOutputCtx(encCtx, &m_pTsFmtCtx, videoindex_out);
    if (m_pTsFmtCtx == nullptr) {
        cleanup();
        return -1;
    }

    // Write the file header
    if (avformat_write_header(m_pTsFmtCtx, nullptr) < 0) {
        avio_closep(&m_pTsFmtCtx->pb);
        avformat_free_context(m_pTsFmtCtx);
        m_pTsFmtCtx = nullptr;
        cleanup();
        printf("Failed to write the file header\n");
        return -1;
    }
    printf("File header written.\n");

    // Write every packet the encoder has ready; returns false only when writing fails
    auto writeEncodedPackets = [&]() -> bool {
        while (avcodec_receive_packet(encCtx, newpkt) >= 0) {
            // Set the PTS of the encoded packet
            setEncoderPts(nVideo_indx, frame_index++, videoindex_out, newpkt);
            printf("Wrote packet %d, size: %5d, PTS: %lld\n", ++packet_count, newpkt->size, (long long)newpkt->pts);
            const int wr = av_interleaved_write_frame(m_pTsFmtCtx, newpkt);
            av_packet_unref(newpkt);  // release the encoded packet
            if (wr < 0) {
                printf("Failed to write the frame: av_interleaved_write_frame\n");
                return false;
            }
        }
        return true;
    };

    // Read H.264 packets from the input into packet
    bool writeOk = true;
    while (writeOk && av_read_frame(m_pInFmtCtx, packet) >= 0) {
        if (packet->stream_index != nVideo_indx) {  // keep video only
            av_packet_unref(packet);
            continue;
        }
        // Send the H.264 data to the decoder; the packet is no longer needed once sent
        nRet = avcodec_send_packet(pInCodecCtx, packet);
        av_packet_unref(packet);
        if (nRet < 0) {
            break;
        }
        // Fetch the decoded YUV frames into pFrame
        while (writeOk && avcodec_receive_frame(pInCodecCtx, pFrame) == 0) {
            // Convert the decoded frame to RGB32
            sws_scale(m_SwsContext, (uint8_t const * const *)pFrame->data, pFrame->linesize,
                      0, pInCodecCtx->height, pFrameRGB->data, pFrameRGB->linesize);
            // Wrap the buffer in a QImage and emit a deep copy, since m_OutBuffer is reused
            QImage tmmImage((uchar *)m_OutBuffer, pInCodecCtx->width, pInCodecCtx->height, QImage::Format_RGB32);
            emit Sig_GetOneFrame(tmmImage.copy());
            // Set the PTS of the decoded frame
            setDecoderPts(nVideo_indx, count, pFrame);
            count++;
            // Send the raw frame to the encoder and write whatever it produces
            if (avcodec_send_frame(encCtx, pFrame) < 0) {
                continue;
            }
            writeOk = writeEncodedPackets();
        }
    }

    // Flush the frames still buffered in the encoder, then write the file trailer
    if (writeOk) {
        avcodec_send_frame(encCtx, nullptr);
        writeOk = writeEncodedPackets();
    }
    if (writeOk) {
        av_write_trailer(m_pTsFmtCtx);
    }

    cleanup();
    std::cout << "Stream pull finished" << std::endl;
    return 0;
}

// Set the PTS of a decoded frame from its index
void ffmpegMananger::setDecoderPts(int idx,int count,AVFrame *pFrame)

{
    AVStream *in_stream = m_pInFmtCtx->streams[idx];
    AVRational in_time_base1 = in_stream->time_base;
    // Duration between 2 frames (us)
    int64_t in_duration = (double)AV_TIME_BASE / av_q2d(in_stream->r_frame_rate);
    // Frame index converted to a PTS in the input stream's time base
    pFrame->pts = (double)(count * in_duration) / (double)(av_q2d(in_time_base1) * AV_TIME_BASE);
}

// Fill in a missing packet PTS and rescale the timestamps to the output time base
void ffmpegMananger::setEncoderPts(int nVideo_indx,int frame_index,int videoindex_out,AVPacket *newpkt)

{
    // newpkt comes from the encoder, so its stream_index does not identify
    // the input stream; look the input stream up by nVideo_indx instead
    AVStream *in_stream = m_pInFmtCtx->streams[nVideo_indx];
    AVStream *out_stream = m_pTsFmtCtx->streams[videoindex_out];
    // FIX: no PTS (example: raw H.264), so write a simple PTS
    if (newpkt->pts == AV_NOPTS_VALUE) {
        AVRational time_base1 = in_stream->time_base;
        // Duration between 2 frames (us)
        int64_t calc_duration = (double)AV_TIME_BASE / av_q2d(in_stream->r_frame_rate);
        // frame_index is passed by value, so the caller is responsible for advancing it
        newpkt->pts = (double)(frame_index * calc_duration) / (double)(av_q2d(time_base1) * AV_TIME_BASE);
        newpkt->dts = newpkt->pts;
        newpkt->duration = (double)calc_duration / (double)(av_q2d(time_base1) * AV_TIME_BASE);
    }
    // Convert PTS/DTS from the input time base to the output time base
    newpkt->pts = av_rescale_q_rnd(newpkt->pts, in_stream->time_base, out_stream->time_base, (AVRounding)(AV_ROUND_NEAR_INF | AV_ROUND_PASS_MINMAX));
    newpkt->dts = av_rescale_q_rnd(newpkt->dts, in_stream->time_base, out_stream->time_base, (AVRounding)(AV_ROUND_NEAR_INF | AV_ROUND_PASS_MINMAX));
    newpkt->duration = av_rescale_q(newpkt->duration, in_stream->time_base, out_stream->time_base);
    newpkt->pos = -1;
    newpkt->stream_index = videoindex_out;
}

// Write the file trailer
void ffmpegMananger::writeTail()

{
    // Write file trailer
    av_write_trailer(m_pTsFmtCtx);
}

// Open the libx264 encoder
void ffmpegMananger::openEncoder(int width, int height, AVCodecContext** enc_ctx)

{
    // Use the libx264 encoder
    auto pCodec = avcodec_find_encoder_by_name("libx264");
    if (nullptr == pCodec) {
        printf("avcodec_find_encoder_by_name fail.\n");
        return;
    }
    // Allocate the encoder context
    *enc_ctx = avcodec_alloc_context3(pCodec);
    if (nullptr == *enc_ctx) {  // check the allocated context, not the out-parameter
        printf("avcodec_alloc_context3(pCodec) fail.\n");
        return;
    }
    // SPS/PPS
    (*enc_ctx)->profile = FF_PROFILE_H264_MAIN;
    (*enc_ctx)->level = 30;  // level_idc 30 is level 3.0 (level 5.0 would be 50)
    // Resolution
    (*enc_ctx)->width = width;
    (*enc_ctx)->height = height;
    // GOP
    (*enc_ctx)->gop_size = 25;    // I-frame interval
    (*enc_ctx)->keyint_min = 20;  // minimum interval between automatically inserted I-frames (optional)
    // B-frames
    (*enc_ctx)->max_b_frames = 0;  // no B-frames
    (*enc_ctx)->has_b_frames = 0;
    // Reference frames
    (*enc_ctx)->refs = 3;  // optional
    // Input YUV format
    (*enc_ctx)->pix_fmt = AV_PIX_FMT_YUV420P;
    // Bit rate
    (*enc_ctx)->bit_rate = 3000000;
    // Frame rate
    (*enc_ctx)->time_base = AVRational{1, 25};  // interval between frames
    (*enc_ctx)->framerate = AVRational{25, 1};  // 25 frames per second
    if (avcodec_open2((*enc_ctx), pCodec, nullptr) < 0) {
        printf("avcodec_open2 fail.\n");
    }
    return;
}

ffmpegmananger.h:

#ifndef FFMPEGMANANGER_H

#define FFMPEGMANANGER_H

#pragma execution_character_set("utf-8")

// Qt headers

#include <QObject>

#include <QTimer>

#include <QImage>

// Standard C/C++ headers

#include <stdio.h>

#include <iostream>

// FFmpeg headers
extern "C"
{
#include "libswscale/swscale.h"
#include "libavdevice/avdevice.h"
#include "libavcodec/avcodec.h"
#include "libavcodec/bsf.h"
#include "libavformat/avformat.h"
#include "libavutil/avutil.h"
#include "libavutil/imgutils.h"
#include "libavutil/log.h"
#include "libavutil/time.h"
#include <libswresample/swresample.h>
}

class ffmpegMananger : public QObject

{
    Q_OBJECT

public:
    // Constructor
    explicit ffmpegMananger(QObject *parent = nullptr);
    // Destructor
    ~ffmpegMananger();
    // Set the input stream URL
    void getRtspAddress(QString url);
    // Set the output path
    void getOutputAddress(QString path);
    // Pull the stream with ffmpeg and play it
    int ffmepgInput();
    // Open the encoder
    void openEncoder(int width, int height, AVCodecContext** enc_ctx);
    // Set up the output context
    void setOutputCtx(AVCodecContext *encCtx, AVFormatContext **pTsFmtCtx,int &nVideoIdx_out);
    // Write the file trailer
    void writeTail();
    // Set the PTS of a decoded frame
    void setDecoderPts(int idx,int count,AVFrame *pFrame);
    // Set the PTS of an encoded packet
    void setEncoderPts(int nVideo_indx,int frame_index,int videoindex_out,AVPacket *newpkt);

signals:
    // Emitted once for every decoded frame
    void Sig_GetOneFrame(QImage img);

private:
    // Input stream URL
    QString m_strInputStreamUrl;
    // Output path
    QString m_strOutputStreamPath;
    // Input format context
    AVFormatContext *m_pInFmtCtx;
    // Output format context
    AVFormatContext *m_pTsFmtCtx;
    bool m_ifRec;
};

#endif // FFMPEGMANANGER_H
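A note on the timestamp arithmetic in setDecoderPts and setEncoderPts: both turn a frame index into a PTS by going through microseconds and `double`, and setEncoderPts then rescales the result from the input time base to the output time base. The following is a minimal sketch of the same two mappings in integer arithmetic, not part of the original project. `Rational`, `framePts` and `rescaleTs` are hypothetical stand-ins for `AVRational` and `av_rescale_q`, and the 25 fps and 1/90000 values used in the note after it are only illustrative.

```cpp
#include <cassert>
#include <cstdint>

// Stand-in for FFmpeg's AVRational (numerator / denominator).
struct Rational {
    int64_t num;
    int64_t den;
};

// PTS of frame number frameIndex for a constant frame rate, in timeBase ticks.
// frameIndex / frameRate is the time in seconds; dividing by timeBase gives ticks.
inline int64_t framePts(int64_t frameIndex, Rational frameRate, Rational timeBase)
{
    return frameIndex * frameRate.den * timeBase.den / (frameRate.num * timeBase.num);
}

// Convert a non-negative timestamp from one time base to another,
// rounding to the nearest tick.
inline int64_t rescaleTs(int64_t ts, Rational from, Rational to)
{
    const int64_t num = ts * from.num * to.den;
    const int64_t den = from.den * to.num;
    return (num + den / 2) / den;
}
```

For a 25 fps stream with a time base of 1/90000, `framePts` places consecutive frames 3600 ticks apart, and `rescaleTs` maps 3600 ticks of 1/90000 to 40 ticks of 1/1000. Inside the application `av_rescale_q` remains the natural choice, since it also guards against overflow.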