Deploying ZooKeeper and Kafka clusters with Ansible

- Basic environment preparation
- Configuring the Ansible files (ZooKeeper)
- Configuring the Ansible files (Kafka)

| Node | IP |
|---|---|
| ansible | 192.168.200.75 |
| node1 | 192.168.200.76 |
| node2 | 192.168.200.77 |
| node3 | 192.168.200.78 |

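Basic environment preparation

I won't go into detail on the basic environment setup here.

The following are already configured: hostnames, hostname resolution, passwordless SSH, Ansible installation, the Ansible host inventory, the firewall, SELinux, the CentOS 2009 image repository, and remote FTP access.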

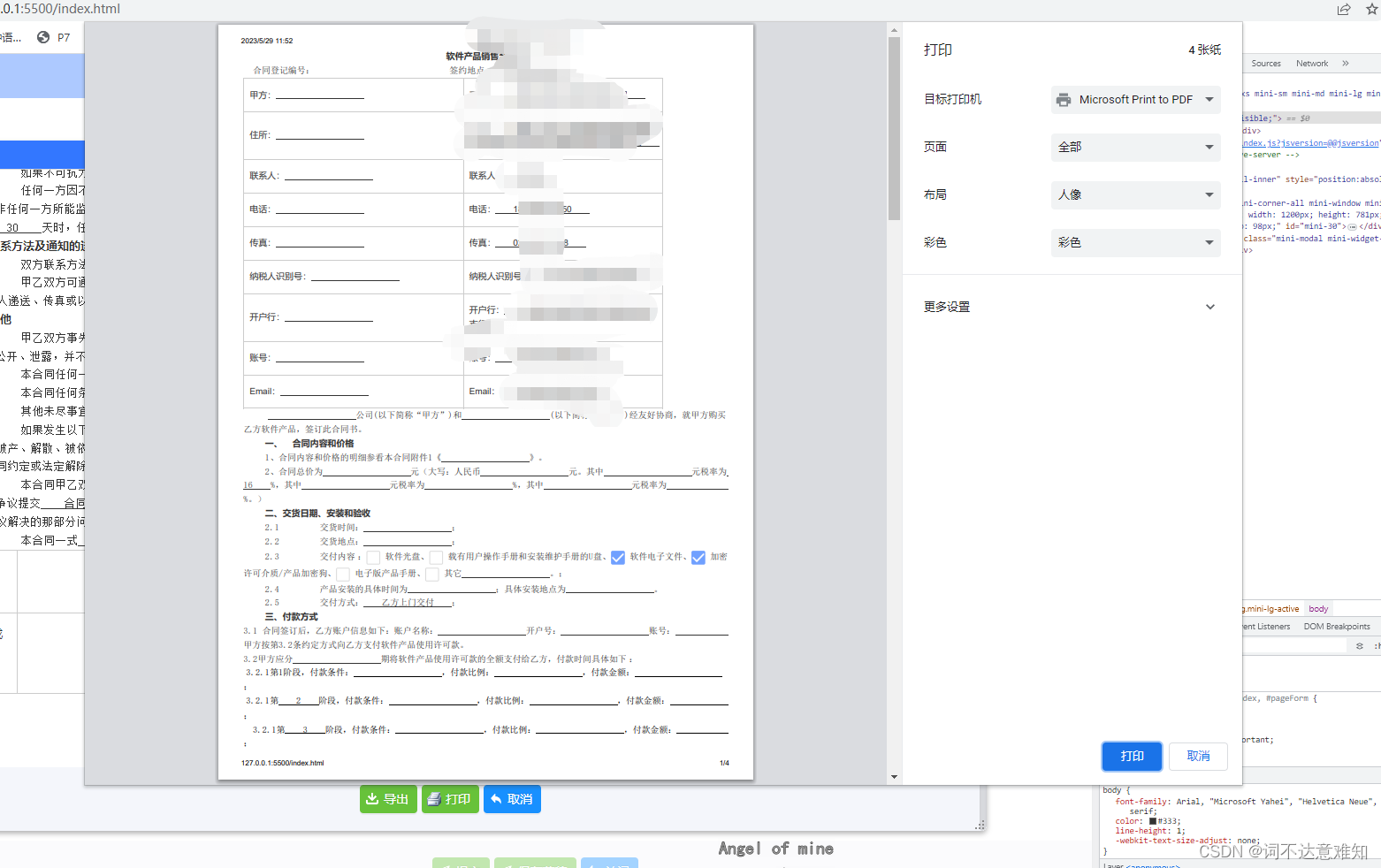
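The inventory itself is not shown. The playbooks below target the groups all, node1, node2 and node3, and the play output lists hosts by IP address, which suggests each group holds a single IP. The following is a minimal sketch of an INI inventory consistent with that output; the file name inventory.ini is an assumption (the original setup probably used the default /etc/ansible/hosts).

```shell
#!/bin/sh
# Sketch: write an INI inventory matching the groups used by the playbooks.
# ASSUMPTION: file name/location inventory.ini; the original setup likely
# used the default /etc/ansible/hosts instead.
set -eu

INVENTORY="${INVENTORY:-inventory.ini}"

cat > "$INVENTORY" <<'EOF'
# One group per ZooKeeper/Kafka node; the "all" group is implicit.
[node1]
192.168.200.76

[node2]
192.168.200.77

[node3]
192.168.200.78
EOF

echo "wrote $INVENTORY"
```

With this file, `ansible -i inventory.ini all -m ping` checks connectivity, and the playbooks can be run as `ansible-playbook -i inventory.ini cscc_install.yml`.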

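Configuring the Ansible files (ZooKeeper)

On the ansible node, create an example directory under /root to serve as the Ansible working directory. Put the supporting files there (ftp.repo, zoo.cfg, the myid directories and the zookeeper-3.4.14.tar.gz package), and create cscc_install.yml as the entry-point playbook for the deployment, with the following content: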

[root@ansible ~]# mkdir example
[root@ansible ~]# cd example/
[root@ansible example]# mkdir -p myid/{myid1,myid2,myid3}
[root@ansible example]# cat ftp.repo
[gpmall-repo]
name=gpmall
baseurl=ftp://ansible/gpmall-repo
gpgcheck=0
enabled=1

[centos]
name=centos
baseurl=ftp://ansible/centos
gpgcheck=0
enabled=1
[root@ansible example]# cat zoo.cfg
tickTime=2000
initLimit=10
syncLimit=5
dataDir=/tmp/zookeeper
clientPort=2181
server.1=192.168.200.76:2888:3888
server.2=192.168.200.77:2888:3888
server.3=192.168.200.78:2888:3888
[root@ansible example]# cat cscc_install.yml
---
- hosts: all
  remote_user: root
  tasks:
    - name: rm repo
      shell: rm -rf /etc/yum.repos.d/*
    - name: copy repo
      copy: src=ftp.repo dest=/etc/yum.repos.d/
    - name: install java
      shell: yum -y install java-1.8.0-*
    - name: copy zookeeper
      copy: src=zookeeper-3.4.14.tar.gz dest=/root/zookeeper-3.4.14.tar.gz
    - name: tar-zookeeper
      shell: tar -zxvf zookeeper-3.4.14.tar.gz
    - name: copy zoo.cfg
      copy: src=zoo.cfg dest=/root/zookeeper-3.4.14/conf/zoo.cfg
    - name: mkdir
      shell: mkdir -p /tmp/zookeeper

- hosts: node1
  remote_user: root
  tasks:
    - name: copy myid1
      copy: src=myid/myid1/myid dest=/tmp/zookeeper/myid

- hosts: node2
  remote_user: root
  tasks:
    - name: copy myid2
      copy: src=myid/myid2/myid dest=/tmp/zookeeper/myid

- hosts: node3
  remote_user: root
  tasks:
    - name: copy myid3
      copy: src=myid/myid3/myid dest=/tmp/zookeeper/myid

- hosts: all
  remote_user: root
  tasks:
    - name: start zookerper
      shell: /root/zookeeper-3.4.14/bin/zkServer.sh start
[root@ansible example]# ls
cscc_install.yml ftp.repo myid zoo.cfg zookeeper-3.4.14.tar.gz
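The transcript creates the directories myid/myid1 to myid/myid3 but does not show the myid files themselves. ZooKeeper expects a file named myid in each server's dataDir (here /tmp/zookeeper) containing only that server's number N from its server.N line in zoo.cfg. Since the playbook sends myid1 to node1 (192.168.200.76, server.1) and so on, a minimal way to create the files, run in /root/example, is:

```shell
#!/bin/sh
# Create myid/myidN/myid containing just N (N = 1..3), matching
# server.1..server.3 in zoo.cfg: node1=.76, node2=.77, node3=.78.
set -eu

for n in 1 2 3; do
  mkdir -p "myid/myid${n}"
  echo "${n}" > "myid/myid${n}/myid"   # file content: the server number only
done

# Show what was written, one "path: content" line per file.
for f in myid/myid*/myid; do
  printf '%s: %s\n' "$f" "$(cat "$f")"
done
```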

Check the playbook syntax, then run it:

[root@ansible example]# ansible-playbook --syntax-check cscc_install.yml

playbook: cscc_install.yml
[root@ansible example]# ansible-playbook cscc_install.yml

PLAY [all] *************************************************************************************************************

TASK [Gathering Facts] *************************************************************************************************
ok: [192.168.200.77]
ok: [192.168.200.78]
ok: [192.168.200.76]

TASK [rm repo] *********************************************************************************************************
[WARNING]: Consider using the file module with state=absent rather than running 'rm'. If you need to use command
because file is insufficient you can add 'warn: false' to this command task or set 'command_warnings=False' in
ansible.cfg to get rid of this message.
changed: [192.168.200.77]
changed: [192.168.200.78]
changed: [192.168.200.76]

TASK [copy repo] *******************************************************************************************************
changed: [192.168.200.76]
changed: [192.168.200.78]
changed: [192.168.200.77]

TASK [install java] ****************************************************************************************************
[WARNING]: Consider using the yum module rather than running 'yum'. If you need to use command because yum is
insufficient you can add 'warn: false' to this command task or set 'command_warnings=False' in ansible.cfg to get rid
of this message.
changed: [192.168.200.77]
changed: [192.168.200.76]
changed: [192.168.200.78]

TASK [copy zookeeper] **************************************************************************************************
changed: [192.168.200.76]
changed: [192.168.200.78]
changed: [192.168.200.77]

TASK [tar-zookeeper] ***************************************************************************************************
[WARNING]: Consider using the unarchive module rather than running 'tar'. If you need to use command because unarchive
is insufficient you can add 'warn: false' to this command task or set 'command_warnings=False' in ansible.cfg to get
rid of this message.
changed: [192.168.200.78]
changed: [192.168.200.76]
changed: [192.168.200.77]

TASK [copy zoo.cfg] ****************************************************************************************************
changed: [192.168.200.76]
changed: [192.168.200.77]
changed: [192.168.200.78]

TASK [mkdir] ***********************************************************************************************************
[WARNING]: Consider using the file module with state=directory rather than running 'mkdir'. If you need to use command
because file is insufficient you can add 'warn: false' to this command task or set 'command_warnings=False' in
ansible.cfg to get rid of this message.
changed: [192.168.200.76]
changed: [192.168.200.77]
changed: [192.168.200.78]

PLAY [node1] ***********************************************************************************************************

TASK [Gathering Facts] *************************************************************************************************
ok: [192.168.200.76]

TASK [copy myid1] ******************************************************************************************************
changed: [192.168.200.76]

PLAY [node2] ***********************************************************************************************************

TASK [Gathering Facts] *************************************************************************************************
ok: [192.168.200.77]

TASK [copy myid2] ******************************************************************************************************
changed: [192.168.200.77]

PLAY [node3] ***********************************************************************************************************

TASK [Gathering Facts] *************************************************************************************************
ok: [192.168.200.78]

TASK [copy myid3] ******************************************************************************************************
changed: [192.168.200.78]

PLAY [all] *************************************************************************************************************

TASK [Gathering Facts] *************************************************************************************************
ok: [192.168.200.76]
ok: [192.168.200.77]
ok: [192.168.200.78]

TASK [start zookerper] *************************************************************************************************
changed: [192.168.200.76]
changed: [192.168.200.77]
changed: [192.168.200.78]

PLAY RECAP *************************************************************************************************************
192.168.200.76 : ok=12 changed=9 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0
192.168.200.77 : ok=12 changed=9 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0
192.168.200.78 : ok=12 changed=9 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

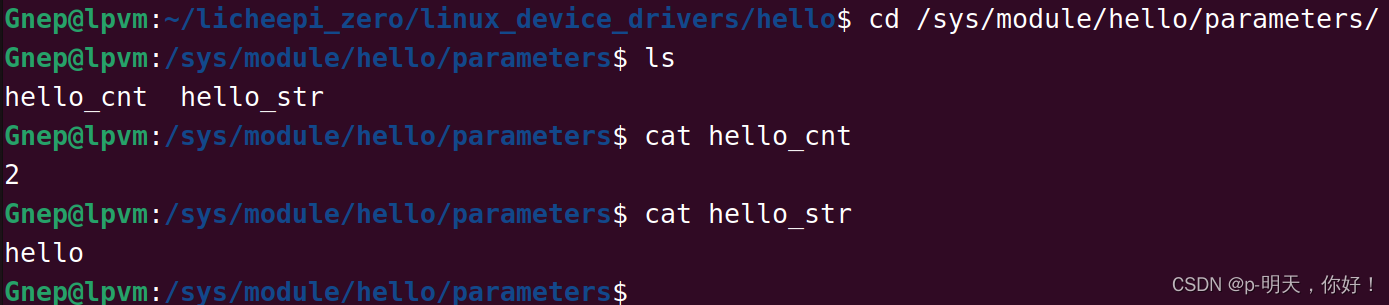

Verify on the nodes

jps should list a QuorumPeerMain process (the ZooKeeper server) on every node:

[root@node1 ~]# jps
10964 QuorumPeerMain
10997 Jps

[root@node2 ~]# jps
2530 Jps
2475 QuorumPeerMain

[root@node3 ~]# jps
2528 QuorumPeerMain
2586 Jps
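jps only shows that the process is running, not that the three servers actually formed an ensemble. `zkServer.sh status` prints a `Mode:` line (leader or follower, or standalone when a server is not part of an ensemble). The following is a small sketch of a checker, with the hypothetical function name check_zk_quorum, that reads the combined status output and succeeds only if there is exactly one leader and the remaining servers are followers.

```shell
# Sketch: source this file, then pipe the combined "zkServer.sh status"
# output of all nodes into check_zk_quorum [expected_server_count].
# check_zk_quorum is a hypothetical helper, not part of ZooKeeper.
check_zk_quorum() {
  local expected input leaders followers
  expected=${1:-3}
  input=$(cat)
  # grep -c prints 0 (and exits 1) when nothing matches; keep the 0.
  leaders=$(printf '%s\n' "$input" | grep -c 'Mode: leader' || true)
  followers=$(printf '%s\n' "$input" | grep -c 'Mode: follower' || true)
  echo "leaders=${leaders} followers=${followers}"
  # Healthy ensemble: one leader, everyone else a follower.
  [ "$leaders" -eq 1 ] && [ $((leaders + followers)) -eq "$expected" ]
}
```

From the ansible node, after sourcing the function: `ansible all -m shell -a '/root/zookeeper-3.4.14/bin/zkServer.sh status' | check_zk_quorum 3`. A result such as `leaders=0 followers=0` with a non-zero exit status means the servers are not talking to each other (for example, ports 2888/3888 blocked or a wrong myid).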

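Configuring the Ansible files (Kafka)

In the same working directory, prepare one server.properties per broker, the Kafka playbook cscc_install_kafka.yml, the start script start_kafka.sh and the kafka_2.11-1.1.1.tgz package: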

[root@ansible example]# mkdir -p server/{server1,server2,server3}
[root@ansible example]# cat server/server1/server.properties
broker.id=1
num.network.threads=3
num.io.threads=8
socket.send.buffer.bytes=102400
socket.receive.buffer.bytes=102400
socket.request.max.bytes=104857600
log.dirs=/tmp/kafka-logs
num.partitions=1
num.recovery.threads.per.data.dir=1
offsets.topic.replication.factor=1
transaction.state.log.replication.factor=1
transaction.state.log.min.isr=1
log.retention.hours=168
log.segment.bytes=1073741824
log.retention.check.interval.ms=300000
zookeeper.connect=192.168.200.76:2181,192.168.200.77:2181,192.168.200.78:2181
listeners = PLAINTEXT://192.168.200.76:9092
zookeeper.connection.timeout.ms=6000
group.initial.rebalance.delay.ms=0
[root@ansible example]# cat server/server2/server.properties
broker.id=2
num.network.threads=3
num.io.threads=8
socket.send.buffer.bytes=102400
socket.receive.buffer.bytes=102400
socket.request.max.bytes=104857600
log.dirs=/tmp/kafka-logs
num.partitions=1
num.recovery.threads.per.data.dir=1
offsets.topic.replication.factor=1
transaction.state.log.replication.factor=1
transaction.state.log.min.isr=1
log.retention.hours=168
log.segment.bytes=1073741824
log.retention.check.interval.ms=300000
zookeeper.connect=192.168.200.76:2181,192.168.200.77:2181,192.168.200.78:2181
listeners = PLAINTEXT://192.168.200.77:9092
zookeeper.connection.timeout.ms=6000
group.initial.rebalance.delay.ms=0
[root@ansible example]# cat server/server3/server.properties
broker.id=3
num.network.threads=3
num.io.threads=8
socket.send.buffer.bytes=102400
socket.receive.buffer.bytes=102400
socket.request.max.bytes=104857600
log.dirs=/tmp/kafka-logs
num.partitions=1
num.recovery.threads.per.data.dir=1
offsets.topic.replication.factor=1
transaction.state.log.replication.factor=1
transaction.state.log.min.isr=1
log.retention.hours=168
log.segment.bytes=1073741824
log.retention.check.interval.ms=300000
zookeeper.connect=192.168.200.76:2181,192.168.200.77:2181,192.168.200.78:2181
listeners = PLAINTEXT://192.168.200.78:9092
zookeeper.connection.timeout.ms=6000
group.initial.rebalance.delay.ms=0
[root@ansible example]# cat cscc_install_kafka.yml
---
- hosts: all
  remote_user: root
  tasks:
    - name: copy kafka
      copy: src=kafka_2.11-1.1.1.tgz dest=/root/kafka_2.11-1.1.1.tgz
    - name: tar-kafka
      shell: tar -zxvf kafka_2.11-1.1.1.tgz

- hosts: node1
  remote_user: root
  tasks:
    - name: copy server1
      copy: src=server/server1/server.properties dest=/root/kafka_2.11-1.1.1/config

- hosts: node2
  remote_user: root
  tasks:
    - name: copy server2
      copy: src=server/server2/server.properties dest=/root/kafka_2.11-1.1.1/config

- hosts: node3
  remote_user: root
  tasks:
    - name: copy server3
      copy: src=server/server3/server.properties dest=/root/kafka_2.11-1.1.1/config

- hosts: all
  remote_user: root
  tasks:
    - name: copy kafka.sh
      copy: src=start_kafka.sh dest=/root/start_kafka.sh
    - name: start kafka
      shell: bash /root/start_kafka.sh
[root@ansible example]# cat start_kafka.sh
/root/kafka_2.11-1.1.1/bin/kafka-server-start.sh -daemon /root/kafka_2.11-1.1.1/config/server.properties
[root@ansible example]# ls
cscc_install_kafka.yml ftp.repo myid start_kafka.sh zookeeper-3.4.14.tar.gz
cscc_install.yml kafka_2.11-1.1.1.tgz server zoo.cfg
[root@ansible example]#
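The three server.properties files differ only in broker.id and the listeners address, so instead of maintaining three hand-edited copies they could be generated from one set of shared settings. The following is a sketch under the assumption that broker N runs on the Nth node in the IP table (node1 = broker 1, and so on); it writes the same server/serverN/server.properties layout used by the playbook. `listeners=PLAINTEXT://...` without spaces around `=` is equivalent in a properties file.

```shell
#!/bin/sh
# Sketch: generate server/serverN/server.properties for brokers 1..3.
# Only broker.id and listeners vary per node; all other settings are the
# same values as in the hand-written files.
set -eu

ZK_CONNECT="192.168.200.76:2181,192.168.200.77:2181,192.168.200.78:2181"

id=1
for ip in 192.168.200.76 192.168.200.77 192.168.200.78; do   # node1..node3
  dir="server/server${id}"
  mkdir -p "$dir"
  cat > "${dir}/server.properties" <<EOF
broker.id=${id}
listeners=PLAINTEXT://${ip}:9092
num.network.threads=3
num.io.threads=8
socket.send.buffer.bytes=102400
socket.receive.buffer.bytes=102400
socket.request.max.bytes=104857600
log.dirs=/tmp/kafka-logs
num.partitions=1
num.recovery.threads.per.data.dir=1
offsets.topic.replication.factor=1
transaction.state.log.replication.factor=1
transaction.state.log.min.isr=1
log.retention.hours=168
log.segment.bytes=1073741824
log.retention.check.interval.ms=300000
zookeeper.connect=${ZK_CONNECT}
zookeeper.connection.timeout.ms=6000
group.initial.rebalance.delay.ms=0
EOF
  id=$((id + 1))
done

printf 'generated %s\n' server/server*/server.properties
```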

Check the playbook syntax and run it:

[root@ansible example]# ansible-playbook --syntax-check cscc_install_kafka.yml

playbook: cscc_install_kafka.yml
[root@ansible example]# ansible-playbook cscc_install_kafka.yml

PLAY [all] *************************************************************************************************************

TASK [Gathering Facts] *************************************************************************************************
ok: [192.168.200.77]
ok: [192.168.200.76]
ok: [192.168.200.78]

TASK [copy kafka] ******************************************************************************************************
ok: [192.168.200.76]
ok: [192.168.200.78]
ok: [192.168.200.77]

TASK [tar-kafka] *******************************************************************************************************
[WARNING]: Consider using the unarchive module rather than running 'tar'. If you need to use command because unarchive
is insufficient you can add 'warn: false' to this command task or set 'command_warnings=False' in ansible.cfg to get
rid of this message.
changed: [192.168.200.76]
changed: [192.168.200.78]
changed: [192.168.200.77]

PLAY [node1] ***********************************************************************************************************

TASK [Gathering Facts] *************************************************************************************************
ok: [192.168.200.76]

TASK [copy server1] ****************************************************************************************************
changed: [192.168.200.76]

PLAY [node2] ***********************************************************************************************************

TASK [Gathering Facts] *************************************************************************************************
ok: [192.168.200.77]

TASK [copy server2] ****************************************************************************************************
changed: [192.168.200.77]

PLAY [node3] ***********************************************************************************************************

TASK [Gathering Facts] *************************************************************************************************
ok: [192.168.200.78]

TASK [copy server3] ****************************************************************************************************
changed: [192.168.200.78]

PLAY [all] *************************************************************************************************************

TASK [Gathering Facts] *************************************************************************************************
ok: [192.168.200.76]
ok: [192.168.200.78]
ok: [192.168.200.77]

TASK [start kafka] *****************************************************************************************************
changed: [192.168.200.78]
changed: [192.168.200.77]
changed: [192.168.200.76]

PLAY RECAP *************************************************************************************************************
192.168.200.76 : ok=7 changed=3 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0
192.168.200.77 : ok=7 changed=3 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0
192.168.200.78 : ok=7 changed=3 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

Verify on the nodes

Each node should now show a Kafka process next to QuorumPeerMain:

[root@node1 ~]# jps
19057 Jps
10964 QuorumPeerMain
18999 Kafka

[root@node2 ~]# jps
9589 Kafka
2475 QuorumPeerMain
9613 Jps

[root@node3 ~]# jps
2528 QuorumPeerMain
9318 Kafka
9342 Jps
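A running Kafka process does not by itself prove that all three brokers joined the same cluster. ZooKeeper-based Kafka registers each live broker as a child znode of /brokers/ids, which zkCli.sh can list. The following sketch, with the hypothetical function name check_broker_ids, parses that listing (zkCli.sh prints it as a line such as `[1, 2, 3]` among its log output) and succeeds only if the expected ids are present.

```shell
# Sketch: source this file, then pipe zkCli.sh output into
# check_broker_ids ["expected ids", default "1 2 3"].
# check_broker_ids is a hypothetical helper, not part of Kafka or ZooKeeper.
check_broker_ids() {
  local want line ids
  want="${1:-1 2 3}"
  # Keep the last line that looks like a znode list, e.g. "[3, 1, 2]".
  line=$(grep -E '^\[[0-9, ]*]$' | tail -n 1)
  # Drop brackets and commas, then sort numerically, because znode
  # children are not returned in a guaranteed order: "[3, 1, 2]" -> "1 2 3".
  ids=$(printf '%s\n' "$line" | tr -cd '0-9 ' | tr ' ' '\n' | grep -v '^$' | sort -n | xargs)
  echo "registered broker ids: ${ids}"
  [ "$ids" = "$want" ]
}
```

On any node, after sourcing the function: `/root/zookeeper-3.4.14/bin/zkCli.sh -server 192.168.200.76:2181 ls /brokers/ids 2>&1 | check_broker_ids`. A missing id points at the corresponding broker's server.properties or its log under /root/kafka_2.11-1.1.1/logs.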

To deploy everything in one step, simply combine the two playbooks into a single YAML file.
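One way to combine them without copying tasks between files is a small top-level playbook that imports both in order, so ZooKeeper is up before the Kafka brokers start. `import_playbook` is a standard Ansible keyword; the file name site.yml is only a conventional, hypothetical choice. The sketch below writes it into /root/example:

```shell
#!/bin/sh
# Sketch: create a top-level playbook (hypothetical name site.yml) that
# runs the ZooKeeper playbook first and the Kafka playbook second.
set -eu

cat > site.yml <<'EOF'
---
# ZooKeeper must be running before the Kafka brokers start.
- import_playbook: cscc_install.yml
- import_playbook: cscc_install_kafka.yml
EOF

cat site.yml
```

It is then checked and run like the others: `ansible-playbook --syntax-check site.yml` followed by `ansible-playbook site.yml`. Alternatively, `ansible-playbook cscc_install.yml cscc_install_kafka.yml` runs both files in order in a single invocation.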