`{}` is a dict, `[]` is a list.

Customizing the request object

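`urllib.request.Request(url=url, headers=headers)` builds a customized request object.

`urllib.request.urlopen(request)` sends it.

`urlopen()` has no parameter that accepts a headers dict, so the headers cannot be passed to it directly; they have to be attached to the `Request` object instead.

Note: the signature is `Request(url, data=None, headers={}, ...)`, so `data` sits between `url` and `headers`. You cannot simply pass `url` and `headers` positionally; pass them as keyword arguments.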
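To see why the keyword matters, this sketch builds two `Request` objects without sending them (no network access needed). The shortened User-Agent is only for illustration. Passed positionally, the headers dict lands in `data`, and because `data` is no longer `None` the request would even go out as a POST.

```python
import urllib.request

url = 'https://www.baidu.com'
headers = {'User-Agent': 'Mozilla/5.0'}  # shortened UA, for illustration only

# Positional: Request's second parameter is data, not headers
wrong = urllib.request.Request(url, headers)
print(wrong.data)            # {'User-Agent': 'Mozilla/5.0'}
print(wrong.get_method())    # POST
print(wrong.header_items())  # []

# Keyword: the dict ends up in the request headers
right = urllib.request.Request(url=url, headers=headers)
print(right.data)                      # None
print(right.get_method())              # GET
print(right.get_header('User-agent'))  # Mozilla/5.0 (keys are stored capitalized)
```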

```python
import urllib.request

url = 'https://www.baidu.com'

# Parts of a URL, e.g. https://www.baidu.com/s?wd=周杰伦
# http/https   www.baidu.com   80/443   s      wd=周杰伦   #
# protocol     host            port     path   query      fragment (anchor)

# Common default ports
# http      80
# https     443
# mysql     3306
# redis     6379
# mongodb   27017
# oracle    1521

headers = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/125.0.0.0 Safari/537.36'
}

# Keyword arguments, because data sits between url and headers
request = urllib.request.Request(url=url, headers=headers)

response = urllib.request.urlopen(request)
content = response.read().decode('utf8')
print(content)
```
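The URL anatomy in the comments above can be checked with the standard library's `urllib.parse.urlsplit`. The explicit `:443` and the `#top` fragment are added here only so every part is present.

```python
from urllib.parse import urlsplit

parts = urlsplit('https://www.baidu.com:443/s?wd=%E5%91%A8%E6%9D%B0%E4%BC%A6#top')

print(parts.scheme)    # https           (protocol)
print(parts.hostname)  # www.baidu.com   (host)
print(parts.port)      # 443             (port)
print(parts.path)      # /s              (path)
print(parts.query)     # wd=%E5%91%A8%E6%9D%B0%E4%BC%A6  (query parameters)
print(parts.fragment)  # top             (fragment / anchor)

# With no explicit port, .port is None and the client uses the scheme default (443 for https)
print(urlsplit('https://www.baidu.com/s').port)  # None
```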

Encoding and decoding

GET requests

`urllib.parse.quote('周杰伦')` percent-encodes the keyword as UTF-8: `%E5%91%A8%E6%9D%B0%E4%BC%A6`

```python
import urllib.request
import urllib.parse

url = 'https://www.baidu.com/s?wd='

# Percent-encode the Chinese keyword so it can go into the URL
name = urllib.parse.quote('周杰伦')
# print(name)

# https://www.baidu.com/s?wd=%E5%91%A8%E6%9D%B0%E4%BC%A6
url = url + name
# print(url)

headers = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/125.0.0.0 Safari/537.36'
}

request = urllib.request.Request(url=url, headers=headers)
response = urllib.request.urlopen(request)
content = response.read().decode('utf8')
print(content)
```
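A quick offline check that the encoding is reversible, and a note on which characters `quote()` leaves alone by default:

```python
from urllib.parse import quote, unquote

encoded = quote('周杰伦')
print(encoded)           # %E5%91%A8%E6%9D%B0%E4%BC%A6
print(unquote(encoded))  # 周杰伦

# quote() treats '/' as safe by default; pass safe='' to encode it as well
print(quote('a b/c'))           # a%20b/c
print(quote('a b/c', safe=''))  # a%20b%2Fc
```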

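When there are several parameters, `urllib.parse.urlencode()` encodes a whole dict into a query string:

```python
data = {
    'wd': '周杰伦',
    'sex': '男',
    'location': '中国台湾省'
}
urllib.parse.urlencode(data)
# wd=%E5%91%A8%E6%9D%B0%E4%BC%A6&sex=%E7%94%B7&location=%E4%B8%AD%E5%9B%BD%E5%8F%B0%E6%B9%BE%E7%9C%81
```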

```python
import urllib.request
import urllib.parse

# https://www.baidu.com/s?wd=周杰伦&sex=男&location=中国台湾省
base_url = 'https://www.baidu.com/s?'

data = {
    'wd': '周杰伦',
    'sex': '男',
    'location': '中国台湾省'
}

new_data = urllib.parse.urlencode(data)
# print(urllib.parse.urlencode(data))

url = base_url + new_data

headers = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/125.0.0.0 Safari/537.36'
}

request = urllib.request.Request(url=url, headers=headers)
response = urllib.request.urlopen(request)
content = response.read().decode('utf8')
print(content)
```
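`urllib.parse.parse_qs` reverses `urlencode()`, which is handy for checking what a query string actually contains. This sketch runs offline.

```python
from urllib.parse import parse_qs, urlencode

data = {'wd': '周杰伦', 'sex': '男', 'location': '中国台湾省'}
query = urlencode(data)
print(query)
# wd=%E5%91%A8%E6%9D%B0%E4%BC%A6&sex=%E7%94%B7&location=%E4%B8%AD%E5%9B%BD%E5%8F%B0%E6%B9%BE%E7%9C%81

# Each value comes back as a list, because a key may appear more than once
print(parse_qs(query))
# {'wd': ['周杰伦'], 'sex': ['男'], 'location': ['中国台湾省']}

# Unlike quote(), urlencode() encodes spaces as '+'
print(urlencode({'q': 'hello world'}))  # q=hello+world
```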

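POST requests

POST parameters are not appended to the URL; they go into the `data` argument when customizing the request object. They must also be encoded: `urlencode()` produces a `str`, and `.encode('utf-8')` turns it into the `bytes` that `urlopen()` requires.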

```python
import json
import urllib.request
import urllib.parse

url = 'https://fanyi.baidu.com/sug'

headers = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/125.0.0.0 Safari/537.36'
}

data = {
    'kw': 'spider'
}

# POST parameters must be encoded: urlencode() -> str, .encode() -> bytes
data = urllib.parse.urlencode(data).encode('utf-8')

request = urllib.request.Request(url=url, data=data, headers=headers)
response = urllib.request.urlopen(request)
content = response.read().decode('utf-8')
print(type(content))  # <class 'str'>

# str -> JSON object (a Python dict)
obj = json.loads(content)
print(obj)
```
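The encoding steps can be inspected without sending anything. This sketch shows the `str` to `bytes` conversion and that `Request` switches to POST on its own once `data` is set.

```python
import urllib.parse
import urllib.request

data = urllib.parse.urlencode({'kw': 'spider'})
print(repr(data))  # 'kw=spider'  (str)

body = data.encode('utf-8')
print(repr(body))  # b'kw=spider'  (bytes, what urlopen() expects for POST data)

request = urllib.request.Request(url='https://fanyi.baidu.com/sug', data=body)
print(request.get_method())  # POST (chosen automatically because data is not None)
print(request.full_url)      # https://fanyi.baidu.com/sug (the parameters are not in the URL)
```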

Ajax request: first page of Douban movies

```python
import urllib.request

# Fetch the first page of Douban movies
url = 'https://movie.douban.com/j/chart/top_list?type=5&interval_id=100%3A90&action=&start=0&limit=20'

headers = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/125.0.0.0 Safari/537.36'
}

request = urllib.request.Request(url=url, headers=headers)
response = urllib.request.urlopen(request)
content = response.read().decode('utf-8')

# Save the JSON response; the with block closes the file afterwards
with open('douban.json', 'w', encoding='utf-8') as fp:
    fp.write(content)
```

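Ajax requests: first 10 pages of Douban movies

1. Customize the request object
2. Get the response data
3. Save (download) the data

Entry point: `if __name__ == '__main__':`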

```python
import urllib.request
import urllib.parse

# Fetch the first 10 pages of Douban movie data
# https://movie.douban.com/j/chart/top_list?type=5&interval_id=100%3A90&action=&start=0&limit=20
# https://movie.douban.com/j/chart/top_list?type=5&interval_id=100%3A90&action=&start=20&limit=20
# https://movie.douban.com/j/chart/top_list?type=5&interval_id=100%3A90&action=&start=40&limit=20

# Pattern: start = (page - 1) * 20
# page    1   2   3   4   5
# start   0  20  40  60  80


def create_request(page):
    base_url = 'https://movie.douban.com/j/chart/top_list?type=5&interval_id=100%3A90&action=&'
    data = {
        'start': (page - 1) * 20,
        'limit': 20
    }
    data = urllib.parse.urlencode(data)
    url = base_url + data
    headers = {
        'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/125.0.0.0 Safari/537.36'
    }
    request = urllib.request.Request(url=url, headers=headers)
    return request


def get_response(request):
    response = urllib.request.urlopen(request)
    content = response.read().decode('utf-8')
    return content


def down_load(page, content):
    with open('douban_' + str(page) + '.json', 'w', encoding='utf-8') as wf:
        wf.write(content)


if __name__ == '__main__':
    start_page = int(input('Enter the start page: '))
    end_page = int(input('Enter the end page: '))
    for page in range(start_page, end_page + 1):
        # Customize the request object for each page
        request = create_request(page)
        # Get the response data
        content = get_response(request)
        # Save it
        down_load(page, content)
```
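The `start = (page - 1) * 20` rule can be checked offline by only building the URLs. `page_url` is a hypothetical helper, not part of the code above; it mirrors what `create_request` puts into the URL.

```python
import urllib.parse

BASE_URL = 'https://movie.douban.com/j/chart/top_list?type=5&interval_id=100%3A90&action=&'


def page_url(page, page_size=20):
    """Hypothetical helper: URL for a 1-based page number."""
    params = {'start': (page - 1) * page_size, 'limit': page_size}
    return BASE_URL + urllib.parse.urlencode(params)


for page in range(1, 4):
    print(page_url(page))
# ...&action=&start=0&limit=20
# ...&action=&start=20&limit=20
# ...&action=&start=40&limit=20
```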

Ajax requests: KFC restaurant locations in Beijing

```python
import urllib.request
import urllib.parse

# Page 1
# https://www.kfc.com.cn/kfccda/ashx/GetStoreList.ashx?op=cname
# POST
# cname: 北京
# pid:
# pageIndex: 1
# pageSize: 10

# Page 2
# https://www.kfc.com.cn/kfccda/ashx/GetStoreList.ashx?op=cname
# POST
# cname: 北京
# pid:
# pageIndex: 2
# pageSize: 10


def create_request(page):
    base_url = 'https://www.kfc.com.cn/kfccda/ashx/GetStoreList.ashx?op=cname'
    headers = {
        'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/125.0.0.0 Safari/537.36'
    }
    data = {
        'cname': '北京',
        'pid': '',
        'pageIndex': page,
        'pageSize': 10
    }
    # POST form data must be encoded to bytes
    data = urllib.parse.urlencode(data).encode('utf-8')
    request = urllib.request.Request(url=base_url, data=data, headers=headers)
    return request


def get_response(request):
    response = urllib.request.urlopen(request)
    content = response.read().decode('utf-8')
    return content


def down_load(page, content):
    with open('kfc_' + str(page) + '.json', 'w', encoding='utf-8') as wf:
        wf.write(content)


if __name__ == '__main__':
    start_page = int(input('Enter the start page: '))
    end_page = int(input('Enter the end page: '))
    for page in range(start_page, end_page + 1):
        request = create_request(page)
        content = get_response(request)
        down_load(page, content)
```
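With POST pagination the URL stays the same for every page and only the encoded body changes. `form_body` is a hypothetical helper that mirrors the body built in `create_request`, so the difference can be seen without a network call.

```python
import urllib.parse

BASE_URL = 'https://www.kfc.com.cn/kfccda/ashx/GetStoreList.ashx?op=cname'


def form_body(page):
    """Hypothetical helper: the encoded POST body for one page."""
    data = {'cname': '北京', 'pid': '', 'pageIndex': page, 'pageSize': 10}
    return urllib.parse.urlencode(data).encode('utf-8')


for page in (1, 2):
    print(BASE_URL, form_body(page))
# Same URL both times; only pageIndex in the body differs:
# b'cname=%E5%8C%97%E4%BA%AC&pid=&pageIndex=1&pageSize=10'
# b'cname=%E5%8C%97%E4%BA%AC&pid=&pageIndex=2&pageSize=10'
```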

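HTTPError and URLError

`HTTPError`: the server answered with an error status code, for example 404 when the path is wrong. It is a subclass of `URLError`.

`URLError`: no valid HTTP response came back at all, for example because the domain name or the port is wrong.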
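A minimal sketch of handling both errors, assuming you just want to log the failure and carry on. `fetch` is a hypothetical helper. Because `HTTPError` is a subclass of `URLError`, it has to be caught first, otherwise the `URLError` branch would swallow it. The URL `http://` has no host, so urllib raises `URLError` locally before any network access, which makes it a convenient offline check.

```python
import urllib.error
import urllib.request


def fetch(url, timeout=10):
    """Hypothetical helper: return the decoded body, or None if the request failed."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return response.read().decode('utf-8')
    except urllib.error.HTTPError as e:
        # Must come first: HTTPError is a subclass of URLError
        print('HTTPError:', e.code, e.reason)
    except urllib.error.URLError as e:
        # No HTTP response: bad host name, wrong port, no network, ...
        print('URLError:', e.reason)
    return None


print(fetch('http://'))  # prints "URLError: no host given", then None
```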

Weibo login with a cookie

```python
import urllib.request

url = 'https://weibo.cn/7278281557/info'

headers = {
    # With a logged-in cookie you can open pages that require login
    'Cookie': 'SCF=AoU7bQRwxo-YoBcPj9viD7uPYruPTlAD9Tze3kFz1Gl2J3r46ArWSU8JX5OZ73fdq_WKlsSvcxBrNDdsK14p5hc.; '
              'SUB=_2A25LYswbDeRhGeFM7FoT-C_JzjuIHXVoHkHTrDV6PUJbktAGLVbakW1NQKQzV5lr5ytRXCBieJfiPTxUBuLCklnl; '
              'SUBP=0033WrSXqPxfM725Ws9jqgMF55529P9D9WFBVN93Q_aw4plWnDQDi8sV5NHD95QNeoMReonpSK-NWs4DqcjMi--NiK.Xi-2Ri--ciKnRi-zNS0zN1hzReK-fS7tt; '
              'SSOLoginState=1718008908; ALF=1720600908; _T_WM=19f4c6b4c9b5f298db45a9719f1fd1ee',
    # Referer tells the server which page the request came from; it is commonly checked for image hotlink protection
    'Referer': 'https://weibo.cn/',
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/125.0.0.0 Safari/537.36'
}

request = urllib.request.Request(url=url, headers=headers)
response = urllib.request.urlopen(request)
content = response.read().decode()

with open('weibo.html', 'w', encoding='utf-8') as wf:
    wf.write(content)
```

Handlers

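```python
# Create a handler object
handler = urllib.request.HTTPHandler()

# Create an opener object
opener = urllib.request.build_opener(handler)

# Call open()
response = opener.open(request)
```

Together these three statements do the same job as `urllib.request.urlopen(request)`, but this form lets you plug in other handlers, such as a proxy handler.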

```python
import urllib.request

url = 'https://www.baidu.com'

headers = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/125.0.0.0 Safari/537.36'
}

request = urllib.request.Request(url=url, headers=headers)

# Create a handler object
handler = urllib.request.HTTPHandler()
# Create an opener object
opener = urllib.request.build_opener(handler)
# Call open()
response = opener.open(request)

content = response.read().decode('utf-8')
print(content)
```

Proxies

```python
proxies = {
    'http': '117.42.94.79:17622'
}

# Create a handler object
handler = urllib.request.ProxyHandler(proxies=proxies)
```

```python
import urllib.request

url = 'http://www.baidu.com/s?wd=ip'

headers = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/125.0.0.0 Safari/537.36'
}

request = urllib.request.Request(url=url, headers=headers)

# Only http:// URLs go through this proxy; an https:// URL would need an 'https' key
proxies = {
    'http': '117.42.94.79:17622'
}

# Create a handler object
handler = urllib.request.ProxyHandler(proxies=proxies)
# Create an opener object
opener = urllib.request.build_opener(handler)
# Call open()
response = opener.open(request)

content = response.read().decode('utf-8')

with open('daili.html', 'w', encoding='utf-8') as wf:
    wf.write(content)
```
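The handler and opener steps can be wrapped in a small function so that each request can use a different proxy mapping. `build_proxy_opener` is a hypothetical helper. Building the opener does not contact the proxy (only `opener.open(...)` does), so the sketch can be inspected offline; passing your own `ProxyHandler` replaces the default one that reads proxy settings from environment variables.

```python
import urllib.request


def build_proxy_opener(proxies):
    """Hypothetical helper: handler -> opener for one proxy mapping."""
    handler = urllib.request.ProxyHandler(proxies=proxies)
    return urllib.request.build_opener(handler)


proxies = {'http': '117.42.94.79:17622'}
opener = build_proxy_opener(proxies)

# Exactly one ProxyHandler: ours replaced the default environment-based one
proxy_handlers = [h for h in opener.handlers if isinstance(h, urllib.request.ProxyHandler)]
print(len(proxy_handlers))        # 1
print(proxy_handlers[0].proxies)  # {'http': '117.42.94.79:17622'}
```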

Proxy pool

```python
import random

proxies_pool = [
    {'http': '117.42.94.79:17622'},
    {'http': '117.42.94.78:17623'}
]

# Pick one proxy at random
proxies = random.choice(proxies_pool)
```

Parsing

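Installing XPath.crx

Install the XPath.crx extension in the browser; with the browser open, press Ctrl+Shift+X to toggle it.

Parsing a local file with XPath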

```html
<!DOCTYPE html>
<html lang="en">
<head>
    <meta charset="UTF-8"/>
    <title>Title</title>
</head>
<body>
<ul>
    <li id="l1" class="c1">北京</li>
    <li id="l2">上海</li>
    <li id="c3">广州</li>
    <li id="c4">深圳</li>
</ul>
</body>
</html>
```

```python
from lxml import etree

# XPath parsing
# Local file:            etree.parse()
# Server response data:  etree.HTML()

# etree.parse() uses an XML parser by default, so the file must be well-formed (note the self-closed <meta/>)
tree = etree.parse('Xpath解析本地文件.html')
# print(tree)

# li elements under ul
li_list = tree.xpath('//body/ul/li')
print(li_list)
print(len(li_list))  # 4

# All li elements that have an id attribute
li_list = tree.xpath('//ul/li[@id]')
print(li_list)
print(len(li_list))  # 4 (every li in this file has an id)

# Text of all li elements that have an id attribute
li_list = tree.xpath('//ul/li[@id]/text()')
print(li_list)  # ['北京', '上海', '广州', '深圳']
print(len(li_list))  # 4

# Text of the li whose id is l1
li_list = tree.xpath('//ul/li[@id="l1"]/text()')
print(li_list)  # ['北京']
print(len(li_list))  # 1

# Value of the class attribute of the li whose id is l1
li_list = tree.xpath('//ul/li[@id="l1"]/@class')
print(li_list)  # ['c1']
print(len(li_list))  # 1

# li elements whose id contains "l"
li_list = tree.xpath('//ul/li[contains(@id,"l")]/text()')
print(li_list)  # ['北京', '上海']
print(len(li_list))  # 2

# li elements whose id starts with "c"
li_list = tree.xpath('//ul/li[starts-with(@id,"c")]/text()')
print(li_list)  # ['广州', '深圳']
print(len(li_list))  # 2

# Text of the li whose id is l1 AND whose class is c1
li_list = tree.xpath('//ul/li[@id="l1" and @class="c1"]/text()')
print(li_list)  # ['北京']
print(len(li_list))  # 1

# Text of the li whose id is l1 OR whose class is c1 (| is a union, so the shared node appears once)
li_list = tree.xpath('//ul/li[@id="l1"]/text() | //ul/li[@class="c1"]/text()')
print(li_list)  # ['北京']
print(len(li_list))  # 1
```
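For data that comes back from a server, the comments above point to `etree.HTML()`, which takes HTML text (for example a decoded response body) instead of a file path. A minimal offline sketch, assuming the same list is held in a string:

```python
from lxml import etree

# The same list as the local file, held in a string the way a decoded response would be
html = '''
<html><body><ul>
  <li id="l1" class="c1">北京</li>
  <li id="l2">上海</li>
  <li id="c3">广州</li>
  <li id="c4">深圳</li>
</ul></body></html>
'''

tree = etree.HTML(html)
print(len(tree.xpath('//ul/li[@id]')))                      # 4
print(tree.xpath('//ul/li[starts-with(@id,"c")]/text()'))  # ['广州', '深圳']
print(tree.xpath('//ul/li[@id="l1"]/@class'))               # ['c1']
```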

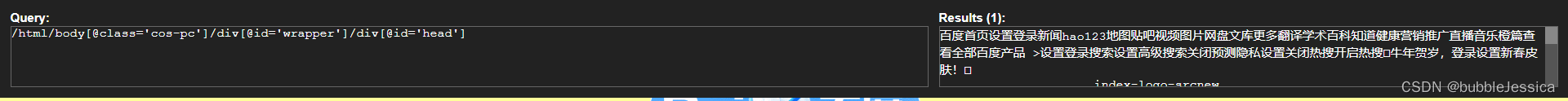

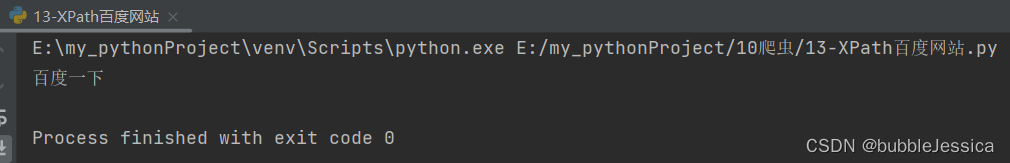

Scraping the Baidu homepage

Core code

```python
# Parse the page source and extract the data we want
from lxml import etree

# Parse the server response
tree = etree.HTML(content)

# Extract the target data
result = tree.xpath('//input[@id="su"]/@value')[0]
print(result)
```

```python
# Steps:
# 1. Get the page source
# 2. Parse the server response with etree.HTML
# 3. Print the result
import urllib.request

from lxml import etree

url = 'https://www.baidu.com'

headers = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/125.0.0.0 Safari/537.36'
}

# Customize the request object
request = urllib.request.Request(url=url, headers=headers)
# Simulate a browser visiting the server
response = urllib.request.urlopen(request)
# Get the page source
content = response.read().decode('utf8')

# Parse the server response
tree = etree.HTML(content)
# Extract the value attribute of the input whose id is su
result = tree.xpath('//input[@id="su"]/@value')[0]
print(result)
```

JsonPath

```python
import json
import urllib.request

import jsonpath

url = 'https://dianying.taobao.com/cityAction.json?_ksTS=1718253005566_19&jsoncallback=jsonp20&action=cityAction&n_s=new&event_submit_doLocate=true'

headers = {
    'Cookie': 'cna=BWjTHN2sx34CAXBeYTZVYSoT; '
              't=9dc4fe3cd8292c5d6960214d0aabd4b7; '
              'cookie2=122c7f20c925da7e46dc8c127442cdee; '
              'v=0; _tb_token_=e5aebe87341b6; xlly_s=1; '
              'isg=BK-vc_rpFUz4FRFwwK-kv8L5PsO5VAN2gzvOIME8S54lEM8SySSTxq3GkgAuaNvu',
    'Referer': 'https://dianying.taobao.com/',
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/126.0.0.0 Safari/537.36'
}

request = urllib.request.Request(url=url, headers=headers)
response = urllib.request.urlopen(request)
content = response.read().decode('utf-8')

# The response is JSONP (wrapped in the jsonp20 callback); keep only the JSON inside the parentheses
content = content.split('(')[1].split(')')[0]

with open('解析淘票票.json', 'w', encoding='utf-8') as wf:
    wf.write(content)

with open('解析淘票票.json', 'r', encoding='utf-8') as rf:
    obj = json.load(rf)

# $..name: every "name" value at any depth
city_list = jsonpath.jsonpath(obj, '$..name')
print(city_list)
```
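`split('(')` breaks as soon as the payload itself contains a parenthesis. This sketch swaps in a stdlib alternative: a regex that strips only the outer callback, plus a recursive search that collects every value under a key, roughly what `$..name` does (one difference: `jsonpath.jsonpath` returns `False` when nothing matches, while this returns `[]`). The sample payload and its keys are hypothetical, not the real Taopiaopiao response.

```python
import json
import re


def unwrap_jsonp(text):
    """Strip a wrapper like callback({...}); assumes a single top-level call."""
    match = re.search(r'^\s*[\w$.]+\s*\((.*)\)\s*;?\s*$', text, re.S)
    if match is None:
        raise ValueError('not a JSONP response')
    return match.group(1)


def find_values(obj, key):
    """Collect every value stored under `key` at any depth (similar to $..key)."""
    found = []
    if isinstance(obj, dict):
        for k, v in obj.items():
            if k == key:
                found.append(v)
            found.extend(find_values(v, key))
    elif isinstance(obj, list):
        for item in obj:
            found.extend(find_values(item, key))
    return found


# Hypothetical payload; the "(" inside a value would break split('(')
sample = 'jsonp20({"data": {"A": [{"name": "Anqing (AQ)"}], "B": [{"name": "北京"}]}})'
obj = json.loads(unwrap_jsonp(sample))
print(find_values(obj, 'name'))  # ['Anqing (AQ)', '北京']
```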

BeautifulSoup

Basic usage

```html
<!DOCTYPE html>
<html lang="en">
<head>
    <meta charset="UTF-8">
    <title>Title</title>
</head>
<body>
<div>
    <ul>
        <li id="l1">张三</li>
        <li id="l2">李四</li>
        <li>王五</li>
        <a href="" id="" class="a1">尚硅谷</a>
        <span>嘿嘿嘿</span>
    </ul>
</div>
<a href="" title="a2">百度</a>
<div id="d1">
    <span>哈哈哈</span>
</div>
<p id="p1" class="p1"></p>
</body>
</html>
```

```python
from bs4 import BeautifulSoup

# open() uses the platform default encoding (GBK on Chinese Windows), so pass utf-8 explicitly
with open('bs4基本使用.html', encoding='utf-8') as f:
    soup = BeautifulSoup(f, 'lxml')
# print(soup)

# First node with the given tag name
print(soup.a)
# Attributes of that first node
print(soup.a.attrs)

# Methods
# print(soup.find('a', title="a2"))
# print(soup.find('a', class_="a1"))
# print(soup.find_all(['a', 'span']))  # returns a list
# print(soup.find_all('li', limit=2))

# select() takes a CSS selector and returns a list
# print(soup.select('a'))
print(soup.select('.a1'))
print(soup.select('#l1'))

# li elements that have an id attribute
print(soup.select('li[id]'))
print(soup.select('li[id="l2"]'))

# Hierarchy selectors
# Descendant selector: space
# print(soup.select('div li'))
# Child selector: >
print(soup.select('div > ul > li'))
# Several selectors at once: comma
print(soup.select('a,li'))

# Node information
# Node text
obj = soup.select('#d1')[0]
# If the tag contains only text, both .string and get_text() work.
# If it has more than one child node (e.g. whitespace plus tags), .string returns None,
# while get_text() still collects all the text.
print(obj.string)  # None
print(obj.get_text())  # 哈哈哈 (surrounded by the whitespace from the HTML)

# Node attributes
obj = soup.select('#p1')[0]
# name: the tag name
print(obj.name)
# attrs: all attributes as a dict
print(obj.attrs)

# Three ways to read one attribute
print(obj.attrs.get('class'))
print(obj.get('class'))
print(obj['class'])
```
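The `.string` versus `get_text()` rule is easiest to see on a tiny in-memory snippet. This sketch uses Python's built-in `'html.parser'` instead of `'lxml'` so it needs only bs4; the markup is made up for illustration.

```python
from bs4 import BeautifulSoup

html = '<div id="one"><span>hahaha</span></div><div id="two">text<span>hahaha</span></div>'
soup = BeautifulSoup(html, 'html.parser')

one = soup.select_one('#one')
two = soup.select_one('#two')

print(one.string)      # hahaha  (single child, so .string looks inside the <span>)
print(two.string)      # None    (two children: a text node and a <span>)
print(two.get_text())  # texthahaha
```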

Scraping Starbucks menu data

```python
import urllib.request

from bs4 import BeautifulSoup

url = 'https://www.starbucks.com.cn/menu/'
response = urllib.request.urlopen(url)
content = response.read().decode('utf-8')
print(content)

soup = BeautifulSoup(content, 'lxml')

# Relevant page structure:
# <ul class="grid padded-3 product">
#   <li>
#     <a id="menu-product-related-affogato" href="/menu/beverages/coffee-plus-ice-cream/" class="thumbnail">
#       <div class="preview circle" style='background-image: url("/images/products/affogato.jpg")'></div>
#       <strong>阿馥奇朵™</strong>
#     </a>
#   </li>
# </ul>
# XPath equivalent: //ul[@class="grid padded-3 product"]//strong/text()

name_list = soup.select('ul[class="grid padded-3 product"] strong')
for name in name_list:
    print(name.get_text())
```
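`ul[class="grid padded-3 product"]` compares the whole class string, so it stops matching if the site reorders the classes; `ul.grid.padded-3.product` checks each class independently. The sketch below uses a trimmed copy of the markup from the comments above, plus a hypothetical second list with the same classes in a different order, parsed with the built-in `'html.parser'`.

```python
from bs4 import BeautifulSoup

html = '''
<ul class="grid padded-3 product">
  <li><a class="thumbnail" href="/menu/beverages/coffee-plus-ice-cream/">
    <strong>阿馥奇朵™</strong>
  </a></li>
</ul>
<ul class="product grid padded-3">
  <li><strong>other</strong></li>
</ul>
'''
soup = BeautifulSoup(html, 'html.parser')

# Attribute selector: the full class string must match exactly, order included
exact = [s.get_text() for s in soup.select('ul[class="grid padded-3 product"] strong')]
# Class selector: each class is checked on its own, in any order
by_class = [s.get_text() for s in soup.select('ul.grid.padded-3.product strong')]

print(exact)     # ['阿馥奇朵™']
print(by_class)  # ['阿馥奇朵™', 'other']
```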

requests

Requests: HTTP for Humans (Requests 2.18.1 documentation)

```python
import requests

url = 'http://www.baidu.com'
response = requests.get(url=url)

# One type and six attributes
# Type: <class 'requests.models.Response'>
# print(type(response))

# encoding: the encoding used to decode the response
response.encoding = 'utf-8'

# text: the page source as a string
# print(response.text)

# url: the URL
print(response.url)

# content: the raw body as bytes
print(response.content)

# status_code: the status code
print(response.status_code)

# headers: the response headers
print(response.headers)
```

GET requests

```python
import requests

url = 'http://www.baidu.com/s'

headers = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/125.0.0.0 Safari/537.36'
}

data = {
    'wd': '北京'
}

# params: query parameters; requests encodes them and appends them after ?
response = requests.get(url=url, params=data, headers=headers)
content = response.text
print(content)
```

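- Parameters are passed with `params`
- Parameters do not need to be urlencoded by hand
- No separate request object needs to be customized
- The `?` at the end of the resource path is optional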
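The points above can be checked without sending anything: `requests.Request(...).prepare()` builds the final request so its URL can be inspected. A minimal sketch using the same search URL:

```python
import requests

# Build (but do not send) the request to see the URL requests produces
prepared = requests.Request('GET', 'http://www.baidu.com/s', params={'wd': '北京'}).prepare()
print(prepared.url)  # http://www.baidu.com/s?wd=%E5%8C%97%E4%BA%AC

# A trailing ? in the path makes no difference
prepared_q = requests.Request('GET', 'http://www.baidu.com/s?', params={'wd': '北京'}).prepare()
print(prepared_q.url)  # http://www.baidu.com/s?wd=%E5%8C%97%E4%BA%AC
```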

POST requests

```python
import json

import requests

url = 'http://fanyi.baidu.com/sug?'

headers = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/126.0.0.0 Safari/537.36'
}

data = {
    'kw': 'eye'
}

# POST: form fields go in data=; requests encodes them itself
response = requests.post(url=url, data=data, headers=headers)
content = response.text

# json.loads() no longer accepts an encoding argument (removed in Python 3.9)
obj = json.loads(content)
print(obj)
```
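A prepared POST request shows where `data=` ends up: in the body, form-encoded, with the matching Content-Type header, while the URL stays clean. This runs offline. For JSON responses, `response.json()` is a shorter alternative to `json.loads(response.text)`.

```python
import requests

prepared = requests.Request('POST', 'http://fanyi.baidu.com/sug', data={'kw': 'eye'}).prepare()

print(prepared.body)                     # kw=eye
print(prepared.headers['Content-Type'])  # application/x-www-form-urlencoded
print(prepared.url)                      # http://fanyi.baidu.com/sug
```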

Captcha login example

```python
import requests
from bs4 import BeautifulSoup

url = 'https://so.gushiwen.cn/user/login.aspx?from=http%3a%2f%2fso.gushiwen.cn%2fuser%2fcollect.aspx'

headers = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/126.0.0.0 Safari/537.36'
}

# One session for every request, so the captcha and the login share the same cookies
session = requests.session()

# Fetch the login page
response = session.get(url=url, headers=headers)
content = response.text

soup = BeautifulSoup(content, 'lxml')

# Get __VIEWSTATE
viewstate = soup.select('#__VIEWSTATE')[0].attrs.get('value')
# Get __VIEWSTATEGENERATOR
viewstategenerator = soup.select('#__VIEWSTATEGENERATOR')[0].attrs.get('value')
# print(viewstate)
# print(viewstategenerator)

# Get the captcha image
code = soup.select('#imgCode')[0].attrs.get('src')
code_url = 'https://so.gushiwen.cn' + code

response_code = session.get(code_url)
# Images are binary, so use .content
content_code = response_code.content
# 'wb': write the file in binary mode
with open('code.jpg', 'wb') as fp:
    fp.write(content_code)

code_name = input('Enter the captcha: ')

# Click "log in"
data_post = {
    '__VIEWSTATE': viewstate,
    '__VIEWSTATEGENERATOR': viewstategenerator,
    'from': 'https://so.gushiwen.cn/user/collect.aspx',
    'email': '',  # your email
    'pwd': '',  # your password
    'code': code_name,
    'denglu': '登录'
}

response_post = session.post(url=url, headers=headers, data=data_post)
content_post = response_post.text

with open('gushiwen.html', 'w', encoding='utf-8') as wf:
    wf.write(content_post)
```
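ASP.NET forms usually expect every hidden field to be posted back. As an alternative to selecting `__VIEWSTATE` and `__VIEWSTATEGENERATOR` one id at a time, this sketch uses the standard library's `html.parser` to collect all `<input type="hidden">` fields. `HiddenInputParser` is a hypothetical helper and the form snippet is made up for illustration.

```python
from html.parser import HTMLParser


class HiddenInputParser(HTMLParser):
    """Hypothetical helper: collect name -> value for every <input type="hidden">."""

    def __init__(self):
        super().__init__()
        self.fields = {}

    def handle_starttag(self, tag, attrs):
        if tag != 'input':
            return
        attrs = dict(attrs)
        # Attributes without a value come through as None, hence the "or ''"
        if (attrs.get('type') or '').lower() == 'hidden' and attrs.get('name'):
            self.fields[attrs['name']] = attrs.get('value') or ''


# Made-up snippet shaped like an ASP.NET login form
page = '''
<form method="post">
  <input type="hidden" name="__VIEWSTATE" id="__VIEWSTATE" value="abc123" />
  <input type="hidden" name="__VIEWSTATEGENERATOR" id="__VIEWSTATEGENERATOR" value="C93BE1AE" />
  <input type="text" name="email" />
</form>
'''
parser = HiddenInputParser()
parser.feed(page)
print(parser.fields)
# {'__VIEWSTATE': 'abc123', '__VIEWSTATEGENERATOR': 'C93BE1AE'}
```

The resulting dict could then be merged into `data_post` together with the user-entered fields.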