基于R 4.2.2版本演示

一、写在前面

有不少大佬问做机器学习分类能不能用R语言,不想学Python咯。

答曰:可!用GPT或者Kimi转一下就得了呗。

加上最近也没啥内容写了,就帮各位搬运一下吧。

二、R代码实现Logistic分类

(1)导入数据

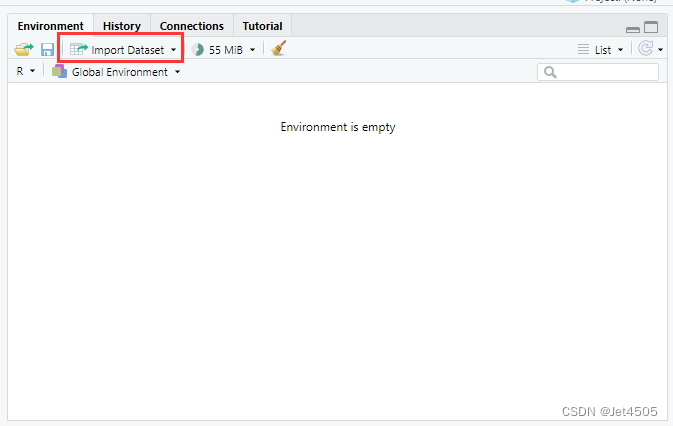

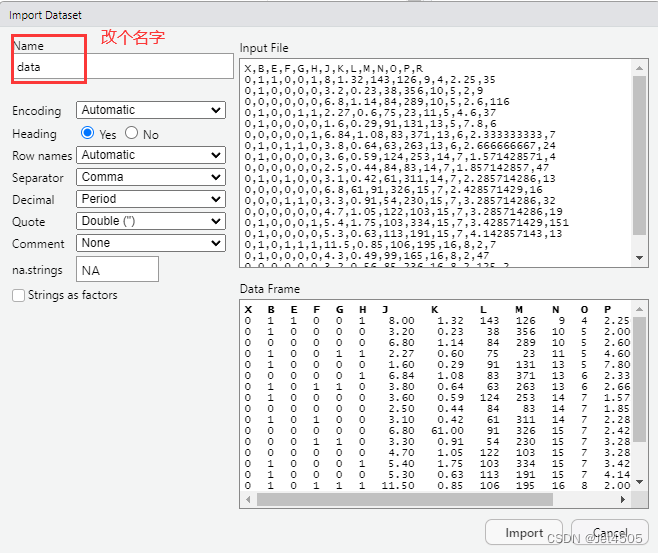

我习惯用RStudio自带的导入功能:

(2)建立LR模型

# Load necessary libraries

library(caret)

library(pROC)

library(ggplot2)# Assume 'data' is your dataframe containing the data

# Set seed to ensure reproducibility

set.seed(123)# Split data into training and validation sets (80% training, 20% validation)

trainIndex <- createDataPartition(data$X, p = 0.8, list = FALSE)

trainData <- data[trainIndex, ]

validData <- data[-trainIndex, ]# Fit logistic regression model on the training set

model <- glm(X ~ ., data = trainData, family = "binomial")# Predict on the training and validation sets

trainPredict <- predict(model, trainData, type = "response")

validPredict <- predict(model, validData, type = "response")主要解读下R里面LR回归的参数吧,也就是这个glm()函数:

在R语言中,glm()函数用于拟合广义线性模型(Generalized Linear Models),包括LR回归。以下是glm()函数的参数:

①formula: 一个公式对象,表示模型的因变量(响应变量)和自变量(解释变量)。对于LR回归,因变量应该是二元的(0和1),表示分类结果。自变量可以包括一个或多个连续或分类变量。

②data: 包含模型所需变量的数据框(data frame)或矩阵。

③family: 指定误差分布和链接函数的家族。对于LR回归,应设置为binomial。这指定了二项分布和逻辑斯蒂(logit)链接函数。

④weights: 可选参数,用于指定观测的权重。在逻辑回归中,这通常用于处理不平衡的数据集,对少数类进行加权以提高其在模型训练过程中的影响力。

⑤subset: 可选参数,用于指定用于拟合模型的数据子集。

⑥start: 可选参数,用于指定模型参数的初始估计值。

⑦etastart: 可选参数,用于指定线性预测器的初始估计值。

⑧mustart: 可选参数,用于指定是否必须提供初始值。

⑨offset: 可选参数,用于指定一个先验估计的已知量,通常用于泊松回归。

⑩control: 可选参数,用于指定控制模型拟合过程的参数。

可选参数主要关注weights吧,毕竟很多现实的数据都是不平衡数据。

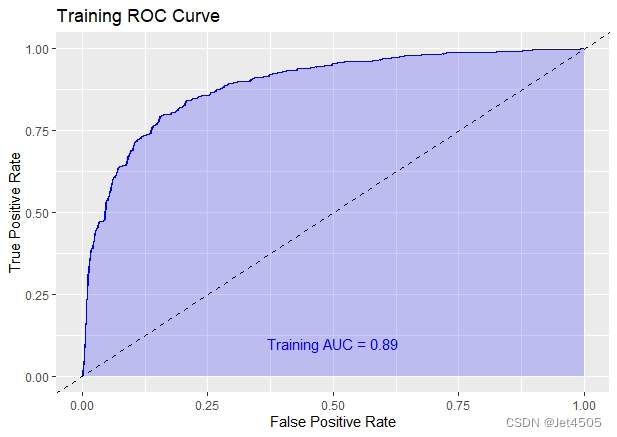

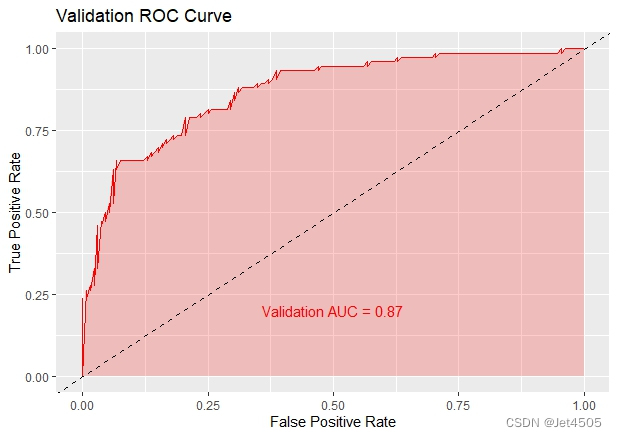

(3)模型评价:ROC曲线

# Convert true values to factor for ROC analysis

trainData$X <- as.factor(trainData$X)

validData$X <- as.factor(validData$X)# Calculate ROC curves and AUC values

trainRoc <- roc(response = trainData$X, predictor = trainPredict)

validRoc <- roc(response = validData$X, predictor = validPredict)# Plot ROC curves with AUC values

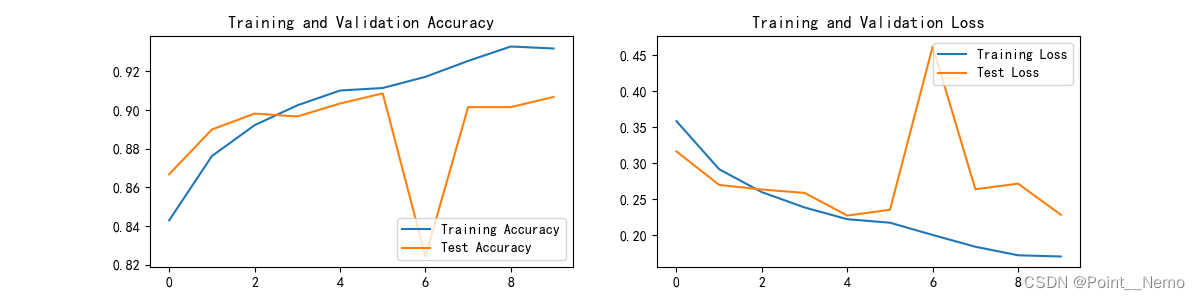

ggplot(data = data.frame(fpr = trainRoc$specificities, tpr = trainRoc$sensitivities), aes(x = 1 - fpr, y = tpr)) +geom_line(color = "blue") +geom_area(alpha = 0.2, fill = "blue") +geom_abline(slope = 1, intercept = 0, linetype = "dashed", color = "black") +ggtitle("Training ROC Curve") +xlab("False Positive Rate") +ylab("True Positive Rate") +annotate("text", x = 0.5, y = 0.1, label = paste("Training AUC =", round(auc(trainRoc), 2)), hjust = 0.5, color = "blue")ggplot(data = data.frame(fpr = validRoc$specificities, tpr = validRoc$sensitivities), aes(x = 1 - fpr, y = tpr)) +geom_line(color = "red") +geom_area(alpha = 0.2, fill = "red") +geom_abline(slope = 1, intercept = 0, linetype = "dashed", color = "black") +ggtitle("Validation ROC Curve") +xlab("False Positive Rate") +ylab("True Positive Rate") +annotate("text", x = 0.5, y = 0.2, label = paste("Validation AUC =", round(auc(validRoc), 2)), hjust = 0.5, color = "red")结果如下:

嗯,R作图很有自己的特色!

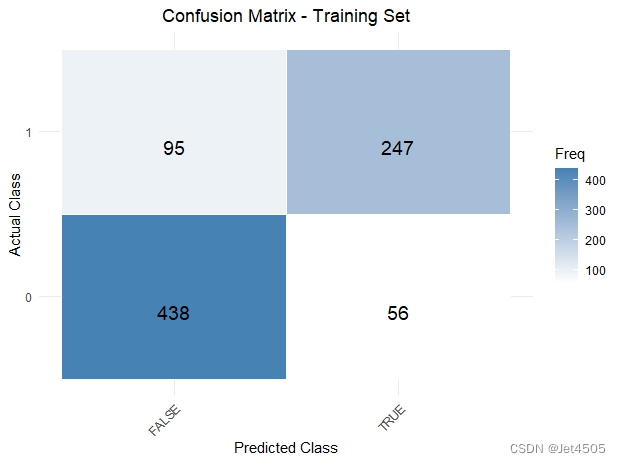

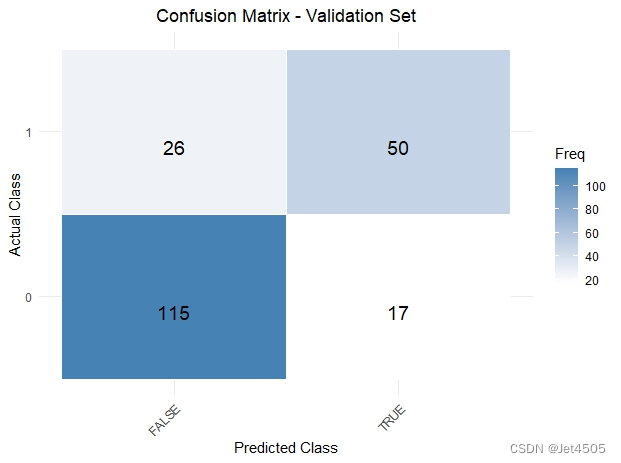

(4)模型评价:混淆矩阵和指标计算

# Function to plot confusion matrix using ggplot2

plot_confusion_matrix <- function(conf_mat, dataset_name) {conf_mat_df <- as.data.frame(as.table(conf_mat))colnames(conf_mat_df) <- c("Actual", "Predicted", "Freq")ggplot(data = conf_mat_df, aes(x = Predicted, y = Actual, fill = Freq)) +geom_tile(color = "white") +geom_text(aes(label = Freq), vjust = 1.5, color = "black", size = 5) +scale_fill_gradient(low = "white", high = "steelblue") +labs(title = paste("Confusion Matrix -", dataset_name, "Set"), x = "Predicted Class", y = "Actual Class") +theme_minimal() +theme(axis.text.x = element_text(angle = 45, hjust = 1), plot.title = element_text(hjust = 0.5))

}# Calculate confusion matrices

confMatTrain <- table(trainData$X, trainPredict >= 0.5)

confMatValid <- table(validData$X, validPredict >= 0.5)# Now call the function to plot and display the confusion matrices

plot_confusion_matrix(confMatTrain, "Training")

plot_confusion_matrix(confMatValid, "Validation")# Extract values for calculations

a_train <- confMatTrain[1, 1]

b_train <- confMatTrain[1, 2]

c_train <- confMatTrain[2, 1]

d_train <- confMatTrain[2, 2]a_valid <- confMatValid[1, 1]

b_valid <- confMatValid[1, 2]

c_valid <- confMatValid[2, 1]

d_valid <- confMatValid[2, 2]# Training Set Metrics

acc_train <- (a_train + d_train) / sum(confMatTrain)

error_rate_train <- 1 - acc_train

sen_train <- d_train / (d_train + c_train)

sep_train <- a_train / (a_train + b_train)

precision_train <- d_train / (b_train + d_train)

F1_train <- (2 * precision_train * sen_train) / (precision_train + sen_train)

MCC_train <- (d_train * a_train - b_train * c_train) / sqrt((d_train + b_train) * (d_train + c_train) * (a_train + b_train) * (a_train + c_train))

auc_train <- roc(response = trainData$X, predictor = trainPredict)$auc# Validation Set Metrics

acc_valid <- (a_valid + d_valid) / sum(confMatValid)

error_rate_valid <- 1 - acc_valid

sen_valid <- d_valid / (d_valid + c_valid)

sep_valid <- a_valid / (a_valid + b_valid)

precision_valid <- d_valid / (b_valid + d_valid)

F1_valid <- (2 * precision_valid * sen_valid) / (precision_valid + sen_valid)

MCC_valid <- (d_valid * a_valid - b_valid * c_valid) / sqrt((d_valid + b_valid) * (d_valid + c_valid) * (a_valid + b_valid) * (a_valid + c_valid))

auc_valid <- roc(response = validData$X, predictor = validPredict)$auc# Print Metrics

cat("Training Metrics\n")

cat("Accuracy:", acc_train, "\n")

cat("Error Rate:", error_rate_train, "\n")

cat("Sensitivity:", sen_train, "\n")

cat("Specificity:", sep_train, "\n")

cat("Precision:", precision_train, "\n")

cat("F1 Score:", F1_train, "\n")

cat("MCC:", MCC_train, "\n")

cat("AUC:", auc_train, "\n\n")cat("Validation Metrics\n")

cat("Accuracy:", acc_valid, "\n")

cat("Error Rate:", error_rate_valid, "\n")

cat("Sensitivity:", sen_valid, "\n")

cat("Specificity:", sep_valid, "\n")

cat("Precision:", precision_valid, "\n")

cat("F1 Score:", F1_valid, "\n")

cat("MCC:", MCC_valid, "\n")

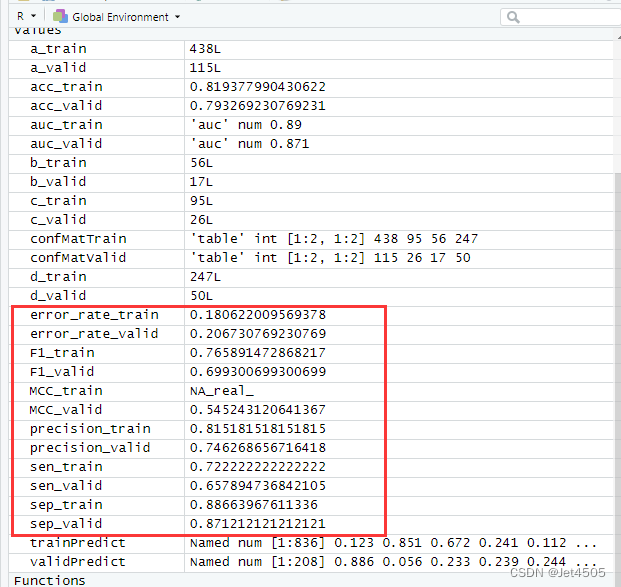

cat("AUC:", auc_valid, "\n")结果如下:

各种指标:

三、全部代码整合

知道各位懒得自己整合:

# Load necessary libraries

library(caret)

library(pROC)

library(ggplot2)# Assume 'data' is your dataframe containing the data

# Set seed to ensure reproducibility

set.seed(123)# Split data into training and validation sets (80% training, 20% validation)

trainIndex <- createDataPartition(data$X, p = 0.8, list = FALSE)

trainData <- data[trainIndex, ]

validData <- data[-trainIndex, ]# Fit logistic regression model on the training set

model <- glm(X ~ ., data = trainData, family = "binomial")# Predict on the training and validation sets

trainPredict <- predict(model, trainData, type = "response")

validPredict <- predict(model, validData, type = "response")# Convert true values to factor for ROC analysis

trainData$X <- as.factor(trainData$X)

validData$X <- as.factor(validData$X)# Calculate ROC curves and AUC values

trainRoc <- roc(response = trainData$X, predictor = trainPredict)

validRoc <- roc(response = validData$X, predictor = validPredict)# Plot ROC curves with AUC values

ggplot(data = data.frame(fpr = trainRoc$specificities, tpr = trainRoc$sensitivities), aes(x = 1 - fpr, y = tpr)) +geom_line(color = "blue") +geom_area(alpha = 0.2, fill = "blue") +geom_abline(slope = 1, intercept = 0, linetype = "dashed", color = "black") +ggtitle("Training ROC Curve") +xlab("False Positive Rate") +ylab("True Positive Rate") +annotate("text", x = 0.5, y = 0.1, label = paste("Training AUC =", round(auc(trainRoc), 2)), hjust = 0.5, color = "blue")ggplot(data = data.frame(fpr = validRoc$specificities, tpr = validRoc$sensitivities), aes(x = 1 - fpr, y = tpr)) +geom_line(color = "red") +geom_area(alpha = 0.2, fill = "red") +geom_abline(slope = 1, intercept = 0, linetype = "dashed", color = "black") +ggtitle("Validation ROC Curve") +xlab("False Positive Rate") +ylab("True Positive Rate") +annotate("text", x = 0.5, y = 0.2, label = paste("Validation AUC =", round(auc(validRoc), 2)), hjust = 0.5, color = "red")# Function to plot confusion matrix using ggplot2 and automatically display it

plot_confusion_matrix <- function(conf_mat, dataset_name) {conf_mat_df <- as.data.frame(as.table(conf_mat))colnames(conf_mat_df) <- c("Actual", "Predicted", "Freq")# Create the plotp <- ggplot(data = conf_mat_df, aes(x = Predicted, y = Actual, fill = Freq)) +geom_tile(color = "white") +geom_text(aes(label = Freq), vjust = 1.5, color = "black", size = 5) +scale_fill_gradient(low = "white", high = "steelblue") +labs(title = paste("Confusion Matrix -", dataset_name, "Set"), x = "Predicted Class", y = "Actual Class") +theme_minimal() +theme(axis.text.x = element_text(angle = 45, hjust = 1), plot.title = element_text(hjust = 0.5))# Display the plotprint(p)

}# Calculate confusion matrices

confMatTrain <- table(trainData$X, trainPredict >= 0.5)

confMatValid <- table(validData$X, validPredict >= 0.5)# Now call the function to plot and display the confusion matrices

plot_confusion_matrix(confMatTrain, "Training")

plot_confusion_matrix(confMatValid, "Validation")# Extract values for calculations

a_train <- confMatTrain[1, 1]

b_train <- confMatTrain[1, 2]

c_train <- confMatTrain[2, 1]

d_train <- confMatTrain[2, 2]a_valid <- confMatValid[1, 1]

b_valid <- confMatValid[1, 2]

c_valid <- confMatValid[2, 1]

d_valid <- confMatValid[2, 2]# Training Set Metrics

acc_train <- (a_train + d_train) / sum(confMatTrain)

error_rate_train <- 1 - acc_train

sen_train <- d_train / (d_train + c_train)

sep_train <- a_train / (a_train + b_train)

precision_train <- d_train / (b_train + d_train)

F1_train <- (2 * precision_train * sen_train) / (precision_train + sen_train)

MCC_train <- (d_train * a_train - b_train * c_train) / sqrt((d_train + b_train) * (d_train + c_train) * (a_train + b_train) * (a_train + c_train))

auc_train <- roc(response = trainData$X, predictor = trainPredict)$auc# Validation Set Metrics

acc_valid <- (a_valid + d_valid) / sum(confMatValid)

error_rate_valid <- 1 - acc_valid

sen_valid <- d_valid / (d_valid + c_valid)

sep_valid <- a_valid / (a_valid + b_valid)

precision_valid <- d_valid / (b_valid + d_valid)

F1_valid <- (2 * precision_valid * sen_valid) / (precision_valid + sen_valid)

MCC_valid <- (d_valid * a_valid - b_valid * c_valid) / sqrt((d_valid + b_valid) * (d_valid + c_valid) * (a_valid + b_valid) * (a_valid + c_valid))

auc_valid <- roc(response = validData$X, predictor = validPredict)$auc# Print Metrics

cat("Training Metrics\n")

cat("Accuracy:", acc_train, "\n")

cat("Error Rate:", error_rate_train, "\n")

cat("Sensitivity:", sen_train, "\n")

cat("Specificity:", sep_train, "\n")

cat("Precision:", precision_train, "\n")

cat("F1 Score:", F1_train, "\n")

cat("MCC:", MCC_train, "\n")

cat("AUC:", auc_train, "\n\n")cat("Validation Metrics\n")

cat("Accuracy:", acc_valid, "\n")

cat("Error Rate:", error_rate_valid, "\n")

cat("Sensitivity:", sen_valid, "\n")

cat("Specificity:", sep_valid, "\n")

cat("Precision:", precision_valid, "\n")

cat("F1 Score:", F1_valid, "\n")

cat("MCC:", MCC_valid, "\n")

cat("AUC:", auc_valid, "\n")四、最后

亲测:代码调试还是的GPT-4,Kimi还是不太行。

数据嘛:

链接:https://pan.baidu.com/s/1rEf6JZyzA1ia5exoq5OF7g?pwd=x8xm

提取码:x8x