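1. Introduction

Prometheus: a very popular open-source monitoring and alerting system.

How it works: Prometheus periodically scrapes the state of monitored components over HTTP. The HTTP endpoint that exposes a component's metrics is called an exporter.

Ready-made exporters exist for most common components, for example HAProxy, Nginx, MySQL, and Linux system information (disk, memory, CPU, network, and so on).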
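To illustrate what an exporter's HTTP endpoint returns, the sketch below parses a few lines in the Prometheus text exposition format. The sample values and the helper name `metric_value` are invented for this example; against a real exporter the same function could read from `curl -s http://<host>:9100/metrics` instead.

```shell
#!/bin/sh
# Sample exporter output in the Prometheus text exposition format.
# The values are illustrative, not taken from a real host.
sample_metrics() {
cat <<'EOF'
# HELP node_load1 1m load average.
# TYPE node_load1 gauge
node_load1 0.42
# HELP node_cpu_seconds_total Seconds the cpus spent in each mode.
# TYPE node_cpu_seconds_total counter
node_cpu_seconds_total{cpu="0",mode="idle"} 1234.5
node_cpu_seconds_total{cpu="0",mode="user"} 67.8
EOF
}

# metric_value SERIES: print the value of the first sample whose series
# (metric name plus optional label set) equals SERIES exactly.
metric_value() {
  awk -v name="$1" '$1 == name { print $2; exit }'
}

sample_metrics | metric_value node_load1
sample_metrics | metric_value 'node_cpu_seconds_total{cpu="0",mode="idle"}'
```

Each non-comment line is one sample: a series identifier followed by its current value. Prometheus attaches the scrape timestamp itself.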

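Main features of Prometheus:

- A multi-dimensional data model (a time series is identified by a metric name and a set of key/value labels).

- Very efficient storage: a sample takes about 3.5 bytes on average; 3.2 million time series sampled every 30 seconds and retained for 60 days consume roughly 228 GB of disk.

- A flexible query language (PromQL).

- No dependency on distributed storage; both local and remote storage models are supported.

- Data is collected over HTTP using a pull model.

- Monitoring targets can be found through service discovery or static configuration.

- Multiple modes of graphing and dashboard support, with friendly visualization.

- Pushing time series is supported through an intermediary gateway.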
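Pushing through an intermediary gateway can be sketched as follows: a short-lived job sends its samples to a Pushgateway over HTTP, and Prometheus then scrapes the gateway like any other target. The host name `pushgateway.example.org`, the job name, and the metric are placeholders; the port 9091 and the `/metrics/job/<job>` path are the Pushgateway's documented defaults.

```shell
#!/bin/sh
# pushgateway_url HOST JOB: URL that accepts pushed samples for JOB.
# Assumption: the gateway listens on its default port 9091.
pushgateway_url() {
  printf 'http://%s:9091/metrics/job/%s\n' "$1" "$2"
}

# render_sample NAME VALUE: one sample in the text exposition format.
render_sample() {
  printf '%s %s\n' "$1" "$2"
}

# push_sample HOST JOB NAME VALUE: send one sample.
# Requires curl and a reachable Pushgateway, so it is not called here.
push_sample() {
  render_sample "$3" "$4" |
    curl --silent --data-binary @- "$(pushgateway_url "$1" "$2")"
}

# Show what would be sent, without touching the network.
pushgateway_url pushgateway.example.org nightly_backup
render_sample backup_duration_seconds 12.5
```

A real job would call `push_sample` as its last step, for example `push_sample pushgateway.example.org nightly_backup backup_duration_seconds 12.5`.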

Grafana: an open-source system for visualizing large volumes of measurement data; it can visualize the metrics collected by Prometheus.

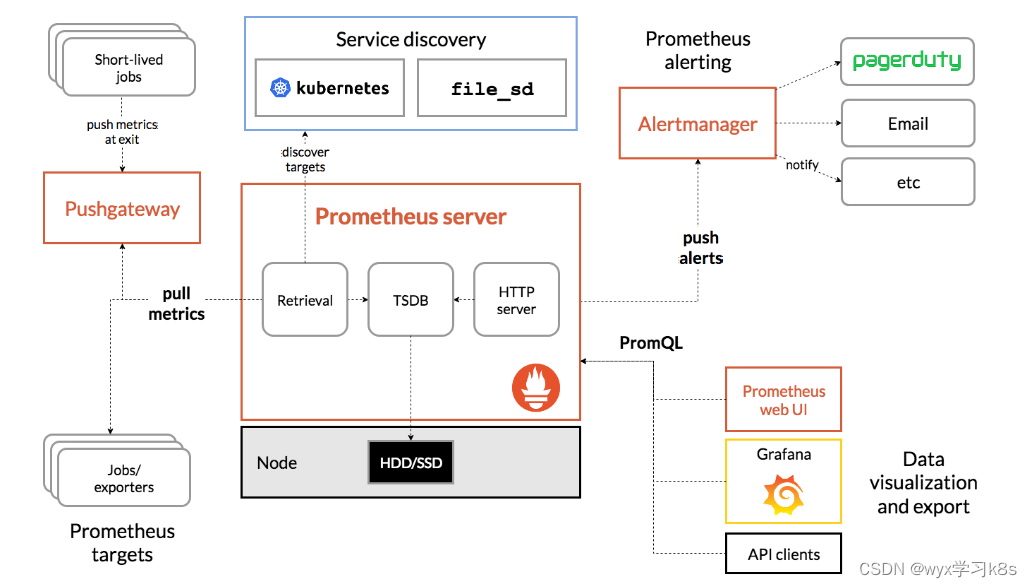

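Prometheus architecture diagram:

Prometheus scrapes metrics from instrumented jobs, either directly or, for short-lived jobs, through an intermediary push gateway. It stores all scraped samples locally and runs rules over this data, either to aggregate and record new time series from existing data or to generate alerts. Grafana or other API consumers can be used to visualize the collected data.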

2. Deploying Prometheus

2.1 Authorization with RBAC

    [root@k8s-node01 k8s-prometheus]# cat prometheus-rbac.yaml
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: prometheus
      namespace: kube-system
      labels:
        kubernetes.io/cluster-service: "true"
        addonmanager.kubernetes.io/mode: Reconcile
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRole
    metadata:
      name: prometheus
      labels:
        kubernetes.io/cluster-service: "true"
        addonmanager.kubernetes.io/mode: Reconcile
    rules:
      - apiGroups:
          - ""
        resources:
          - nodes
          - nodes/metrics
          - services
          - endpoints
          - pods
        verbs:
          - get
          - list
          - watch
      - apiGroups:
          - ""
        resources:
          - configmaps
        verbs:
          - get
      - nonResourceURLs:
          - "/metrics"
        verbs:
          - get
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      name: prometheus
      labels:
        kubernetes.io/cluster-service: "true"
        addonmanager.kubernetes.io/mode: Reconcile
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: prometheus
    subjects:
      - kind: ServiceAccount
        name: prometheus
        namespace: kube-system
    [root@k8s-node01 k8s-prometheus]# kubectl apply -f prometheus-rbac.yaml
    serviceaccount/prometheus created
    clusterrole.rbac.authorization.k8s.io/prometheus created
    clusterrolebinding.rbac.authorization.k8s.io/prometheus created

2.2 Configuration management

A ConfigMap holds the configuration that does not need to be encrypted. In the YAML, only the node IPs (the targets of the kubernetes-nodes job) need to be changed to match your environment.
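One step in the ConfigMap below that is easy to misread is the `__address__` rewrite in the annotation-driven jobs (kubernetes-service-endpoints and kubernetes-pods). Prometheus joins the source labels with `;`, matches the fully anchored regex `([^:]+)(?::\d+)?;(\d+)`, and writes `$1:$2` back, so the port from the `prometheus.io/port` annotation replaces whatever port was discovered. The sketch mimics that rule with `sed` purely for illustration: POSIX extended regexes have no non-capturing groups, so the replacement becomes `\1:\3`, and the addresses are made up.

```shell
#!/bin/sh
# rewrite_address ADDRESS PORT_ANNOTATION
# Mimics the relabel rule: join the two source labels with ';' and replace
# the discovered port with the annotated one. If the joined string does not
# match (for example, the annotation is empty), the address is printed
# unchanged, because a non-matching replace rule leaves the label untouched.
rewrite_address() {
  joined="$1;$2"
  rewritten=$(printf '%s\n' "$joined" |
    sed -n -E 's/^([^:]+)(:[0-9]+)?;([0-9]+)$/\1:\3/p')
  if [ -n "$rewritten" ]; then
    printf '%s\n' "$rewritten"
  else
    printf '%s\n' "$1"
  fi
}

rewrite_address 10.244.1.7:8080 9102   # prints 10.244.1.7:9102
rewrite_address 10.244.1.7 9102        # prints 10.244.1.7:9102
rewrite_address 10.244.1.7:8080 ""     # prints 10.244.1.7:8080
```

The third call shows why targets without a port annotation keep their discovered address.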

    [root@k8s-node01 k8s-prometheus]# cat prometheus-configmap.yaml
    # Prometheus configuration format https://prometheus.io/docs/prometheus/latest/configuration/configuration/
    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: prometheus-config
      namespace: kube-system
      labels:
        kubernetes.io/cluster-service: "true"
        addonmanager.kubernetes.io/mode: EnsureExists
    data:
      prometheus.yml: |
        rule_files:
        - /etc/config/rules/*.rules
        scrape_configs:
        - job_name: prometheus
          static_configs:
          - targets:
            - localhost:9090
        - job_name: kubernetes-nodes
          scrape_interval: 30s
          static_configs:
          - targets:
            - 11.0.1.13:9100
            - 11.0.1.14:9100
        - job_name: kubernetes-apiservers
          kubernetes_sd_configs:
          - role: endpoints
          relabel_configs:
          - action: keep
            regex: default;kubernetes;https
            source_labels:
            - __meta_kubernetes_namespace
            - __meta_kubernetes_service_name
            - __meta_kubernetes_endpoint_port_name
          scheme: https
          tls_config:
            ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
            insecure_skip_verify: true
          bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
        - job_name: kubernetes-nodes-kubelet
          kubernetes_sd_configs:
          - role: node
          relabel_configs:
          - action: labelmap
            regex: __meta_kubernetes_node_label_(.+)
          scheme: https
          tls_config:
            ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
            insecure_skip_verify: true
          bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
        - job_name: kubernetes-nodes-cadvisor
          kubernetes_sd_configs:
          - role: node
          relabel_configs:
          - action: labelmap
            regex: __meta_kubernetes_node_label_(.+)
          - target_label: __metrics_path__
            replacement: /metrics/cadvisor
          scheme: https
          tls_config:
            ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
            insecure_skip_verify: true
          bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
        - job_name: kubernetes-service-endpoints
          kubernetes_sd_configs:
          - role: endpoints
          relabel_configs:
          - action: keep
            regex: true
            source_labels:
            - __meta_kubernetes_service_annotation_prometheus_io_scrape
          - action: replace
            regex: (https?)
            source_labels:
            - __meta_kubernetes_service_annotation_prometheus_io_scheme
            target_label: __scheme__
          - action: replace
            regex: (.+)
            source_labels:
            - __meta_kubernetes_service_annotation_prometheus_io_path
            target_label: __metrics_path__
          - action: replace
            regex: ([^:]+)(?::\d+)?;(\d+)
            replacement: $1:$2
            source_labels:
            - __address__
            - __meta_kubernetes_service_annotation_prometheus_io_port
            target_label: __address__
          - action: labelmap
            regex: __meta_kubernetes_service_label_(.+)
          - action: replace
            source_labels:
            - __meta_kubernetes_namespace
            target_label: kubernetes_namespace
          - action: replace
            source_labels:
            - __meta_kubernetes_service_name
            target_label: kubernetes_name
        - job_name: kubernetes-services
          kubernetes_sd_configs:
          - role: service
          metrics_path: /probe
          params:
            module:
            - http_2xx
          relabel_configs:
          - action: keep
            regex: true
            source_labels:
            - __meta_kubernetes_service_annotation_prometheus_io_probe
          - source_labels:
            - __address__
            target_label: __param_target
          - replacement: blackbox
            target_label: __address__
          - source_labels:
            - __param_target
            target_label: instance
          - action: labelmap
            regex: __meta_kubernetes_service_label_(.+)
          - source_labels:
            - __meta_kubernetes_namespace
            target_label: kubernetes_namespace
          - source_labels:
            - __meta_kubernetes_service_name
            target_label: kubernetes_name
        - job_name: kubernetes-pods
          kubernetes_sd_configs:
          - role: pod
          relabel_configs:
          - action: keep
            regex: true
            source_labels:
            - __meta_kubernetes_pod_annotation_prometheus_io_scrape
          - action: replace
            regex: (.+)
            source_labels:
            - __meta_kubernetes_pod_annotation_prometheus_io_path
            target_label: __metrics_path__
          - action: replace
            regex: ([^:]+)(?::\d+)?;(\d+)
            replacement: $1:$2
            source_labels:
            - __address__
            - __meta_kubernetes_pod_annotation_prometheus_io_port
            target_label: __address__
          - action: labelmap
            regex: __meta_kubernetes_pod_label_(.+)
          - action: replace
            source_labels:
            - __meta_kubernetes_namespace
            target_label: kubernetes_namespace
          - action: replace
            source_labels:
            - __meta_kubernetes_pod_name
            target_label: kubernetes_pod_name
        alerting:
          alertmanagers:
          - static_configs:
            - targets: ["alertmanager:80"]
    [root@k8s-node01 k8s-prometheus]# kubectl apply -f prometheus-configmap.yaml
    configmap/prometheus-config created

2.3 Deploying Prometheus as a StatefulSet

A StorageClass is used here for dynamic provisioning so that Prometheus data is persisted.
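With dynamic provisioning, the StatefulSet controller creates one PersistentVolumeClaim per replica from each entry in volumeClaimTemplates, named `<template>-<statefulset>-<ordinal>`. The helper below only assembles that name so the claim can be looked up after deployment; the function name `pvc_name` is made up for this sketch, while the template and StatefulSet names come from the manifest that follows.

```shell
#!/bin/sh
# pvc_name TEMPLATE STATEFULSET ORDINAL
# StatefulSet claims are named <volumeClaimTemplate>-<statefulset>-<ordinal>.
pvc_name() {
  printf '%s-%s-%s\n' "$1" "$2" "$3"
}

# Claim for the first (and only) Prometheus replica in this deployment.
pvc_name prometheus-data prometheus 0

# Once the StatefulSet is applied, the claim should report STATUS "Bound":
#   kubectl get pvc -n kube-system "$(pvc_name prometheus-data prometheus 0)"
```

If the claim stays Pending, the storage class named in the manifest is usually the first thing to check.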

    [root@k8s-node01 k8s-prometheus]# cat prometheus-statefulset.yaml
    apiVersion: apps/v1
    kind: StatefulSet
    metadata:
      name: prometheus
      namespace: kube-system
      labels:
        k8s-app: prometheus
        kubernetes.io/cluster-service: "true"
        addonmanager.kubernetes.io/mode: Reconcile
        version: v2.2.1
    spec:
      serviceName: "prometheus"
      replicas: 1
      podManagementPolicy: "Parallel"
      updateStrategy:
        type: "RollingUpdate"
      selector:
        matchLabels:
          k8s-app: prometheus
      template:
        metadata:
          labels:
            k8s-app: prometheus
          annotations:
            scheduler.alpha.kubernetes.io/critical-pod: ''
        spec:
          priorityClassName: system-cluster-critical
          serviceAccountName: prometheus
          initContainers:
            - name: "init-chown-data"
              image: "busybox:latest"
              imagePullPolicy: "IfNotPresent"
              command: ["chown", "-R", "65534:65534", "/data"]
              volumeMounts:
                - name: prometheus-data
                  mountPath: /data
                  subPath: ""
          containers:
            - name: prometheus-server-configmap-reload
              image: "jimmidyson/configmap-reload:v0.1"
              imagePullPolicy: "IfNotPresent"
              args:
                - --volume-dir=/etc/config
                - --webhook-url=http://localhost:9090/-/reload
              volumeMounts:
                - name: config-volume
                  mountPath: /etc/config
                  readOnly: true
              resources:
                limits:
                  cpu: 10m
                  memory: 10Mi
                requests:
                  cpu: 10m
                  memory: 10Mi
            - name: prometheus-server
              image: "prom/prometheus:v2.2.1"
              imagePullPolicy: "IfNotPresent"
              args:
                - --config.file=/etc/config/prometheus.yml
                - --storage.tsdb.path=/data
                - --web.console.libraries=/etc/prometheus/console_libraries
                - --web.console.templates=/etc/prometheus/consoles
                - --web.enable-lifecycle
              ports:
                - containerPort: 9090
              readinessProbe:
                httpGet:
                  path: /-/ready
                  port: 9090
                initialDelaySeconds: 30
                timeoutSeconds: 30
              livenessProbe:
                httpGet:
                  path: /-/healthy
                  port: 9090
                initialDelaySeconds: 30
                timeoutSeconds: 30
              # based on 10 running nodes with 30 pods each
              resources:
                limits:
                  cpu: 200m
                  memory: 1000Mi
                requests:
                  cpu: 200m
                  memory: 1000Mi
              volumeMounts:
                - name: config-volume
                  mountPath: /etc/config
                - name: prometheus-data
                  mountPath: /data
                  subPath: ""
                - name: prometheus-rules
                  mountPath: /etc/config/rules
          terminationGracePeriodSeconds: 300
          volumes:
            - name: config-volume
              configMap:
                name: prometheus-config
            - name: prometheus-rules
              configMap:
                name: prometheus-rules
      volumeClaimTemplates:
        - metadata:
            name: prometheus-data
          spec:
            storageClassName: managed-nfs-storage
            accessModes:
              - ReadWriteOnce
            resources:
              requests:
                storage: "1Gi"
    [root@k8s-node01 k8s-prometheus]# kubectl apply -f prometheus-statefulset.yaml
    Warning: spec.template.metadata.annotations[scheduler.alpha.kubernetes.io/critical-pod]: non-functional in v1.16+; use the "priorityClassName" field instead
    statefulset.apps/prometheus created
    [root@k8s-node01 k8s-prometheus]# kubectl get pod -n kube-system |grep prometheus
    NAME           READY   STATUS    RESTARTS   AGE
    prometheus-0   2/2     Running   6          1d

2.4 Creating a Service to expose the access port

    [root@k8s-node01 k8s-prometheus]# cat prometheus-service.yaml
    kind: Service
    apiVersion: v1
    metadata:
      name: prometheus
      namespace: kube-system
      labels:
        kubernetes.io/name: "Prometheus"
        kubernetes.io/cluster-service: "true"
        addonmanager.kubernetes.io/mode: Reconcile
    spec:
      type: NodePort
      ports:
        - name: http
          port: 9090
          protocol: TCP
          targetPort: 9090
          nodePort: 30090
      selector:
        k8s-app: prometheus
    [root@k8s-master prometheus-k8s]# kubectl apply -f prometheus-service.yaml

2.5 Web access

Open the UI through the IP of any node plus the port: http://NodeIP:Port (Port is the nodePort defined above, 30090).
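Besides the web UI, the same address serves Prometheus's HTTP endpoints, which is convenient for a quick check from the command line. The sketch assumes the NodePort 30090 from the Service in 2.4; the node IP is one of the addresses used in this article's scrape configuration and should be replaced with one of your own.

```shell
#!/bin/sh
# prom_url NODE_IP PATH: URL of a Prometheus endpoint behind NodePort 30090.
prom_url() {
  printf 'http://%s:30090%s\n' "$1" "$2"
}

NODE_IP=11.0.1.13   # placeholder; use any node IP of your cluster

prom_url "$NODE_IP" /-/healthy
prom_url "$NODE_IP" /api/v1/targets

# With network access to the node:
#   curl -s "$(prom_url "$NODE_IP" /-/healthy)"
#   curl -s -G "$(prom_url "$NODE_IP" /api/v1/query)" --data-urlencode 'query=up'
```

The `up` query returns 1 for every target that was scraped successfully, which makes it a useful first test of the scrape jobs.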

3. Deploying Grafana

    [root@k8s-master prometheus-k8s]# cat grafana.yaml
    apiVersion: apps/v1
    kind: StatefulSet
    metadata:
      name: grafana
      namespace: kube-system
    spec:
      serviceName: "grafana"
      replicas: 1
      selector:
        matchLabels:
          app: grafana
      template:
        metadata:
          labels:
            app: grafana
        spec:
          containers:
            - name: grafana
              image: grafana/grafana
              ports:
                - containerPort: 3000
                  protocol: TCP
              resources:
                limits:
                  cpu: 100m
                  memory: 256Mi
                requests:
                  cpu: 100m
                  memory: 256Mi
              volumeMounts:
                - name: grafana-data
                  mountPath: /var/lib/grafana
                  subPath: grafana
          securityContext:
            fsGroup: 472
            runAsUser: 472
      volumeClaimTemplates:
        - metadata:
            name: grafana-data
          spec:
            storageClassName: managed-nfs-storage # same storage class as Prometheus
            accessModes:
              - ReadWriteOnce
            resources:
              requests:
                storage: "1Gi"
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: grafana
      namespace: kube-system
    spec:
      type: NodePort
      ports:
        - port: 80
          targetPort: 3000
          nodePort: 30091
      selector:
        app: grafana
    [root@k8s-master prometheus-k8s]# kubectl apply -f grafana.yaml

Access:

Open Grafana through the IP of any node plus the port: http://NodeIP:Port (Port is the nodePort defined above, 30091). The default username and password are both admin.
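After logging in, Grafana still needs a Prometheus data source. Because both workloads run in kube-system, Grafana can reach Prometheus through the Service's cluster DNS name instead of the NodePort. The sketch below assembles that URL; it assumes the default cluster domain `cluster.local`, which may differ in your cluster, and the helper name `service_url` is made up for this example.

```shell
#!/bin/sh
# service_url NAME NAMESPACE PORT [CLUSTER_DOMAIN]
# In-cluster URL of a Service: <name>.<namespace>.svc.<cluster-domain>.
service_url() {
  domain="${4:-cluster.local}"   # assumption: default cluster domain
  printf 'http://%s.%s.svc.%s:%s\n' "$1" "$2" "$domain" "$3"
}

# URL to enter as the Prometheus data source in Grafana.
service_url prometheus kube-system 9090
```

The port here is the Service port 9090, not the nodePort, because the request never leaves the cluster network.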

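4. Monitoring Pods, Nodes, and resource objects in the K8S cluster

Pod:

The kubelet on each node exposes a metrics endpoint backed by cAdvisor, which provides performance metrics for all Pods and containers on that node. It is enabled by default once kubelet is installed.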
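To look at these cAdvisor metrics directly, the kubelet endpoint can be reached through the API server's node proxy. The sketch builds the request path; the node name k8s-node01 is taken from the shell prompts in this article and may not match the name under which the node is registered (check `kubectl get nodes`).

```shell
#!/bin/sh
# cadvisor_path NODE: API-server proxy path to a node's cAdvisor metrics.
cadvisor_path() {
  printf '/api/v1/nodes/%s/proxy/metrics/cadvisor\n' "$1"
}

cadvisor_path k8s-node01

# With sufficient cluster permissions:
#   kubectl get --raw "$(cadvisor_path k8s-node01)" \
#     | grep '^container_cpu_usage_seconds_total' | head
```

This is the same `/metrics/cadvisor` path that the kubernetes-nodes-cadvisor job sets through `__metrics_path__`.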

Node:

Node resource utilization is collected with the node_exporter collector.
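As an example of the kind of utilization figure node_exporter makes available, memory usage can be derived from two of its gauges. The sample values below are invented; the metric names are the ones used by node_exporter 0.16 and later.

```shell
#!/bin/sh
# Illustrative excerpt of node_exporter output (values are made up).
sample_node_metrics() {
cat <<'EOF'
node_memory_MemTotal_bytes 8000000000
node_memory_MemAvailable_bytes 2000000000
EOF
}

# memory_used_percent: read exposition-format lines on stdin and print
# 100 * (1 - MemAvailable / MemTotal) with one decimal place.
memory_used_percent() {
  awk '
    $1 == "node_memory_MemTotal_bytes"     { total = $2 }
    $1 == "node_memory_MemAvailable_bytes" { avail = $2 }
    END { if (total > 0) printf "%.1f\n", 100 * (1 - avail / total) }
  '
}

sample_node_metrics | memory_used_percent   # prints 75.0
```

In Prometheus itself the same figure would be written as the PromQL expression `100 * (1 - node_memory_MemAvailable_bytes / node_memory_MemTotal_bytes)`.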

Run the node_exporter.sh script on every server to deploy the node_exporter collector. The script can be run as-is, without modification.

    [root@k8s-master prometheus-k8s]# cat node_exporter.sh
    #!/bin/bash
    wget https://github.com/prometheus/node_exporter/releases/download/v0.17.0/node_exporter-0.17.0.linux-amd64.tar.gz
    tar zxf node_exporter-0.17.0.linux-amd64.tar.gz
    mv node_exporter-0.17.0.linux-amd64 /usr/local/node_exporter
    cat <<EOF >/usr/lib/systemd/system/node_exporter.service
    [Unit]
    Description=https://prometheus.io
    [Service]
    Restart=on-failure
    ExecStart=/usr/local/node_exporter/node_exporter --collector.systemd --collector.systemd.unit-whitelist=(docker|kubelet|kube-proxy|flanneld).service
    [Install]
    WantedBy=multi-user.target
    EOF
    systemctl daemon-reload
    systemctl enable node_exporter
    systemctl restart node_exporter
    [root@k8s-master prometheus-k8s]# ./node_exporter.sh
    [root@k8s-master prometheus-k8s]# ps -ef|grep node_exporter
    root 6227 1 0 Oct08 ? 00:06:43 /usr/local/node_exporter/node_exporter --collector.systemd --collector.systemd.unit-whitelist=(docker|kubelet|kube-proxy|flanneld).service
    root 118269 117584 0 23:27 pts/0 00:00:00 grep --color=auto node_exporter

Resource objects:

kube-state-metrics collects state information about the various resource objects in K8S. It only needs to be deployed from the master node.

1. Create the RBAC YAML that authorizes kube-state-metrics

    [root@k8s-master prometheus-k8s]# cat kube-state-metrics-rbac.yaml
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: kube-state-metrics
      namespace: kube-system
      labels:
        kubernetes.io/cluster-service: "true"
        addonmanager.kubernetes.io/mode: Reconcile
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRole
    metadata:
      name: kube-state-metrics
      labels:
        kubernetes.io/cluster-service: "true"
        addonmanager.kubernetes.io/mode: Reconcile
    rules:
      - apiGroups: [""]
        resources:
          - configmaps
          - secrets
          - nodes
          - pods
          - services
          - resourcequotas
          - replicationcontrollers
          - limitranges
          - persistentvolumeclaims
          - persistentvolumes
          - namespaces
          - endpoints
        verbs: ["list", "watch"]
      - apiGroups: ["extensions"]
        resources:
          - daemonsets
          - deployments
          - replicasets
        verbs: ["list", "watch"]
      - apiGroups: ["apps"]
        resources:
          - statefulsets
        verbs: ["list", "watch"]
      - apiGroups: ["batch"]
        resources:
          - cronjobs
          - jobs
        verbs: ["list", "watch"]
      - apiGroups: ["autoscaling"]
        resources:
          - horizontalpodautoscalers
        verbs: ["list", "watch"]
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: Role
    metadata:
      name: kube-state-metrics-resizer
      namespace: kube-system
      labels:
        kubernetes.io/cluster-service: "true"
        addonmanager.kubernetes.io/mode: Reconcile
    rules:
      - apiGroups: [""]
        resources:
          - pods
        verbs: ["get"]
      - apiGroups: ["extensions"]
        resources:
          - deployments
        resourceNames: ["kube-state-metrics"]
        verbs: ["get", "update"]
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      name: kube-state-metrics
      labels:
        kubernetes.io/cluster-service: "true"
        addonmanager.kubernetes.io/mode: Reconcile
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: kube-state-metrics
    subjects:
      - kind: ServiceAccount
        name: kube-state-metrics
        namespace: kube-system
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: RoleBinding
    metadata:
      name: kube-state-metrics
      namespace: kube-system
      labels:
        kubernetes.io/cluster-service: "true"
        addonmanager.kubernetes.io/mode: Reconcile
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: Role
      name: kube-state-metrics-resizer
    subjects:
      - kind: ServiceAccount
        name: kube-state-metrics
        namespace: kube-system
    [root@k8s-master prometheus-k8s]# kubectl apply -f kube-state-metrics-rbac.yaml

2. Write the Deployment and ConfigMap YAML that deploys the kube-state-metrics Pod; no modification is needed

    [root@k8s-master prometheus-k8s]# cat kube-state-metrics-deployment.yaml
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: kube-state-metrics
      namespace: kube-system
      labels:
        k8s-app: kube-state-metrics
        kubernetes.io/cluster-service: "true"
        addonmanager.kubernetes.io/mode: Reconcile
        version: v1.3.0
    spec:
      selector:
        matchLabels:
          k8s-app: kube-state-metrics
          version: v1.3.0
      replicas: 1
      template:
        metadata:
          labels:
            k8s-app: kube-state-metrics
            version: v1.3.0
          annotations:
            scheduler.alpha.kubernetes.io/critical-pod: ''
        spec:
          priorityClassName: system-cluster-critical
          serviceAccountName: kube-state-metrics
          containers:
            - name: kube-state-metrics
              image: lizhenliang/kube-state-metrics:v1.3.0
              ports:
                - name: http-metrics
                  containerPort: 8080
                - name: telemetry
                  containerPort: 8081
              readinessProbe:
                httpGet:
                  path: /healthz
                  port: 8080
                initialDelaySeconds: 5
                timeoutSeconds: 5
            - name: addon-resizer
              image: lizhenliang/addon-resizer:1.8.3
              resources:
                limits:
                  cpu: 100m
                  memory: 30Mi
                requests:
                  cpu: 100m
                  memory: 30Mi
              env:
                - name: MY_POD_NAME
                  valueFrom:
                    fieldRef:
                      fieldPath: metadata.name
                - name: MY_POD_NAMESPACE
                  valueFrom:
                    fieldRef:
                      fieldPath: metadata.namespace
              volumeMounts:
                - name: config-volume
                  mountPath: /etc/config
              command:
                - /pod_nanny
                - --config-dir=/etc/config
                - --container=kube-state-metrics
                - --cpu=100m
                - --extra-cpu=1m
                - --memory=100Mi
                - --extra-memory=2Mi
                - --threshold=5
                - --deployment=kube-state-metrics
          volumes:
            - name: config-volume
              configMap:
                name: kube-state-metrics-config
    ---
    # Config map for resource configuration.
    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: kube-state-metrics-config
      namespace: kube-system
      labels:
        k8s-app: kube-state-metrics
        kubernetes.io/cluster-service: "true"
        addonmanager.kubernetes.io/mode: Reconcile
    data:
      NannyConfiguration: |-
        apiVersion: nannyconfig/v1alpha1
        kind: NannyConfiguration
    [root@k8s-master prometheus-k8s]# kubectl apply -f kube-state-metrics-deployment.yaml

3. Write the Service YAML that exposes the kube-state-metrics ports

    [root@k8s-master prometheus-k8s]# cat kube-state-metrics-service.yaml
    apiVersion: v1
    kind: Service
    metadata:
      name: kube-state-metrics
      namespace: kube-system
      labels:
        kubernetes.io/cluster-service: "true"
        addonmanager.kubernetes.io/mode: Reconcile
        kubernetes.io/name: "kube-state-metrics"
      annotations:
        prometheus.io/scrape: 'true'
    spec:
      ports:
        - name: http-metrics
          port: 8080
          targetPort: http-metrics
          protocol: TCP
        - name: telemetry
          port: 8081
          targetPort: telemetry
          protocol: TCP
      selector:
        k8s-app: kube-state-metrics
    [root@k8s-master prometheus-k8s]# kubectl apply -f kube-state-metrics-service.yaml
    [root@k8s-master prometheus-k8s]# kubectl get pod,svc -n kube-system
    NAME                                        READY   STATUS    RESTARTS   AGE
    pod/alertmanager-5d75d5688f-fmlq6           2/2     Running   0          9d
    pod/coredns-5bd5f9dbd9-wv45t                1/1     Running   1          9d
    pod/grafana-0                               1/1     Running   2          15d
    pod/kube-state-metrics-7c76bdbf68-kqqgd     2/2     Running   6          14d
    pod/kubernetes-dashboard-7d77666777-d5ng4   1/1     Running   5          16d
    pod/prometheus-0                            2/2     Running   6          15d

    NAME                           TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)             AGE
    service/alertmanager           ClusterIP   10.0.0.207   <none>        80/TCP              13d
    service/grafana                NodePort    10.0.0.74    <none>        80:30091/TCP        15d
    service/kube-dns               ClusterIP   10.0.0.2     <none>        53/UDP,53/TCP       14d
    service/kube-state-metrics     ClusterIP   10.0.0.194   <none>        8080/TCP,8081/TCP   14d
    service/kubernetes-dashboard   NodePort    10.0.0.127   <none>        443:30001/TCP       17d
    service/prometheus             NodePort    10.0.0.33    <none>        9090:30090/TCP      14d

Error 1: step 2.1 fails with "ensure CRDs are installed first"

    [root@k8s-node01 k8s-prometheus]# kubectl apply -f prometheus-rbac.yaml
    serviceaccount/prometheus unchanged
    resource mapping not found for name: "prometheus" namespace: "" from "prometheus-rbac.yaml": no matches for kind "ClusterRole" in version "rbac.authorization.k8s.io/v1beta1"
    ensure CRDs are installed first
    resource mapping not found for name: "prometheus" namespace: "" from "prometheus-rbac.yaml": no matches for kind "ClusterRoleBinding" in version "rbac.authorization.k8s.io/v1beta1"
    ensure CRDs are installed first

Applying the original YAML from the attachment produces this error because its API version is no longer served (rbac.authorization.k8s.io/v1beta1 was removed in Kubernetes 1.22). Manually change apiVersion: rbac.authorization.k8s.io/v1beta1 to apiVersion: rbac.authorization.k8s.io/v1.
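The replacement can also be scripted. The sketch below rewrites the API version and keeps a backup copy next to the file; it is demonstrated on a throwaway file so it can be run safely, with the temporary file standing in for prometheus-rbac.yaml. The function name `fix_rbac_api_version` is made up for this example.

```shell
#!/bin/sh
# fix_rbac_api_version FILE
# Replace the removed rbac v1beta1 API version with v1, keeping FILE.bak.
fix_rbac_api_version() {
  cp "$1" "$1.bak" &&
    sed 's#rbac.authorization.k8s.io/v1beta1#rbac.authorization.k8s.io/v1#' "$1.bak" > "$1"
}

# Demonstration on a temporary file.
demo=$(mktemp)
printf 'apiVersion: rbac.authorization.k8s.io/v1beta1\nkind: ClusterRole\n' > "$demo"
fix_rbac_api_version "$demo"
cat "$demo"
```

Against the real manifest this would be `fix_rbac_api_version prometheus-rbac.yaml`, followed by `kubectl apply -f prometheus-rbac.yaml`.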