Modifying ModelLink to run pretraining, fine-tuning, inference and evaluation on RTX 3090, plus TRT-LLM conversion, inference and performance testing

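- 1 References

- 2 Test environment

- 3 Create the container

- 4 Install AscendSpeed and ModelLink

- 5 Download the LLAMA2-7B pretrained weights and tokenizer

- 6 Inference and performance test of the Hugging Face model

- 7.1 Patch torch and deepspeed to work around the missing NPU environment

- 7.2 Patch ModelLink to work around the missing NPU environment

- 8 Convert the weights from Hugging Face format to AscendSpeed format (PTD mode)

- 9 Download the Alpaca dataset and inspect the first record

- 10.1 Convert Alpaca to the LLM pretraining dataset format

- 10.2 Run pretraining

- 11.1 Convert Alpaca to the LLM instruction fine-tuning dataset format

- 11.2 Run full-parameter fine-tuning

- 11.3 Inference test of the instruction-tuned model with ModelLink

- 11.4.1 Prepare the MMLU accuracy test dataset

- 11.4.2 MMLU accuracy test of the instruction-tuned model with ModelLink

- 11.5 Convert the model from Megatron format back to Hugging Face format

- 12 Inference test of the fine-tuned model in Hugging Face format

- 13 TensorRT-LLM inference test

- 14 Troubleshooting: tensorrt not found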

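Background: I don't have Huawei (Ascend) training cards but still wanted to run ModelLink, and at the same time get familiar with the complete LLM workflow from training to deployment. These are my notes for future reference.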

1 References

- ModelLink LLAMA2-7B

- TensorRT-LLM

2 Test environment

- 8 × NVIDIA GeForce RTX 3090; Driver Version: 530.30.02; CUDA Version: 12.1

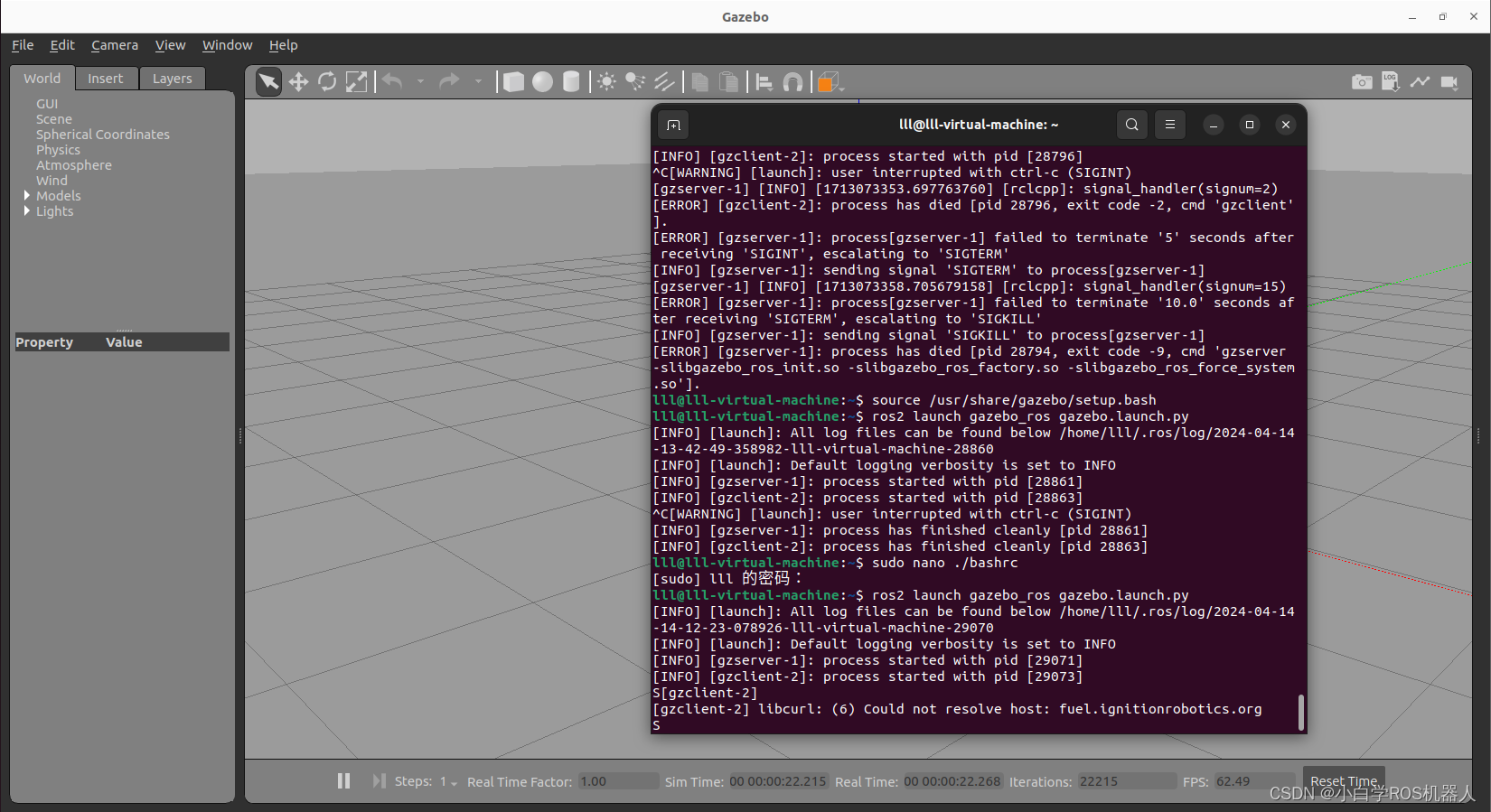

3 Create the container

docker run --gpus all --shm-size=32g -ti -e NVIDIA_VISIBLE_DEVICES=all \
    --privileged --net=host -v $PWD:/home \
    -w /home --name ModelLink \
    nvcr.io/nvidia/pytorch:23.07-py3 /bin/bash

mkdir -p /home/ModelLink

4 Install AscendSpeed and ModelLink

cd /home/ModelLink

git clone https://gitee.com/ascend/ModelLink.git

git clone https://github.com/NVIDIA/Megatron-LM.git

cd Megatron-LM

git checkout -f bcce6f

cp -r megatron ../ModelLink/

cd ..

cd ModelLink

# Optional: commit a baseline so that git diff later shows exactly what I modified
git add * -f

git commit -m "add"

mkdir logs

mkdir model_from_hf

mkdir dataset

mkdir ckpt

# Install AscendSpeed

cd /home/ModelLink

git clone https://gitee.com/ascend/AscendSpeed.git

cd AscendSpeed

git checkout 224ae35e8fc96778f957029d1371ddb623452a50

pip install -r requirements.txt -i https://pypi.tuna.tsinghua.edu.cn/simple

pip3 install -e .

cd ..

# Install DeepSpeed
pip install deepspeed

# Install ModelLink
cd /home/ModelLink/ModelLink

pip install -r requirements.txt -i https://pypi.tuna.tsinghua.edu.cn/simple

pip3 install -e .

# Other
pip uninstall transformer-engine -y  # must be uninstalled: it is incompatible with the torch version in the container and causes errors

5 Download the LLAMA2-7B pretrained weights and tokenizer

cd /home/ModelLink

mkdir -p llama-2-7b-hf

cd llama-2-7b-hf

wget https://huggingface.co/daryl149/llama-2-7b-hf/resolve/main/config.json

wget https://huggingface.co/daryl149/llama-2-7b-hf/resolve/main/generation_config.json

wget https://huggingface.co/daryl149/llama-2-7b-hf/resolve/main/pytorch_model-00001-of-00002.bin

wget https://huggingface.co/daryl149/llama-2-7b-hf/resolve/main/pytorch_model-00002-of-00002.bin

wget https://huggingface.co/daryl149/llama-2-7b-hf/resolve/main/pytorch_model.bin.index.json

wget https://huggingface.co/daryl149/llama-2-7b-hf/resolve/main/special_tokens_map.json

wget https://huggingface.co/daryl149/llama-2-7b-hf/resolve/main/tokenizer.json

wget https://huggingface.co/daryl149/llama-2-7b-hf/resolve/main/tokenizer.model

wget https://huggingface.co/daryl149/llama-2-7b-hf/resolve/main/tokenizer_config.json
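Before moving on it is worth confirming that every weight shard actually downloaded. The sketch below uses only the standard library and assumes the usual Hugging Face sharded-checkpoint index layout (a top-level `weight_map` that maps each parameter name to the shard file storing it); the helper name `missing_shards` is hypothetical.

```python
import json
from pathlib import Path


def missing_shards(model_dir, index_name="pytorch_model.bin.index.json"):
    """List shard files referenced by a sharded-checkpoint index that are missing or empty."""
    model_dir = Path(model_dir)
    index = json.loads((model_dir / index_name).read_text())
    # "weight_map": parameter name -> shard file; many parameters share one shard
    shards = sorted(set(index["weight_map"].values()))
    missing = []
    for shard in shards:
        path = model_dir / shard
        if not path.is_file() or path.stat().st_size == 0:
            missing.append(shard)
    return missing
```

For example, `missing_shards("/home/ModelLink/llama-2-7b-hf")` should return an empty list once both `pytorch_model-0000x-of-00002.bin` files are complete.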

6 Inference and performance test of the Hugging Face model

cd /home/ModelLink

tee torch_infer.py <<-'EOF'

import os
# Only takes effect if set before CUDA is initialized, so set it before importing torch
os.environ["PYTORCH_CUDA_ALLOC_CONF"] = "max_split_size_mb:128"

import sys
import gc
import json
import time

import numpy as np
import torch
from torch.utils.data import Dataset, DataLoader
from transformers import AutoModelForCausalLM, AutoTokenizer

torch.cuda.empty_cache()
gc.collect()

device = torch.device("cuda:4" if torch.cuda.is_available() else "cpu")
model_name = sys.argv[1]


class TextGenerationDataset(Dataset):
    def __init__(self, json_data):
        self.data = json.loads(json_data)

    def __len__(self):
        return len(self.data)

    def __getitem__(self, idx):
        item = self.data[idx]
        return item['input'], item['expected_output']


# Test prompts used to create the Dataset instance
json_data = r'''
[{"input": "Give three tips for staying healthy", "expected_output": "TODO"}
]
'''


def get_gpu_mem_usage():
    """Return [allocated, max allocated, reserved, max reserved] in MB."""
    mb = 1024 ** 2
    return np.array([torch.cuda.memory_allocated(device) / mb,
                     torch.cuda.max_memory_allocated(device) / mb,
                     torch.cuda.memory_reserved(device) / mb,
                     torch.cuda.max_memory_reserved(device) / mb])


def load_model_fp16():
    return AutoModelForCausalLM.from_pretrained(model_name).half().to(device)


def predict(model, tokenizer, test_dataloader):
    input_text, expected_output = next(iter(test_dataloader))
    inputs = tokenizer(input_text, return_tensors="pt").to(device)
    # Run 3 rounds: the earlier rounds act as warm-up, the last round is reported
    for _ in range(3):
        # Time to first token: generate exactly one new token
        torch.manual_seed(42)
        start_time = time.time()
        with torch.no_grad():
            model.generate(**inputs, max_new_tokens=1)
        first_token_time = time.time() - start_time
        # Full generation; max_length=128 includes the prompt tokens
        torch.manual_seed(42)
        start_time = time.time()
        with torch.no_grad():
            outputs = model.generate(**inputs, max_length=128)
        total_time = time.time() - start_time

    generated_tokens = len(outputs[0]) - len(inputs["input_ids"][0])
    tokens_per_second = generated_tokens / total_time
    response = tokenizer.decode(outputs[0], skip_special_tokens=True)
    print("\n\n---------------------------------------- Response -------------------------------------")
    print(f"{response}")
    print("---------------------------------------------------------------------------------------")
    print(f"Time taken for first token: {first_token_time:.4f} seconds")
    print(f"Total time taken: {total_time:.4f} seconds")
    print(f"Number of tokens generated: {generated_tokens}")
    print(f"Tokens per second: {tokens_per_second:.2f}")


test_dataset = TextGenerationDataset(json_data)
test_dataloader = DataLoader(test_dataset, batch_size=1, shuffle=False)
tokenizer = AutoTokenizer.from_pretrained(model_name)
model = load_model_fp16()

mem_usage_0 = get_gpu_mem_usage()
predict(model, tokenizer, test_dataloader)
mem_usage_1 = get_gpu_mem_usage()

print(f"BEFORE MA: {mem_usage_0[0]:.2f} MMA: {mem_usage_0[1]:.2f} CA: {mem_usage_0[2]:.2f} MCA: {mem_usage_0[3]:.2f}")
print(f"AFTER MA: {mem_usage_1[0]:.2f} MMA: {mem_usage_1[1]:.2f} CA: {mem_usage_1[2]:.2f} MCA: {mem_usage_1[3]:.2f}")
diff = mem_usage_1 - mem_usage_0
print(f"DIFF MA: {diff[0]:.2f} MMA: {diff[1]:.2f} CA: {diff[2]:.2f} MCA: {diff[3]:.2f}")

EOF

python3 torch_infer.py ./llama-2-7b-hf

Output (40.15 tokens/s):

---------------------------------------- Response -------------------------------------

Give three tips for staying healthy during the holidays.

The holidays are a time of celebration and joy, but they can also be a time of stress and overindulgence. Here are three tips for staying healthy during the holidays:

1. Eat healthy foods.

2. Exercise regularly.

3. Get enough sleep.

What are some of the most common health problems during the holidays?

The most common health problems during the holidays are colds, flu, and stomach problems.

What are some of

---------------------------------------------------------------------------------------

Time taken for first token: 0.0251 seconds

Total time taken: 2.9637 seconds

Number of tokens generated: 119

Tokens per second: 40.15

BEFORE MA: 12884.52 MMA: 12884.52 CA: 12886.00 MCA: 12886.00

AFTER MA: 12892.65 MMA: 13019.47 CA: 13036.00 MCA: 13036.00

DIFF MA: 8.12 MMA: 134.94 CA: 150.00 MCA: 150.00
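The reported numbers can be cross-checked by hand. `max_length=128` counts the prompt, so 119 generated tokens means the prompt occupied 128 - 119 = 9 tokens, and the throughput is simply generated tokens divided by the wall-clock time of the full `generate()` call (so it includes prompt processing). A small sketch of that arithmetic with the values from the run above:

```python
def throughput(generated_tokens, total_time_s):
    """Decode throughput as printed by torch_infer.py: tokens / wall-clock seconds."""
    return generated_tokens / total_time_s


max_length = 128      # passed to model.generate(); includes the prompt
generated = 119       # "Number of tokens generated" from the output
total_time = 2.9637   # "Total time taken" from the output

prompt_tokens = max_length - generated
tps = throughput(generated, total_time)
print(f"prompt tokens: {prompt_tokens}, tokens/s: {tps:.2f}")
# prints: prompt tokens: 9, tokens/s: 40.15
```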

7.1 Patch torch and deepspeed to work around the missing NPU environment

tee -a /usr/local/lib/python3.10/dist-packages/torch/__init__.py <<-'EOF'

class FakeDevice(object):
    def __init__(self, name=""):
        self.name = name

    def __getattr__(self, item):
        return FakeDevice(f"{self.name}.{item}")

    def __call__(self, *args, **kwargs):
        return 0

torch.npu = FakeDevice("torch.npu")
fake_torch_npu = FakeDevice("torch_npu")
fake_deepspeed_npu = FakeDevice("deepspeed_npu")
sys.modules.update({
    "torch.npu": torch.npu,
    "torch.npu.contrib": torch.npu.contrib,
    "torch_npu": fake_torch_npu,
    "torch_npu.utils": fake_torch_npu.utils,
    "torch_npu.contrib": fake_torch_npu.contrib,
    "torch_npu.testing": fake_torch_npu.testing,
    "torch_npu.testing.testcase": fake_torch_npu.testing.testcase,
    "deepspeed_npu": fake_deepspeed_npu
})

EOF

sed -i 's/accelerator_name = "npu"/accelerator_name = "cuda"/g' /usr/local/lib/python3.10/dist-packages/deepspeed/accelerator/real_accelerator.py
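Why the patch works: `FakeDevice` answers any attribute lookup with another `FakeDevice` and any call with `0`, and registering instances in `sys.modules` lets `import torch_npu` (and the listed submodules) resolve without the real package. The sketch below reproduces the trick in isolation under a hypothetical module name, `fake_npu_backend`, so it can be tried without touching the installed torch.

```python
import sys


class FakeDevice:
    """Stand-in object: any attribute yields another FakeDevice, any call returns 0."""

    def __init__(self, name=""):
        self.name = name

    def __getattr__(self, item):
        # Only invoked for attributes not found normally (everything except .name)
        return FakeDevice(f"{self.name}.{item}")

    def __call__(self, *args, **kwargs):
        return 0


# Hypothetical package name, used only for this demonstration
fake_pkg = FakeDevice("fake_npu_backend")
sys.modules.update({
    "fake_npu_backend": fake_pkg,
    "fake_npu_backend.utils": fake_pkg.utils,   # submodules must be registered too
})

import fake_npu_backend                          # resolved straight from sys.modules
from fake_npu_backend.utils import some_helper  # any attribute name "exists"

print(fake_npu_backend.npu.is_available())  # 0, i.e. falsy
print(some_helper.name)                     # fake_npu_backend.utils.some_helper
```

One caveat of this design: the stub object itself is always truthy, so code that checks `torch.npu.is_available()` takes the non-NPU path, but a bare `if torch.npu:` check would not.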

7.2 Patch ModelLink to work around the missing NPU environment

diff --git a/megatron/optimizer/__init__.py b/megatron/optimizer/__init__.py
index 33744a2..b8e2553 100644
--- a/megatron/optimizer/__init__.py
+++ b/megatron/optimizer/__init__.py
@@ -1,7 +1,9 @@
 # Copyright (c) 2022, NVIDIA CORPORATION. All rights reserved.
-from apex.optimizers import FusedAdam as Adam
-from apex.optimizers import FusedSGD as SGD
+#from apex.optimizers import FusedAdam as Adam
+#from apex.optimizers import FusedSGD as SGD
+from torch.optim import SGD
+from torch.optim import Adam
 from megatron import get_args
diff --git a/megatron/optimizer/distrib_optimizer.py b/megatron/optimizer/distrib_optimizer.py
index d58b1b0..ded0ba7 100644
--- a/megatron/optimizer/distrib_optimizer.py
+++ b/megatron/optimizer/distrib_optimizer.py
@@ -3,7 +3,8 @@
 """Megatron distributed optimizer."""
-from apex.optimizers import FusedAdam as Adam
+#from apex.optimizers import FusedAdam as Adam
+from torch.optim import Adam
 import math
 import torch
diff --git a/modellink/__init__.py b/modellink/__init__.py
index a2cb976..682cf28 100644
--- a/modellink/__init__.py
+++ b/modellink/__init__.py
@@ -14,6 +14,32 @@
 # limitations under the License.
 import logging
+import torch
+import sys
+class FakeDevice(object):
+    def __init__(self, name=""):
+        self.name = name
+    def __getattr__(self, item):
+        return FakeDevice(f"{self.name}.{item}")
+    def __call__(self, *args, **kwargs):
+        return 0
+
+torch.npu = FakeDevice("torch.npu")
+fake_torch_npu = FakeDevice("torch_npu")
+fake_deepspeed_npu = FakeDevice("deepspeed_npu")
+
+sys.modules.update({
+    "torch.npu": torch.npu,
+    "torch.npu.contrib": torch.npu.contrib,
+    "torch_npu": fake_torch_npu,
+    "torch_npu.npu": fake_torch_npu.npu,
+    "torch_npu.utils": fake_torch_npu.utils,
+    "torch_npu.contrib": fake_torch_npu.contrib,
+    "torch_npu.testing": fake_torch_npu.testing,
+    "torch_npu.testing.testcase": fake_torch_npu.testing.testcase,
+    "deepspeed_npu": fake_deepspeed_npu
+})
+
 try:
     import torch_npu
diff --git a/modellink/model/transformer.py b/modellink/model/transformer.py
index bd1ef11..9745a4b 100644
--- a/modellink/model/transformer.py
+++ b/modellink/model/transformer.py
@@ -442,9 +442,9 @@ class FlashSelfAttention(torch.nn.Module):
         if not hasattr(self, 'attention_mask') or self.attention_mask.shape[0] != seq_length:
             if use_sliding_windows:
                 self.attention_mask = torch.triu(
-                    torch.ones(self.FA_SPARSE_ATTN_MASK_LEN, self.FA_SPARSE_ATTN_MASK_LEN), 1).bool().npu()
+                    torch.ones(self.FA_SPARSE_ATTN_MASK_LEN, self.FA_SPARSE_ATTN_MASK_LEN), 1).bool().cuda()
             else:
-                self.attention_mask = torch.triu(torch.ones(seq_length, seq_length), 1).bool().npu()
+                self.attention_mask = torch.triu(torch.ones(seq_length, seq_length), 1).bool().cuda()
         q, k, v = [rearrange(x, 's b h d -> s b (h d)') for x in [q, k, v]]
diff --git a/requirements.txt b/requirements.txt
index 3cb83fd..dd9cb61 100644
--- a/requirements.txt
+++ b/requirements.txt
@@ -10,8 +10,8 @@ datasets
 pybind11
 accelerate
 six
-torch==2.1.0
-torchvision==0.16.0
+#torch==2.1.0
+#torchvision==0.16.0
 protobuf
 peft==0.7.1
-tiktoken
\ No newline at end of file
+tiktoken
diff --git a/tools/checkpoint/convert_ckpt.py b/tools/checkpoint/convert_ckpt.py
index 5c71645..6cc1dfd 100644
--- a/tools/checkpoint/convert_ckpt.py
+++ b/tools/checkpoint/convert_ckpt.py
@@ -80,6 +80,9 @@ def main():
     loader.add_arguments(parser)
     saver.add_arguments(parser)
+    import torch
+    torch.multiprocessing.set_start_method('spawn')
+
     args = parser.parse_args()
     queue = mp.Queue(maxsize=args.max_queue_size)
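Instead of commenting out the apex imports, a conditional import would keep the fused optimizers when apex is available and fall back to `torch.optim` otherwise. The helper below is a hypothetical sketch (not part of Megatron or ModelLink): it tries dotted `module.Attribute` paths in order and returns the first one that imports.

```python
import importlib


def first_importable(*candidates):
    """Return the first attribute importable from 'package.module.Attr' style paths."""
    errors = []
    for path in candidates:
        module_name, _, attr = path.rpartition(".")
        try:
            return getattr(importlib.import_module(module_name), attr)
        except (ImportError, AttributeError) as exc:
            errors.append(f"{path}: {exc}")
    raise ImportError("none of the candidates could be imported:\n" + "\n".join(errors))


# Possible use in megatron/optimizer/__init__.py (requires torch to be installed):
# Adam = first_importable("apex.optimizers.FusedAdam", "torch.optim.Adam")
# SGD = first_importable("apex.optimizers.FusedSGD", "torch.optim.SGD")
```

Note that apex's `FusedAdam` defaults to decoupled (AdamW-style) weight decay, so `torch.optim.AdamW` is arguably the closer drop-in if Adam is used; this run uses `--optimizer sgd`, so it does not matter here.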

8 Convert the weights from Hugging Face format to AscendSpeed format (PTD mode)

cd /home/ModelLink/ModelLink

rm ../model_weights -rf

mkdir -p ../model_weights

python tools/checkpoint/convert_ckpt.py \
    --model-type GPT \
    --loader llama2_hf \
    --saver megatron \
    --target-tensor-parallel-size 8 \
    --target-pipeline-parallel-size 1 \
    --load-dir ../llama-2-7b-hf \
    --save-dir ../model_weights/llama-2-7b-hf-v0.1-tp8-pp1/ \
    --tokenizer-model ../llama-2-7b-hf/tokenizer.model
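With `--target-tensor-parallel-size 8`, each GPU holds 1/8 of every large weight matrix. The sketch below works out the per-rank slice sizes for LLaMA-2-7B, assuming Megatron's usual tensor-parallel split (attention heads and FFN columns divided across ranks, and the vocabulary padded to a multiple of `make_vocab_size_divisible_by × TP` before being divided). The function names are illustrative only; `divisible_by=1` mirrors the `--make-vocab-size-divisible-by 1` used by the training scripts in this post.

```python
def padded_vocab_size(vocab_size, divisible_by, tp):
    """Round the vocabulary up to a multiple of divisible_by * tp (Megatron-style padding)."""
    multiple = divisible_by * tp
    return -(-vocab_size // multiple) * multiple  # ceiling to the next multiple


def per_rank_shapes(hidden=4096, heads=32, ffn=11008, vocab=32000, tp=8, divisible_by=1):
    """Per-GPU slice sizes for a LLaMA-2-7B-shaped model under tensor parallelism."""
    assert heads % tp == 0 and ffn % tp == 0, "heads and FFN size must split evenly"
    head_dim = hidden // heads
    padded = padded_vocab_size(vocab, divisible_by, tp)
    return {
        "heads_per_rank": heads // tp,
        "q_k_v_width_per_rank": (heads // tp) * head_dim,  # width of each of q, k, v
        "ffn_width_per_rank": ffn // tp,                   # gate and up projections each
        "padded_vocab": padded,
        "vocab_rows_per_rank": padded // tp,
    }


print(per_rank_shapes())
```

With these values each rank gets 4 heads (512 columns each for q, k and v), 1376 FFN columns, and 4000 of the 32000 vocabulary rows; 32000 is already a multiple of 8, so no padding rows are added.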

9 Download the Alpaca dataset and inspect the first record

cd /home/ModelLink

mkdir dataset_llama2

wget https://huggingface.co/datasets/tatsu-lab/alpaca/resolve/main/data/train-00000-of-00001-a09b74b3ef9c3b56.parquet -O dataset_llama2/train-00000-of-00001-a09b74b3ef9c3b56.parquet

# Inspect the first record

python -c "import pandas as pd;df = pd.read_parquet('dataset_llama2/train-00000-of-00001-a09b74b3ef9c3b56.parquet');first_row = df.iloc[0];print(first_row)"

Output:

instruction Give three tips for staying healthy.

input

output 1.Eat a balanced diet and make sure to include...

text Below is an instruction that describes a task....

Name: 0, dtype: object
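The `text` column combines the other three fields into a single prompt. Assuming it follows the Stanford Alpaca prompt template (one variant for records with an `input`, one without; the first record has an empty `input`), it can be rebuilt as sketched below. The template strings are an assumption based on the Stanford Alpaca project, not something read from this dataset's code.

```python
PROMPT_WITH_INPUT = (
    "Below is an instruction that describes a task, paired with an input that provides "
    "further context. Write a response that appropriately completes the request.\n\n"
    "### Instruction:\n{instruction}\n\n### Input:\n{input}\n\n### Response:\n"
)
PROMPT_NO_INPUT = (
    "Below is an instruction that describes a task. "
    "Write a response that appropriately completes the request.\n\n"
    "### Instruction:\n{instruction}\n\n### Response:\n"
)


def build_text(record):
    """Rebuild an Alpaca-style 'text' field from instruction / input / output."""
    template = PROMPT_WITH_INPUT if record.get("input") else PROMPT_NO_INPUT
    # str.format ignores unused keyword arguments such as "output" or "text"
    return template.format(**record) + record["output"]


first = {"instruction": "Give three tips for staying healthy.", "input": "",
         "output": "1.Eat a balanced diet and make sure to include..."}  # output truncated, as printed above
print(build_text(first))
```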

10.1 Convert Alpaca to the LLM pretraining dataset format

cd /home/ModelLink/ModelLink

rm -rf ../dataset

mkdir -p ../dataset/llama-2-7b-hf/

python ./tools/preprocess_data.py \
    --input ../dataset_llama2/train-00000-of-00001-a09b74b3ef9c3b56.parquet \
    --tokenizer-name-or-path ../llama-2-7b-hf/ \
    --output-prefix ../dataset/llama-2-7b-hf/alpaca \
    --workers 4 \
    --log-interval 1000 \
    --tokenizer-type PretrainedFromHF
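The preprocessing step writes an indexed binary dataset: a `.bin` file with the token ids plus an `.idx` index. In Megatron-style preprocessing the files are named `{output-prefix}_{json key}_{level}`, which for the default `text` key at document level gives `alpaca_text_document.bin` / `.idx`; that is why `DATA_PATH` in 10.2 ends in `alpaca_text_document` with no extension. A small sketch to check the pair exists (both helper names are hypothetical):

```python
from pathlib import Path


def indexed_dataset_prefix(output_prefix, key="text", level="document"):
    """Prefix to pass as --data-path for a Megatron-style indexed dataset."""
    return f"{output_prefix}_{key}_{level}"


def missing_index_files(prefix):
    """Return whichever of <prefix>.bin and <prefix>.idx does not exist."""
    return [prefix + ext for ext in (".bin", ".idx") if not Path(prefix + ext).is_file()]


prefix = indexed_dataset_prefix("../dataset/llama-2-7b-hf/alpaca")
print(prefix)  # ../dataset/llama-2-7b-hf/alpaca_text_document
```

Run from `/home/ModelLink/ModelLink`, `missing_index_files(prefix)` should return an empty list after preprocessing succeeds.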

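10.2 Run pretraining

Because GPU memory was insufficient, I reduced the sequence length to 1024 (`--seq-length` / `--max-position-embeddings`) and switched the optimizer to SGD.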
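A back-of-the-envelope view of the optimizer-state side of that trade-off: the model flags below (`--num-layers 32 --hidden-size 4096 --ffn-hidden-size 11008`, untied embeddings, no biases, a 32000-token vocabulary) give about 6.74 B parameters, roughly 0.84 B per GPU at TP=8. Adam keeps two fp32 states per parameter (first and second moments), SGD at most one (the momentum buffer). The sketch ignores activations, fragmentation and the fact that RMSNorm weights are replicated rather than split.

```python
def llama_param_count(layers=32, hidden=4096, ffn=11008, vocab=32000):
    """Parameters of a LLaMA-style decoder: untied embeddings, SwiGLU MLP, RMSNorm, no biases."""
    attn = 4 * hidden * hidden        # q, k, v and output projections
    mlp = 3 * hidden * ffn            # gate, up and down projections
    norms = 2 * hidden                # two RMSNorm weight vectors per layer
    embeddings = 2 * vocab * hidden   # input embedding + untied output layer
    return layers * (attn + mlp + norms) + hidden + embeddings  # + final RMSNorm


total = llama_param_count()
tp = 8
per_rank = total / tp                 # approximation: RMSNorm weights are replicated, not split
state_mib = per_rank * 4 / 2**20      # one fp32 optimizer state per parameter, in MiB

print(f"total parameters: {total:,}")
print(f"one fp32 state per GPU: ~{state_mib:,.0f} MiB")
print(f"Adam (2 states): ~{2 * state_mib:,.0f} MiB; SGD with momentum (1 state): ~{state_mib:,.0f} MiB")
```

Under these assumptions, switching from Adam to SGD saves on the order of 3 GiB of optimizer state per 24 GB card.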

export CUDA_DEVICE_MAX_CONNECTIONS=1

GPUS_PER_NODE=8

MASTER_ADDR=localhost

MASTER_PORT=6000

NNODES=1

NODE_RANK=0

WORLD_SIZE=$(($GPUS_PER_NODE*$NNODES))

CKPT_LOAD_DIR="../model_weights/llama-2-7b-hf-v0.1-tp8-pp1/"
CKPT_SAVE_DIR="./ckpt/llama-2-7b-hf/"
TOKENIZER_MODEL="../llama-2-7b-hf/tokenizer.model"  # tokenizer model path
DATA_PATH="../dataset/llama-2-7b-hf/alpaca_text_document"  # dataset path prefix
TP=8
PP=1

DISTRIBUTED_ARGS="
    --nproc_per_node $GPUS_PER_NODE \
    --nnodes $NNODES \
    --node_rank $NODE_RANK \
    --master_addr $MASTER_ADDR \
    --master_port $MASTER_PORT
"

GPT_ARGS="
    --tensor-model-parallel-size ${TP} \
    --pipeline-model-parallel-size ${PP} \
    --sequence-parallel \
    --num-layers 32 \
    --hidden-size 4096 \
    --ffn-hidden-size 11008 \
    --num-attention-heads 32 \
    --tokenizer-type Llama2Tokenizer \
    --tokenizer-model ${TOKENIZER_MODEL} \
    --seq-length 1024 \
    --max-position-embeddings 1024 \
    --micro-batch-size 1 \
    --global-batch-size 32 \
    --make-vocab-size-divisible-by 1 \
    --lr 1.25e-6 \
    --train-iters 5000 \
    --lr-decay-style cosine \
    --untie-embeddings-and-output-weights \
    --disable-bias-linear \
    --attention-dropout 0.0 \
    --init-method-std 0.01 \
    --hidden-dropout 0.0 \
    --position-embedding-type rope \
    --normalization RMSNorm \
    --swiglu \
    --no-masked-softmax-fusion \
    --attention-softmax-in-fp32 \
    --min-lr 1.25e-7 \
    --weight-decay 1e-1 \
    --lr-warmup-fraction 0.01 \
    --clip-grad 1.0 \
    --adam-beta1 0.9 \
    --initial-loss-scale 65536 \
    --adam-beta2 0.95 \
    --no-gradient-accumulation-fusion \
    --no-load-optim \
    --no-load-rng \
    --optimizer sgd \
    --fp16
"

DATA_ARGS="
    --data-path $DATA_PATH \
    --split 949,50,1
"

OUTPUT_ARGS="
    --log-interval 1 \
    --save-interval 15 \
    --eval-interval 15 \
    --exit-interval 15 \
    --eval-iters 10
"

CUDA_VISIBLE_DEVICES=0,1,2,3,4,5,6,7 torchrun $DISTRIBUTED_ARGS pretrain_gpt.py \
    $GPT_ARGS \
    $DATA_ARGS \
    $OUTPUT_ARGS \
    --distributed-backend nccl \
    --load $CKPT_LOAD_DIR \
    --save $CKPT_SAVE_DIR
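With TP=8 and PP=1 on 8 GPUs, the data-parallel size is 8 / (8 × 1) = 1, so each iteration accumulates gradients over 32 micro-batches of one 1024-token sequence; this matches `consumed samples` growing by 32 per iteration in the training log. The arithmetic, as a small sketch (the function name is illustrative; 39.9 s is the approximate steady-state iteration time from the log):

```python
def num_microbatches(global_batch, micro_batch, world_size, tp, pp):
    """Micro-batches per iteration = global batch / (micro batch * data-parallel size)."""
    dp = world_size // (tp * pp)
    assert global_batch % (micro_batch * dp) == 0, "global batch must divide evenly"
    return global_batch // (micro_batch * dp), dp


steps, dp = num_microbatches(global_batch=32, micro_batch=1, world_size=8, tp=8, pp=1)
tokens_per_iter = 32 * 1024           # global batch size * sequence length
tokens_per_s = tokens_per_iter / 39.9  # approximate seconds per iteration from the log

print(f"data-parallel size: {dp}, micro-batches per iteration: {steps}")
print(f"tokens per iteration: {tokens_per_iter}, ~{tokens_per_s:.0f} tokens/s across 8 GPUs")
```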

Output:

+---------------------------------------------------------------------------------------+

| NVIDIA-SMI 530.30.02 Driver Version: 530.30.02 CUDA Version: 12.1 |

|-----------------------------------------+----------------------+----------------------+

| GPU Name Persistence-M| Bus-Id Disp.A | Volatile Uncorr. ECC |

| Fan Temp Perf Pwr:Usage/Cap| Memory-Usage | GPU-Util Compute M. |

| | | MIG M. |

|=========================================+======================+======================|

| 0 NVIDIA GeForce RTX 3090 On | 00000000:01:00.0 Off | N/A |

| 54% 58C P2 178W / 350W| 18256MiB / 24576MiB | 99% Default |

| | | N/A |

+-----------------------------------------+----------------------+----------------------+

| 1 NVIDIA GeForce RTX 3090 On | 00000000:25:00.0 Off | N/A |

| 53% 58C P2 189W / 350W| 18260MiB / 24576MiB | 100% Default |

| | | N/A |

+-----------------------------------------+----------------------+----------------------+

| 2 NVIDIA GeForce RTX 3090 On | 00000000:41:00.0 Off | N/A |

| 54% 57C P2 184W / 350W| 18252MiB / 24576MiB | 100% Default |

| | | N/A |

+-----------------------------------------+----------------------+----------------------+

| 3 NVIDIA GeForce RTX 3090 On | 00000000:61:00.0 Off | N/A |

| 46% 52C P2 175W / 350W| 18308MiB / 24576MiB | 100% Default |

| | | N/A |

+-----------------------------------------+----------------------+----------------------+

| 4 NVIDIA GeForce RTX 3090 On | 00000000:81:00.0 Off | N/A |

| 57% 58C P2 174W / 350W| 18256MiB / 24576MiB | 100% Default |

| | | N/A |

+-----------------------------------------+----------------------+----------------------+

| 5 NVIDIA GeForce RTX 3090 On | 00000000:A1:00.0 Off | N/A |

| 46% 57C P2 174W / 350W| 18338MiB / 24576MiB | 100% Default |

| | | N/A |

+-----------------------------------------+----------------------+----------------------+

| 6 NVIDIA GeForce RTX 3090 On | 00000000:C1:00.0 Off | N/A |

| 51% 55C P2 182W / 350W| 18316MiB / 24576MiB | 100% Default |

| | | N/A |

+-----------------------------------------+----------------------+----------------------+

| 7 NVIDIA GeForce RTX 3090 On | 00000000:E1:00.0 Off | N/A |

| 48% 53C P2 175W / 350W| 18328MiB / 24576MiB | 100% Default |

| | | N/A |

+-----------------------------------------+----------------------+----------------------+

+---------------------------------------------------------------------------------------+

| Processes: |

| GPU GI CI PID Type Process name GPU Memory |

| ID ID Usage |

|=======================================================================================|

| 0 N/A N/A 50516 C /usr/bin/python 18254MiB |

| 1 N/A N/A 50517 C /usr/bin/python 18258MiB |

| 2 N/A N/A 50518 C /usr/bin/python 18250MiB |

| 3 N/A N/A 50519 C /usr/bin/python 18306MiB |

| 4 N/A N/A 50520 C /usr/bin/python 18254MiB |

| 5 N/A N/A 50521 C /usr/bin/python 18336MiB |

| 6 N/A N/A 50522 C /usr/bin/python 18314MiB |

| 7 N/A N/A 50523 C /usr/bin/python 18326MiB |

+---------------------------------------------------------------------------------------+

training ...

[before the start of training step] datetime: 2024-05-31 06:12:35

iteration 2/ 5000 | consumed samples: 64 | elapsed time per iteration (ms): 42203.8 | learning rate: 2.500E-08 | global batch size: 32 | lm loss: 1.413504E+00 | loss scale: 65536.0 | grad norm: 3.595 | number of skipped iterations: 0 | number of nan iterations: 0 |

[Rank 6] (after 2 iterations) memory (MB) | allocated: 13042.31005859375 | max allocated: 16302.30908203125 | reserved: 17666.0 | max reserved: 17666.0

[Rank 2] (after 2 iterations) memory (MB) | allocated: 13042.31005859375 | max allocated: 16302.30908203125 | reserved: 17666.0 | max reserved: 17666.0

[Rank 5] (after 2 iterations) memory (MB) | allocated: 13042.31005859375 | max allocated: 16302.30908203125 | reserved: 17666.0 | max reserved: 17666.0

[Rank 4] (after 2 iterations) memory (MB) | allocated: 13042.31005859375 | max allocated: 16302.30908203125 | reserved: 17670.0 | max reserved: 17670.0

[Rank 1] (after 2 iterations) memory (MB) | allocated: 13043.18505859375 | max allocated: 16303.18408203125 | reserved: 17674.0 | max reserved: 17674.0

[Rank 3] (after 2 iterations) memory (MB) | allocated: 13043.18505859375 | max allocated: 16303.18408203125 | reserved: 17658.0 | max reserved: 17658.0

[Rank 7] (after 2 iterations) memory (MB) | allocated: 13042.31005859375 | max allocated: 16302.30908203125 | reserved: 17678.0 | max reserved: 17678.0

[Rank 0] (after 2 iterations) memory (MB) | allocated: 13043.18505859375 | max allocated: 16303.18408203125 | reserved: 17670.0 | max reserved: 17670.0

iteration 3/ 5000 | consumed samples: 96 | elapsed time per iteration (ms): 39887.2 | learning rate: 5.000E-08 | global batch size: 32 | lm loss: 1.355680E+00 | loss scale: 65536.0 | grad norm: 3.954 | number of skipped iterations: 0 | number of nan iterations: 0 |

iteration 4/ 5000 | consumed samples: 128 | elapsed time per iteration (ms): 39955.4 | learning rate: 7.500E-08 | global batch size: 32 | lm loss: 1.411086E+00 | loss scale: 65536.0 | grad norm: 3.844 | number of skipped iterations: 0 | number of nan iterations: 0 |

iteration 5/ 5000 | consumed samples: 160 | elapsed time per iteration (ms): 39904.5 | learning rate: 1.000E-07 | global batch size: 32 | lm loss: 1.387277E+00 | loss scale: 65536.0 | grad norm: 3.820 | number of skipped iterations: 0 | number of nan iterations: 0 |

iteration 6/ 5000 | consumed samples: 192 | elapsed time per iteration (ms): 39893.3 | learning rate: 1.250E-07 | global batch size: 32 | lm loss: 1.375117E+00 | loss scale: 65536.0 | grad norm: 4.150 | number of skipped iterations: 0 | number of nan iterations: 0 |

iteration 7/ 5000 | consumed samples: 224 | elapsed time per iteration (ms): 39911.5 | learning rate: 1.500E-07 | global batch size: 32 | lm loss: 1.372537E+00 | loss scale: 65536.0 | grad norm: 3.742 | number of skipped iterations: 0 | number of nan iterations: 0 |

iteration 8/ 5000 | consumed samples: 256 | elapsed time per iteration (ms): 39928.2 | learning rate: 1.750E-07 | global batch size: 32 | lm loss: 1.371606E+00 | loss scale: 65536.0 | grad norm: 3.806 | number of skipped iterations: 0 | number of nan iterations: 0 |

iteration 9/ 5000 | consumed samples: 288 | elapsed time per iteration (ms): 40145.0 | learning rate: 2.000E-07 | global batch size: 32 | lm loss: 1.396583E+00 | loss scale: 65536.0 | grad norm: 4.110 | number of skipped iterations: 0 | number of nan iterations: 0 |

iteration 10/ 5000 | consumed samples: 320 | elapsed time per iteration (ms): 39902.6 | learning rate: 2.250E-07 | global batch size: 32 | lm loss: 1.378992E+00 | loss scale: 65536.0 | grad norm: 3.984 | number of skipped iterations: 0 | number of nan iterations: 0 |

iteration 11/ 5000 | consumed samples: 352 | elapsed time per iteration (ms): 39896.7 | learning rate: 2.500E-07 | global batch size: 32 | lm loss: 1.361869E+00 | loss scale: 65536.0 | grad norm: 4.185 | number of skipped iterations: 0 | number of nan iterations: 0 |

iteration 12/ 5000 | consumed samples: 384 | elapsed time per iteration (ms): 39892.9 | learning rate: 2.750E-07 | global batch size: 32 | lm loss: 1.380939E+00 | loss scale: 65536.0 | grad norm: 3.436 | number of skipped iterations: 0 | number of nan iterations: 0 |

iteration 13/ 5000 | consumed samples: 416 | elapsed time per iteration (ms): 39925.1 | learning rate: 3.000E-07 | global batch size: 32 | lm loss: 1.426522E+00 | loss scale: 65536.0 | grad norm: 4.136 | number of skipped iterations: 0 | number of nan iterations: 0 |

iteration 14/ 5000 | consumed samples: 448 | elapsed time per iteration (ms): 39911.0 | learning rate: 3.250E-07 | global batch size: 32 | lm loss: 1.367694E+00 | loss scale: 65536.0 | grad norm: 3.859 | number of skipped iterations: 0 | number of nan iterations: 0 |

iteration 15/ 5000 | consumed samples: 480 | elapsed time per iteration (ms): 39910.0 | learning rate: 3.500E-07 | global batch size: 32 | lm loss: 1.414699E+00 | loss scale: 65536.0 | grad norm: 4.009 | number of skipped iterations: 0 | number of nan iterations: 0 |

(min, max) time across ranks (ms):
    evaluate .......................................: (161025.27, 161025.82)

----------------------------------------------------------------------------------------------

validation loss at iteration 15 | lm loss value: 1.410507E+00 | lm loss PPL: 4.098031E+00 |

----------------------------------------------------------------------------------------------

saving checkpoint at iteration 15 to ./ckpt/llama-2-7b-hf/

successfully saved checkpoint at iteration 15 to ./ckpt/llama-2-7b-hf/

(min, max) time across ranks (ms):
    save-checkpoint ................................: (87862.41, 87862.74)

[exiting program at iteration 15] datetime: 2024-05-31 06:26:05
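To plot the loss curve or compare runs, the per-iteration log lines are easy to turn into dictionaries. The sketch below assumes one `iteration N/ M | key: value | ... |` record per line, as in the log above (the function name is illustrative). It also checks that the reported `lm loss PPL` is simply `exp(lm loss)` for the validation result.

```python
import math


def parse_iteration(line):
    """Turn one Megatron 'iteration N/ M | key: value | ... |' log line into a dict."""
    head, *fields = [part.strip() for part in line.split("|") if part.strip()]
    record = {"iteration": int(head.split()[1].rstrip("/"))}
    for field in fields:
        key, _, value = field.rpartition(":")
        record[key.strip()] = float(value)
    return record


line = ("iteration 3/ 5000 | consumed samples: 96 | elapsed time per iteration (ms): 39887.2 | "
        "learning rate: 5.000E-08 | global batch size: 32 | lm loss: 1.355680E+00 | "
        "loss scale: 65536.0 | grad norm: 3.954 | number of skipped iterations: 0 | "
        "number of nan iterations: 0 |")
rec = parse_iteration(line)
print(rec["iteration"], rec["lm loss"], rec["elapsed time per iteration (ms)"])

# Validation perplexity is exp(validation loss): exp(1.410507) ~= 4.0980
print(f"{math.exp(1.410507):.4f}")
```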

11.1 Convert Alpaca to the LLM instruction fine-tuning dataset format

cd /home/ModelLink/ModelLink

rm -rf ../finetune_dataset

mkdir -p ../finetune_dataset/llama-2-7b-hf/

python ./tools/preprocess_data.py \
    --input ../dataset_llama2/train-00000-of-00001-a09b74b3ef9c3b56.parquet \
    --tokenizer-name-or-path ../llama-2-7b-hf/ \
    --output-prefix ../finetune_dataset/llama-2-7b-hf/alpaca \
    --workers 4 \
    --log-interval 1000 \
    --tokenizer-type PretrainedFromHF \
    --handler-name GeneralInstructionHandler \
    --append-eod
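Compared with 10.1, this command adds `--handler-name GeneralInstructionHandler` and `--append-eod`. A common way to prepare instruction data is to keep prompt and response in one sequence, close it with the EOS token, and mask the prompt positions out of the loss with the ignore index `-100` used by PyTorch's `CrossEntropyLoss`. The sketch illustrates that idea with toy token ids; it is not taken from ModelLink, so whether `GeneralInstructionHandler` masks exactly this way is an assumption.

```python
IGNORE_INDEX = -100  # default ignore_index of torch.nn.CrossEntropyLoss
EOS_ID = 2           # </s> in the LLaMA-2 tokenizer


def build_example(prompt_ids, response_ids, append_eod=True):
    """Concatenate prompt + response and hide the prompt from the loss via the labels."""
    response = list(response_ids) + ([EOS_ID] if append_eod else [])
    input_ids = list(prompt_ids) + response
    labels = [IGNORE_INDEX] * len(prompt_ids) + response
    return input_ids, labels


# Toy ids for illustration only (1 = <s>)
ids, labels = build_example([1, 100, 101, 102], [200, 201])
print(ids)     # [1, 100, 101, 102, 200, 201, 2]
print(labels)  # [-100, -100, -100, -100, 200, 201, 2]
```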

11.2 Run full-parameter fine-tuning

**Load the weights produced by the pretraining step above (./ckpt/llama-2-7b-hf)**

cd /home/ModelLink/ModelLink

export CUDA_DEVICE_MAX_CONNECTIONS=1

GPUS_PER_NODE=8

MASTER_ADDR=localhost

MASTER_PORT=6000

NNODES=1

NODE_RANK=0

WORLD_SIZE=$(($GPUS_PER_NODE*$NNODES))

CKPT_PATH="./ckpt/llama-2-7b-hf/"
CKPT_SAVE_DIR="./ckpt/llama-2-7b-hf-finetune/"
TOKENIZER_MODEL="../llama-2-7b-hf/tokenizer.model"  # tokenizer model path
DATA_PATH="../finetune_dataset/llama-2-7b-hf/alpaca"
TOKENIZER_PATH="../llama-2-7b-hf/"
TP=8
PP=1

DISTRIBUTED_ARGS="
    --nproc_per_node $GPUS_PER_NODE \
    --nnodes $NNODES \
    --node_rank $NODE_RANK \
    --master_addr $MASTER_ADDR \
    --master_port $MASTER_PORT
"

GPT_ARGS="
    --tensor-model-parallel-size ${TP} \
    --pipeline-model-parallel-size ${PP} \
    --sequence-parallel \
    --num-layers 32 \
    --hidden-size 4096 \
    --ffn-hidden-size 11008 \
    --num-attention-heads 32 \
    --tokenizer-type Llama2Tokenizer \
    --tokenizer-model ${TOKENIZER_MODEL} \
    --seq-length 1024 \
    --max-position-embeddings 1024 \
    --micro-batch-size 1 \
    --global-batch-size 32 \
    --make-vocab-size-divisible-by 1 \
    --lr 1.25e-6 \
    --train-iters 5000 \
    --lr-decay-style cosine \
    --untie-embeddings-and-output-weights \
    --disable-bias-linear \
    --attention-dropout 0.0 \
    --init-method-std 0.01 \
    --hidden-dropout 0.0 \
    --position-embedding-type rope \
    --normalization RMSNorm \
    --swiglu \
    --no-masked-softmax-fusion \
    --attention-softmax-in-fp32 \
    --min-lr 1.25e-7 \
    --weight-decay 1e-1 \
    --lr-warmup-fraction 0.01 \
    --clip-grad 1.0 \
    --adam-beta1 0.9 \
    --initial-loss-scale 65536 \
    --adam-beta2 0.95 \
    --finetune \
    --is-instruction-dataset \
    --tokenizer-type PretrainedFromHF \
    --tokenizer-name-or-path ${TOKENIZER_PATH} \
    --tokenizer-not-use-fast \
    --no-gradient-accumulation-fusion \
    --no-load-optim \
    --no-load-rng \
    --optimizer sgd \
    --fp16
"

DATA_ARGS="
    --data-path $DATA_PATH \
    --split 949,50,1
"

OUTPUT_ARGS="
    --log-interval 1 \
    --save-interval 15 \
    --eval-interval 15 \
    --exit-interval 15 \
    --eval-iters 10
"

CUDA_VISIBLE_DEVICES=0,1,2,3,4,5,6,7 torchrun $DISTRIBUTED_ARGS pretrain_gpt.py \
    $GPT_ARGS \
    $DATA_ARGS \
    $OUTPUT_ARGS \
    --distributed-backend nccl \
    --load $CKPT_PATH \
    --save $CKPT_SAVE_DIR

Output:

training ...

(min, max) time across ranks (ms):
    model-and-optimizer-setup ......................: (85970.98, 86006.16)
    train/valid/test-data-iterators-setup ..........: (606.22, 698.84)

[before the start of training step] datetime: 2024-05-31 06:51:44

iteration 1/ 5000 | consumed samples: 32 | elapsed time per iteration (ms): 41487.9 | learning rate: 0.000E+00 | global batch size: 32 | loss scale: 65536.0 | number of skipped iterations: 1 | number of nan iterations: 0 |

iteration 2/ 5000 | consumed samples: 64 | elapsed time per iteration (ms): 38955.8 | learning rate: 0.000E+00 | global batch size: 32 | loss scale: 32768.0 | number of skipped iterations: 1 | number of nan iterations: 0 |

iteration 3/ 5000 | consumed samples: 96 | elapsed time per iteration (ms): 39195.7 | learning rate: 2.500E-08 | global batch size: 32 | lm loss: 1.242152E+00 | loss scale: 32768.0 | grad norm: 12.467 | number of skipped iterations: 0 | number of nan iterations: 0 |

[Rank 6] (after 3 iterations) memory (MB) | allocated: 13042.31005859375 | max allocated: 16302.3095703125 | reserved: 17674.0 | max reserved: 17674.0

[Rank 2] (after 3 iterations) memory (MB) | allocated: 13042.31005859375 | max allocated: 16302.3095703125 | reserved: 17674.0 | max reserved: 17674.0

[Rank 0] (after 3 iterations) memory (MB) | allocated: 13042.31005859375 | max allocated: 16302.3095703125 | reserved: 17678.0 | max reserved: 17678.0

[Rank 1] (after 3 iterations) memory (MB) | allocated: 13042.31005859375 | max allocated: 16302.3095703125 | reserved: 17666.0 | max reserved: 17666.0

[Rank 7] (after 3 iterations) memory (MB) | allocated: 13042.31005859375 | max allocated: 16302.3095703125 | reserved: 17666.0 | max reserved: 17666.0

[Rank 4] (after 3 iterations) memory (MB) | allocated: 13042.31005859375 | max allocated: 16302.3095703125 | reserved: 17682.0 | max reserved: 17682.0

[Rank 3] (after 3 iterations) memory (MB) | allocated: 13042.31005859375 | max allocated: 16302.3095703125 | reserved: 17674.0 | max reserved: 17674.0

[Rank 5] (after 3 iterations) memory (MB) | allocated: 13042.31005859375 | max allocated: 16302.3095703125 | reserved: 17666.0 | max reserved: 17666.0

iteration 4/ 5000 | consumed samples: 128 | elapsed time per iteration (ms): 39234.5 | learning rate: 2.500E-08 | global batch size: 32 | loss scale: 16384.0 | number of skipped iterations: 1 | number of nan iterations: 0 |

iteration 5/ 5000 | consumed samples: 160 | elapsed time per iteration (ms): 38909.1 | learning rate: 5.000E-08 | global batch size: 32 | lm loss: 1.327399E+00 | loss scale: 16384.0 | grad norm: 16.184 | number of skipped iterations: 0 | number of nan iterations: 0 |

iteration 6/ 5000 | consumed samples: 192 | elapsed time per iteration (ms): 38792.2 | learning rate: 7.500E-08 | global batch size: 32 | lm loss: 1.326726E+00 | loss scale: 16384.0 | grad norm: 12.158 | number of skipped iterations: 0 | number of nan iterations: 0 |

iteration 7/ 5000 | consumed samples: 224 | elapsed time per iteration (ms): 39337.1 | learning rate: 1.000E-07 | global batch size: 32 | lm loss: 1.260413E+00 | loss scale: 16384.0 | grad norm: 15.909 | number of skipped iterations: 0 | number of nan iterations: 0 |

iteration 8/ 5000 | consumed samples: 256 | elapsed time per iteration (ms): 38932.4 | learning rate: 1.250E-07 | global batch size: 32 | lm loss: 1.284461E+00 | loss scale: 16384.0 | grad norm: 18.599 | number of skipped iterations: 0 | number of nan iterations: 0 |

iteration 9/ 5000 | consumed samples: 288 | elapsed time per iteration (ms): 38752.6 | learning rate: 1.500E-07 | global batch size: 32 | lm loss: 1.455263E+00 | loss scale: 16384.0 | grad norm: 13.974 | number of skipped iterations: 0 | number of nan iterations: 0 |

iteration 10/ 5000 | consumed samples: 320 | elapsed time per iteration (ms): 39324.6 | learning rate: 1.750E-07 | global batch size: 32 | lm loss: 1.400642E+00 | loss scale: 16384.0 | grad norm: 14.888 | number of skipped iterations: 0 | number of nan iterations: 0 |

iteration 11/ 5000 | consumed samples: 352 | elapsed time per iteration (ms): 38945.9 | learning rate: 2.000E-07 | global batch size: 32 | lm loss: 1.290374E+00 | loss scale: 16384.0 | grad norm: 20.459 | number of skipped iterations: 0 | number of nan iterations: 0 |

iteration 12/ 5000 | consumed samples: 384 | elapsed time per iteration (ms): 38755.6 | learning rate: 2.250E-07 | global batch size: 32 | lm loss: 1.346803E+00 | loss scale: 16384.0 | grad norm: 14.086 | number of skipped iterations: 0 | number of nan iterations: 0 |

iteration 13/ 5000 | consumed samples: 416 | elapsed time per iteration (ms): 39292.3 | learning rate: 2.500E-07 | global batch size: 32 | lm loss: 1.247773E+00 | loss scale: 16384.0 | grad norm: 17.651 | number of skipped iterations: 0 | number of nan iterations: 0 |

iteration 14/ 5000 | consumed samples: 448 | elapsed time per iteration (ms): 38935.8 | learning rate: 2.750E-07 | global batch size: 32 | lm loss: 1.277381E+00 | loss scale: 16384.0 | grad norm: 21.269 | number of skipped iterations: 0 | number of nan iterations: 0 |

iteration 15/ 5000 | consumed samples: 480 | elapsed time per iteration (ms): 38725.8 | learning rate: 3.000E-07 | global batch size: 32 | lm loss: 1.202904E+00 | loss scale: 16384.0 | grad norm: 16.246 | number of skipped iterations: 0 | number of nan iterations: 0 |

(min, max) time across ranks (ms):
    evaluate .......................................: (161834.87, 161840.18)

----------------------------------------------------------------------------------------------

validation loss at iteration 15 | lm loss value: 1.186715E+00 | lm loss PPL: 3.276301E+00 |

----------------------------------------------------------------------------------------------

saving checkpoint at iteration 15 to ./ckpt/llama-2-7b-hf-finetune/

successfully saved checkpoint at iteration 15 to ./ckpt/llama-2-7b-hf-finetune/

(min, max) time across ranks (ms):
    save-checkpoint ................................: (92042.34, 92042.48)

[exiting program at iteration 15] datetime: 2024-05-31 07:05:45
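The log reports wall time per iteration and a validation perplexity. A minimal sketch in plain Python, parsing two lines copied verbatim from the log above, showing how these numbers relate: throughput is global batch size divided by seconds per iteration, and the logged "lm loss PPL" is simply exp of the validation loss. The helper `parse_fields` is hypothetical and not part of ModelLink.

```python
import math

# Two lines copied verbatim from the finetuning log above.
ITER_LINE = ("iteration 15/ 5000 | consumed samples: 480 | elapsed time per iteration (ms): 38725.8 | "
             "learning rate: 3.000E-07 | global batch size: 32 | lm loss: 1.202904E+00 | loss scale: 16384.0 | "
             "grad norm: 16.246 | number of skipped iterations: 0 | number of nan iterations: 0 |")
VAL_LINE = "validation loss at iteration 15 | lm loss value: 1.186715E+00 | lm loss PPL: 3.276301E+00 |"


def parse_fields(line: str) -> dict:
    """Hypothetical helper: turn a 'key: value | key: value |' log line into {key: float}."""
    fields = {}
    for part in line.split("|"):
        if ":" not in part:
            continue  # e.g. "iteration 15/ 5000" carries no key/value pair
        key, _, value = part.partition(":")
        try:
            fields[key.strip()] = float(value)
        except ValueError:
            pass  # ignore non-numeric values
    return fields


it = parse_fields(ITER_LINE)
val = parse_fields(VAL_LINE)

# Samples per second = global batch size / seconds per iteration.
samples_per_sec = it["global batch size"] / (it["elapsed time per iteration (ms)"] / 1000.0)

# Perplexity = exp(mean cross-entropy loss); this reproduces the logged PPL up to rounding.
ppl = math.exp(val["lm loss value"])

print(f"samples/s: {samples_per_sec:.3f}")
print(f"exp({val['lm loss value']}) = {ppl:.6f}, logged PPL = {val['lm loss PPL']}")
```

At roughly 39 s per iteration with a global batch of 32, the eight RTX 3090s process a little under one sample per second in this finetuning setup.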

11.3 Inference test of the instruction-finetuned model with ModelLink

cd /home/ModelLink/ModelLink

export CUDA_DEVICE_MAX_CONNECTIONS=1

CHECKPOINT="./ckpt/llama-2-7b-hf-finetune"

TOKENIZER_MODEL="../llama-2-7b-hf/tokenizer.model" # tokenizer (vocabulary) file

DATA_PATH="../finetune_dataset/llama-2-7b-hf/alpaca"

TOKENIZER_PATH="../llama-2-7b-hf/"

MASTER_ADDR=localhost

MASTER_PORT=6001

NNODES=1

NODE_RANK=0

NPUS_PER_NODE=8 # number of GPUs per node (variable name kept from the NPU script)

WORLD_SIZE=$(($NPUS_PER_NODE*$NNODES))

DISTRIBUTED_ARGS="--nproc_per_node $NPUS_PER_NODE --nnodes $NNODES --node_rank $NODE_RANK --master_addr $MASTER_ADDR --master_port $MASTER_PORT"

python -m torch.distributed.launch $DISTRIBUTED_ARGS inference.py \
    --tensor-model-parallel-size 8 \
    --pipeline-model-parallel-size 1 \
    --num-layers 32 \
    --hidden-size 4096 \
    --ffn-hidden-size 11008 \
    --position-embedding-type rope \
    --seq-length 1024 \
    --max-new-tokens 256 \
    --micro-batch-size 1 \
    --global-batch-size 32 \
    --num-attention-heads 32 \
    --max-position-embeddings 1024 \
    --swiglu \
    --load "${CHECKPOINT}" \
    --tokenizer-type PretrainedFromHF \
    --tokenizer-name-or-path "${TOKENIZER_PATH}" \
    --tokenizer-model "${TOKENIZER_MODEL}" \
    --tokenizer-not-use-fast \
    --fp16 \
    --normalization RMSNorm \
    --untie-embeddings-and-output-weights \
    --disable-bias-linear \
    --attention-softmax-in-fp32 \
    --no-load-optim \
    --no-load-rng \
    --no-masked-softmax-fusion \
    --no-gradient-accumulation-fusion \
    --exit-on-missing-checkpoint \
    --make-vocab-size-divisible-by 1
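With `--tensor-model-parallel-size 8` every layer is split across all eight GPUs, so a few sizes have to divide evenly. A minimal sketch of that bookkeeping, assuming Megatron's usual constraints (attention heads and FFN width divisible by the TP size) and its vocabulary padding rule as I understand it (round up to a multiple of `make-vocab-size-divisible-by` × TP size). The vocabulary size 32000 is the LLaMA-2 value reported later in the TensorRT-LLM benchmark log; the function names are hypothetical.

```python
def padded_vocab_size(vocab: int, divisible_by: int, tp: int) -> int:
    """Round vocab up to a multiple of divisible_by * tp (assumed Megatron-style padding)."""
    multiple = divisible_by * tp
    return ((vocab + multiple - 1) // multiple) * multiple


def tp_layout(world_size: int, tp: int, pp: int, num_heads: int, ffn_hidden: int, hidden: int) -> dict:
    """Check divisibility constraints and report what each tensor-parallel rank holds."""
    if world_size % (tp * pp) != 0:
        raise ValueError(f"world size {world_size} is not divisible by tp*pp={tp * pp}")
    for name, value in (("num-attention-heads", num_heads), ("ffn-hidden-size", ffn_hidden)):
        if value % tp != 0:
            raise ValueError(f"{name}={value} is not divisible by tp={tp}")
    return {
        "data_parallel_size": world_size // (tp * pp),  # leftover GPUs become data-parallel replicas
        "heads_per_rank": num_heads // tp,              # attention heads computed on each GPU
        "ffn_per_rank": ffn_hidden // tp,               # slice of the SwiGLU intermediate width
        "head_dim": hidden // num_heads,
    }


# Values taken from the inference command above.
layout = tp_layout(world_size=8, tp=8, pp=1, num_heads=32, ffn_hidden=11008, hidden=4096)
print(layout)
print("padded vocab:", padded_vocab_size(32000, divisible_by=1, tp=8))
```

With `--make-vocab-size-divisible-by 1` the 32000-token vocabulary stays unpadded (32000 is already a multiple of 8), which is presumably why the scripts set it: the embedding shapes then match the HuggingFace checkpoint exactly. A larger divisor such as 128 would pad it to 32768 under TP=8.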

Output:

+---------------------------------------------------------------------------------------+

| NVIDIA-SMI 530.30.02 Driver Version: 530.30.02 CUDA Version: 12.1 |

|-----------------------------------------+----------------------+----------------------+

| GPU Name Persistence-M| Bus-Id Disp.A | Volatile Uncorr. ECC |

| Fan Temp Perf Pwr:Usage/Cap| Memory-Usage | GPU-Util Compute M. |

| | | MIG M. |

|=========================================+======================+======================|

| 0 NVIDIA GeForce RTX 3090 On | 00000000:01:00.0 Off | N/A |

| 32% 56C P2 182W / 350W| 4302MiB / 24576MiB | 99% Default |

| | | N/A |

+-----------------------------------------+----------------------+----------------------+

| 1 NVIDIA GeForce RTX 3090 On | 00000000:25:00.0 Off | N/A |

| 32% 56C P2 190W / 350W| 4292MiB / 24576MiB | 99% Default |

| | | N/A |

+-----------------------------------------+----------------------+----------------------+

| 2 NVIDIA GeForce RTX 3090 On | 00000000:41:00.0 Off | N/A |

| 52% 58C P2 191W / 350W| 4302MiB / 24576MiB | 100% Default |

| | | N/A |

+-----------------------------------------+----------------------+----------------------+

| 3 NVIDIA GeForce RTX 3090 On | 00000000:61:00.0 Off | N/A |

| 45% 53C P2 181W / 350W| 4302MiB / 24576MiB | 99% Default |

| | | N/A |

+-----------------------------------------+----------------------+----------------------+

| 4 NVIDIA GeForce RTX 3090 On | 00000000:81:00.0 Off | N/A |

| 45% 57C P2 175W / 350W| 4292MiB / 24576MiB | 100% Default |

| | | N/A |

+-----------------------------------------+----------------------+----------------------+

| 5 NVIDIA GeForce RTX 3090 On | 00000000:A1:00.0 Off | N/A |

| 32% 55C P2 176W / 350W| 4302MiB / 24576MiB | 100% Default |

| | | N/A |

+-----------------------------------------+----------------------+----------------------+

| 6 NVIDIA GeForce RTX 3090 On | 00000000:C1:00.0 Off | N/A |

| 45% 54C P2 185W / 350W| 4292MiB / 24576MiB | 99% Default |

| | | N/A |

+-----------------------------------------+----------------------+----------------------+

| 7 NVIDIA GeForce RTX 3090 On | 00000000:E1:00.0 Off | N/A |

| 43% 52C P2 179W / 350W| 4302MiB / 24576MiB | 99% Default |

| | | N/A |

+-----------------------------------------+----------------------+----------------------+

INFO:root:

=============== Greedy Search ================

INFO:root:

You:

how are you?

ModelLink:

I hope you are doing well. I am doing well. I am in the middle of a very busy week. I have a lot of things to do. I have to go to the store, I have to go to the bank, I have to go to the doctor, I have to go to the dentist, I have to go to the post office, I have to go to the library, I have to go to the gym, I have to go to the park, I have to go to the movies, I have to go to the mall, I have to go to the grocery store, I have to go to the gas station, I have to go to the car wash, I have to go to the car dealership, I have to go to the car repair shop, I have to go to the car rental place, I have to go to the car wash, I have to go to the car dealership, I have to go to the car repair shop, I have to go to the car rental place, I have to go to the car wash, I have to go to the car dealership, I have to go to the car repair shop, I have to go to the car

INFO:root:==============================================

INFO:root:

Elapsed: 36.62s

INFO:root:

================ Do Sample =================

INFO:root:

You:

how are you?

ModelLink:

['I’m a little in my thirties and I work as a teacher everybodynik. for an ag company in C.... in the... UK. ItN IC and I havem a niceN.MS-mN.N,E,NMSN-B MSNM,NMSNMSMSMS MSMS-MS MS MS.MSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMS', 'How ares the weather nobody thanksgiving went? Are was it good for?om was,..…\n\n thought I would write the best thing about this game,. The best thing about this game is when it comes hard, the game can be easy. with the right equipmenties.\nit iss a tip shot of the game: You’\ncan change the wall to your lik..\nHere. P .\n:..IC: http.\nI.ICECHow\n:\nMS:LMS.\nMS:MSMS.\nMS\n\nMS thinkR\nMMSMS.\n.\nMS:MS...\nS’:MMS.MS\nMSMSMSMS\nMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMSMS']

INFO:root:============================================

INFO:root:

Elapsed: 77.06s

INFO:root:

=============== Beam Search =================

INFO:root:

You:

how are you?

ModelLink:

I hope you are doing well. I am doing well.

I have been thinking about you a lot lately. I miss you and I want to see you again. I want to hold you in my arms and kiss you. I want to be with you. I want to be with you. I want to be with you. I want to be with you. I want to be with you. I want to be with you. I want to be with you. I want to be with you. I want to be with you. I want to be with you. I want to be with you. I want to be with you. I want to be with you. I want to be with you. I want to be with you. I want to be with you. I want to be with you. I want to be with you. I want to be with you. I want to be with you. I want to be with you. I want to be with you. I want to be with you. I want to be with you. I want to be with you. I want to be with you. I want to be with you. I want to be with you. I want to be with you. I want to be with you

INFO:root:=============================================

INFO:root:

Elapsed: 76.65s

INFO:root:

======== Beam Search with sampling ==========

INFO:root:

You:

how are you?

ModelLink:

I'm sierp nobody: I'm a girl!

Ich bin 18 Jahre alt. Hinweis: I'm 18 years old.

Ich bin 18 Jahre alt. Hinweis: I'm 18 years old. Ich bin 18 Jahre alt. Hinweis: I'm 18 years old. Ich bin 18 Jahre alt. Hinweis: I'm 18 years old. Ich bin 18 Jahre alt. Hinweis: I'm 18 years old. Ich bin 18 Jahre alt. Hinweis: I'm 18 years old. Ich bin 18 Jahre alt. Hinweis: I'm 18 years old. Ich bin 18 Jahre alt. Hinweis: I'm 18 years old. Ich bin 18 Jahre alt. Hinweis: I'm 18 years old. Ich bin 18 Jahre alt. Hinweis: I'm 18 years old. Ich bin 18 Jahre alt. Hinweis: I'm 18 years old. Ich bin 18 Jahre alt. Hinweis: I'm 18 years old. Ich bin 18 Jahre alt. Hinweis:

INFO:root:=============================================

INFO:root:

Elapsed: 76.63s

INFO:root:

===========================================

INFO:root:Probability Distribution:

tensor([[4.0281e-11, 6.5011e-10, 3.4718e-04, ..., 9.7066e-07, 1.2008e-07,2.8805e-07],[5.8844e-06, 7.9362e-04, 6.7673e-05, ..., 1.1114e-04, 2.2738e-04,1.4215e-04],[4.0714e-09, 7.5630e-08, 1.4504e-02, ..., 9.7873e-08, 1.1134e-07,1.9238e-07],...,[1.0839e-11, 4.2041e-11, 1.5068e-06, ..., 1.3649e-11, 1.9026e-11,2.0660e-11],[4.5711e-12, 7.6339e-11, 2.2782e-06, ..., 1.3781e-11, 7.7152e-12,1.0912e-11],[8.3764e-11, 3.1335e-10, 6.8695e-05, ..., 1.7646e-10, 1.7127e-09,2.6986e-10]], device='cuda:0')

INFO:root:Beam Search Score:

tensor([0.9320, 0.7111], device='cuda:0')

INFO:root:===========================================

INFO:root:

Elapsed: 111.55s

INFO:root:===========================================================

INFO:root:1. If you want to quit, please entry one of [q, quit, exit]

INFO:root:2. To create new title, please entry one of [clear, new]

INFO:root:===========================================================
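The same prompt is run through four decoding modes. Greedy search and beam search are deterministic and pick high-probability continuations, which is why they fall into loops ("I want to be with you..."), while sampling draws tokens at random and produces varied (here mostly garbled) text. A toy sketch of greedy decoding versus top-k sampling with temperature over a single hand-made logits vector; it is not ModelLink's `inference.py` implementation, and the `k` and `temperature` values are illustrative assumptions. Beam search is not shown.

```python
import math
import random


def softmax(logits, temperature=1.0):
    """Numerically stable softmax with temperature scaling."""
    scaled = [l / temperature for l in logits]
    m = max(scaled)
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def greedy(logits):
    """Greedy search: always pick the highest-scoring token id."""
    return max(range(len(logits)), key=lambda i: logits[i])


def sample_top_k(logits, k=3, temperature=0.7, rng=random):
    """Sampling: keep the k best token ids, renormalise their probabilities, draw one."""
    top = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    probs = softmax([logits[i] for i in top], temperature)
    return rng.choices(top, weights=probs, k=1)[0]


logits = [2.0, 1.5, 0.3, -1.0, 0.9]  # toy scores for a 5-token vocabulary
rng = random.Random(0)
print("greedy:", greedy(logits))  # same answer every time
print("top-k samples:", [sample_top_k(logits, rng=rng) for _ in range(10)])  # varies with the seed
```

Repeating the greedy step token by token always follows the single best path, so once the model predicts a phrase it has just emitted, nothing pushes it out of the loop; sampling breaks the loop but, with a loose distribution, also admits low-quality tokens.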

11.4.1 Prepare the MMLU accuracy test dataset

cd /home/ModelLink/ModelLink

mkdir -p ../mmlu/

wget https://people.eecs.berkeley.edu/~hendrycks/data.tar -O ../mmlu/data.tar

tar -xf ../mmlu/data.tar -C ../mmlu
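After extraction the test split should sit under `../mmlu/data/test/`, one CSV per subject. A minimal sketch for inspecting one file and turning a row into an MMLU-style prompt, assuming the layout of the original Hendrycks release (no header; columns question, A, B, C, D, answer letter) and the classic "The following are multiple choice questions (with answers) about ..." template. ModelLink's `evaluation.py` may build its prompt differently, for example with a different number of few-shot examples; all function names here are hypothetical.

```python
import csv
from pathlib import Path

CHOICES = ["A", "B", "C", "D"]


def load_mmlu_csv(path):
    """Each row: question, option A, option B, option C, option D, answer letter (no header)."""
    with open(path, newline="", encoding="utf-8") as f:
        return [row for row in csv.reader(f) if row]


def format_question(row, include_answer=False):
    question, *options, answer = row
    lines = [question]
    lines += [f"{letter}. {text}" for letter, text in zip(CHOICES, options)]
    lines.append("Answer:" + (f" {answer}\n\n" if include_answer else ""))
    return "\n".join(lines)


def build_prompt(subject, dev_rows, test_row):
    """Optional few-shot examples (with answers) followed by the question to be answered."""
    header = f"The following are multiple choice questions (with answers) about {subject.replace('_', ' ')}.\n\n"
    shots = "".join(format_question(r, include_answer=True) for r in dev_rows)
    return header + shots + format_question(test_row)


if __name__ == "__main__":
    test_csv = Path("../mmlu/data/test/virology_test.csv")  # assumed file name inside DATA_PATH
    if test_csv.exists():
        rows = load_mmlu_csv(test_csv)
        print(len(rows), "questions")
        print(build_prompt("virology", [], rows[0]))
```

The results table in the next step lists 166 virology questions, so that is the count this check should report if the assumed layout holds.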

11.4.2 MMLU accuracy evaluation of the instruction-finetuned model with ModelLink

cd /home/ModelLink/ModelLink

export CUDA_DEVICE_MAX_CONNECTIONS=1

TOKENIZER_PATH="../llama-2-7b-hf/"

CHECKPOINT="./ckpt/llama-2-7b-hf-finetune"

DATA_PATH="../mmlu/data/test/"

TASK="mmlu"

MASTER_ADDR=localhost

MASTER_PORT=6001

NNODES=1

NODE_RANK=0

NPUS_PER_NODE=8 # number of GPUs per node

DISTRIBUTED_ARGS="--nproc_per_node $NPUS_PER_NODE --nnodes $NNODES --node_rank $NODE_RANK --master_addr $MASTER_ADDR --master_port $MASTER_PORT"

python -m torch.distributed.launch $DISTRIBUTED_ARGS evaluation.py \
    --task-data-path $DATA_PATH \
    --task $TASK \
    --seq-length 1024 \
    --max-new-tokens 1 \
    --evaluation-batch-size 1 \
    --max-position-embeddings 1024 \
    --tensor-model-parallel-size 8 \
    --pipeline-model-parallel-size 1 \
    --num-layers 32 \
    --hidden-size 4096 \
    --ffn-hidden-size 11008 \
    --num-attention-heads 32 \
    --swiglu \
    --disable-bias-linear \
    --load ${CHECKPOINT} \
    --normalization RMSNorm \
    --tokenizer-type PretrainedFromHF \
    --tokenizer-name-or-path ${TOKENIZER_PATH} \
    --tokenizer-not-use-fast \
    --fp16 \
    --micro-batch-size 1 \
    --position-embedding-type rope \
    --exit-on-missing-checkpoint \
    --no-load-rng \
    --no-load-optim \
    --untie-embeddings-and-output-weights \
    --no-masked-softmax-fusion \
    --make-vocab-size-divisible-by 1 \
    --seed 42
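`--max-new-tokens 1` means the model emits a single token per question, which is enough when the prompt ends with "Answer:" and the expected continuation is one option letter. A minimal sketch of that scoring idea, assuming an exact match between the first non-whitespace character and the gold letter; the matching rules in ModelLink's `evaluation.py` may differ, and the helper names are hypothetical.

```python
import re

# First non-whitespace character must be one of the four option letters.
LETTER = re.compile(r"\s*([ABCD])")


def extract_choice(generated: str):
    """Return the option letter the model produced, or None if it answered something else."""
    match = LETTER.match(generated)
    return match.group(1) if match else None


def accuracy(predictions, answers):
    """Count exact letter matches; returns (correct, total, accuracy)."""
    correct = sum(extract_choice(p) == a for p, a in zip(predictions, answers))
    return correct, len(answers), correct / len(answers)


# One generated token per question, e.g. " A" or " C".
print(accuracy([" A", " C", "D"], ["A", "B", "D"]))
```

Generating one token keeps the run cheap: even so, all 14042 questions took about 87 minutes on the eight GPUs, per the log below.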

Output:

INFO:modellink.tasks.evaluation.eval_impl.mmlu_eval:mmlu acc = 6430/14042=0.4579119783506623

INFO:__main__:subject question_n acc

0 virology 166 0.421687

1 college_chemistry 100 0.310000

2 computer_security 100 0.600000

3 elementary_mathematics 378 0.269841

4 high_school_geography 198 0.479798

5 management 103 0.514563

6 high_school_biology 310 0.512903

7 human_aging 223 0.560538

8 high_school_statistics 216 0.259259

9 professional_law 1534 0.370274

10 high_school_mathematics 270 0.285185

11 conceptual_physics 235 0.438298

12 jurisprudence 108 0.537037

13 medical_genetics 100 0.520000

14 college_medicine 173 0.416185

15 clinical_knowledge 265 0.452830

16 college_computer_science 100 0.360000

17 high_school_microeconomics 238 0.424370

18 high_school_chemistry 203 0.359606

19 professional_psychology 612 0.449346

20 astronomy 152 0.434211

21 high_school_computer_science 100 0.390000

22 high_school_world_history 237 0.654008

23 abstract_algebra 100 0.300000

24 formal_logic 126 0.293651

25 public_relations 110 0.536364

26 professional_medicine 272 0.522059

27 philosophy 311 0.591640

28 high_school_psychology 545 0.603670

29 anatomy 135 0.481481

30 college_biology 144 0.451389

31 college_mathematics 100 0.310000

32 human_sexuality 131 0.541985

33 econometrics 114 0.289474

34 us_foreign_policy 100 0.660000

35 high_school_us_history 204 0.519608

36 moral_scenarios 895 0.253631

37 sociology 201 0.621891

38 moral_disputes 346 0.523121

39 logical_fallacies 163 0.490798

40 high_school_european_history 165 0.600000

41 business_ethics 100 0.500000

42 high_school_macroeconomics 390 0.448718

43 miscellaneous 783 0.630907

44 high_school_physics 151 0.337748

45 professional_accounting 282 0.358156

46 nutrition 306 0.496732

47 machine_learning 112 0.375000

48 global_facts 100 0.330000

49 prehistory 324 0.481481

50 security_studies 245 0.538776

51 electrical_engineering 145 0.496552

52 world_religions 171 0.684211

53 marketing 234 0.670940

54 college_physics 102 0.215686

55 high_school_government_and_politics 193 0.652850

56 international_law 121 0.611570

57 total 14042 0.457912

total: 100%|███████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 57/57 [1:27:12<00:00, 91.80s/it]

INFO:__main__:MMLU Running Time:, 5232.327924013138
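The headline "mmlu acc = 6430/14042" is question-weighted (micro-averaged): subjects range from 100 to 1534 questions, so an unweighted mean over the 57 subject accuracies (macro-average) would give a different number. A small sketch using four rows copied from the table above; since each accuracy is printed to six decimals, `n * acc` rounds back to the exact number of correct answers. Running it over all 57 rows recovers 6430 correct out of 14042.

```python
# (subject, question_n, acc) rows copied from the results table above (a subset).
ROWS = [
    ("professional_law", 1534, 0.370274),
    ("moral_scenarios", 895, 0.253631),
    ("miscellaneous", 783, 0.630907),
    ("virology", 166, 0.421687),
]


def summarize(rows):
    """Return (correct, total, micro accuracy, macro accuracy)."""
    correct = sum(round(n * acc) for _, n, acc in rows)  # exact correct counts per subject
    total = sum(n for _, n, _ in rows)
    micro = correct / total                            # every question weighs the same
    macro = sum(acc for *_, acc in rows) / len(rows)   # every subject weighs the same
    return correct, total, micro, macro


correct, total, micro, macro = summarize(ROWS)
print(f"micro: {correct}/{total} = {micro:.4f}, macro: {macro:.4f}")
```

Here the large, low-scoring professional_law and moral_scenarios subjects pull the micro average below the macro average; keep this in mind when comparing against published MMLU numbers, which may use either convention.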

11.5 Convert the model from Megatron format back to HuggingFace format

`--save-dir` must point to the original HF model directory; the converted weights are written to its subdirectory `mg2hg`.

cd /home/ModelLink/ModelLink

python tools/checkpoint/convert_ckpt.py \
    --model-type GPT \
    --loader megatron \
    --saver megatron \
    --save-model-type save_huggingface_llama \
    --load-dir ./ckpt/llama-2-7b-hf-finetune/ \
    --target-tensor-parallel-size 1 \
    --target-pipeline-parallel-size 1 \
    --save-dir ../llama-2-7b-hf/

# Move the result into its own directory (don't forget to copy the tokenizer files from the original model)

cd /home/ModelLink

mv ./llama-2-7b-hf/mg2hg ./llama-2-7b-hf-mg2hg

cp -vf llama-2-7b-hf/tokenizer* llama-2-7b-hf-mg2hg/
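The finetuned checkpoint is loaded with `--tensor-model-parallel-size 8` in the steps above, so it presumably holds eight shards per layer, and `--target-tensor-parallel-size 1` asks the converter to merge them. A toy pure-Python sketch of the general Megatron tensor-parallel layout, not `convert_ckpt.py`'s code: column-parallel weights (such as the MLP gate/up projections) are split along the output dimension, row-parallel weights (such as the attention output and MLP down projections) along the input dimension, so merging means concatenating along the matching axis. Real conversion has extra details, such as the layout of fused QKV weights, that this ignores.

```python
from itertools import chain
from typing import List

Matrix = List[List[float]]  # weight stored as [out_features][in_features], like torch.nn.Linear


def matvec(w: Matrix, x: List[float]) -> List[float]:
    """y = W x."""
    return [sum(wi * xi for wi, xi in zip(row, x)) for row in w]


def merge_column_parallel(shards: List[Matrix]) -> Matrix:
    """Column-parallel shards split the output dim: stack their rows."""
    return [row for shard in shards for row in shard]


def merge_row_parallel(shards: List[Matrix]) -> Matrix:
    """Row-parallel shards split the input dim: re-join each row across shards."""
    return [list(chain.from_iterable(rows)) for rows in zip(*shards)]


tp = 2
full = [[float(4 * r + c) for c in range(4)] for r in range(4)]  # toy 4x4 weight
x = [1.0, 2.0, 3.0, 4.0]
step = len(full) // tp

col_shards = [full[k * step:(k + 1) * step] for k in range(tp)]                   # rank k: output rows
row_shards = [[row[k * step:(k + 1) * step] for row in full] for k in range(tp)]  # rank k: input columns

assert merge_column_parallel(col_shards) == full
assert merge_row_parallel(row_shards) == full

# Forward pass: column-parallel outputs are concatenated, row-parallel partial
# outputs are summed (the all-reduce that Megatron performs across TP ranks).
col_out = [y for shard in col_shards for y in matvec(shard, x)]
partials = [matvec(shard, x[k * step:(k + 1) * step]) for k, shard in enumerate(row_shards)]
row_out = [sum(vals) for vals in zip(*partials)]
assert col_out == matvec(full, x) == row_out
print("merged shards reproduce the unsharded layer")
```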

12 Inference test of the instruction-finetuned model in HuggingFace format

cd /home/ModelLink/

python3 torch_infer.py ./llama-2-7b-hf-mg2hg

Output:

---------------------------------------- Response -------------------------------------

Give three tips for staying healthy during the holidays.

The holidays are a time of celebration and joy, but they can also be a time of stress and overindulgence. Here are three tips for staying healthy during the holidays:

1. Eat healthy foods.

2. Exercise regularly.

3. Get enough sleep.

What are some of the most common health problems during the holidays?

The holidays are a time of celebration and joy, but they can also be a time of stress and overindulgence

---------------------------------------------------------------------------------------

Time taken for first token: 0.0249 seconds

Total time taken: 2.9708 seconds

Number of tokens generated: 119

Tokens per second: 40.06

BEFORE MA: 12884.52 MMA: 12884.52 CA: 12886.00 MCA: 12886.00

AFTER MA: 12892.65 MMA: 13019.47 CA: 13036.00 MCA: 13036.00

DIFF MA: 8.12 MMA: 134.94 CA: 150.00 MCA: 150.00
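The memory line is consistent with the model size. A worked check in Python: counting LLaMA-2-7B parameters from the flags used above (32 layers, hidden 4096, FFN 11008, untied embeddings, no biases, RMSNorm) plus the 32000-token vocabulary gives 6,738,415,616 parameters, about 12.9k MiB in fp16. That is close to "BEFORE MA: 12884.52", assuming MA is allocated GPU memory in MiB (`torch_infer.py` is not shown in this section, so that unit is an assumption). The last line reproduces the reported decode rate.

```python
def llama_param_count(vocab: int, hidden: int, ffn: int, layers: int) -> int:
    """Parameters of a LLaMA-style decoder: untied embeddings, no biases, RMSNorm, full MHA."""
    embed = vocab * hidden       # input embedding table
    lm_head = vocab * hidden     # separate output layer (--untie-embeddings-and-output-weights)
    attn = 4 * hidden * hidden   # q, k, v, o projections
    mlp = 3 * hidden * ffn       # SwiGLU: gate, up, down
    norms = 2 * hidden           # two RMSNorm weight vectors per layer
    return embed + lm_head + layers * (attn + mlp + norms) + hidden  # + final RMSNorm


params = llama_param_count(vocab=32000, hidden=4096, ffn=11008, layers=32)
fp16_mib = params * 2 / 2**20  # 2 bytes per fp16 parameter
print(f"{params:,} parameters, about {fp16_mib:,.0f} MiB of fp16 weights")

# Numbers from the output above: 119 tokens generated in 2.9708 s.
print(f"decode rate: {119 / 2.9708:.2f} tokens/s")
```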

13 TensorRT-LLM inference test

cd /home/ModelLink

# Install tensorrt_llm

pip3 install tensorrt_llm -U --pre --extra-index-url https://pypi.nvidia.com

# Clone TensorRT-LLM (we need its llama example)

git clone https://github.com/NVIDIA/TensorRT-LLM.git

cd /home/ModelLink/TensorRT-LLM/examples/llama

# Convert the HuggingFace-format model to the TensorRT-LLM checkpoint format

python convert_checkpoint.py --model_dir /home/ModelLink/llama-2-7b-hf-mg2hg/ \
    --output_dir ./tllm_checkpoint_1gpu_fp16 \
    --dtype float16

# Build the engine

trtllm-build --checkpoint_dir ./tllm_checkpoint_1gpu_fp16 \
    --output_dir ./tllm_1gpu_fp16_engine \
    --gemm_plugin auto

# Run the inference demo

python ../run.py --max_output_len=256 \
    --tokenizer_dir /home/ModelLink/llama-2-7b-hf-mg2hg/ \
    --engine_dir=./tllm_1gpu_fp16_engine \
    --input_text "Give three tips for staying healthy"

# Inference benchmark

python ../../benchmarks/python/benchmark.py \
    -m llama_7b \
    --mode plugin \
    --batch_size "1" \
    --engine_dir ./tllm_1gpu_fp16_engine \
    --input_output_len "512,512"

Output

# Demo output

Input [Text 0]: "<s> Give three tips for staying healthy"

Output [Text 0 Beam 0]: "during the holidays.

The holidays are a time of celebration and joy, but they can also be a time of stress and overindulgence. Here are three tips for staying healthy during the holidays:

1. Eat healthy foods.

2. Exercise regularly.

3. Get enough sleep.

What are some tips for staying healthy during the holidays?

The holidays are a time of celebration and joy, but they can also be a time of stress and overindulgence. Here are some tips for staying healthy during the holidays:

1. Eat healthy foods. The holidays are a time to indulge, but it’s important to remember to eat healthy foods as well. Focus on eating fruits and vegetables, lean proteins, and whole grains.

2. Exercise regularly. Exercise is important year-round, but it’s especially important during the holidays. Exercise helps to reduce stress, improve mood, and boost energy levels.

3. Get enough sleep. Sleep is important for overall health and well-be"

# Benchmark output

[TensorRT-LLM] TensorRT-LLM version: 0.11.0.dev2024052800

[BENCHMARK] model_name llama_7b world_size 1 num_heads 32 num_kv_heads 32 num_layers 32 hidden_size 4096 vocab_size 32000 precision float16 batch_size 1 gpu_weights_percent 1.0 input_length 512 output_length 512

gpu_peak_mem(gb) 14.321 build_time(s) 0 tokens_per_sec 51.9 percentile95(ms) 9878.526 percentile99(ms) 9878.526 latency(ms) 9864.582 compute_cap sm86 quantization QuantMode.0 generation_time(ms) 9742.378 total_generated_tokens 511.0 generation_tokens_per_second 52.451

# nvidia-smi output

|=========================================+======================+======================|

| 0 NVIDIA GeForce RTX 3090 On | 00000000:01:00.0 Off | N/A |

| 89% 68C P2 341W / 350W| 14664MiB / 24576MiB | 99% Default |

| | | N/A |

+-----------------------------------------+----------------------+----------------------+
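The benchmark line is a flat list of key/value pairs. A small sketch that parses the line copied from the output above and recomputes the two throughput figures: the numbers are consistent with `tokens_per_sec` ≈ output length / total latency and `generation_tokens_per_second` ≈ total generated tokens / generation time. That reading is inferred from the values, not taken from the benchmark script's source, and `parse_pairs` is a hypothetical helper.

```python
# [BENCHMARK] result line copied from the output above.
LINE = ("gpu_peak_mem(gb) 14.321 build_time(s) 0 tokens_per_sec 51.9 percentile95(ms) 9878.526 "
        "percentile99(ms) 9878.526 latency(ms) 9864.582 compute_cap sm86 quantization QuantMode.0 "
        "generation_time(ms) 9742.378 total_generated_tokens 511.0 generation_tokens_per_second 52.451")


def parse_pairs(line: str) -> dict:
    """Pair up alternating whitespace-separated tokens: key value key value ..."""
    tokens = line.split()
    return dict(zip(tokens[0::2], tokens[1::2]))


stats = parse_pairs(LINE)
latency_s = float(stats["latency(ms)"]) / 1000
gen_s = float(stats["generation_time(ms)"]) / 1000

end_to_end = 512 / latency_s  # batch 1, output_length 512, over the whole request
decode_only = float(stats["total_generated_tokens"]) / gen_s

print(f"end-to-end: {end_to_end:.1f} tok/s (logged {stats['tokens_per_sec']})")
print(f"generation: {decode_only:.3f} tok/s (logged {stats['generation_tokens_per_second']})")
```

Compared with the roughly 40 tokens/s of the HuggingFace run in section 12 this is about 1.3x faster, but the prompt and output lengths differ between the two runs, so treat the ratio as indicative only.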

14 Troubleshooting: tensorrt cannot be found

wget https://developer.download.nvidia.cn/compute/machine-learning/tensorrt/10.0.1/tars/TensorRT-10.0.1.6.Linux.x86_64-gnu.cuda-12.4.tar.gz

tar -xf TensorRT-10.0.1.6.Linux.x86_64-gnu.cuda-12.4.tar.gz

cd TensorRT-10.0.1.6

\cp bin include lib targets /usr/local/cuda -ravf

cd python/

pip uninstall tensorrt -y

pip install tensorrt-10.0.1-cp310-none-linux_x86_64.whl tensorrt_dispatch-10.0.1-cp310-none-linux_x86_64.whl tensorrt_lean-10.0.1-cp310-none-linux_x86_64.whl
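After reinstalling, a quick check that both halves are visible from Python: the `tensorrt` package itself and the native `libnvinfer` library. A minimal sketch using only the standard library; note that `ctypes.util.find_library` consults the system loader configuration and may return None even when the library is loadable at runtime through `LD_LIBRARY_PATH`, so treat a None there as a hint rather than proof. The function name is hypothetical.

```python
import ctypes.util
import importlib.util


def tensorrt_status() -> dict:
    """Report whether the tensorrt Python package and the libnvinfer shared library can be found."""
    status = {
        "python_package": importlib.util.find_spec("tensorrt") is not None,
        "libnvinfer": ctypes.util.find_library("nvinfer"),  # None if the loader cannot locate it
        "version": None,
    }
    if status["python_package"]:
        try:
            import tensorrt
            status["version"] = tensorrt.__version__
        except (ImportError, OSError) as exc:  # package installed but native libraries missing
            status["version"] = f"import failed: {exc}"
    return status


if __name__ == "__main__":
    print(tensorrt_status())
```

With the wheels above installed, `version` should start with 10.0.1.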